Fairness (machine learning)

Verma, Sahil, and Julia Rubin. "Fairness definitions explained." In 2018 IEEE/ACM International Workshop on Software Fairness (FairWare), pp. 1-7. IEEE, 2018.

* True positive (TP): The case where both the predicted and the actual outcome are in the positive class.

* True negative (TN): The case where both the predicted outcome and the actual outcome are in the negative class.

* False positive (FP): A case predicted to be in the positive class whose actual outcome is in the negative class.

* False negative (FN): A case predicted to be in the negative class whose actual outcome is in the positive class.

These relations can be easily represented with a confusion matrix.

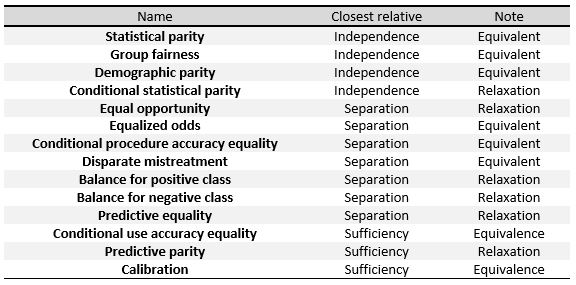

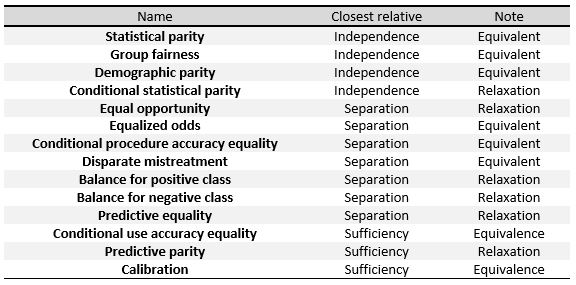

The following criteria can be understood as measures of the three general definitions given at the beginning of this section, namely Independence, Separation and Sufficiency. In the table to the right, we can see the relationships between them.

To define these measures specifically, we will divide them into three big groups as done in Verma et al.: definitions based on a predicted outcome, on predicted and actual outcomes, and definitions based on predicted probabilities and the actual outcome.

We will be working with a binary classifier and the following notation: $S$ refers to the score given by the classifier, which is the probability of a certain subject being in the positive or the negative class. $R$ represents the final classification predicted by the algorithm, and its value is usually derived from $S$; for example, $R$ will be positive when $S$ is above a certain threshold. $Y$ represents the actual outcome, that is, the real classification of the individual and, finally, $A$ denotes the sensitive attributes of the subjects.

"A survey on bias and fairness in machine learning." ACM Computing Surveys (CSUR) 54, no. 6 (2021): 1-35.

Roughly speaking, while group fairness criteria compare quantities at a group level, typically identified by sensitive attributes (e.g. gender, ethnicity, age, etc.), individual criteria compare individuals. In words, individual fairness follows the principle that "similar individuals should receive similar treatments".

There is a very intuitive approach to fairness, which usually goes under the name of Fairness Through Unawareness (FTU), or "Blindness", that prescribes not to explicitly employ sensitive features when making (automated) decisions. This is effectively a notion of individual fairness, since two individuals differing only in the values of their sensitive attributes would receive the same outcome. However, FTU is subject to several drawbacks, the main one being that it does not take into account possible correlations between sensitive attributes and non-sensitive attributes employed in the decision-making process. For example, an agent with the (malignant) intention to discriminate on the basis of gender could introduce in the model a proxy variable for gender (i.e. a variable highly correlated with gender) and effectively use gender information while at the same time complying with the FTU prescription. The problem of which variables correlated with sensitive ones are fairly employable by a model in the decision-making process is a crucial one, and is relevant for group concepts as well: independence metrics require a complete removal of sensitive information, while separation-based metrics allow for correlation, but only as far as the labeled target variable "justifies" it.

The most general concept of individual fairness was introduced in the pioneering work by Dwork and collaborators in 2012 and can be thought of as a mathematical translation of the principle that the decision map taking features as input should be built such that it is able to "map similar individuals similarly", which is expressed as a Lipschitz condition on the model map. They call this approach Fairness Through Awareness (FTA), precisely as a counterpoint to FTU, since they underline the importance of choosing the appropriate target-related distance metric in order to assess which individuals are similar in specific situations. Again, this problem is closely related to the point raised above about which variables can be seen as "legitimate" in particular contexts.
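As a rough illustration of the Lipschitz condition, the sketch below checks on a finite sample whether a scoring function "maps similar individuals similarly". The helper name `lipschitz_violations`, the Euclidean input distance, the absolute difference between scores and the constant `L` are all assumptions made for this example; the choice of a task-specific distance is exactly what Fairness Through Awareness leaves open.

```python
from itertools import combinations
import math

def euclidean(u, v):
    """Assumed stand-in for a task-specific distance between individuals."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def lipschitz_violations(model, individuals, L=1.0, dist=euclidean):
    """Return index pairs (i, j) with |model(x_i) - model(x_j)| > L * dist(x_i, x_j)."""
    violations = []
    for (i, xi), (j, xj) in combinations(enumerate(individuals), 2):
        if abs(model(xi) - model(xj)) > L * dist(xi, xj) + 1e-12:
            violations.append((i, j))
    return violations

# Two hypothetical scoring functions over two numeric features.
def smooth(x):
    return 0.5 * x[0] + 0.1 * x[1]

def jumpy(x):
    return 1.0 if x[0] > 0.5 else 0.0

people = [(0.49, 0.2), (0.51, 0.2), (0.9, 0.8)]
print(lipschitz_violations(smooth, people))
print(lipschitz_violations(jumpy, people))
```

The hard threshold in `jumpy` treats the first two, nearly identical, individuals very differently, which is the kind of behaviour the condition is meant to flag.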

Counterfactual fairness

Kusner et al. (Advances in Neural Information Processing Systems, 30) propose to employ counterfactuals, and define a decision-making process as counterfactually fair if, for any individual, the outcome does not change in the counterfactual scenario where the sensitive attributes are changed. The mathematical formulation reads:

$$P(R_{A \leftarrow a} = 1 \mid A = a, X = x) = P(R_{A \leftarrow b} = 1 \mid A = a, X = x), \quad \forall a, b;$$

that is: taken a random individual with sensitive attribute $A = a$ and other features $X = x$, and the same individual if she had $A = b$, they should have the same chance of being accepted. The symbol $R_{A \leftarrow a}$ represents the counterfactual random variable $R$ in the scenario where the sensitive attribute $A$ is fixed to $A = a$. The conditioning on $A = a, X = x$ means that this requirement is at the individual level, in that we are conditioning on all the variables identifying a single observation.

Machine learning models are often trained on data where the outcome depended on the decision made at that time. For example, if a machine learning model has to determine whether an inmate will recidivate and, on that basis, whether the inmate should be released early, the outcome could depend on whether the inmate was released early or not. Mishler et al. propose a formula for counterfactual equalized odds, stated in terms of a random variable, the outcome given that a particular decision was taken, and a sensitive feature.

''Learning Fair Representations''. Retrieved 1 December 2019.

An example is the approach of ''Learning Fair Representations'', where a multinomial random variable is used as an intermediate representation. In the process, the system is encouraged to preserve all information except that which can lead to biased decisions, and to obtain a prediction as accurate as possible. On the one hand, this procedure has the advantage that the preprocessed data can be used for any machine learning task. Furthermore, the classifier does not need to be modified, as the correction is applied to the dataset before processing. On the other hand, the other methods obtain better results in accuracy and fairness.

Ziyuan Zhong, ''Tutorial on Fairness in Machine Learning''. Retrieved 1 December 2019.

''Data preprocessing techniques for classification without discrimination''. Retrieved 17 December 2019.

If the dataset were unbiased, the sensitive variable and the target variable would be statistically independent and the probability of the joint distribution would be the product of the probabilities as follows:

$$P_{exp}(A = a \wedge Y = +) = P(A = a) \times P(Y = +) = \frac{|\{X \in D \mid X(A) = a\}|}{|D|} \times \frac{|\{X \in D \mid X(Y) = +\}|}{|D|}$$

In reality, however, the dataset is not unbiased and the variables are not statistically independent, so the observed probability is:

$$P_{obs}(A = a \wedge Y = +) = \frac{|\{X \in D \mid X(A) = a \wedge X(Y) = +\}|}{|D|}$$

To compensate for the bias, the software adds a
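The comparison between the expected and the observed joint probability can be sketched as follows. The function names and the toy data are hypothetical. Using the ratio of the two probabilities as an instance weight is an assumption made here, in the spirit of the reweighing technique from the cited preprocessing paper.

```python
def expected_and_observed(records, a, y):
    """records: list of (sensitive_value, label) pairs.

    Returns (P_exp, P_obs) for the combination (a, y), where P_exp is the
    product of the marginals and P_obs is the empirical joint frequency.
    """
    n = len(records)
    p_a = sum(1 for s, _ in records if s == a) / n
    p_y = sum(1 for _, t in records if t == y) / n
    p_obs = sum(1 for s, t in records if s == a and t == y) / n
    return p_a * p_y, p_obs

def reweighing_weight(records, a, y):
    """Assumed weight P_exp / P_obs; undefined if (a, y) never occurs."""
    p_exp, p_obs = expected_and_observed(records, a, y)
    return p_exp / p_obs

# Toy dataset: group "f" gets the positive label less often than group "m".
records = ([("f", "+")] * 2 + [("f", "-")] * 3 +
           [("m", "+")] * 4 + [("m", "-")] * 1)

for group in ("f", "m"):
    print(group, expected_and_observed(records, group, "+"),
          reweighing_weight(records, group, "+"))
```

Under this assumption, combinations that occur less often than independence would predict receive a weight above 1, and over-represented combinations a weight below 1.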

''Fairness Beyond Disparate Treatment & Disparate Impact: Learning Classification without Disparate Mistreatment''. Retrieved 1 December 2019.

These constraints force the algorithm to improve fairness by keeping the same rates of certain measures for the protected group and the rest of the individuals. For example, we can add to the objective of the

''Mitigating Unwanted Biases with Adversarial Learning''. Retrieved 17 December 2019.

Joyce Xu, ''Algorithmic Solutions to Algorithmic Bias: A Technical Guide''. Retrieved 17 December 2019.

An important point here is that, in order to propagate correctly, the predictor output $\hat{Y}$ must refer to the raw output of the classifier, not the discrete prediction. The intuitive idea is that we want the predictor to try to minimize $L_P$ (therefore the term $\nabla_W L_P$) while, at the same time, maximizing $L_A$ (therefore the term $-\alpha \nabla_W L_A$), so that the adversary fails at predicting the sensitive variable from $\hat{Y}$. Here $L_P$ and $L_A$ denote the loss functions of the predictor and the adversary, $W$ the weights of the predictor and $\alpha$ a tunable hyperparameter.

The term $-\mathrm{proj}_{\nabla_W L_A} \nabla_W L_P$ prevents the predictor from moving in a direction that helps the adversary decrease its loss function.

It can be shown that training a predictor classification model with this algorithm improves demographic parity with respect to training it without the adversary.


''Equality of Opportunity in Supervised Learning''. Retrieved 1 December 2019.

The advantages of postprocessing include that the technique can be applied after any classifier, without modifying it, and that it performs well on fairness measures. The drawbacks are the need to access the protected attribute at test time and the lack of choice in the balance between accuracy and fairness.

''Decision Theory for Discrimination-aware Classification''. Retrieved 17 December 2019.

Let $P(+|X)$ be the probability, computed by the classifier, that the instance $X$ belongs to the positive class. We say $X$ is a "rejected instance" if $\max(P(+|X), 1 - P(+|X)) \leq \theta$ with a certain $\theta$ such that $0.5 < \theta < 1$. The "ROC" algorithm consists of classifying the non-rejected instances according to their most probable class and the rejected instances as follows: if the instance is an example of a deprived group ($X(A) = a$) then label it as positive, otherwise, label it as negative. We can optimize different measures of discrimination as functions of $\theta$ to find the optimal $\theta$ for each problem and avoid becoming discriminatory against the privileged group.
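The labelling rule can be sketched as below. This is a minimal illustration, not the authors' implementation: it assumes that an instance is rejected when max(P(+|X), 1 - P(+|X)) <= theta with 0.5 < theta < 1, and that non-rejected instances simply receive their most probable class. The function name, the argument names and the default `theta` are hypothetical.

```python
def reject_option_classify(p_pos, group, deprived_group, theta=0.6):
    """Label one instance as "+" or "-".

    p_pos is the classifier's estimate of P(+|X); group is the value of the
    sensitive attribute for this instance.
    """
    if not 0.5 < theta < 1:
        raise ValueError("theta must lie strictly between 0.5 and 1")
    if max(p_pos, 1 - p_pos) <= theta:
        # Rejected instance: the classifier is too uncertain, so the label
        # depends only on membership in the deprived group.
        return "+" if group == deprived_group else "-"
    # Non-rejected instance: keep the most probable class.
    return "+" if p_pos > 0.5 else "-"

print(reject_option_classify(0.55, "a", deprived_group="a"))
print(reject_option_classify(0.55, "b", deprived_group="a"))
print(reject_option_classify(0.90, "b", deprived_group="a"))
```

A larger `theta` widens the band of uncertain scores that is relabelled, which is why it is the natural parameter to tune against a discrimination measure.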

''Fairness and Machine Learning''. Retrieved 15 December 2019.

The separation coefficient is a measure of the distance (at a given level of the probability score) between the predicted cumulative percent negative and the predicted cumulative percent positive.

The greater this separation coefficient is at a given score value, the more effective the model is at differentiating between the set of positives and negatives at a particular probability cut-off. According to Mayes: "It is often observed in the credit industry that the selection of validation measures depends on the modeling approach. For example, if modeling procedure is parametric or semi-parametric, the two-sample K-S test is often used. If the model is derived by heuristic or iterative search methods, the measure of model performance is usually divergence. A third option is the coefficient of separation...The coefficient of separation, compared to the other two methods, seems to be most reasonable as a measure for model performance because it reflects the separation pattern of a model."

Fairness in machine learning refers to the various attempts at correcting algorithmic bias in automated decision processes based on machine learning models. Decisions made by computers after a machine-learning process may be considered unfair if they were based on variables considered sensitive. Examples of these kinds of variables include gender, ethnicity, sexual orientation, disability and more. As is the case with many ethical concepts, definitions of fairness and bias are always controversial. In general, fairness and bias are considered relevant when the decision process impacts people's lives. In machine learning, the problem of algorithmic bias is well known and well studied. Outcomes may be skewed by a range of factors and thus might be considered unfair with respect to certain groups or individuals. An example would be the way social media sites deliver personalized news to consumers.

Context

Discussion about fairness in machine learning is a relatively recent topic. Since 2016 there has been a sharp increase in research into the topic. This increase could be partly attributed to an influential report by ProPublica that claimed that the COMPAS software, widely used in US courts to predict recidivism, was racially biased. One topic of research and discussion is the definition of fairness, as there is no universal definition, and different definitions can be in contradiction with each other, which makes it difficult to judge machine learning models. Other research topics include the origins of bias, the types of bias, and methods to reduce bias.

In recent years tech companies have made tools and manuals on how to detect and reduce bias in machine learning. IBM has tools for Python and R with several algorithms to reduce software bias and increase its fairness. Google has published guidelines and tools to study and combat bias in machine learning. Facebook has reported its use of a tool, Fairness Flow, to detect bias in its AI. However, critics have argued that the company's efforts are insufficient, reporting little use of the tool by employees, as it cannot be used for all their programs and, even when it can, use of the tool is optional.

Controversies

The use of algorithmic decision making in the legal system has been a notable area under scrutiny. In 2014, then U.S. Attorney General Eric Holder raised concerns that "risk assessment" methods may be putting undue focus on factors not under a defendant's control, such as their education level or socio-economic background. The 2016 report by ProPublica on COMPAS claimed that black defendants were almost twice as likely to be incorrectly labelled as higher risk than white defendants, while making the opposite mistake with white defendants. The creator of COMPAS, Northpointe Inc., disputed the report, claiming their tool is fair and that ProPublica made statistical errors, a claim that ProPublica subsequently rebutted.

Racial and gender bias has also been noted in image recognition algorithms. Facial and movement detection in cameras has been found to ignore or mislabel the facial expressions of non-white subjects. In 2015, the automatic tagging features in both Flickr and Google Photos were found to label black people with tags such as "animal" and "gorilla". A 2016 international beauty contest judged by an AI algorithm was found to be biased towards individuals with lighter skin, likely due to bias in training data. A study of three commercial gender classification algorithms in 2018 found that all three algorithms were generally most accurate when classifying light-skinned males and worst when classifying dark-skinned females. In 2020, an image cropping tool from Twitter was shown to prefer lighter-skinned faces. DALL-E, a machine learning text-to-image model released in 2021, has been prone to create racist and sexist images that reinforce societal stereotypes, something that has been admitted by its creators.

Other areas where machine learning algorithms in use have been shown to be biased include job and loan applications. Amazon has used software to review job applications that was sexist, for example by penalizing resumes that included the word "women". In 2019, Apple's algorithm to determine credit card limits for its new Apple Card gave significantly higher limits to males than females, even for couples that shared their finances. Mortgage-approval algorithms in use in the U.S. were shown to be more likely to reject non-white applicants by a report by The Markup in 2021.

Group Fairness criteria

In classification problems, an algorithm learns a function to predict a discrete characteristic $Y$, the target variable, from known characteristics $X$. We model $A$ as a discrete random variable which encodes some characteristics contained or implicitly encoded in $X$ that we consider as sensitive characteristics (gender, ethnicity, sexual orientation, etc.). We finally denote by $R$ the prediction of the classifier.

Now let us define three main criteria to evaluate if a given classifier is fair, that is, if its predictions are not influenced by some of these sensitive variables.

Solon Barocas; Moritz Hardt; Arvind Narayanan, ''Fairness and Machine Learning''. Retrieved 15 December 2019.

Independence

We say the random variables $(R, A)$ satisfy independence if the sensitive characteristics $A$ are statistically independent of the prediction $R$, and we write

$$R \bot A.$$

We can also express this notion with the following formula:

$$P(R = r \mid A = a) = P(R = r \mid A = b) \quad \forall r \in R \quad \forall a, b \in A$$

This means that the classification rate for each target class is equal for people belonging to different groups with respect to sensitive characteristics $A$.

Yet another equivalent expression for independence can be given using the concept of mutual information between random variables, defined as

$$I(X, Y) = H(X) + H(Y) - H(X, Y)$$

In this formula, $H(X)$ is the entropy of the random variable $X$. Then $(R, A)$ satisfy independence if $I(R, A) = 0$.
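The mutual-information view of independence can be estimated from samples as in the sketch below. It assumes the standard identity I(R, A) = H(R) + H(A) - H(R, A), uses empirical frequencies and base-2 logarithms, and writes R for the predictions and A for the sensitive attribute; the function names and the toy data are hypothetical.

```python
import math
from collections import Counter

def entropy(values):
    """Empirical Shannon entropy (in bits) of a sequence of discrete values."""
    n = len(values)
    return -sum((c / n) * math.log2(c / n) for c in Counter(values).values())

def mutual_information(r, a):
    """Empirical I(R, A) = H(R) + H(A) - H(R, A)."""
    return entropy(r) + entropy(a) - entropy(list(zip(r, a)))

groups = ["a", "a", "b", "b"]
independent_predictions = [1, 0, 1, 0]   # same positive rate in both groups
dependent_predictions = [1, 1, 0, 0]     # prediction determined by the group

print(mutual_information(independent_predictions, groups))
print(mutual_information(dependent_predictions, groups))
```

On finite samples the estimate is rarely exactly zero even for an independent classifier, which is one motivation for comparing it against a small slack rather than testing for equality.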

A possible relaxation of the independence definition includes introducing a positive slack $\epsilon > 0$ and is given by the formula:

$$P(R = r \mid A = a) \geq P(R = r \mid A = b) - \epsilon \quad \forall r \in R \quad \forall a, b \in A$$

Finally, another possible relaxation is to require $I(R, A) \leq \epsilon$.
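For a binary classifier, independence and its slack-based relaxation can be checked by comparing the rate of positive predictions across groups. This is a minimal sketch assuming predictions coded as 0/1, with R the predictions and A the group labels; the function names and data are hypothetical.

```python
from collections import defaultdict

def positive_rate_by_group(predictions, groups):
    """Estimate P(R = 1 | A = a) for every group a."""
    totals, positives = defaultdict(int), defaultdict(int)
    for r, a in zip(predictions, groups):
        totals[a] += 1
        positives[a] += int(r == 1)
    return {a: positives[a] / totals[a] for a in totals}

def satisfies_independence(predictions, groups, eps=0.0):
    """True if positive rates differ by at most eps between any two groups.

    For binary predictions this also bounds the gap for R = 0, since
    P(R = 0 | A = a) = 1 - P(R = 1 | A = a).
    """
    rates = positive_rate_by_group(predictions, groups).values()
    return max(rates) - min(rates) <= eps

predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(positive_rate_by_group(predictions, groups))
print(satisfies_independence(predictions, groups))
print(satisfies_independence(predictions, groups, eps=0.5))
```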

Separation

We say the random variables $(R, A, Y)$ satisfy separation if the sensitive characteristics $A$ are statistically independent of the prediction $R$ given the target value $Y$, and we write

$$R \bot A \mid Y.$$

We can also express this notion with the following formula:

$$P(R = r \mid Y = q, A = a) = P(R = r \mid Y = q, A = b) \quad \forall r \in R \quad q \in Y \quad \forall a, b \in A$$

This means that all the dependence of the decision $R$ on the sensitive attribute $A$ must be justified by the actual dependence of the true target variable $Y$.

Another equivalent expression, in the case of a binary target, is that the true positive rate and the false positive rate are equal (and therefore the false negative rate and the true negative rate are equal) for every value of the sensitive characteristics:

$$P(R = 1 \mid Y = 1, A = a) = P(R = 1 \mid Y = 1, A = b) \quad \forall a, b \in A$$

$$P(R = 1 \mid Y = 0, A = a) = P(R = 1 \mid Y = 0, A = b) \quad \forall a, b \in A$$

A possible relaxation of the given definitions is to allow the value for the difference between rates to be a positive number lower than a given slack $\epsilon > 0$, rather than equal to zero.
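For a binary target, separation can be checked by comparing true positive and false positive rates per group, optionally with a slack. The sketch assumes labels and predictions coded as 0/1 and that every group contains both positive and negative cases; the names and data are hypothetical.

```python
def rates_by_group(y_true, y_pred, groups):
    """Return {group: (TPR, FPR)} for binary labels and predictions."""
    counts = {}
    for y, r, a in zip(y_true, y_pred, groups):
        c = counts.setdefault(a, {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
        if y == 1:
            c["tp" if r == 1 else "fn"] += 1
        else:
            c["fp" if r == 1 else "tn"] += 1
    # Division fails if a group has no positive or no negative cases.
    return {a: (c["tp"] / (c["tp"] + c["fn"]), c["fp"] / (c["fp"] + c["tn"]))
            for a, c in counts.items()}

def satisfies_separation(y_true, y_pred, groups, eps=0.0):
    """True if both TPR and FPR differ by at most eps between any two groups."""
    rates = list(rates_by_group(y_true, y_pred, groups).values())
    tprs = [tpr for tpr, _ in rates]
    fprs = [fpr for _, fpr in rates]
    return max(tprs) - min(tprs) <= eps and max(fprs) - min(fprs) <= eps

y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(rates_by_group(y_true, y_pred, groups))
print(satisfies_separation(y_true, y_pred, groups))
print(satisfies_separation(y_true, y_pred, groups, eps=0.5))
```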

Sufficiency

We say the random variables $(R, A, Y)$ satisfy sufficiency if the sensitive characteristics $A$ are statistically independent of the target value $Y$ given the prediction $R$, and we write

$$Y \bot A \mid R.$$

We can also express this notion with the following formula:

$$P(Y = q \mid R = r, A = a) = P(Y = q \mid R = r, A = b) \quad \forall q \in Y \quad r \in R \quad \forall a, b \in A$$

This means that the probability of actually being in each of the groups is equal for two individuals with different sensitive characteristics given that they were predicted to belong to the same group.
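Sufficiency can be checked in the same spirit by estimating, for each predicted class, the share of truly positive cases in each group. The sketch assumes binary labels and predictions coded as 0/1, with Y the actual outcome, R the prediction and A the group; the names and data are hypothetical.

```python
def outcome_rate_given_prediction(y_true, y_pred, groups):
    """Estimate P(Y = 1 | R = r, A = a) for every observed pair (a, r)."""
    totals, positives = {}, {}
    for y, r, a in zip(y_true, y_pred, groups):
        key = (a, r)
        totals[key] = totals.get(key, 0) + 1
        positives[key] = positives.get(key, 0) + int(y == 1)
    return {key: positives[key] / totals[key] for key in totals}

def satisfies_sufficiency(y_true, y_pred, groups, eps=0.0):
    """True if, for each predicted class, the rates differ by at most eps."""
    table = outcome_rate_given_prediction(y_true, y_pred, groups)
    for r in {r for _, r in table}:
        rates = [p for (_, rr), p in table.items() if rr == r]
        if max(rates) - min(rates) > eps:
            return False
    return True

y_true = [1, 0, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 1, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(outcome_rate_given_prediction(y_true, y_pred, groups))
print(satisfies_sufficiency(y_true, y_pred, groups))
```

In the toy data a positive prediction is right half of the time for group "a" and always for group "b", so the same prediction means different things for the two groups.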

Relationships between definitions

Finally, we sum up some of the main results that relate the three definitions given above:

* Assuming $Y$ is binary, if $A$ and $Y$ are not statistically independent, and $R$ and $Y$ are not statistically independent either, then independence and separation cannot both hold.

* If $(R, A, Y)$ as a joint distribution has positive probability for all its possible values and $A$ and $Y$ are not statistically independent, then separation and sufficiency cannot both hold.

Mathematical formulation of group fairness definitions

Preliminary definitions

Most statistical measures of fairness rely on different metrics, so we will start by defining them. When working with a binary classifier, both the predicted and the actual classes can take two values: positive and negative. Now let us start explaining the different possible relations between predicted and actual outcome:

Verma, Sahil, and Julia Rubin. "Fairness definitions explained." In 2018 IEEE/ACM International Workshop on Software Fairness (FairWare), pp. 1-7. IEEE, 2018.

* True positive (TP): The case where both the predicted and the actual outcome are in the positive class.

* True negative (TN): The case where both the predicted outcome and the actual outcome are in the negative class.

* False positive (FP): A case predicted to be in the positive class whose actual outcome is in the negative class.

* False negative (FN): A case predicted to be in the negative class whose actual outcome is in the positive class.

These relations can be easily represented with a confusion matrix, a table that describes the accuracy of a classification model. In this matrix, columns and rows represent instances of the predicted and the actual cases, respectively.
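A small sketch of how the four cases can be counted and arranged, with rows for the actual class and columns for the predicted class. The function names, the 0/1 coding of the classes and the toy data are assumptions made for the example.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count TP, FP, FN and TN for a binary classifier."""
    counts = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive:
            counts["TP" if actual == positive else "FP"] += 1
        else:
            counts["FN" if actual == positive else "TN"] += 1
    return counts

def confusion_matrix(counts):
    """Rows are actual classes (+, -); columns are predicted classes (+, -)."""
    return [[counts["TP"], counts["FN"]],
            [counts["FP"], counts["TN"]]]

y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 1, 0, 1, 0, 0, 1, 0]

counts = confusion_counts(y_true, y_pred)
matrix = confusion_matrix(counts)
print(counts)
print(matrix)
```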

By using these relations, we can define multiple metrics which can be later used to measure the fairness of an algorithm:

* Positive predicted value (PPV): the fraction of positive cases which were correctly predicted out of all the positive predictions. It is usually referred to as precision, and represents the probability

Probability is the branch of mathematics concerning numerical descriptions of how likely an Event (probability theory), event is to occur, or how likely it is that a proposition is true. The probability of an event is a number between 0 and ...

of a correct positive prediction. It is given by the following formula:

* False discovery rate (FDR): the fraction of positive predictions which were actually negative out of all the positive predictions. It represents the probability

Probability is the branch of mathematics concerning numerical descriptions of how likely an Event (probability theory), event is to occur, or how likely it is that a proposition is true. The probability of an event is a number between 0 and ...

of an erroneous positive prediction, and it is given by the following formula:

* Negative predicted value (NPV): the fraction of negative cases which were correctly predicted out of all the negative predictions. It represents the probability

Probability is the branch of mathematics concerning numerical descriptions of how likely an Event (probability theory), event is to occur, or how likely it is that a proposition is true. The probability of an event is a number between 0 and ...

of a correct negative prediction, and it is given by the following formula:

* False omission rate (FOR): the fraction of negative predictions which were actually positive out of all the negative predictions. It represents the probability

Probability is the branch of mathematics concerning numerical descriptions of how likely an Event (probability theory), event is to occur, or how likely it is that a proposition is true. The probability of an event is a number between 0 and ...

of an erroneous negative prediction, and it is given by the following formula:

* True positive rate (TPR): the fraction of positive cases which were correctly predicted out of all the positive cases. It is usually referred to as sensitivity or recall, and it represents the probability of the positive subjects to be classified correctly as such. It is given by the formula:

  $$TPR = P(\text{prediction} = + \mid \text{actual} = +) = \frac{TP}{TP + FN}$$

* False negative rate (FNR): the fraction of positive cases which were incorrectly predicted to be negative out of all the positive cases. It represents the probability of the positive subjects to be classified incorrectly as negative ones, and it is given by the formula:

  $$FNR = P(\text{prediction} = - \mid \text{actual} = +) = \frac{FN}{TP + FN}$$

* True negative rate (TNR): the fraction of negative cases which were correctly predicted out of all the negative cases. It represents the probability of the negative subjects to be classified correctly as such, and it is given by the formula:

  $$TNR = P(\text{prediction} = - \mid \text{actual} = -) = \frac{TN}{TN + FP}$$

* False positive rate (FPR): the fraction of negative cases which were incorrectly predicted to be positive out of all the negative cases. It represents the probability of the negative subjects to be classified incorrectly as positive ones, and it is given by the formula:

  $$FPR = P(\text{prediction} = + \mid \text{actual} = -) = \frac{FP}{TN + FP}$$
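As a minimal sketch, the eight rates listed above can be computed directly from the four confusion-matrix counts. The function names `confusion_counts` and `rates`, and the encoding of the positive class as `1` and the negative class as `0`, are assumptions made for this illustration only.

```python
def confusion_counts(y_true, y_pred):
    """Count TP, TN, FP, FN for binary labels encoded as 1 (positive) / 0 (negative)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, tn, fp, fn


def _ratio(numerator, denominator):
    # A rate is undefined when its denominator is empty; report NaN in that case.
    return numerator / denominator if denominator else float("nan")


def rates(tp, tn, fp, fn):
    """Return the eight rates as a dictionary keyed by their usual abbreviations."""
    return {
        "PPV": _ratio(tp, tp + fp),  # correct positive predictions
        "FDR": _ratio(fp, tp + fp),  # erroneous positive predictions
        "NPV": _ratio(tn, tn + fn),  # correct negative predictions
        "FOR": _ratio(fn, tn + fn),  # erroneous negative predictions
        "TPR": _ratio(tp, tp + fn),  # positives classified correctly
        "FNR": _ratio(fn, tp + fn),  # positives classified as negative
        "TNR": _ratio(tn, tn + fp),  # negatives classified correctly
        "FPR": _ratio(fp, tn + fp),  # negatives classified as positive
    }


if __name__ == "__main__":
    counts = confusion_counts([1, 1, 1, 0, 0, 0, 0, 1], [1, 1, 0, 1, 0, 0, 0, 1])
    print(counts)
    print(rates(*counts))
```

Each pair of rates sharing a denominator (PPV and FDR, NPV and FOR, TPR and FNR, TNR and FPR) sums to 1, which is why equality of one rate across groups implies equality of its complement.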

The following criteria can be understood as measures of the three general definitions given at the beginning of this section, namely Independence, Separation and Sufficiency. In the table to the right, we can see the relationships between them.

To define these measures specifically, we will divide them into three big groups as done in Verma et al.: definitions based on a predicted outcome, on predicted and actual outcomes, and definitions based on predicted probabilities and the actual outcome.

We will be working with a binary classifier and the following notation: $S$ refers to the score given by the classifier, which is the probability of a certain subject being in the positive or the negative class. $R$ represents the final classification predicted by the algorithm, and its value is usually derived from $S$; for example, $R$ will be positive when $S$ is above a certain threshold. $Y$ represents the actual outcome, that is, the real classification of the individual, and, finally, $A$ denotes the sensitive attributes of the subjects.

Definitions based on predicted outcome

The definitions in this section focus on a predicted outcome $R$ for various distributions of subjects. They are the simplest and most intuitive notions of fairness.

* Demographic parity, also referred to as statistical parity, acceptance rate parity and benchmarking. A classifier satisfies this definition if the subjects in the protected and unprotected groups have equal probability of being assigned to the positive predicted class. That is, if the following formula is satisfied for all values $a, b$ of the sensitive attribute $A$:

  $$P(R = + \mid A = a) = P(R = + \mid A = b)$$

* Conditional statistical parity. This is the definition above restricted to a subset of the instances, for example those sharing the same value $l$ of a set of legitimate factors $L$. In mathematical notation this would be:

  $$P(R = + \mid L = l, A = a) = P(R = + \mid L = l, A = b)$$
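Demographic parity can be checked empirically by comparing the share of positive predictions in each group. The sketch below assumes predictions encoded as `1` (positive) and `0` (negative) and one group label per subject; the helper names `positive_rate_by_group` and `demographic_parity_gap` are hypothetical and chosen only for this example.

```python
from collections import defaultdict


def positive_rate_by_group(y_pred, groups):
    """Estimate P(prediction = + | group) for every group value that occurs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for prediction, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += int(prediction == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(y_pred, groups):
    """Largest difference in positive prediction rate between any two groups.

    A gap of 0 means the sample satisfies demographic parity exactly.
    """
    group_rates = positive_rate_by_group(y_pred, groups)
    return max(group_rates.values()) - min(group_rates.values())


if __name__ == "__main__":
    predictions = [1, 0, 1, 1, 0, 0, 1, 0]
    membership = ["a", "a", "a", "a", "b", "b", "b", "b"]
    print(positive_rate_by_group(predictions, membership))
    print(demographic_parity_gap(predictions, membership))
```

Conditional statistical parity could be checked the same way by first filtering the data to the subset of interest and then calling the same function on that subset.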

Definitions based on predicted and actual outcomes

These definitions not only consider the predicted outcome $R$ but also compare it to the actual outcome $Y$. In the formulas below, $a$ and $b$ range over the values of the sensitive attribute $A$.

* Predictive parity, also referred to as outcome test. A classifier satisfies this definition if the subjects in the protected and unprotected groups have equal PPV. That is, if the following formula is satisfied:

  $$P(Y = + \mid R = +, A = a) = P(Y = + \mid R = +, A = b)$$

  Mathematically, if a classifier has equal PPV for both groups, it will also have equal FDR, satisfying the formula:

  $$P(Y = - \mid R = +, A = a) = P(Y = - \mid R = +, A = b)$$

* False positive error rate balance, also referred to as predictive equality. A classifier satisfies this definition if the subjects in the protected and unprotected groups have equal FPR. That is, if the following formula is satisfied:

  $$P(R = + \mid Y = -, A = a) = P(R = + \mid Y = -, A = b)$$

  Mathematically, if a classifier has equal FPR for both groups, it will also have equal TNR, satisfying the formula:

  $$P(R = - \mid Y = -, A = a) = P(R = - \mid Y = -, A = b)$$

* False negative error rate balance, also referred to as equal opportunity. A classifier satisfies this definition if the subjects in the protected and unprotected groups have equal FNR. That is, if the following formula is satisfied:

  $$P(R = - \mid Y = +, A = a) = P(R = - \mid Y = +, A = b)$$

  Mathematically, if a classifier has equal FNR for both groups, it will also have equal TPR, satisfying the formula:

  $$P(R = + \mid Y = +, A = a) = P(R = + \mid Y = +, A = b)$$

* Equalized odds, also referred to as conditional procedure accuracy equality and disparate mistreatment. A classifier satisfies this definition if the subjects in the protected and unprotected groups have equal TPR and equal FPR, satisfying the formula:

  $$P(R = + \mid Y = y, A = a) = P(R = + \mid Y = y, A = b), \quad y \in \{+, -\}$$

* Conditional use accuracy equality. A classifier satisfies this definition if the subjects in the protected and unprotected groups have equal PPV and equal NPV, satisfying the formula:

  $$P(Y = y \mid R = y, A = a) = P(Y = y \mid R = y, A = b), \quad y \in \{+, -\}$$

* Overall accuracy equality. A classifier satisfies this definition if the subjects in the protected and unprotected groups have equal prediction accuracy, that is, the probability of a subject from one class to be assigned to it. That is, if it satisfies the following formula:

  $$P(R = Y \mid A = a) = P(R = Y \mid A = b)$$

* Treatment equality. A classifier satisfies this definition if the subjects in the protected and unprotected groups have an equal ratio of FN and FP, satisfying the formula:

  $$\frac{FN_{A=a}}{FP_{A=a}} = \frac{FN_{A=b}}{FP_{A=b}}$$

Definitions based on predicted probabilities and actual outcome

These definitions are based on the actual outcome $Y$ and the predicted probability score $S$. In the formulas below, $a$ and $b$ range over the values of the sensitive attribute $A$.

* Test-fairness, also known as calibration or matching conditional frequencies. A classifier satisfies this definition if individuals with the same predicted probability score $S$ have the same probability of actually belonging to the positive class, whether they belong to the protected or the unprotected group:

  $$P(Y = + \mid S = s, A = a) = P(Y = + \mid S = s, A = b) \quad \forall s$$

* Well-calibration is an extension of the previous definition. It states that when individuals inside or outside the protected group have the same predicted probability score $S$, they must have the same probability of actually belonging to the positive class, and this probability must be equal to $S$:

  $$P(Y = + \mid S = s, A = a) = P(Y = + \mid S = s, A = b) = s \quad \forall s$$

* Balance for positive class. A classifier satisfies this definition if the subjects constituting the positive class from both protected and unprotected groups have equal average predicted probability score $S$. This means that the expected value of the probability score for the protected and unprotected groups with positive actual outcome $Y$ is the same, satisfying the formula:

  $$E(S \mid Y = +, A = a) = E(S \mid Y = +, A = b)$$

* Balance for negative class. A classifier satisfies this definition if the subjects constituting the negative class from both protected and unprotected groups have equal average predicted probability score $S$. This means that the expected value of the probability score for the protected and unprotected groups with negative actual outcome $Y$ is the same, satisfying the formula:

  $$E(S \mid Y = -, A = a) = E(S \mid Y = -, A = b)$$

Social welfare function

Some scholars have proposed defining algorithmic fairness in terms of a social welfare function. They argue that using a social welfare function enables an algorithm designer to consider fairness and predictive accuracy in terms of their benefits to the people affected by the algorithm. It also allows the designer to trade off efficiency and equity in a principled way. Sendhil Mullainathan has stated that algorithm designers should use social welfare functions in order to recognize absolute gains for disadvantaged groups. For example, a study found that using a decision-making algorithm in pretrial detention rather than pure human judgment reduced the detention rates for Blacks, Hispanics, and racial minorities overall, even while keeping the crime rate constant.

Individual Fairness criteria

An important distinction among fairness definitions is the one between group and individual notions (Mehrabi, Ninareh, Fred Morstatter, Nripsuta Saxena, Kristina Lerman, and Aram Galstyan. "A survey on bias and fairness in machine learning." ACM Computing Surveys (CSUR) 54, no. 6 (2021): 1-35). Roughly speaking, while group fairness criteria compare quantities at a group level, typically identified by sensitive attributes (e.g. gender, ethnicity, age, etc.), individual criteria compare individuals. In words, individual fairness follows the principle that "similar individuals should receive similar treatments".

There is a very intuitive approach to fairness, which usually goes under the name of Fairness Through Unawareness (FTU), or ''Blindness'', that prescribes not explicitly employing sensitive features when making (automated) decisions. This is effectively a notion of individual fairness, since two individuals differing only in the values of their sensitive attributes would receive the same outcome. However, in general, FTU is subject to several drawbacks, the main one being that it does not take into account possible correlations between sensitive attributes and non-sensitive attributes employed in the decision-making process. For example, an agent with the (malignant) intention to discriminate on the basis of gender could introduce in the model a proxy variable for gender (i.e. a variable highly correlated with gender) and effectively use gender information while at the same time being compliant with the FTU prescription.

The problem of ''what variables correlated to sensitive ones are fairly employable by a model'' in the decision-making process is a crucial one, and is relevant for group concepts as well: independence metrics require a complete removal of sensitive information, while separation-based metrics allow for correlation, but only as far as the labeled target variable "justifies" it.

The most general concept of individual fairness was introduced in the pioneering work by Dwork and collaborators in 2012 and can be thought of as a mathematical translation of the principle that the decision map taking features as input should be built such that it is able to "map similar individuals similarly", which is expressed as a Lipschitz condition on the model map. They call this approach Fairness Through Awareness (FTA), precisely as a counterpoint to FTU, since they underline the importance of choosing the appropriate target-related distance metric in order to assess which individuals are ''similar'' in specific situations. Again, this problem is closely related to the point raised above about what variables can be seen as "legitimate" in particular contexts.
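To make the Lipschitz condition concrete, the following sketch checks a simplified version of it on a finite sample: for every pair of individuals, the difference between their scores should not exceed a constant times their distance. The Euclidean distance, the absolute difference between scores and the helper name `lipschitz_violations` are assumptions for illustration; in Fairness Through Awareness the choice of a task-appropriate distance metric is the central modelling decision and is not settled by this code.

```python
import math
from itertools import combinations


def euclidean(x, y):
    """Plain Euclidean distance between two feature vectors (illustrative choice)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def lipschitz_violations(individuals, score, distance=euclidean, lipschitz_const=1.0):
    """Return index pairs (i, j) where |score(x_i) - score(x_j)| > L * d(x_i, x_j).

    An empty list means that, on this sample and for this metric,
    similar individuals are mapped to similar scores.
    """
    violations = []
    for (i, x), (j, y) in combinations(enumerate(individuals), 2):
        if abs(score(x) - score(y)) > lipschitz_const * distance(x, y):
            violations.append((i, j))
    return violations


if __name__ == "__main__":
    people = [(0.0,), (0.1,), (1.0,)]
    hard_cutoff = lambda x: 1.0 if x[0] >= 0.05 else 0.0  # jumps between close individuals
    smooth = lambda x: 0.5 * x[0]                          # changes slowly with the feature
    print(lipschitz_violations(people, hard_cutoff))
    print(lipschitz_violations(people, smooth))
```

A hard cutoff placed between two nearly identical individuals violates the condition, while a slowly varying score does not.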

Causality-based metrics

Causal fairness measures the frequency with which two nearly identical users or applications who differ only in a set of characteristics with respect to which resource allocation must be fair receive identical treatment. An entire branch of the academic research on fairness metrics is devoted to leveraging causal models to assess bias in machine learning models. This approach is usually justified by the fact that the same observational distribution of data may hide different causal relationships among the variables at play, possibly with different interpretations of whether the outcomes are affected by some form of bias or not.

Kusner et al. (Kusner, M. J., Loftus, J., Russell, C., & Silva, R. (2017). ''Counterfactual fairness''. Advances in neural information processing systems, 30) propose to employ counterfactuals, and define a decision-making process as counterfactually fair if, for any individual, the outcome does not change in the counterfactual scenario where the sensitive attributes are changed. The mathematical formulation reads:

$$P(R_{A \leftarrow a} = 1 \mid A = a, X = x) = P(R_{A \leftarrow b} = 1 \mid A = a, X = x), \quad \forall a, b;$$

that is: taking a random individual with sensitive attribute $A = a$ and other features $X = x$, and the same individual if she had $A = b$, they should have the same chance of being accepted. The symbol $R_{A \leftarrow a}$ represents the counterfactual random variable $R$ in the scenario where the sensitive attribute $A$ is fixed to $A = a$. The conditioning on $A = a, X = x$ means that this requirement is at the individual level, in that we are conditioning on all the variables identifying a single observation.

Machine learning models are often trained upon data where the outcome depended on the decision made at that time. For example, if a machine learning model has to determine whether an inmate will recidivate and will determine whether the inmate should be released early, the outcome could be dependent on whether the inmate was released early or not. Mishler et al. propose a formula for counterfactual equalized odds, expressed in terms of a random variable, the outcome given that a particular decision was taken, and a sensitive feature.

Bias Mitigation strategies

Fairness can be applied to machine learning algorithms in three different ways: data preprocessing, optimization during software training, or post-processing results of the algorithm.

Preprocessing

Usually, the classifier is not the only problem; the dataset is also biased. The discrimination of a dataset $D$ with respect to the group $A = a$ can be defined as follows:

$$disc_{A=a}(D) = \frac{|\{X \in D \mid X(A) \neq a, X(Y) = +\}|}{|\{X \in D \mid X(A) \neq a\}|} - \frac{|\{X \in D \mid X(A) = a, X(Y) = +\}|}{|\{X \in D \mid X(A) = a\}|}$$

That is, an approximation to the difference between the probabilities of belonging to the positive class given that the subject has a protected characteristic different from $a$ and equal to $a$.

Algorithms correcting bias at preprocessing remove information about dataset variables which might result in unfair decisions, while trying to alter the data as little as possible. This is not as simple as just removing the sensitive variable, because other attributes can be correlated to the protected one.

A way to do this is to map each individual in the initial dataset to an intermediate representation in which it is impossible to identify whether it belongs to a particular protected group while maintaining as much information as possible. Then, the new representation of the data is adjusted to get the maximum accuracy in the algorithm.

This way, individuals are mapped into a new multivariable representation where the probability of any member of a protected group being mapped to a certain value in the new representation is the same as the probability of an individual who doesn't belong to the protected group. Then, this representation is used to obtain the prediction for the individual, instead of the initial data. As the intermediate representation is constructed giving the same probability to individuals inside or outside the protected group, this attribute is hidden from the classifier.

An example is explained in Zemel et al. (Richard Zemel; Yu (Ledell) Wu; Kevin Swersky; Toniann Pitassi; Cynthia Dwork, ''Learning Fair Representations'', retrieved 1 December 2019), where a multinomial random variable is used as an intermediate representation. In the process, the system is encouraged to preserve all information except that which can lead to biased decisions, and to obtain a prediction as accurate as possible.

On the one hand, this procedure has the advantage that the preprocessed data can be used for any machine learning task. Furthermore, the classifier does not need to be modified, as the correction is applied to the dataset before processing. On the other hand, the other methods obtain better results in accuracy and fairness (Ziyuan Zhong, ''Tutorial on Fairness in Machine Learning'', retrieved 1 December 2019).

Reweighing

Reweighing is an example of a preprocessing algorithm. The idea is to assign a weight to each dataset point such that the weighted discrimination is 0 with respect to the designated group (Faisal Kamiran; Toon Calders, ''Data preprocessing techniques for classification without discrimination'', retrieved 17 December 2019).

If the dataset $D$ were unbiased, the sensitive variable $A$ and the target variable $Y$ would be statistically independent and the probability of the joint distribution would be the product of the probabilities as follows:

$$P_{exp}(A = a \wedge Y = +) = P(A = a) \times P(Y = +) = \frac{|\{X \in D \mid X(A) = a\}|}{|D|} \times \frac{|\{X \in D \mid X(Y) = +\}|}{|D|}$$

In reality, however, the dataset is not unbiased and the variables are not statistically independent, so the observed probability is:

$$P_{obs}(A = a \wedge Y = +) = \frac{|\{X \in D \mid X(A) = a \wedge X(Y) = +\}|}{|D|}$$

To compensate for the bias, the software adds a weight, lower for favored objects and higher for unfavored objects. For each $X \in D$ we get:

$$W(X) = \frac{P_{exp}(A = X(A) \wedge Y = X(Y))}{P_{obs}(A = X(A) \wedge Y = X(Y))}$$

When we have for each $X$ an associated weight $W(X)$, we compute the weighted discrimination with respect to group $A = a$ as follows:

$$disc_{A=a}(D) = \frac{\sum_{X \in D,\, X(A) \neq a,\, X(Y) = +} W(X)}{\sum_{X \in D,\, X(A) \neq a} W(X)} - \frac{\sum_{X \in D,\, X(A) = a,\, X(Y) = +} W(X)}{\sum_{X \in D,\, X(A) = a} W(X)}$$

It can be shown that after reweighing this weighted discrimination is 0.
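The reweighing idea can be sketched in a few lines: each data point receives the ratio between the joint probability expected under independence and the observed joint probability of its (group, label) combination. The function names `reweighing_weights` and `weighted_discrimination`, and the encoding of the positive class as `1`, are assumptions made for this illustration and are not taken from the cited paper.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight of each point: expected joint probability / observed joint probability."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    # (|A=a|/n * |Y=y|/n) / (|A=a, Y=y|/n) simplifies to the expression below.
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_discrimination(groups, labels, weights, protected, positive=1):
    """Weighted positive rate outside the protected group minus the rate inside it."""
    def weighted_positive_rate(inside):
        total = sum(w for g, w in zip(groups, weights) if (g == protected) == inside)
        pos = sum(
            w for g, y, w in zip(groups, labels, weights)
            if (g == protected) == inside and y == positive
        )
        return pos / total

    return weighted_positive_rate(False) - weighted_positive_rate(True)


if __name__ == "__main__":
    groups = ["a"] * 4 + ["b"] * 6
    labels = [1, 0, 0, 0, 1, 1, 1, 1, 0, 0]
    uniform = [1.0] * len(labels)
    print(weighted_discrimination(groups, labels, uniform, protected="a"))
    weights = reweighing_weights(groups, labels)
    print(weighted_discrimination(groups, labels, weights, protected="a"))
```

With uniform weights the function reproduces the unweighted discrimination of the dataset; with the reweighing weights the same quantity vanishes up to floating-point rounding.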

Inprocessing

Another approach is to correct the bias at training time. This can be done by adding constraints to the optimization objective of the algorithm (Muhammad Bilal Zafar; Isabel Valera; Manuel Gómez Rodríguez; Krishna P. Gummadi, ''Fairness Beyond Disparate Treatment & Disparate Impact: Learning Classification without Disparate Mistreatment'', retrieved 1 December 2019). These constraints force the algorithm to improve fairness, by keeping the same rates of certain measures for the protected group and the rest of individuals. For example, we can add to the objective of the algorithm the condition that the false positive rate is the same for individuals in the protected group and the ones outside the protected group.

The main measures used in this approach are false positive rate, false negative rate, and overall misclassification rate. It is possible to add just one or several of these constraints to the objective of the algorithm. Note that equality of false negative rates implies equality of true positive rates, which in turn implies equality of opportunity. After the constraints are added, the problem may become intractable, so a relaxation of them may be needed.

This technique obtains good results in improving fairness while keeping high accuracy and lets the programmer choose the fairness measures to improve. However, each machine learning task may need a different method to be applied and the code in the classifier needs to be modified, which is not always possible.

Adversarial debiasing

We train two classifiers at the same time through some gradient-based method (e.g. gradient descent). The first one, the ''predictor'', tries to accomplish the task of predicting $Y$, the target variable, given $X$, the input, by modifying its weights $W$ to minimize some loss function $L_P(\hat{y}, y)$. The second one, the ''adversary'', tries to accomplish the task of predicting $Z$, the sensitive variable, given $\hat{Y}$ by modifying its weights $U$ to minimize some loss function $L_A(\hat{z}, z)$ (Brian Hu Zhang; Blake Lemoine; Margaret Mitchell, ''Mitigating Unwanted Biases with Adversarial Learning'', retrieved 17 December 2019; Joyce Xu, ''Algorithmic Solutions to Algorithmic Bias: A Technical Guide'', retrieved 17 December 2019).

An important point here is that, in order to propagate correctly, $\hat{Y}$ above must refer to the raw output of the classifier, not the discrete prediction; for example, with an artificial neural network and a classification problem, $\hat{Y}$ could refer to the output of the softmax layer.

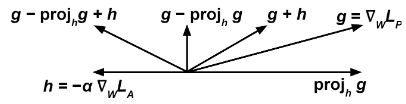

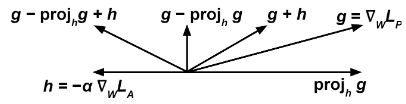

Then we update the adversary's weights $U$ to minimize its loss $L_A$ at each training step according to the gradient $\nabla_U L_A$, and we modify the predictor's weights $W$ according to the expression:

$$\nabla_W L_P - \operatorname{proj}_{\nabla_W L_A} \nabla_W L_P - \alpha \nabla_W L_A$$

where $L_P$ is the predictor's loss and $\alpha$ is a tuneable hyperparameter that can vary at each time step.

The intuitive idea is that we want the ''predictor'' to try to minimize $L_P$ (therefore the term $\nabla_W L_P$) while, at the same time, maximize $L_A$ (therefore the term $-\alpha \nabla_W L_A$), so that the ''adversary'' fails at predicting the sensitive variable from the predictor's output $\hat{Y}$.

The term $-\operatorname{proj}_{\nabla_W L_A} \nabla_W L_P$ prevents the ''predictor'' from moving in a direction that helps the ''adversary'' decrease its loss function.

It can be shown that training a ''predictor'' classification model with this algorithm improves demographic parity with respect to training it without the ''adversary''.
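The predictor update can be illustrated on plain vectors. The sketch below assumes the two gradients with respect to the predictor weights (of the predictor loss and of the adversary loss) have already been computed by some automatic differentiation tool and are passed in as lists of floats; the helper names `project`, `predictor_update_direction` and `gradient_step` are hypothetical. It computes only the update direction, not a full training loop.

```python
def dot(u, v):
    """Inner product of two equally long vectors."""
    return sum(a * b for a, b in zip(u, v))


def project(v, onto):
    """Orthogonal projection of v onto the direction of `onto`."""
    denominator = dot(onto, onto)
    if denominator == 0.0:
        return [0.0] * len(v)  # nothing to project onto
    scale = dot(v, onto) / denominator
    return [scale * component for component in onto]


def predictor_update_direction(grad_lp, grad_la, alpha):
    """Gradient of the predictor loss, minus its projection onto the gradient
    of the adversary loss, minus alpha times the gradient of the adversary loss."""
    projection = project(grad_lp, grad_la)
    return [gp - pr - alpha * ga for gp, pr, ga in zip(grad_lp, projection, grad_la)]


def gradient_step(weights, direction, learning_rate):
    """Move the weights against the given direction, as in gradient descent."""
    return [w - learning_rate * d for w, d in zip(weights, direction)]


if __name__ == "__main__":
    grad_predictor_loss = [1.0, 1.0]
    grad_adversary_loss = [1.0, 0.0]
    direction = predictor_update_direction(grad_predictor_loss, grad_adversary_loss, alpha=0.5)
    print(direction)
    print(gradient_step([0.0, 0.0], direction, learning_rate=0.1))
```

With `alpha = 0` the resulting direction is orthogonal to the adversary gradient, so to first order the step neither helps nor hurts the adversary; with `alpha > 0` the step moves the weights so that the adversary loss increases.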

Postprocessing

The final method tries to correct the results of a classifier to achieve fairness. In this method, we have a classifier that returns a score for each individual and we need to do a binary prediction for them. High scores are likely to get a positive outcome, while low scores are likely to get a negative one, but we can adjust the threshold to determine when to answer yes as desired. Note that variations in the threshold value affect the trade-off between the rates for true positives and true negatives.

If the score function is fair in the sense that it is independent of the protected attribute, then any choice of the threshold will also be fair, but classifiers of this type tend to be biased, so a different threshold may be required for each protected group to achieve fairness. A way to do this is to plot the true positive rate against the false positive rate at various threshold settings (this is called the ROC curve) and find a threshold where the rates for the protected group and other individuals are equal (Moritz Hardt; Eric Price; Nathan Srebro, ''Equality of Opportunity in Supervised Learning'', retrieved 1 December 2019).

The advantages of postprocessing include that the technique can be applied after any classifier, without modifying it, and that it performs well on fairness measures. The drawbacks are the need for access to the protected attribute at test time and the lack of choice in the balance between accuracy and fairness.
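As a simplified illustration of group-specific thresholds, the sketch below picks, for each group, the largest threshold whose true positive rate reaches a common target. It equalizes only the true positive rate (equal opportunity) on the sample it is given and is not the full procedure of Hardt et al.; the function names, the rule "predict positive when the score is at least the threshold" and the label encoding `1`/`0` are assumptions for this example.

```python
def true_positive_rate(scores, labels, threshold):
    """Share of actual positives whose score reaches the threshold."""
    positive_scores = [s for s, y in zip(scores, labels) if y == 1]
    if not positive_scores:
        return float("nan")
    return sum(1 for s in positive_scores if s >= threshold) / len(positive_scores)


def threshold_for_tpr(scores, labels, target_tpr):
    """Largest observed score that, used as threshold, gives TPR >= target_tpr."""
    for candidate in sorted(set(scores), reverse=True):
        if true_positive_rate(scores, labels, candidate) >= target_tpr:
            return candidate
    return min(scores)  # fallback, e.g. when a group has no positive cases


def group_thresholds(scores, labels, groups, target_tpr):
    """Compute one threshold per group so that every group reaches the target TPR."""
    thresholds = {}
    for group in set(groups):
        indices = [i for i, g in enumerate(groups) if g == group]
        thresholds[group] = threshold_for_tpr(
            [scores[i] for i in indices], [labels[i] for i in indices], target_tpr
        )
    return thresholds


if __name__ == "__main__":
    scores = [0.9, 0.8, 0.4, 0.3, 0.6, 0.5, 0.2, 0.1]
    labels = [1, 1, 0, 0, 1, 1, 0, 0]
    groups = ["a"] * 4 + ["b"] * 4
    print(group_thresholds(scores, labels, groups, target_tpr=1.0))
```

In this toy data a single threshold suited to group `a` would reject every actual positive in group `b`, whose scores are systematically lower; separate thresholds give both groups the same true positive rate, at the cost of needing the group label at prediction time.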

Reject Option based Classification

Given a classifier, let $P(+ \mid X)$ be the probability computed by the classifier that the instance $X$ belongs to the positive class +. When $P(+ \mid X)$ is close to 1 or to 0, the instance $X$ is specified with a high degree of certainty to belong to class + or - respectively. However, when $P(+ \mid X)$ is closer to 0.5 the classification is more unclear (Faisal Kamiran; Asim Karim; Xiangliang Zhang, ''Decision Theory for Discrimination-aware Classification'', retrieved 17 December 2019).

We say $X$ is a "rejected instance" if $\max(P(+ \mid X), 1 - P(+ \mid X)) \leq \theta$ with a certain $\theta$ such that $0.5 < \theta < 1$.

The algorithm of "ROC" consists of classifying the non-rejected instances following the rule above and the rejected instances as follows: if the instance is an example of a deprived group ($X(A) = a$) then label it as positive, otherwise, label it as negative.

We can optimize different measures of discrimination as functions of $\theta$ to find the optimal $\theta$ for each problem and avoid becoming discriminatory against the privileged group.
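The decision rule can be sketched as a single function. The sketch assumes that an instance is rejected when the larger of the two class probabilities does not exceed a threshold `theta` with 0.5 < `theta` < 1, and that non-rejected instances are labeled positive when the positive-class probability exceeds 0.5; the function name and the encoding of labels as `1`/`0` are choices made for this example.

```python
def reject_option_classify(p_positive, group, deprived_group, theta):
    """Label one instance with reject option based classification.

    p_positive     -- probability of the positive class given by the classifier
    group          -- value of the sensitive attribute for this instance
    deprived_group -- value of the sensitive attribute marking the deprived group
    theta          -- width of the critical region, with 0.5 < theta < 1
    """
    if not 0.5 < theta < 1.0:
        raise ValueError("theta must lie strictly between 0.5 and 1")
    # Rejected instance: the classifier is not confident about either class.
    if max(p_positive, 1.0 - p_positive) <= theta:
        return 1 if group == deprived_group else 0
    # Confident instance: keep the classifier's own decision.
    return 1 if p_positive > 0.5 else 0


if __name__ == "__main__":
    for probability, group in [(0.9, "b"), (0.1, "a"), (0.6, "a"), (0.6, "b"), (0.4, "a")]:
        label = reject_option_classify(probability, group, deprived_group="a", theta=0.7)
        print(probability, group, label)
```

Larger values of `theta` widen the region in which the group membership decides the label, so tuning `theta` trades off how aggressively uncertain decisions are redistributed.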

See also

* Algorithmic bias
* Machine learning

References