Non-uniform memory access (NUMA) is a computer memory design used in multiprocessing, where the memory access time depends on the memory location relative to the processor. Under NUMA, a processor can access its own local memory faster than non-local memory (memory local to another processor or memory shared between processors). The benefits of NUMA are limited to particular workloads, notably on servers where the data is often associated strongly with certain tasks or users.

NUMA architectures logically follow in scaling from symmetric multiprocessing (SMP) architectures. They were developed commercially during the 1990s by Unisys, Convex Computer (later Hewlett-Packard), Honeywell Information Systems Italy (HISI) (later Groupe Bull), Silicon Graphics (later Silicon Graphics International), Sequent Computer Systems (later IBM), Data General (later EMC, now Dell Technologies), and Digital (later Compaq, then HP, now HPE). Techniques developed by these companies later featured in a variety of Unix-like operating systems, and to an extent in Windows NT.

The first commercial implementation of a NUMA-based Unix system was the Symmetrical Multi Processing XPS-100 family of servers, designed by Dan Gielan of VAST Corporation for Honeywell Information Systems Italy.

Overview

Modern CPUs operate considerably faster than the main memory they use. In the early days of computing and data processing, the CPU generally ran slower than its own memory. The performance lines of processors and memory crossed in the 1960s with the advent of the first supercomputers. Since then, CPUs increasingly have found themselves "starved for data" and having to stall while waiting for data to arrive from memory (e.g. for von Neumann architecture-based computers, see Von Neumann bottleneck). Many supercomputer designs of the 1980s and 1990s focused on providing high-speed memory access as opposed to faster processors, allowing the computers to work on large data sets at speeds other systems could not approach.

Limiting the number of memory accesses provided the key to extracting high performance from a modern computer. For commodity processors, this meant installing an ever-increasing amount of high-speed cache memory and using increasingly sophisticated algorithms to avoid cache misses. But the dramatic increase in size of the operating systems and of the applications run on them has generally overwhelmed these cache-processing improvements. Multi-processor systems without NUMA make the problem considerably worse. Now a system can starve several processors at the same time, notably because only one processor can access the computer's memory at a time.

NUMA attempts to address this problem by providing separate memory for each processor, avoiding the performance hit when several processors attempt to address the same memory. For problems involving spread data (common for servers and similar applications), NUMA can improve the performance over a single shared memory by a factor of roughly the number of processors (or separate memory banks). Another approach to addressing this problem is the multi-channel memory architecture, in which a linear increase in the number of memory channels increases the memory access concurrency linearly.

Of course, not all data ends up confined to a single task, which means that more than one processor may require the same data. To handle these cases, NUMA systems include additional hardware or software to move data between memory banks. This operation slows the processors attached to those banks, so the overall speed increase due to NUMA heavily depends on the nature of the running tasks.
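As a rough illustration of why the gain depends on the workload, the sketch below models average memory latency as a weighted mix of local and remote accesses. The function name and the latency figures (80 ns local, 140 ns remote) are illustrative assumptions, not measurements from any particular system.

```python
def average_latency_ns(local_fraction, local_ns=80.0, remote_ns=140.0):
    """Weighted average latency for a mix of local and remote accesses.

    The default latencies are assumed values chosen only for illustration.
    """
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return local_fraction * local_ns + (1.0 - local_fraction) * remote_ns


if __name__ == "__main__":
    # Show how the average degrades as fewer accesses stay on the local node.
    for fraction in (1.0, 0.9, 0.5, 0.0):
        print(f"{fraction:.0%} local -> {average_latency_ns(fraction):.1f} ns")
```

Under this simple model, a task whose data stays on its own node sees only the local latency, while a task that shares most of its data with other nodes drifts toward the remote figure.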

Implementations

AMD implemented NUMA with its Opteron processor (2003), using HyperTransport. Intel announced NUMA compatibility for its x86 and Itanium servers in late 2007 with its Nehalem and Tukwila CPUs. Both Intel CPU families share a common chipset; the interconnection is called Intel QuickPath Interconnect (QPI), which provides extremely high bandwidth to enable high on-board scalability. QPI was replaced by a new version called Intel UltraPath Interconnect with the release of Skylake (2017).

Cache coherent NUMA (ccNUMA)

Nearly all CPU architectures use a small amount of very fast non-shared memory known as cache to exploit locality of reference in memory accesses. With NUMA, maintaining cache coherence across shared memory has a significant overhead. Although simpler to design and build, non-cache-coherent NUMA systems become prohibitively complex to program in the standard von Neumann architecture programming model.

Typically, ccNUMA uses inter-processor communication between cache controllers to keep a consistent memory image when more than one cache stores the same memory location. For this reason, ccNUMA may perform poorly when multiple processors attempt to access the same memory area in rapid succession. Support for NUMA in operating systems attempts to reduce the frequency of this kind of access by allocating processors and memory in NUMA-friendly ways and by avoiding scheduling and locking algorithms that make NUMA-unfriendly accesses necessary.
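The cost of repeated access to one memory area can be sketched with a toy write-invalidate model. This is a deliberate simplification and not any real protocol: the class `CoherentLine`, the helper `count_messages`, and the rule of one message per fetch or invalidation are all assumptions made for illustration.

```python
class CoherentLine:
    """Toy model of one cache line under a write-invalidate scheme."""

    def __init__(self):
        self.sharers = set()   # processors currently holding a valid copy
        self.messages = 0      # coherence messages sent so far (assumed unit cost)

    def read(self, cpu):
        if cpu not in self.sharers:
            self.messages += 1             # fetch the line into this cache
            self.sharers.add(cpu)

    def write(self, cpu):
        others = self.sharers - {cpu}
        self.messages += len(others)       # one invalidation per other sharer
        if cpu not in self.sharers:
            self.messages += 1             # fetch the line before modifying it
        self.sharers = {cpu}               # the writer now holds the only copy


def count_messages(writers, rounds):
    """Let the processors in `writers` take turns writing the same line."""
    line = CoherentLine()
    for _ in range(rounds):
        for cpu in writers:
            line.write(cpu)
    return line.messages
```

In this model a single writer pays only for the initial fetch, whereas two processors that alternate writes to the same line generate coherence traffic on nearly every write, which is the access pattern NUMA-aware schedulers and allocators try to avoid.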

Alternatively, cache coherency protocols such as the MESIF protocol attempt to reduce the communication required to maintain cache coherency. Scalable Coherent Interface (SCI) is an IEEE standard defining a directory-based cache coherency protocol to avoid scalability limitations found in earlier multiprocessor systems. For example, SCI is used as the basis for the NumaConnect technology.

NUMA vs. cluster computing

One can view NUMA as a tightly coupled form of cluster computing. The addition of virtual memory paging to a cluster architecture can allow the implementation of NUMA entirely in software. However, the inter-node latency of software-based NUMA remains several orders of magnitude greater (slower) than that of hardware-based NUMA.

Software support

Since NUMA largely influences memory access performance, certain software optimizations are needed to allow scheduling threads and processes close to their in-memory data.

* Microsoft Windows 7 and Windows Server 2008 R2 added support for NUMA architecture over 64 logical cores.

* Java 7 added support for a NUMA-aware memory allocator and garbage collector.

* Linux kernel:

**Version 2.5 provided basic NUMA support, which was further improved in subsequent kernel releases.

**Version 3.8 of the Linux kernel brought a new NUMA foundation that allowed development of more efficient NUMA policies in later kernel releases.

**Version 3.13 of the Linux kernel brought numerous policies that aim at putting a process near its memory, together with the handling of cases such as having memory pages shared between processes, or the use of transparent huge pages; new sysctl settings allow NUMA balancing to be enabled or disabled, as well as the configuration of various NUMA memory balancing parameters.

* OpenSolaris models NUMA architecture with lgroups.

* FreeBSD added support for NUMA architecture in version 9.0.

* Silicon Graphics IRIX (discontinued as of 2021) supported ccNUMA architecture across 1240 CPUs with the Origin server series.
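On Linux, the idea of keeping a process close to its memory can be tried from user space. The sketch below assumes a Linux system that exposes its NUMA topology under `/sys/devices/system/node`; the helper names `parse_cpulist`, `numa_nodes`, and `pin_to_node` are hypothetical and not part of any library. It only restricts which CPUs a process may run on and does not set a memory policy.

```python
import glob
import os
import re


def parse_cpulist(text):
    """Expand a kernel CPU list such as '0-3,8-11' into a list of ints."""
    cpus = []
    for part in text.strip().split(","):
        if not part:
            continue                       # empty list, e.g. a memory-only node
        if "-" in part:
            first, last = part.split("-")
            cpus.extend(range(int(first), int(last) + 1))
        else:
            cpus.append(int(part))
    return cpus


def numa_nodes(root="/sys/devices/system/node"):
    """Map NUMA node number to its CPUs; returns {} if the tree is absent."""
    nodes = {}
    for path in glob.glob(os.path.join(root, "node[0-9]*")):
        match = re.search(r"node(\d+)$", path)
        cpulist = os.path.join(path, "cpulist")
        if match and os.path.isfile(cpulist):
            with open(cpulist) as handle:
                nodes[int(match.group(1))] = parse_cpulist(handle.read())
    return nodes


def pin_to_node(node, nodes):
    """Restrict the calling process to the CPUs of one node (Linux only)."""
    os.sched_setaffinity(0, nodes[node])


if __name__ == "__main__":
    for node, cpus in sorted(numa_nodes().items()):
        print(f"node {node}: CPUs {cpus}")
```

Calling `pin_to_node(0, numa_nodes())` would keep the process on node 0's CPUs, which gives the kernel a better chance of satisfying its later allocations from that node's memory.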

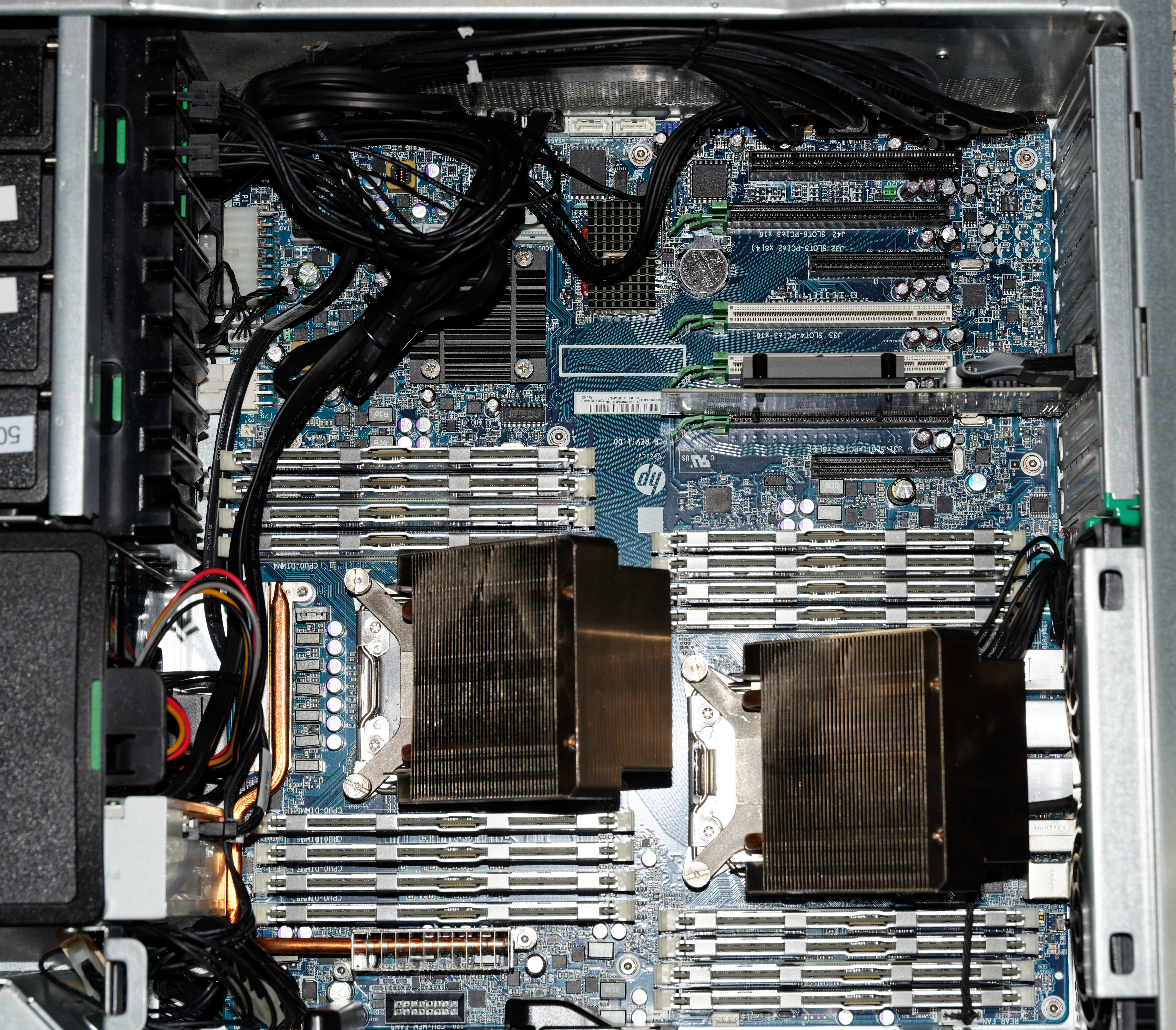

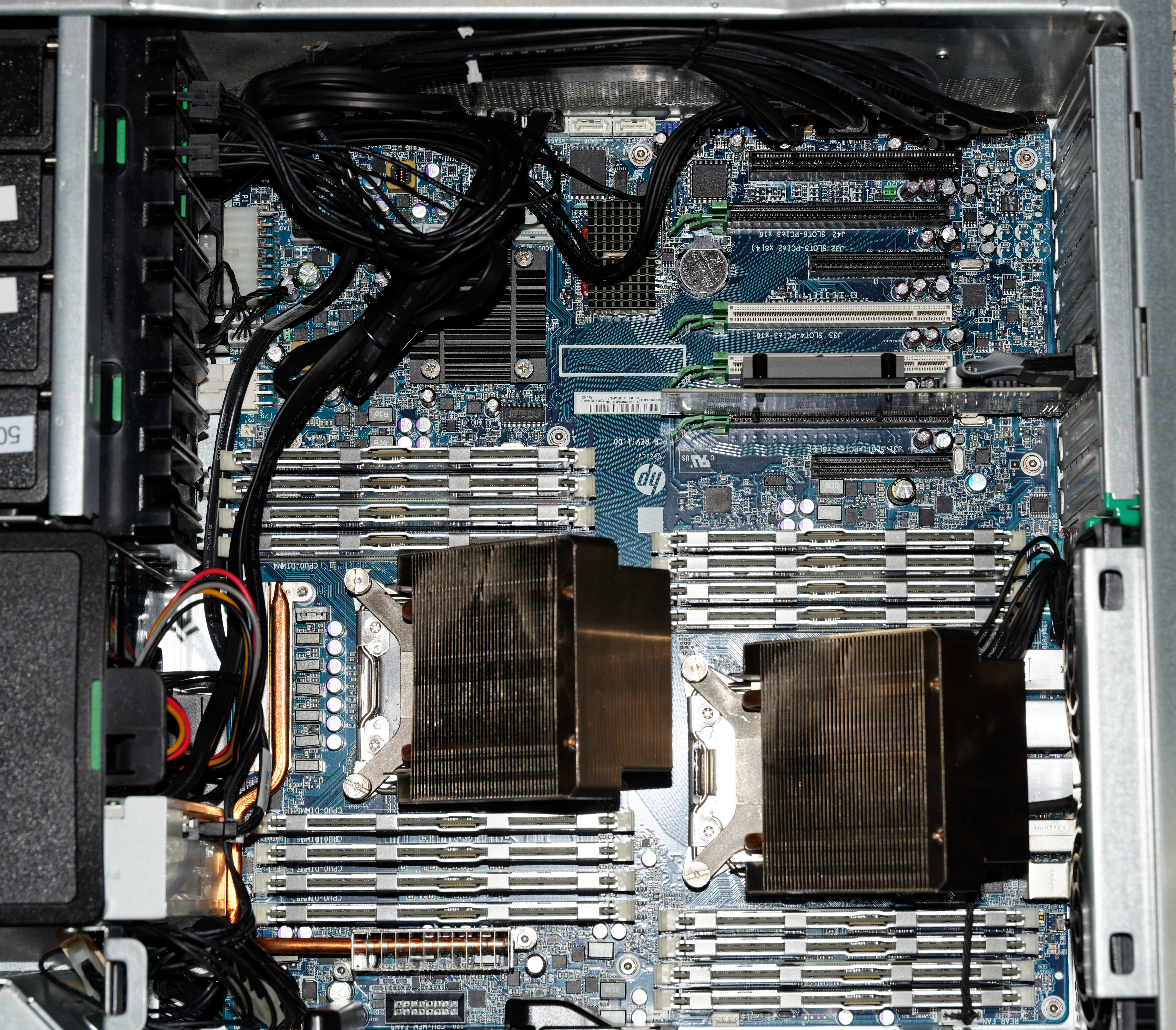

Hardware support

As of 2011, ccNUMA systems are multiprocessor systems based on the AMD Opteron processor, which can be implemented without external logic, and the Intel Itanium processor, which requires the chipset to support NUMA. Examples of ccNUMA-enabled chipsets are the SGI Shub (Super hub), the Intel E8870, the HP sx2000 (used in the Integrity and Superdome servers), and those found in NEC Itanium-based systems. Earlier ccNUMA systems such as those from Silicon Graphics were based on MIPS processors and the DEC Alpha 21364 (EV7) processor.

See also

* Uniform memory access (UMA)

* Cache-only memory architecture (COMA)

* HiperDispatch

* Partitioned global address space

* Nodal architecture

* Scratchpad memory (SPM)

External links

NUMA FAQ

OpenSolaris NUMA Project

Introduction video for the Alpha EV7 system architecture

More videos related to EV7 systems: CPU, IO, etc

NUMA optimization in Windows Applications

NUMA Support in Linux at SGI

Intel Tukwila

Intel QPI (CSI) explained

current Itanium NUMA systems
