|

Unit Propagation

Unit propagation (UP) or boolean constraint propagation (BCP) or the one-literal rule (OLR) is a procedure of automated theorem proving that can simplify a set of (usually propositional) clauses. Definition The procedure is based on unit clauses, i.e. clauses that are composed of a single literal, in conjunctive normal form. Because each clause needs to be satisfied, we know that this literal must be true. If a set of clauses contains the unit clause l, the other clauses are simplified by the application of the two following rules: # every clause (other than the unit clause itself) containing l is removed (the clause is satisfied if l is); # in every clause that contains \neg l this literal is deleted (\neg l can not contribute to it being satisfied). The application of these two rules lead to a new set of clauses that is equivalent to the old one. For example, the following set of clauses can be simplified by unit propagation because it contains the unit clause a. : \ Sin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

|

|

Algorithm

In mathematics and computer science, an algorithm () is a finite sequence of Rigour#Mathematics, mathematically rigorous instructions, typically used to solve a class of specific Computational problem, problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can use Conditional (computer programming), conditionals to divert the code execution through various routes (referred to as automated decision-making) and deduce valid inferences (referred to as automated reasoning). In contrast, a Heuristic (computer science), heuristic is an approach to solving problems without well-defined correct or optimal results.David A. Grossman, Ophir Frieder, ''Information Retrieval: Algorithms and Heuristics'', 2nd edition, 2004, For example, although social media recommender systems are commonly called "algorithms", they actually rely on heuristics as there is no truly "correct" recommendation. As an e ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

|

|

Automated Theorem Proving

Automated theorem proving (also known as ATP or automated deduction) is a subfield of automated reasoning and mathematical logic dealing with proving mathematical theorems by computer programs. Automated reasoning over mathematical proof was a major motivating factor for the development of computer science. Logical foundations While the roots of formalized Logicism, logic go back to Aristotelian logic, Aristotle, the end of the 19th and early 20th centuries saw the development of modern logic and formalized mathematics. Gottlob Frege, Frege's ''Begriffsschrift'' (1879) introduced both a complete propositional logic, propositional calculus and what is essentially modern predicate logic. His ''The Foundations of Arithmetic, Foundations of Arithmetic'', published in 1884, expressed (parts of) mathematics in formal logic. This approach was continued by Bertrand Russell, Russell and Alfred North Whitehead, Whitehead in their influential ''Principia Mathematica'', first published 1910� ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

|

|

Propositional Logic

The propositional calculus is a branch of logic. It is also called propositional logic, statement logic, sentential calculus, sentential logic, or sometimes zeroth-order logic. Sometimes, it is called ''first-order'' propositional logic to contrast it with System F, but it should not be confused with first-order logic. It deals with propositions (which can be true or false) and relations between propositions, including the construction of arguments based on them. Compound propositions are formed by connecting propositions by logical connectives representing the truth functions of conjunction, disjunction, implication, biconditional, and negation. Some sources include other connectives, as in the table below. Unlike first-order logic, propositional logic does not deal with non-logical objects, predicates about them, or quantifiers. However, all the machinery of propositional logic is included in first-order logic and higher-order logics. In this sense, propositional logi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

|

|

Clause (logic)

In logic, a clause is a propositional formula formed from a finite collection of literals (atoms or their negations) and logical connectives. A clause is true either whenever at least one of the literals that form it is true (a disjunctive clause, the most common use of the term), or when all of the literals that form it are true (a conjunctive clause, a less common use of the term). That is, it is a finite disjunction or conjunction of literals, depending on the context. Clauses are usually written as follows, where the symbols l_i are literals: :l_1 \vee \cdots \vee l_n Empty clauses A clause can be empty (defined from an empty set of literals). The empty clause is denoted by various symbols such as \empty, \bot, or \Box. The truth evaluation of an empty disjunctive clause is always false. This is justified by considering that false is the neutral element of the monoid (\, \vee). The truth evaluation of an empty conjunctive clause is always true. This is related to the conc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

|

|

Literal (mathematical Logic)

Literal may refer to: * Interpretation of legal concepts: ** Strict constructionism ** The plain meaning rule (a.k.a. "literal rule") * Literal (mathematical logic), certain logical roles taken by propositions * Literal (computer programming), a fixed value in a program's source code * Biblical literalism * Titled works: ** Literal (magazine), ''Literal'' (magazine) ** Three-issue series Fables (comics)#The Literals, ''The Literals'', in ''Fables'' comics franchise See also * Literal and figurative language * Literal translation * Literalism (other) * Littoral (other) * ''Literally'', English adverb {{disambiguation ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

|

|

Conjunctive Normal Form

In Boolean algebra, a formula is in conjunctive normal form (CNF) or clausal normal form if it is a conjunction of one or more clauses, where a clause is a disjunction of literals; otherwise put, it is a product of sums or an AND of ORs. In automated theorem proving, the notion "''clausal normal form''" is often used in a narrower sense, meaning a particular representation of a CNF formula as a set of sets of literals. Definition A logical formula is considered to be in CNF if it is a conjunction of one or more disjunctions of one or more literals. As in disjunctive normal form (DNF), the only propositional operators in CNF are or (\vee), and (\and), and not (\neg). The ''not'' operator can only be used as part of a literal, which means that it can only precede a propositional variable. The following is a context-free grammar for CNF: : ''CNF'' \, \to \, ''Disjunct'' \, \mid \, ''Disjunct'' \, \land \, ''CNF'' : ''Disjunct'' \, \to \, ''Literal'' \, \mid\, ''Literal'' ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

|

|

Resolution (logic)

In mathematical logic and automated theorem proving, resolution is a rule of inference leading to a refutation-complete theorem-proving technique for sentences in propositional logic and first-order logic. For propositional logic, systematically applying the resolution rule acts as a decision procedure for formula unsatisfiability, solving the (complement of the) Boolean satisfiability problem. For first-order logic, resolution can be used as the basis for a semi-algorithm for the unsatisfiability problem of first-order logic, providing a more practical method than one following from Gödel's completeness theorem. The resolution rule can be traced back to Davis and Putnam (1960); however, their algorithm required trying all ground instances of the given formula. This source of combinatorial explosion was eliminated in 1965 by John Alan Robinson's syntactical unification algorithm, which allowed one to instantiate the formula during the proof "on demand" just as far as needed ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

|

|

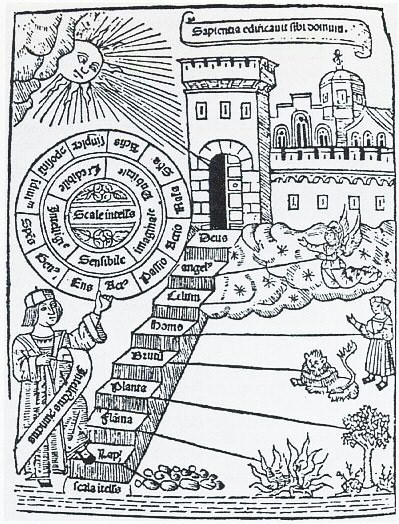

Hierarchy

A hierarchy (from Ancient Greek, Greek: , from , 'president of sacred rites') is an arrangement of items (objects, names, values, categories, etc.) that are represented as being "above", "below", or "at the same level as" one another. Hierarchy is an important concept in a wide variety of fields, such as architecture, philosophy, design, mathematics, computer science, organizational theory, systems theory, systematic biology, and the social sciences (especially political science). A hierarchy can link entities either directly or indirectly, and either vertically or diagonally. The only direct links in a hierarchy, insofar as they are hierarchical, are to one's immediate superior or to one of one's subordinates, although a system that is largely hierarchical can also incorporate alternative hierarchies. Hierarchical links can extend "vertically" upwards or downwards via multiple links in the same direction, following a path (graph theory), path. All parts of the hierarchy that are ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

|

Horn Clause

In mathematical logic and logic programming, a Horn clause is a logical formula of a particular rule-like form that gives it useful properties for use in logic programming, formal specification, universal algebra and model theory. Horn clauses are named for the logician Alfred Horn, who first pointed out their significance in 1951. Definition A Horn clause is a disjunctive clause (a disjunction of literals) with at most one positive, i.e. unnegated, literal. Conversely, a disjunction of literals with at most one negated literal is called a dual-Horn clause. A Horn clause with exactly one positive literal is a definite clause or a strict Horn clause; a definite clause with no negative literals is a unit clause, and a unit clause without variables is a fact; a Horn clause without a positive literal is a goal clause. The empty clause, consisting of no literals (which is equivalent to ''false''), is a goal clause. These three kinds of Horn clauses are illustrated in the follo ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

|

|

Horn-satisfiability

In formal logic, Horn-satisfiability, or HORNSAT, is the problem of deciding whether a given conjunction of propositional Horn clauses is satisfiable or not. Horn-satisfiability and Horn clauses are named after Alfred Horn. A Horn clause is a clause with at most one positive literal, called the ''head'' of the clause, and any number of negative literals, forming the ''body'' of the clause. A Horn formula is a propositional formula formed by conjunction of Horn clauses. Horn satisfiability is actually one of the "hardest" or "most expressive" problems which is known to be computable in polynomial time, in the sense that it is a P-complete problem.Author's 2008 draft version see p.213f) The extension of the problem for quantified Horn formulae can be also solved in polynomial time. The Horn satisfiability problem can also be asked for propositional many-valued logics. The algorithms are not usually linear, but some are polynomial; see Hähnle (2001 or 2003) for a survey. Algori ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

|

|

Quadratic Growth

In mathematics, a function or sequence is said to exhibit quadratic growth when its values are proportional to the square of the function argument or sequence position. "Quadratic growth" often means more generally "quadratic growth in the limit", as the argument or sequence position goes to infinity – in big Theta notation, f(x)=\Theta(x^2). This can be defined both continuously (for a real-valued function of a real variable) or discretely (for a sequence of real numbers, i.e., real-valued function of an integer or natural number variable). Examples Examples of quadratic growth include: *Any quadratic polynomial. *Certain integer sequences such as the triangular numbers. The nth triangular number has value n(n+1)/2, approximately n^2/2. For a real function of a real variable, quadratic growth is equivalent to the second derivative being constant (i.e., the third derivative being zero), and thus functions with quadratic growth are exactly the quadratic polynomials, as the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

|

|

Automated Theorem Proving

Automated theorem proving (also known as ATP or automated deduction) is a subfield of automated reasoning and mathematical logic dealing with proving mathematical theorems by computer programs. Automated reasoning over mathematical proof was a major motivating factor for the development of computer science. Logical foundations While the roots of formalized Logicism, logic go back to Aristotelian logic, Aristotle, the end of the 19th and early 20th centuries saw the development of modern logic and formalized mathematics. Gottlob Frege, Frege's ''Begriffsschrift'' (1879) introduced both a complete propositional logic, propositional calculus and what is essentially modern predicate logic. His ''The Foundations of Arithmetic, Foundations of Arithmetic'', published in 1884, expressed (parts of) mathematics in formal logic. This approach was continued by Bertrand Russell, Russell and Alfred North Whitehead, Whitehead in their influential ''Principia Mathematica'', first published 1910� ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |