
Symbolic Linguistic Representation

A symbolic linguistic representation is a representation of an utterance that uses symbols to represent linguistic information about the utterance, such as information about its phonetics, phonology, morphology, syntax, or semantics. Symbolic linguistic representations differ from non-symbolic representations, such as recordings, in that they use symbols rather than measurements to represent linguistic information. Symbolic representations are widely used in linguistics. In syntactic representations, atomic category symbols often refer to the syntactic category of a lexical item. Examples include lexical categories such as auxiliary verbs (INFL), phrasal categories such as relative clauses (SRel), and empty categories such as wh-traces (tWH). In some formalisms, such as Lexical Functional Grammar, these symbols can refer to both grammatical functions and values of grammatical categories. In linguistics, empty categories are commonly represented with ∅. Symbolic representations also ...
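A syntactic representation built from atomic category symbols can be modelled as a labelled tree. The sketch below is a hypothetical illustration: the `Node` class, the `bracket` function and the example sentence are assumptions made for this example rather than part of any particular formalism, and the output uses labelled bracketing, one common way of writing such trees in text.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class Node:
    label: str                                            # atomic category symbol, e.g. "INFL"
    children: List["Node"] = field(default_factory=list)  # empty for leaf nodes
    word: str = ""                                        # terminal string for leaf nodes

def bracket(node: Node) -> str:
    """Render a tree as a labelled bracketing, e.g. [NP they]."""
    if not node.children:
        return f"[{node.label} {node.word}]"
    inner = " ".join(bracket(child) for child in node.children)
    return f"[{node.label} {inner}]"

# Hypothetical example: "they will leave", with the auxiliary under INFL.
tree = Node("S", [
    Node("NP", word="they"),
    Node("INFL", word="will"),
    Node("VP", [Node("V", word="leave")]),
])
print(bracket(tree))  # [S [NP they] [INFL will] [VP [V leave]]]
```

Because each label is an atomic symbol, the same structure can carry other category inventories simply by changing the label strings.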

Utterance

In spoken language analysis, an utterance is a continuous piece of speech by one person, before or after which there is silence on the part of that person. In spoken languages, it is generally, but not always, bounded by silence. In written language, utterances exist only indirectly, through their representations or portrayals, and they can be represented and delineated in written language in many ways. In spoken language, utterances have several characteristics, such as paralinguistic features, which are aspects of speech such as facial expression, gesture, and posture. Prosodic features include stress, intonation, and tone of voice, as well as ellipsis, which are words that the listener inserts in spoken language to fill gaps. Moreover, other aspects of utterances found in spoken languages are non-fluency features, including voiced/unvoiced pauses (e.g. "umm"), tag quest ...

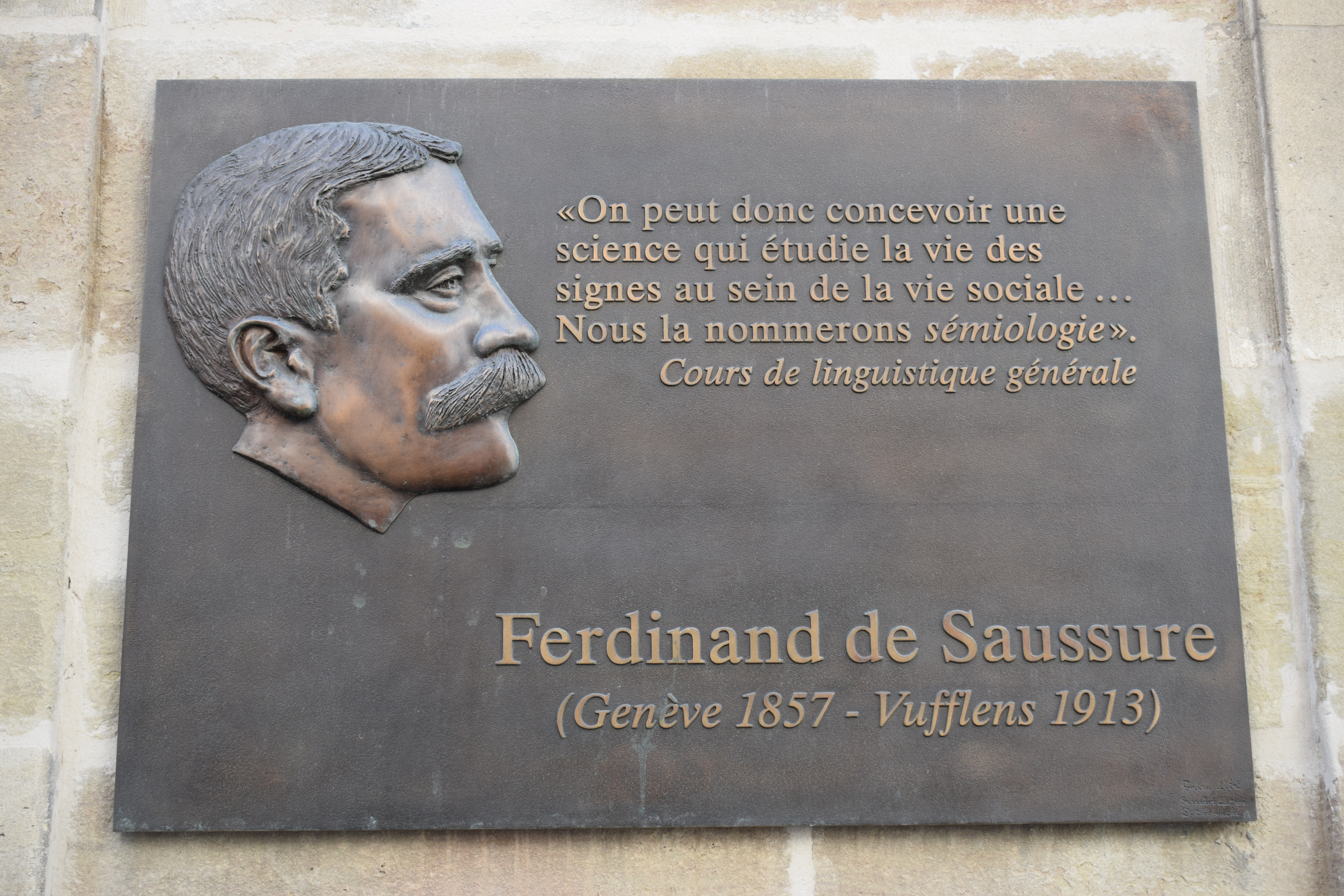

Linguistics

Linguistics is the scientific study of language. The areas of linguistic analysis are syntax (rules governing the structure of sentences), semantics (meaning), morphology (structure of words), phonetics (speech sounds and equivalent gestures in sign languages), phonology (the abstract sound system of a particular language, and analogous systems of sign languages), and pragmatics (how the context of use contributes to meaning). Subdisciplines such as biolinguistics (the study of the biological variables and evolution of language) and psycholinguistics (the study of psychological factors in human language) bridge many of these divisions. Linguistics encompasses many branches and subfields that span both theoretical and practical applications. Theoretical linguistics is concerned with understanding the universal and fundamental nature of language and developing a general ...

Sonority Hierarchy

A sonority hierarchy or sonority scale is a hierarchical ranking of speech sounds (or phones). Sonority is loosely defined as the loudness of speech sounds relative to other sounds of the same pitch, length and stress, so sonority rankings are often related to the amplitude of phones. For example, pronouncing the fricative [v] produces a louder sound than the stop [b], so [v] ranks higher in the hierarchy. However, grounding sonority in amplitude is not universally accepted; many researchers instead describe sonority as the resonance of speech sounds, that is, the degree to which the production of a phone results in vibrations of air particles. Thus, sounds that are described as more sonorous are less subject to masking by ambient noise. Sonority hierarchies are especially important when analyzing syllable structure; rules about which segments may appear together in onsets or codas, such as the sonority sequencing principle (SSP), are formulated in terms of the difference ...
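How a sequencing rule uses the hierarchy can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a standard implementation: the numeric scale (stops < fricatives < nasals < liquids < glides < vowels) and the one-letter segment table are simplified and hypothetical, and published hierarchies differ in detail. The hypothetical `obeys_ssp` function checks that sonority strictly rises from the start of a single syllable to its most sonorous segment and strictly falls after it.

```python
# Simplified, hypothetical sonority scale: higher numbers are more sonorous.
SONORITY = {"stop": 1, "fricative": 2, "nasal": 3, "liquid": 4, "glide": 5, "vowel": 6}

# Hypothetical mapping from a handful of one-letter segments to their classes.
SEGMENT_CLASS = {
    **dict.fromkeys("ptkbdg", "stop"),
    **dict.fromkeys("fsvz", "fricative"),
    **dict.fromkeys("mn", "nasal"),
    **dict.fromkeys("lr", "liquid"),
    **dict.fromkeys("wj", "glide"),
    **dict.fromkeys("aeiou", "vowel"),
}

def sonority(segment):
    return SONORITY[SEGMENT_CLASS[segment]]

def obeys_ssp(syllable):
    """True if sonority strictly rises up to the peak and strictly falls after it.

    Assumes `syllable` is a single syllable with one most-sonorous segment.
    """
    values = [sonority(s) for s in syllable]
    peak = values.index(max(values))
    rising = all(a < b for a, b in zip(values[:peak], values[1:peak + 1]))
    falling = all(a > b for a, b in zip(values[peak:], values[peak + 1:]))
    return rising and falling

print(obeys_ssp("blik"))  # True
print(obeys_ssp("lbik"))  # False
```

Under this strict version, English onsets such as the /st/ of "stop" also fail, which reflects a well-known class of exceptions to the SSP rather than an error in the check.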

Phonotactics

Phonotactics (from Ancient Greek φωνή (phōnḗ) 'voice, sound' and τακτικός (taktikós) 'having to do with arranging') is a branch of phonology that deals with restrictions in a language on the permissible combinations of phonemes. Phonotactics defines permissible syllable structure, consonant clusters and vowel sequences by means of phonotactic constraints. Phonotactic constraints are highly language-specific. For example, in Japanese, consonant clusters like /rv/ do not occur. Similarly, the clusters /kn/ and /ɡn/ are not permitted at the beginning of a word in Modern English but are permitted in German and were permitted in Old and Middle English. In contrast, in some Slavic languages /l/ and /r/ are used alongside vowels as syllable nuclei. Syllables have the following internal segmental structure:

* Onset (optional)
* Rhyme (obligatory, comprises nucleus and coda):
  * Nucleus (obligatory)
  * Coda (optional)

Both onset and coda may be empty, forming a vowel-only syllable, or alternatively, the nucleus can be occup ...
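The syllable template (optional onset, obligatory rhyme made of an obligatory nucleus and an optional coda) can be encoded as a small data type, with a phonotactic constraint expressed as a check over it. This is a hypothetical sketch: segments are plain strings, the nucleus is not restricted to vowels (since some languages allow consonantal nuclei), and `BANNED_WORD_INITIAL` is an illustrative set holding just two clusters that Modern English excludes word-initially, not a model of English phonotactics.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Syllable:
    onset: str      # optional: "" when empty
    nucleus: str    # obligatory
    coda: str = ""  # optional

    def __post_init__(self):
        if not self.nucleus:
            raise ValueError("a syllable must have a nucleus")

    @property
    def rhyme(self):
        # The rhyme comprises the nucleus and the coda.
        return self.nucleus + self.coda

# Hypothetical, deliberately tiny constraint set: onsets that Modern English
# does not allow at the start of a word.
BANNED_WORD_INITIAL = {"kn", "gn"}

def allowed_word_initially(syllable):
    return syllable.onset not in BANNED_WORD_INITIAL

print(allowed_word_initially(Syllable("kn", "i", "t")))  # False
print(allowed_word_initially(Syllable("n", "i", "t")))   # True
print(Syllable("", "a").rhyme)                           # a (vowel-only syllable)
```

Modelling constraints as predicates over a structured syllable, rather than over raw strings, keeps each language-specific rule separate from the template itself.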

Computational Linguistics

Computational linguistics is an interdisciplinary field concerned with the computational modelling of natural language, as well as the study of appropriate computational approaches to linguistic questions. In general, computational linguistics draws upon linguistics, computer science, artificial intelligence, mathematics, logic, philosophy, cognitive science, cognitive psychology, psycholinguistics, anthropology and neuroscience, among others. Computational linguistics is closely related to mathematical linguistics.

Origins

The field has overlapped with artificial intelligence since the efforts in the United States in the 1950s to use computers to automatically translate texts from foreign languages, particularly Russian scientific journals, into English. Since rule-based approaches were able to make arithmetic (systematic) calculations much faster and more accurately than humans, it was expected that lexicon, morphology, syntax and semantics could be learned using explicit rules, a ...

Neural CKY

In biology, the nervous system is the highly complex part of an animal that coordinates its actions and sensory information by transmitting signals to and from different parts of its body. The nervous system detects environmental changes that impact the body, then works in tandem with the endocrine system to respond to such events. Nervous tissue first arose in wormlike organisms about 550 to 600 million years ago. In vertebrates, it consists of two main parts, the central nervous system (CNS) and the peripheral nervous system (PNS). The CNS consists of the brain and spinal cord. The PNS consists mainly of nerves, which are enclosed bundles of long fibers, or axons, that connect the CNS to every other part of the body. Nerves that transmit signals from the brain are called motor (efferent) nerves, while nerves that transmit information from the body to the CNS are called sensory (afferent) nerves. The PNS is divided into two separate subsystems, the somatic and a ...

Word Vectors

In natural language processing, a word embedding is a representation of a word. The embedding is used in text analysis. Typically, the representation is a real-valued vector that encodes the meaning of the word in such a way that words that are closer in the vector space are expected to be similar in meaning. Word embeddings can be obtained using language modeling and feature learning techniques, where words or phrases from the vocabulary are mapped to vectors of real numbers. Methods to generate this mapping include neural networks, dimensionality reduction on the word co-occurrence matrix, probabilistic models, explainable knowledge base methods, and explicit representation in terms of the context in which words appear. Word and phrase embeddings, when used as the underlying input representation, have been shown to boost performance in NLP tasks such as syntactic parsing and sentiment analysis.

Development and history of the approach

In distributional semantics, a qua ...
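Among the methods listed for word embeddings is explicit representation in terms of the contexts in which words appear. A minimal sketch of that idea follows, assuming a toy two-sentence corpus, whitespace tokenization and a context window of one word on each side (all hypothetical choices): each word becomes a sparse vector of co-occurrence counts, and closeness in the vector space is measured with cosine similarity. Dense embeddings learned by neural networks or by dimensionality reduction are not shown.

```python
import math
from collections import Counter, defaultdict

def cooccurrence_vectors(sentences, window=1):
    """Map each word to a Counter of the words seen within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = sentence.split()  # naive whitespace tokenization (an assumption)
        for i, word in enumerate(tokens):
            for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors

def cosine(u, v):
    """Cosine similarity of two sparse count vectors stored as Counters."""
    dot = sum(u[k] * v[k] for k in u.keys() & v.keys())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

corpus = ["the cat sat on the mat", "the dog sat on the rug"]
vecs = cooccurrence_vectors(corpus)
print(round(cosine(vecs["cat"], vecs["dog"]), 3))  # 1.0: identical contexts
print(round(cosine(vecs["cat"], vecs["sat"]), 3))  # 0.0: no shared context words
```

"cat" and "dog" occur in the same contexts ("the" before, "sat" after), so their vectors point in the same direction; real corpora are far larger, which is why count vectors are usually weighted and reduced before use.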

Byte Pair Encoding

Byte-pair encoding (also known as BPE, or digram coding) is an algorithm, first described in 1994 by Philip Gage, for encoding strings of text into smaller strings by creating and using a translation table. A slightly modified version of the algorithm is used in large language model tokenizers. The original version of the algorithm focused on compression. It replaces the highest-frequency pair of bytes with a new byte that was not contained in the initial dataset. A lookup table of the replacements is required to rebuild the initial dataset. The modified version builds "tokens" (units of recognition) that match varying amounts of source text, from single characters (including single digits or single punctuation marks) to whole words (even long compound words).

Original algorithm

The original BPE algorithm operates by iteratively replacing the most common contiguous sequences of characters in a target text with unused 'placeholder' bytes. The iteration ends when no sequences can be ...
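The compression form of BPE can be sketched in a few lines of Python. This is an illustrative sketch, not Gage's original code: the function names are hypothetical, placeholders are drawn from byte values absent from the initial data, and this version stops when no pair occurs at least twice or no unused byte value remains. Decompression undoes the replacements in reverse order of creation using the lookup table.

```python
from collections import Counter

def bpe_compress(data: bytes):
    """Repeatedly replace the most frequent adjacent byte pair with an unused byte."""
    seq = list(data)
    used = set(seq)
    # Placeholder candidates: byte values that never occur in the initial data.
    free = [b for b in range(256) if b not in used]
    table = {}  # placeholder byte -> (left, right) pair it replaced, in creation order
    while free:
        pairs = Counter(zip(seq, seq[1:]))
        if not pairs:
            break
        pair, count = pairs.most_common(1)[0]
        if count < 2:
            break  # replacing a pair that occurs only once saves nothing
        new = free.pop(0)
        table[new] = pair
        out, i = [], 0
        while i < len(seq):
            # Scan left to right, replacing non-overlapping occurrences of the pair.
            if i + 1 < len(seq) and (seq[i], seq[i + 1]) == pair:
                out.append(new)
                i += 2
            else:
                out.append(seq[i])
                i += 1
        seq = out
    return bytes(seq), table

def bpe_decompress(data: bytes, table) -> bytes:
    """Expand placeholders, newest first, back into the pairs they replaced."""
    seq = list(data)
    for placeholder, (left, right) in reversed(list(table.items())):
        expanded = []
        for b in seq:
            if b == placeholder:
                expanded.extend((left, right))
            else:
                expanded.append(b)
        seq = expanded
    return bytes(seq)

packed, table = bpe_compress(b"aaabdaaabac")
print(len(packed), len(table))              # 5 3
print(bpe_decompress(packed, table))        # b'aaabdaaabac'
```

Here the 11-byte input shrinks to 5 bytes plus a 3-entry table. Expanding the newest placeholder first is safe because a pair can only contain placeholders created before it.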