
Statistical Machine Translation

Statistical machine translation (SMT) is a machine translation approach in which translations are generated on the basis of statistical models whose parameters are derived from the analysis of bilingual text corpora. The statistical approach contrasts with rule-based approaches to machine translation as well as with example-based machine translation. It superseded the earlier rule-based approach, which required an explicit description of every linguistic rule, was costly, and often did not generalize to other languages. The first ideas of statistical machine translation were introduced by Warren Weaver in 1949, including the idea of applying Claude Shannon's information theory. Statistical machine translation was re-introduced in the late 1980s and early 1990s by researchers at IBM's Thomas J. Watson Research Center. Before the introduction of neural machine translation, it was by far the most widely studied machine translation method. The idea b ...

Machine Translation

Machine translation is the use of computational techniques to translate text or speech from one language to another, including the contextual, idiomatic and pragmatic nuances of both languages. Early approaches were mostly rule-based or statistical. These methods have since been superseded by neural machine translation and large language models. The origins of machine translation can be traced back to the work of Al-Kindi, a ninth-century Arabic cryptographer who developed techniques for systemic language translation, including cryptanalysis, frequency analysis, and probability and statistics, which are used in modern machine translation. The idea of machine translation later appeared in the 17th century. In 1629, René Descartes proposed a universal language, with equivalent ideas in different tongues sharing one symbol. The idea of using digital computers for translation of natural languages was proposed as early as 1947 by England's A. D. Booth and Warr ...

Hidden Markov Model

A hidden Markov model (HMM) is a Markov model in which the observations are dependent on a latent (or ''hidden'') Markov process (referred to as X). An HMM requires that there be an observable process Y whose outcomes depend on the outcomes of X in a known way. Since X cannot be observed directly, the goal is to learn about the state of X by observing Y. By definition of being a Markov model, an HMM has an additional requirement that the outcome of Y at time t = t_0 must be "influenced" exclusively by the outcome of X at t = t_0, and that the outcomes of X and Y at t < t_0 must be conditionally independent of Y at t = t_0 given X at time t = t_0. Estimation of the parameters in an HMM can be performed using maximum likelihood estimation. For linear chain HMMs, the Baum–Welch algorithm can be used to estimate parameters. Hidden Markov models are known for their applications to thermodynamics, statistical mechanics, physics, chem ...
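As a rough illustration of the dependence structure described above (Y at each step depends only on the hidden state X at that step), the following sketch computes the probability of an observation sequence with the forward algorithm. The two-state model, its state and symbol names, and all probabilities are invented for illustration and do not come from the summarized article.

```python
# Hypothetical two-state HMM; every name and number here is an assumption.
STATES = ("rainy", "sunny")
INITIAL = {"rainy": 0.6, "sunny": 0.4}
TRANSITION = {
    "rainy": {"rainy": 0.7, "sunny": 0.3},
    "sunny": {"rainy": 0.4, "sunny": 0.6},
}
EMISSION = {
    "rainy": {"walk": 0.1, "shop": 0.4, "clean": 0.5},
    "sunny": {"walk": 0.6, "shop": 0.3, "clean": 0.1},
}


def sequence_likelihood(observations):
    """Forward algorithm: P(Y_1..Y_n), summed over all hidden paths of X."""
    if not observations:
        return 1.0
    # alpha[s] holds P(Y_1..Y_t, X_t = s) for the current step t.
    alpha = {s: INITIAL[s] * EMISSION[s][observations[0]] for s in STATES}
    for obs in observations[1:]:
        # The new observation depends only on the current hidden state s;
        # the past enters solely through the previous alpha values.
        alpha = {
            s: EMISSION[s][obs]
            * sum(alpha[prev] * TRANSITION[prev][s] for prev in STATES)
            for s in STATES
        }
    return sum(alpha.values())
```

Calling `sequence_likelihood(["walk", "shop"])` returns the total probability of that observation sequence under the toy model; the hidden states are never observed, only marginalized out.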

Synchronous Context-free Grammar

Synchronous context-free grammars (SynCFG or SCFG; not to be confused with stochastic CFGs) are a type of formal grammar designed for use in transfer-based machine translation. Rules in these grammars apply to two languages at the same time, capturing grammatical structures that are each other's translations. The theory of SynCFGs borrows from syntax-directed transduction and syntax-based machine translation, modeling the reordering of clauses that occurs when translating a sentence by correspondences between phrase-structure rules in the source and target languages. Performance of SCFG-based MT systems has been found comparable with, or even better than, state-of-the-art phrase-based machine translation systems. Several algorith ...
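To make the idea of rules that apply to two languages at once more concrete, here is a toy sketch in which each rule carries a source-side and a target-side right-hand side that share the same nonterminals. The grammar, the nonterminal names, and the English/Japanese word pairs are assumptions made up for this example; the sketch also assumes each nonterminal occurs at most once per rule, so no explicit link indices are needed.

```python
# Toy synchronous grammar (hypothetical): nonterminal -> (source RHS, target RHS).
# Symbols that are keys of RULES are nonterminals; everything else is a terminal.
RULES = {
    "S": (["NP_SUBJ", "VP"], ["NP_SUBJ", "VP"]),
    # The verb phrase is reordered on the target side (verb-final).
    "VP": (["V", "NP_OBJ"], ["NP_OBJ", "V"]),
    "NP_SUBJ": (["I"], ["watashi wa"]),
    "NP_OBJ": (["the box"], ["hako wo"]),
    "V": (["open"], ["akemasu"]),
}


def derive(symbol):
    """Expand a nonterminal into a (source string, target string) pair."""
    source_rhs, target_rhs = RULES[symbol]
    # Expand each nonterminal once so both sides share one sub-derivation.
    expansions = {s: derive(s) for s in source_rhs if s in RULES}
    source = " ".join(
        expansions[s][0] if s in expansions else s for s in source_rhs
    )
    target = " ".join(
        expansions[s][1] if s in expansions else s for s in target_rhs
    )
    return source, target
```

Here `derive("S")` builds both sentences from a single derivation, and the `VP` rule is where the clause reordering between the two languages is encoded.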

Data-oriented Parsing

Data-oriented parsing (DOP, also data-oriented processing) is a probabilistic model in computational linguistics. DOP was conceived by Remko Scha in 1990 with the aim of developing a performance-oriented grammar framework. Unlike other probabilistic models, DOP takes into account all subtrees contained in a treebank rather than being restricted to, for example, 2-level subtrees (like PCFGs), thus allowing for more context-sensitive information. Several variants of DOP have been developed. The initial version developed by Rens Bod in 1992 was based on tree-substitution grammar (R. Bod, "A computational model of language performance: Data oriented parsing", in: COLING 1992 Volume 3: The 15th International Conference on Computational Linguistics, https://www.aclweb.org/anthology/C92-3126.pdf), while more recently, DOP has been combined with lexical-functional grammar (LFG). The resulting DOP-LFG finds an application in machine translation. Other work on learning and parameter estimation f ...

Stochastic Parsing

Stochastic is the property of being well-described by a random probability distribution. ''Stochasticity'' and ''randomness'' are technically distinct concepts: the former refers to a modeling approach, while the latter describes phenomena; in everyday conversation, however, these terms are often used interchangeably. In probability theory, the formal concept of a ''stochastic process'' is also referred to as a ''random process''. Stochasticity is used in many different fields, including image processing, signal processing, computer science, information theory, telecommunications, chemistry, ecology, neuroscience, physics, and cryptography. It is also used in finance (e.g., stochastic oscillator), due to seemingly random changes in the different markets within the financial sector, and in medicine, linguistics, music, media, colour theory, botany, manufacturing and geomorphology. The word ''stochastic'' in English was originally used as an adjective with the definit ...

Parse Tree

A parse tree or parsing tree (also known as a derivation tree or concrete syntax tree) is an ordered, rooted tree that represents the syntactic structure of a string according to some context-free grammar. The term ''parse tree'' itself is used primarily in computational linguistics; in theoretical syntax, the term ''syntax tree'' is more common. Concrete syntax trees reflect the syntax of the input language, making them distinct from the abstract syntax trees used in computer programming. Unlike Reed-Kellogg sentence diagrams used for teaching grammar, parse trees do not use distinct symbol shapes for different types of constituents. Parse trees are usually constructed based on either the constituency relation of constituency grammars (phrase structure grammars) or the dependency relation of dependency grammars. Parse trees may be generated for sentences in natural languages (see natural language processing), as well as during processing of computer languages, such a ...

Syntax (linguistics)

In linguistics, syntax is the study of how words and morphemes combine to form larger units such as phrases and sentences. Central concerns of syntax include word order, grammatical relations, hierarchical sentence structure (constituency), agreement, the nature of crosslinguistic variation, and the relationship between form and meaning (semantics). Diverse approaches, such as generative grammar and functional grammar, offer unique perspectives on syntax, reflecting its complexity and centrality to understanding human language. The word ''syntax'' comes from an ancient Greek word meaning an orderly or systematic arrangement, which consists of ''syn-'' ("together" or "alike") and ''táxis'' ("arrangement"). In Hellenistic Greek, this also specifically developed a use referring to the grammatical order of words, with a slightly altered spelling. The English term, which first appeared in 1548, is partly borrowed from Latin and Greek, though the Latin t ...

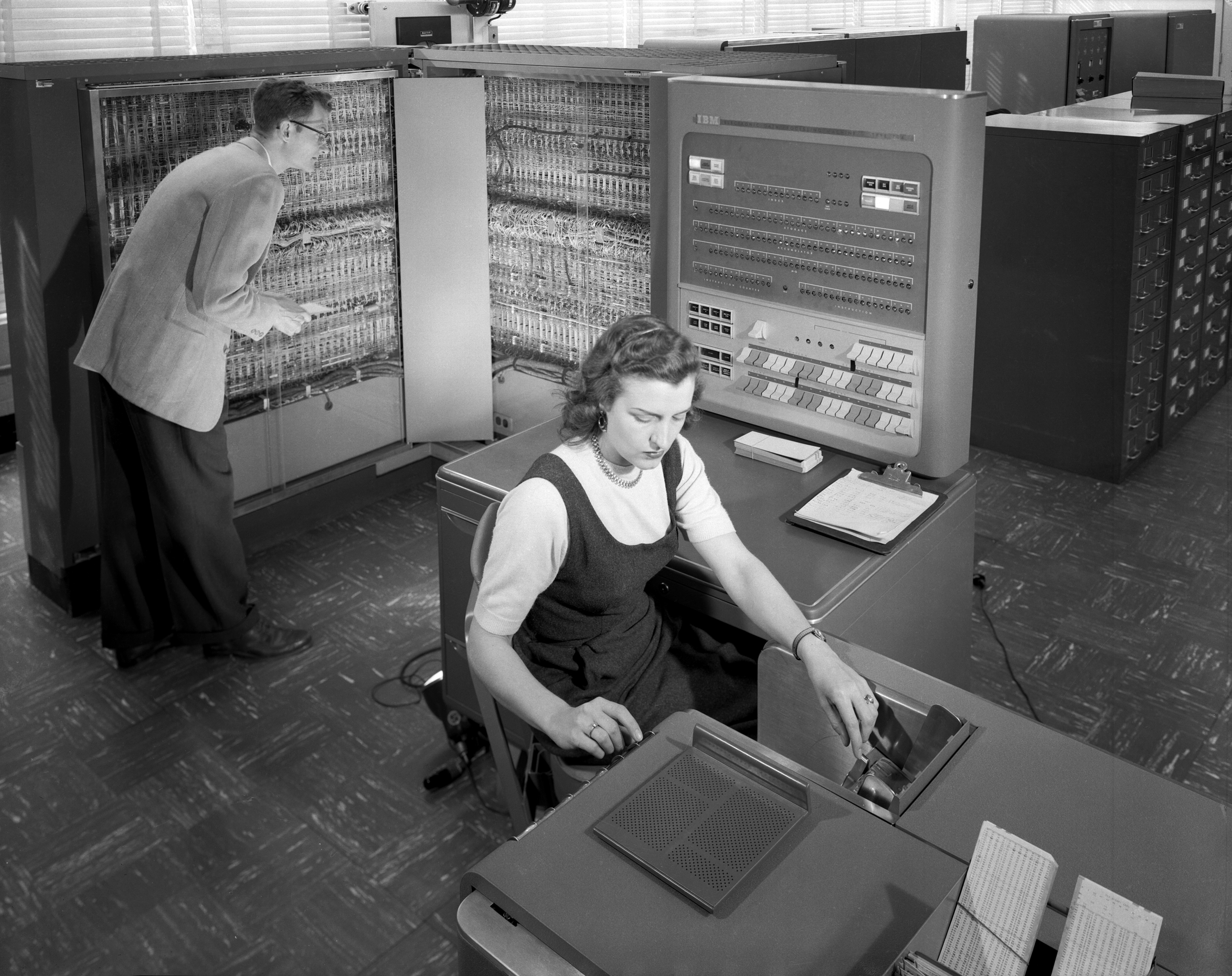

IBM Alignment Models

International Business Machines Corporation (using the trademark IBM), nicknamed Big Blue, is an American multinational technology company headquartered in Armonk, New York, and present in over 175 countries. It is a publicly traded company and one of the 30 companies in the Dow Jones Industrial Average. IBM is the largest industrial research organization in the world, with 19 research facilities across a dozen countries; for 29 consecutive years, from 1993 to 2021, it held the record for most annual U.S. patents generated by a business. IBM was founded in 1911 as the Computing-Tabulating-Recording Company (CTR), a holding company of manufacturers of record-keeping and measuring systems. It was renamed "International Business Machines" in 1924 and soon became the leading manufacturer of punch-card tabulating systems. During the 1960s and 1970s, the IBM mainframe, exemplified by the System/360 and its successors, was the world's dominant computing platform, with the company p ...

Expectation–maximization Algorithm

In statistics, an expectation–maximization (EM) algorithm is an iterative method to find (local) maximum likelihood or maximum a posteriori (MAP) estimates of parameters in statistical models, where the model depends on unobserved latent variables. The EM iteration alternates between performing an expectation (E) step, which creates a function for the expectation of the log-likelihood evaluated using the current estimate for the parameters, and a maximization (M) step, which computes parameters maximizing the expected log-likelihood found on the ''E'' step. These parameter estimates are then used to determine the distribution of the latent variables in the next E step. It can be used, for example, to estimate a mixture of Gaussians, or to solve the multiple linear regression problem. The EM algorithm was explained and given its name in a classic 1977 paper by Arthur Dempster, Nan Laird, and Donald Rubin. They pointed out that the method had been "proposed man ...
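The alternation between the E step and the M step can be sketched for the mixture-of-Gaussians case mentioned above. This is a minimal illustration for two one-dimensional components, not a reference implementation: the function names, the initialization at the data extremes, the fixed iteration count, and the variance floor are all assumptions chosen to keep the example short.

```python
import math


def normal_pdf(x, mean, var):
    """Density of a one-dimensional Gaussian with the given mean and variance."""
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)


def em_two_gaussians(data, iterations=50):
    """Fit a two-component 1-D Gaussian mixture with plain EM (a sketch)."""
    # Crude initialization (an assumption): place the means at the extremes.
    means = [min(data), max(data)]
    variances = [1.0, 1.0]
    weights = [0.5, 0.5]
    for _ in range(iterations):
        # E step: posterior probability (responsibility) of each component
        # for each point, under the current parameter estimates.
        resp = []
        for x in data:
            joint = [
                weights[k] * normal_pdf(x, means[k], variances[k])
                for k in range(2)
            ]
            total = sum(joint)
            resp.append([j / total for j in joint])
        # M step: re-estimate parameters to maximize the expected
        # log-likelihood given those responsibilities.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            weights[k] = nk / len(data)
            means[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            spread = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, data))
            # Floor the variance (an assumption) to avoid a degenerate component.
            variances[k] = max(spread / nk, 1e-6)
    return weights, means, variances
```

On data drawn from two well-separated clusters, the returned means settle near the cluster centers. On harder data, EM may converge to a local optimum that depends on the initialization, which is why the summary describes the estimates as (local) maxima.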

Syntactic Categories

A syntactic category is a syntactic unit that theories of syntax assume. Word classes, largely corresponding to traditional parts of speech (e.g. noun, verb, preposition, etc.), are syntactic categories. In phrase structure grammars, the ''phrasal categories'' (e.g. noun phrase, verb phrase, prepositional phrase, etc.) are also syntactic categories. Dependency grammars, however, do not acknowledge phrasal categories (at least not in the traditional sense). Word classes considered as syntactic categories may be called ''lexical categories'', as distinct from phrasal categories. The terminology is somewhat inconsistent between the theoretical models of different linguists. However, many grammars also draw a distinction between ''lexical categories'' (which tend to consist of content words, or phrases headed by them) and ''functional categories'' (which tend to consist of function words or abstract functional elements, or phrases headed by them). The term ''lexical category'' the ...

Phraseme

A phraseme, also called a set phrase, fixed expression, multiword expression (in computational linguistics), or idiom, is a multi-word or multi-morphemic utterance whose components include at least one that is selectionally constrained or restricted by linguistic convention such that it is not freely chosen. In the most extreme cases, there are expressions such as ''X kicks the bucket'' ≈ ‘person X dies of natural causes, the speaker being flippant about X’s demise’ where the unit is selected as a whole to express a meaning that bears little or no relation to the meanings of its parts. All of the words in this expression are chosen restrictedly, as part of a chunk. At the other extreme, there are collocations such as ''stark naked'', ''hearty laugh'', or ''infinite patience'' where one of the words is chosen freely (''naked'', ''laugh'', and ''patience'', respectively) based on the meaning the speaker wishes to express while the choice of the other (intensifying) word ('' ...