
Split-plot

In statistics, restricted randomization occurs in the design of experiments and in particular in the context of randomized experiments and randomized controlled trials. Restricted randomization allows intuitively poor allocations of treatments to experimental units to be avoided, while retaining the theoretical benefits of randomization. For example, in a clinical trial of a new proposed treatment of obesity compared to a control, an experimenter would want to avoid outcomes of the randomization in which the new treatment was allocated only to the heaviest patients. The concept was introduced by Frank Yates (1948) and William J. Youden (1972) "as a way of avoiding bad spatial patterns of treatments in designed experiments."

Example of nested data: Consider a batch process that uses 7 monitor wafers in each run. The plan further calls for measuring a response variable on each wafer at each of 9 sites. The organization of the sampling plan has a hierarchical or nested structure: the ...
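One simple way to picture the obesity-trial example above is to redraw the random allocation until it passes a balance check. The sketch below is only an illustration under stated assumptions: the function name `restricted_allocation`, the patient weights, and the balance rule (a cap on the difference in mean weight between groups) are hypothetical and do not come from Yates or Youden.

```python
import random


def restricted_allocation(weights, n_treated, max_mean_gap, seed=None, max_tries=10_000):
    """Draw random allocations and keep the first one that is balanced.

    An allocation is accepted when the mean weight of the treated group
    differs from the mean weight of the control group by at most
    max_mean_gap (a hypothetical balance rule chosen for this sketch).
    """
    if not 0 < n_treated < len(weights):
        raise ValueError("n_treated must leave both groups non-empty")
    rng = random.Random(seed)
    units = list(range(len(weights)))
    for _ in range(max_tries):
        treated = set(rng.sample(units, n_treated))
        treated_weights = [weights[i] for i in units if i in treated]
        control_weights = [weights[i] for i in units if i not in treated]
        gap = abs(
            sum(treated_weights) / len(treated_weights)
            - sum(control_weights) / len(control_weights)
        )
        if gap <= max_mean_gap:
            return sorted(treated)
    raise RuntimeError("no acceptable allocation found")


# Hypothetical patient weights in kilograms.
patient_weights = [70, 82, 95, 101, 110, 124, 133, 150]
treated_units = restricted_allocation(patient_weights, n_treated=4, max_mean_gap=10.0, seed=1)
```

Every accepted allocation is still chosen at random; the check only excludes draws such as "the four heaviest patients all receive the new treatment".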

Degrees Of Freedom (statistics)

In statistics, the number of degrees of freedom is the number of values in the final calculation of a statistic that are free to vary. Estimates of statistical parameters can be based upon different amounts of information or data. The number of independent pieces of information that go into the estimate of a parameter is called the degrees of freedom. In general, the degrees of freedom of an estimate of a parameter are equal to the number of independent scores that go into the estimate minus the number of parameters used as intermediate steps in the estimation of the parameter itself. For example, if the variance is to be estimated from a random sample of N independent scores, then the degrees of freedom is equal to the number of independent scores (N) minus the number of parameters estimated as intermediate steps (one, namely, the sample mean) and is therefore equal to N − 1. Mathematically, degrees of freedom is the number of dimensions of the domain of a random vector, or e ...
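The variance example above can be sketched in a few lines. This is a minimal illustration, assuming a small made-up list of scores; the function name `sample_variance` is hypothetical, and the result is compared with the standard library's `statistics.variance`, which uses the same N − 1 divisor.

```python
import statistics


def sample_variance(scores):
    """Sample variance with N - 1 degrees of freedom."""
    n = len(scores)
    if n < 2:
        raise ValueError("need at least two scores")
    mean = sum(scores) / n        # one parameter estimated as an intermediate step
    degrees_of_freedom = n - 1    # N scores minus one estimated parameter
    return sum((x - mean) ** 2 for x in scores) / degrees_of_freedom


# Hypothetical scores, used only for illustration.
scores = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
variance = sample_variance(scores)
matches_stdlib = abs(variance - statistics.variance(scores)) < 1e-12
```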

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

Factorial Experiment

In statistics, a factorial experiment (also known as full factorial experiment) investigates how multiple factors influence a specific outcome, called the response variable. Each factor is tested at distinct values, or levels, and the experiment includes every possible combination of these levels across all factors. This comprehensive approach lets researchers see not only how each factor individually affects the response, but also how the factors interact and influence each other. Often, factorial experiments simplify things by using just two levels for each factor. A 2x2 factorial design, for instance, has two factors, each with two levels, leading to four unique combinations to test. The interaction between these factors is often the most crucial finding, even when the individual factors also have an effect. If a full factorial design becomes too complex due to the sheer number of combinations, researchers can use a fractional fact ...
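The "every possible combination" idea can be sketched by enumerating the runs of a 2x2 design. The factor names `temperature` and `pressure` and the helper `full_factorial` are hypothetical, chosen only for this illustration.

```python
from itertools import product


def full_factorial(factors):
    """Return every combination of factor levels as a list of dicts."""
    names = list(factors)
    level_lists = [factors[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*level_lists)]


# Two hypothetical factors with two levels each: a 2x2 design.
design = full_factorial({
    "temperature": ["low", "high"],
    "pressure": ["low", "high"],
})
number_of_runs = len(design)  # 2 * 2 = 4 combinations
```

Adding a third two-level factor to the dictionary would double the run count, which is why full designs grow quickly as factors are added.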

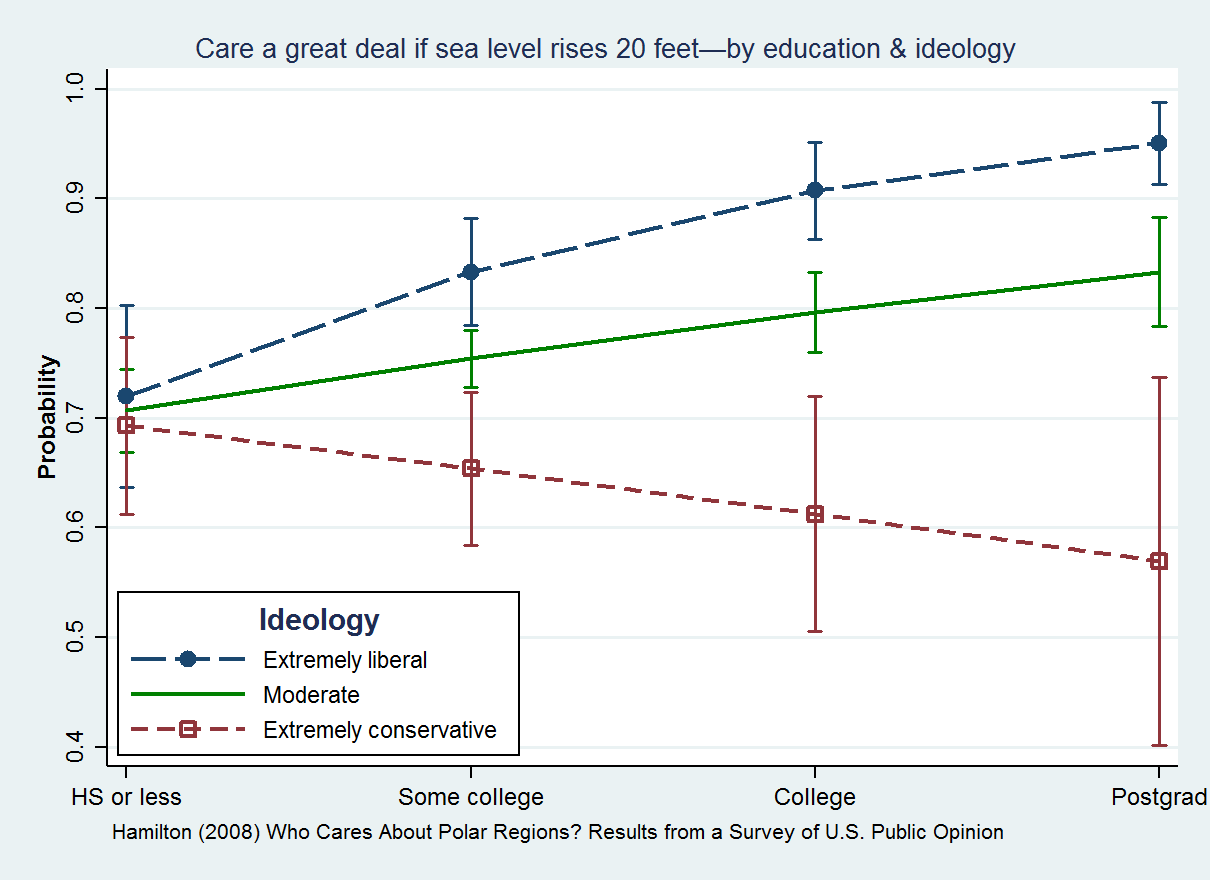

Interaction Effects

In statistics, an interaction may arise when considering the relationship among three or more variables, and describes a situation in which the effect of one causal variable on an outcome depends on the state of a second causal variable (that is, when effects of the two causes are not additive). Although commonly thought of in terms of causal relationships, the concept of an interaction can also describe non-causal associations (then also called moderation or effect modification). Interactions are often considered in the context of regression analyses or factorial experiments. The presence of interactions can have important implications for the interpretation of statistical models. If two variables of interest interact, the relationship between each of the interacting variables and a third "dependent variable" depends on the value of the other interacting variable. In practice, this makes it more difficult to predict the consequences of changing the value of a variab ...
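For two factors with two levels each, "not additive" can be checked with a single contrast: the effect of the first factor at the high level of the second, minus its effect at the low level. The sketch below assumes made-up cell means, and the function name `interaction_contrast` is hypothetical.

```python
def interaction_contrast(cell_means):
    """Difference between the effect of factor A at high B and at low B.

    cell_means maps (level_of_A, level_of_B) to the mean response.
    A value of zero means the two effects are additive.
    """
    effect_of_a_at_low_b = cell_means[("high", "low")] - cell_means[("low", "low")]
    effect_of_a_at_high_b = cell_means[("high", "high")] - cell_means[("low", "high")]
    return effect_of_a_at_high_b - effect_of_a_at_low_b


# Hypothetical cell means: A adds 4 regardless of B, so there is no interaction.
additive = {
    ("low", "low"): 10.0, ("high", "low"): 14.0,
    ("low", "high"): 13.0, ("high", "high"): 17.0,
}
# Same table, except that A has a larger effect when B is high.
interacting = dict(additive)
interacting[("high", "high")] = 25.0

no_interaction = interaction_contrast(additive)
with_interaction = interaction_contrast(interacting)
```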

Mixed Model

A mixed model, mixed-effects model or mixed error-component model is a statistical model containing both fixed effects and random effects. These models are useful in a wide variety of disciplines in the physical, biological and social sciences. They are particularly useful in settings where repeated measurements are made on the same statistical units (see also longitudinal study), or where measurements are made on clusters of related statistical units. Mixed models are often preferred over traditional analysis of variance regression models because they don't rely on the independent observations assumption. Further, they are flexible in dealing with missing values and uneven spacing of repeated measurements. Mixed model analysis allows measurements to be explicitly modeled in a wider variety of correlation and variance-covariance structures, avoiding biased estimations. This page will discuss mainly linear mixed-effects models rathe ...

Control Group

In the design of experiments, hypotheses are applied to experimental units in a treatment group. In comparative experiments, members of a control group receive a standard treatment, a placebo, or no treatment at all. There may be more than one treatment group, more than one control group, or both. A placebo control group can be used to support a double-blind study, in which some subjects are given an ineffective treatment (in medical studies typically a sugar pill) to minimize differences in the experiences of subjects in the different groups; this is done in a way that ensures no participant in the experiment (subject or experimenter) knows to which group each subject belongs. In such cases, a third, non-treatment control group can be used to measure the placebo effect directly, as the difference between the responses of placebo subjects and untreated subjects, perhaps paired by age group or other factors (such as being twins). For the conclusions drawn from the results of an ex ...

Randomized Complete Block Design

In common usage, randomness is the apparent or actual lack of definite pattern or predictability in information. A random sequence of events, symbols or steps often has no order and does not follow an intelligible pattern or combination. Individual random events are, by definition, unpredictable, but if there is a known probability distribution, the frequency of different outcomes over repeated events (or "trials") is predictable. (Strictly speaking, the frequency of an outcome will converge almost surely to a predictable value as the number of trials becomes arbitrarily large. Non-convergence or convergence to a different value is possible, but has probability zero. Consistent non-convergence is thus evidence of the lack of a fixed probability distribution, as in many evolutionary processes.) For example, when throwing two dice, the outcome of any particular roll is unpredictable, but a sum of 7 will tend to occur twice as often as 4. In this view, randomness is not haphazardne ...
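The two-dice claim above can be verified by counting the 36 equally likely outcomes of two fair six-sided dice. This is a small sketch; the variable names are chosen for illustration.

```python
from collections import Counter
from itertools import product

# Count how many of the 36 ordered outcomes produce each sum.
sum_counts = Counter(first + second for first, second in product(range(1, 7), repeat=2))

ways_to_roll_7 = sum_counts[7]  # (1,6), (2,5), (3,4), (4,3), (5,2), (6,1)
ways_to_roll_4 = sum_counts[4]  # (1,3), (2,2), (3,1)
ratio = ways_to_roll_7 / ways_to_roll_4
```

The ratio of the two counts is 6 to 3, which is where "twice as often" comes from.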

Completely Randomized Design

In the design of experiments, completely randomized designs are for studying the effects of one primary factor without the need to take other nuisance variables into account. This article describes completely randomized designs that have one primary factor. The experiment compares the values of a response variable based on the different levels of that primary factor. For completely randomized designs, the levels of the primary factor are randomly assigned to the experimental units.

Randomization: To randomize is to determine the run sequence of the experimental units randomly. For example, if there are 3 levels of the primary factor with each level to be run 2 times, then there are 6! (where ! denotes factorial) possible run sequences (or ways to order the experimental trials). Because of the replication, the number of unique orderings is 90 (since 90 = 6!/(2!*2!*2!)). An example of an unrandomized design would be to always run 2 replications for the first level, then 2 for the sec ...
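The count of 90 unique orderings and the act of randomizing a run sequence can both be sketched briefly. The function names and the level labels `A`, `B`, `C` are hypothetical; the shuffle uses Python's standard `random` module as one possible source of randomness.

```python
import math
import random


def count_unique_run_sequences(replicates_per_level):
    """Multinomial count: (total runs)! divided by the product of (replicates)!."""
    total_runs = sum(replicates_per_level)
    repeated = math.prod(math.factorial(r) for r in replicates_per_level)
    return math.factorial(total_runs) // repeated


def randomized_run_order(levels, replicates, seed=None):
    """Return a randomly shuffled run sequence with each level repeated."""
    rng = random.Random(seed)
    runs = [level for level in levels for _ in range(replicates)]
    rng.shuffle(runs)
    return runs


unique_orderings = count_unique_run_sequences([2, 2, 2])  # 6! / (2! * 2! * 2!)
run_order = randomized_run_order(["A", "B", "C"], replicates=2, seed=42)
```

Passing a seed makes a particular run order reproducible for record keeping; leaving it as `None` draws a fresh order each time.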

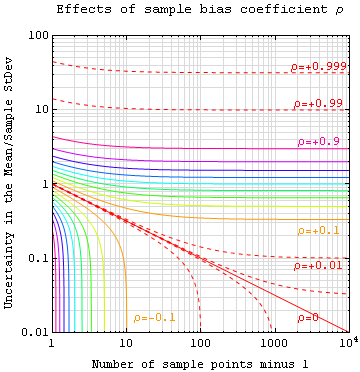

Standard Error

The standard error (SE) of a statistic (usually an estimator of a parameter, like the average or mean) is the standard deviation of its sampling distribution or an estimate of that standard deviation. In other words, it is the standard deviation of the statistic's values, where each value is computed from one sample, that is, one set of observations drawn from the same population. If the statistic is the sample mean, it is called the standard error of the mean (SEM). The standard error is a key ingredient in producing confidence intervals. The sampling distribution of a mean is generated by repeated sampling from the same population and recording the sample mean per sample. This forms a distribution of different means, and this distribution has its own mean and variance. Mathematically, the variance of the sampling mean distribution obtained is equal to the variance of the population divided by the sample size. This is because as the sample size increases, sample means cluster more closely arou ...
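Because the variance of the sample mean equals the population variance divided by the sample size, the standard error of the mean is the standard deviation divided by the square root of the sample size. The sketch below assumes the population standard deviation is unknown and substitutes the sample standard deviation as an estimate; the function name and the data are hypothetical.

```python
import math
import statistics


def standard_error_of_mean(sample):
    """Estimate the SEM as (sample standard deviation) / sqrt(sample size)."""
    if len(sample) < 2:
        raise ValueError("need at least two observations")
    # statistics.stdev uses the N - 1 divisor, an estimate of the population value.
    return statistics.stdev(sample) / math.sqrt(len(sample))


# Hypothetical observations, used only for illustration.
sample = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
sem = standard_error_of_mean(sample)
```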

Factorial Design

In statistics, a factorial experiment (also known as full factorial experiment) investigates how multiple factors influence a specific outcome, called the response variable. Each factor is tested at distinct values, or levels, and the experiment includes every possible combination of these levels across all factors. This comprehensive approach lets researchers see not only how each factor individually affects the response, but also how the factors interact and influence each other. Often, factorial experiments simplify things by using just two levels for each factor. A 2x2 factorial design, for instance, has two factors, each with two levels, leading to four unique combinations to test. The interaction between these factors is often the most crucial finding, even when the individual factors also have an effect. If a full factorial design becomes too complex due to the sheer number of combinations, researchers can use a fractional factorial design. This method strategically omit ...

Mean Squared Error

In statistics, the mean squared error (MSE) or mean squared deviation (MSD) of an estimator (of a procedure for estimating an unobserved quantity) measures the average of the squares of the errors, that is, the average squared difference between the estimated values and the true value. MSE is a risk function, corresponding to the expected value of the squared error loss. The fact that MSE is almost always strictly positive (and not zero) is because of randomness or because the estimator does not account for information that could produce a more accurate estimate. In machine learning, specifically empirical risk minimization, MSE may refer to the empirical risk (the average loss on an observed data set), as an estimate of the true MSE (the true risk: the average loss on the actual population distribution). The MSE is a measure of the quality of an estimator. As it is derived from the square of Euclidean distance, it is always a positive value that decreases as the erro ...
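The "average squared difference between the estimated values and the true value" can be sketched directly. The numbers below are made up, and the function name `mean_squared_error` is hypothetical; in practice the true value is usually unknown, so this form mainly applies to simulations or held-out data.

```python
def mean_squared_error(estimates, true_value):
    """Average of the squared differences between estimates and the true value."""
    if not estimates:
        raise ValueError("need at least one estimate")
    return sum((estimate - true_value) ** 2 for estimate in estimates) / len(estimates)


# Hypothetical repeated estimates of a quantity whose true value is 10.
estimates = [9.0, 11.0, 10.5, 9.5]
mse = mean_squared_error(estimates, true_value=10.0)
```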

Statistically Significant

In statistical hypothesis testing, a result has statistical significance when a result at least as "extreme" would be very infrequent if the null hypothesis were true. More precisely, a study's defined significance level, denoted by α, is the probability of the study rejecting the null hypothesis, given that the null hypothesis is true; and the p-value of a result, p, is the probability of obtaining a result at least as extreme, given that the null hypothesis is true. The result is said to be statistically significant, by the standards of the study, when p ≤ α. The significance level for a study is chosen before data collection, and is typically set to 5% or much lower, depending on the field of study. In any experiment or observation that involves drawing a sample from a population, there is always the possibility that an observed effect would have occurred due to sampling error alone. But if the p-value of an observed effect is less than (or equal to ...
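The decision rule described above reduces to a single comparison once the p-value is known. The sketch below assumes the p-value has already been computed by some test; the function name is hypothetical and the default of 0.05 mirrors the 5% level mentioned in the text.

```python
def is_statistically_significant(p_value, alpha=0.05):
    """Return True when p <= alpha, the significance level fixed before data collection."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must lie between 0 and 1")
    if not 0.0 < alpha < 1.0:
        raise ValueError("alpha must lie strictly between 0 and 1")
    return p_value <= alpha


significant_at_5_percent = is_statistically_significant(0.03)
significant_at_1_percent = is_statistically_significant(0.03, alpha=0.01)
```

The same p-value can be significant under one study's standard and not under another's, which is why the level is chosen in advance.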