Signal-to-interference-plus-noise Ratio

In information theory and telecommunication engineering, the signal-to-interference-plus-noise ratio (SINR), also known as the signal-to-noise-plus-interference ratio (SNIR), is a quantity used to give theoretical upper bounds on channel capacity (or the rate of information transfer) in wireless communication systems such as networks. Analogous to the signal-to-noise ratio (SNR) often used in wired communication systems, the SINR is defined as the power of a certain signal of interest divided by the sum of the interference power (from all the other interfering signals) and the power of some background noise. If the noise power is zero, then the SINR reduces to the signal-to-interference ratio (SIR). Conversely, zero interference reduces the SINR to the SNR, which is used less often when developing mathematical models of wireless networks such as cellular networks. (J. G. Andrews, R. K. Ganti, M. Haenggi, N. Jindal, and S. Weber. A primer on spat ...)
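The definition above can be sketched in a few lines of Python. The power values are hypothetical, and the rate bound assumes that interference is treated as additional Gaussian noise, which is a common modelling choice rather than something stated in the excerpt.

```python
import math


def sinr(signal_power, interference_powers, noise_power):
    """Linear SINR: signal power over (total interference + noise).

    All powers must be in the same linear unit (for example watts).
    """
    denominator = sum(interference_powers) + noise_power
    if denominator <= 0:
        raise ValueError("interference plus noise must be positive")
    return signal_power / denominator


def rate_bound_bits_per_hz(sinr_linear):
    # Assumption: interference behaves like extra Gaussian noise, so the
    # Shannon-style bound log2(1 + SINR) applies per unit bandwidth.
    return math.log2(1.0 + sinr_linear)


# Hypothetical received powers in watts.
p_signal = 1e-9
p_interferers = [2e-10, 1e-10]
p_noise = 1e-10

sinr_value = sinr(p_signal, p_interferers, p_noise)  # about 2.5
snr_value = sinr(p_signal, [], p_noise)              # zero interference: SNR
sir_value = sinr(p_signal, p_interferers, 0.0)       # zero noise: SIR

print(sinr_value, snr_value, sir_value)
print(rate_bound_bits_per_hz(sinr_value))
```

Passing an empty interference list or a zero noise power reproduces the two special cases named in the text, the SNR and the SIR.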

Information Theory

Information theory is the mathematical study of the quantification, storage, and communication of information. The field was established and formalized by Claude Shannon in the 1940s, though early contributions were made in the 1920s through the works of Harry Nyquist and Ralph Hartley. It is at the intersection of electronic engineering, mathematics, statistics, computer science, neurobiology, physics, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (which has two equally likely outcomes) provides less information (lower entropy, less uncertainty) than identifying the outcome from a roll of a die (which has six equally likely outcomes). Some other important measu ...
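The coin-versus-die comparison can be checked numerically. This is a minimal sketch using the Shannon entropy in bits; the function name `entropy_bits` is chosen here for illustration.

```python
import math


def entropy_bits(probabilities):
    """Shannon entropy H = -sum(p * log2(p)) in bits, skipping zero terms."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


fair_coin = [0.5, 0.5]
fair_die = [1 / 6] * 6

h_coin = entropy_bits(fair_coin)  # 1 bit
h_die = entropy_bits(fair_die)    # log2(6) bits, a little over 2.58

print(h_coin, h_die)
```

The die has the larger entropy because its outcome is spread over six equally likely values instead of two.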

Multipath Fading

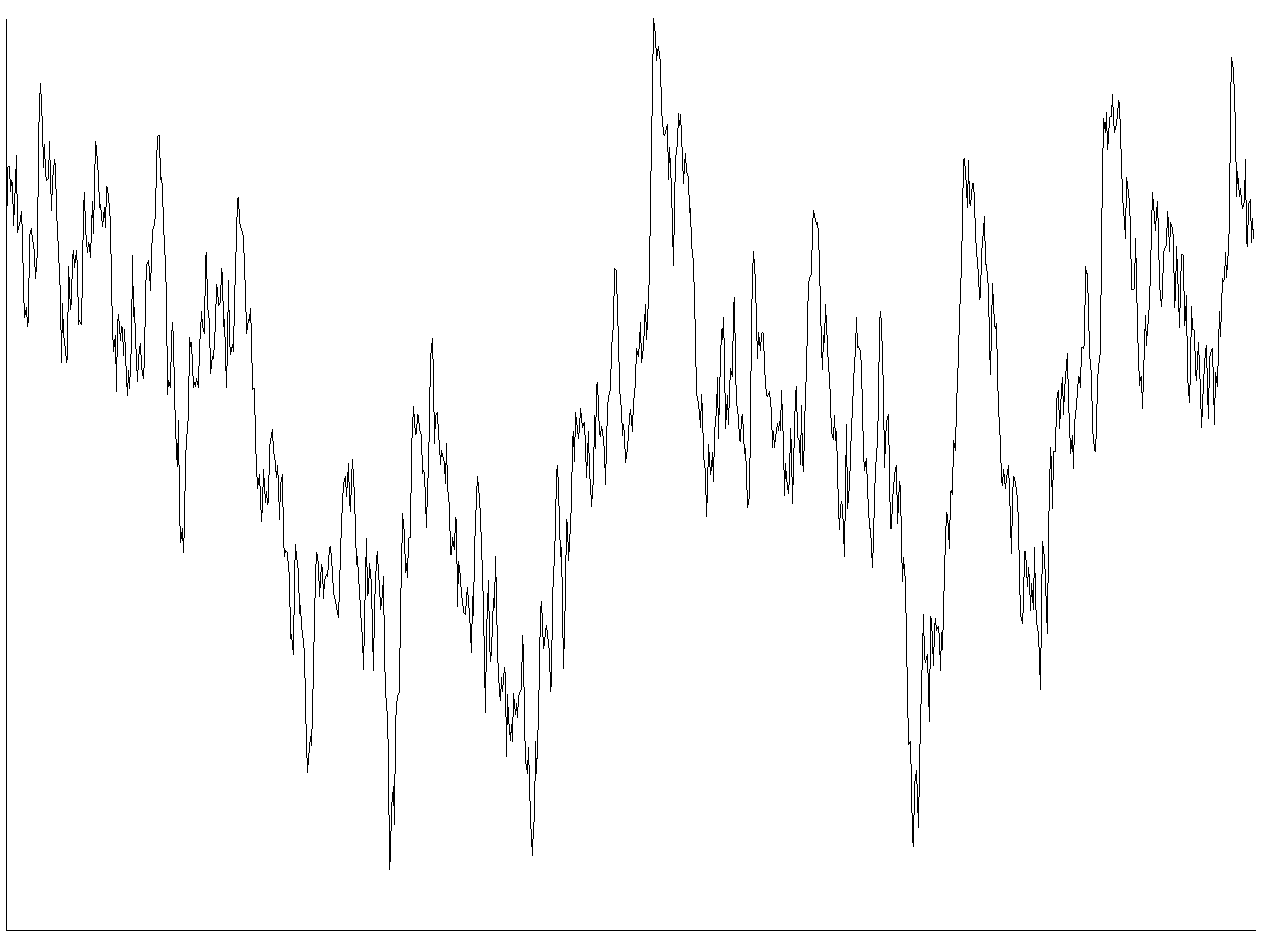

In radio communication, multipath is the propagation phenomenon that results in radio signals reaching the receiving antenna by two or more paths. Causes of multipath include atmospheric ducting, ionospheric reflection and refraction, and reflection from water bodies and terrestrial objects such as mountains and buildings. When the same signal is received over more than one path, it can create interference and phase shifting of the signal. Destructive interference causes fading; this may cause a radio signal to become too weak in certain areas to be received adequately. For this reason, this effect is also known as multipath interference or multipath distortion. Where the magnitudes of the signals arriving by the various paths have a distribution known as the Rayleigh distribution, this is known as Rayleigh fading. Where one component (often, but not necessarily, a line of sight component) dominates, a Rician distribution provides a more accurate model, and this is know ...
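Destructive and constructive interference can be illustrated with a two-path toy model. This sketch assumes a single-frequency carrier, two paths with fixed amplitudes, and no phase inversion at the reflector; all numbers are hypothetical.

```python
import cmath
import math


def two_path_amplitude(a_direct, a_reflected, path_difference_m, wavelength_m):
    """Amplitude of the sum of a direct and a delayed copy of one carrier.

    The extra path length turns into a phase shift of
    2*pi*path_difference/wavelength on the reflected copy.
    """
    phase = 2.0 * math.pi * path_difference_m / wavelength_m
    return abs(a_direct + a_reflected * cmath.exp(-1j * phase))


wavelength = 1.0  # metres, hypothetical carrier

# Half a wavelength of extra path: the copies nearly cancel (a fade).
faded = two_path_amplitude(1.0, 0.9, 0.5 * wavelength, wavelength)

# A full wavelength of extra path: the copies add in phase.
boosted = two_path_amplitude(1.0, 0.9, 1.0 * wavelength, wavelength)

print(faded, boosted)
```

Moving the receiver by a fraction of a wavelength changes the path difference, which is why the received level can vary sharply over short distances.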

Telecommunications

Telecommunication, often used in its plural form or abbreviated as telecom, is the transmission of information over a distance using electronic means, typically through cables, radio waves, or other communication technologies. These means of transmission may be divided into communication channels for multiplexing, allowing for a single medium to transmit several concurrent communication sessions. Long-distance technologies invented during the 20th and 21st centuries generally use electric power, and include the telegraph, telephone, television, and radio. Early telecommunication networks used metal wires as the medium for transmitting signals. These networks were used for telegraphy and telephony for many decades. In the first decade of the 20th century, a revolution in wireless communication began with breakthroughs including those made in radio communications by Guglielmo Marconi, who won the 1909 Nobel Prize in Physics. Othe ...

Noise (electronics)

In electronics, noise is an unwanted disturbance in an electrical signal. Noise generated by electronic devices varies greatly as it is produced by several different effects. In particular, noise is inherent in physics and central to thermodynamics. Any conductor with electrical resistance will generate thermal noise inherently. The final elimination of thermal noise in electronics can only be achieved cryogenically, and even then quantum noise would remain inherent. Electronic noise is a common component of noise in signal processing. In communication systems, noise is an error or undesired random disturbance of a useful information signal in a communication channel. The noise is a summation of unwanted or disturbing energy from natural and sometimes man-made sources. Noise is, however, typically distinguished from interference, for example in the signal-to-noise ratio (SNR), signal-to-interference ratio (SIR) and signal-to-noise plus interference ratio (SNIR) measu ...

Continuum Percolation Theory

In mathematics and probability theory, continuum percolation theory is a branch of mathematics that extends discrete percolation theory to continuous space (often Euclidean space). More specifically, the underlying points of discrete percolation form types of lattices whereas the underlying points of continuum percolation are often randomly positioned in some continuous space and form a type of point process. For each point, a random shape is frequently placed on it and the shapes overlap with each other to form clumps or components. As in discrete percolation, a common research focus of continuum percolation is studying the conditions of occurrence for infinite or giant components. Other shared concepts and analysis techniques exist in these two types of percolation theory as well as the study of random graphs and random geometric graphs. Continuum percolation arose from an early mathematical model for wireless networks, which, with the rise of several wirel ...

Signal-to-noise Ratio

Signal-to-noise ratio (SNR or S/N) is a measure used in science and engineering that compares the level of a desired signal to the level of background noise. SNR is defined as the ratio of signal power to noise power, often expressed in decibels. A ratio higher than 1:1 (greater than 0 dB) indicates more signal than noise. SNR is an important parameter that affects the performance and quality of systems that process or transmit signals, such as communication systems, audio systems, radar systems, imaging systems, and data acquisition systems. A high SNR means that the signal is clear and easy to detect or interpret, while a low SNR means that the signal is corrupted or obscured by noise and may be difficult to distinguish or recover. SNR can be improved by various methods, such as increasing the signal strength, reducing the noise level, filtering out unwanted noise, or using error correction techniques. SNR also determines the maximum possible amount of data that ...
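The link between a 1:1 power ratio and 0 dB follows from the decibel definition for power ratios, 10·log10(P_signal / P_noise). A minimal sketch, with a helper name chosen for illustration:

```python
import math


def snr_db(signal_power, noise_power):
    """SNR in decibels from two powers given in the same linear unit."""
    if signal_power <= 0 or noise_power <= 0:
        raise ValueError("powers must be positive")
    return 10.0 * math.log10(signal_power / noise_power)


equal_powers = snr_db(1.0, 1.0)     # 1:1 ratio gives 0 dB
strong_signal = snr_db(100.0, 1.0)  # 100:1 ratio gives 20 dB
weak_signal = snr_db(0.5, 1.0)      # below 1:1 gives a negative value

print(equal_powers, strong_signal, weak_signal)
```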

Random Variables

A random variable (also called random quantity, aleatory variable, or stochastic variable) is a mathematical formalization of a quantity or object which depends on random events. The term 'random variable' in its mathematical definition refers to neither randomness nor variability but instead is a mathematical function in which

* the domain is the set of possible outcomes in a sample space (e.g. the set \{H, T\} of possible upper sides of a tossed coin, heads H or tails T); and
* the range is a measurable space (e.g. corresponding to the domain above, the range might be the set \{-1, 1\} if, say, heads H is mapped to -1 and tails T is mapped to 1).

Typically, the range of a random variable is a subset of the real numbers. Informally, randomness typically represents some fundamental element of chance, such as in the roll of a die; it may also represent uncertainty, such as measurement error. However, the interpretation of probability is philosophic ...
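The coin example can be written out directly to show that a random variable is just a function on the sample space. This is a sketch; the names `SAMPLE_SPACE` and `coin_value` are illustrative, and the fair-coin probabilities are an added assumption.

```python
import random

SAMPLE_SPACE = ("H", "T")  # domain: possible outcomes of one coin toss


def coin_value(outcome):
    """The random variable: a plain function from outcomes to numbers."""
    return {"H": -1, "T": 1}[outcome]


# The range of the function is the set {-1, 1}.
value_range = {coin_value(outcome) for outcome in SAMPLE_SPACE}

# Assumption: a fair coin, so each outcome has probability 1/2.
expected_value = sum(0.5 * coin_value(outcome) for outcome in SAMPLE_SPACE)

# Randomness enters only through which outcome occurs, not through the function.
rng = random.Random(0)
observed = coin_value(rng.choice(SAMPLE_SPACE))

print(value_range, expected_value, observed)
```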

Nakagami Distribution

The Nakagami distribution or the Nakagami-''m'' distribution is a probability distribution related to the gamma distribution. The family of Nakagami distributions has two parameters: a shape parameter m \geq 1/2 and a scale parameter \Omega > 0. It is used to model physical phenomena such as those found in medical ultrasound imaging, communications engineering, meteorology, hydrology, multimedia, and seismology.

Characterization. Its probability density function (pdf) is

: f(x;\,m,\Omega) = \frac{2m^m}{\Gamma(m)\,\Omega^m} x^{2m-1} \exp\left(-\frac{m}{\Omega}x^2\right) \text{ for } x \geq 0,

where m \geq 1/2 and \Omega > 0. Its cumulative distribution function (CDF) is

: F(x;\,m,\Omega) = \frac{\gamma\left(m, \frac{m}{\Omega}x^2\right)}{\Gamma(m)} = P\left(m, \frac{m}{\Omega}x^2\right),

where ''P'' is the regularized (lower) incomplete gamma function.

Parameterization. The parameters m and \Omega are

: m = \frac{\left(\operatorname{E}[X^2]\right)^2}{\operatorname{Var}[X^2]}

and

: \Omega = \operatorname{E}[X^2].

No closed form solution exists for the median of this distribution, although special cases do exist, such as \sqrt{\Omega \ln 2} when ''m'' ...
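As a sanity check on the density and on the role of \Omega as the second moment, the pdf can be integrated numerically. This is a sketch using a plain trapezoidal rule; the parameter values m = 2 and \Omega = 3 and the upper integration limit are arbitrary choices for illustration.

```python
import math


def nakagami_pdf(x, m, omega):
    """Nakagami-m density with shape m >= 1/2 and scale omega > 0."""
    if x < 0:
        return 0.0
    coefficient = 2.0 * m**m / (math.gamma(m) * omega**m)
    return coefficient * x ** (2.0 * m - 1.0) * math.exp(-(m / omega) * x * x)


def trapezoid(f, a, b, steps=20000):
    """Composite trapezoidal rule for the integral of f over [a, b]."""
    h = (b - a) / steps
    total = 0.5 * (f(a) + f(b))
    for i in range(1, steps):
        total += f(a + i * h)
    return total * h


m, omega = 2.0, 3.0  # hypothetical parameters
upper = 10.0         # the density is negligible beyond this point here

area = trapezoid(lambda x: nakagami_pdf(x, m, omega), 0.0, upper)
second_moment = trapezoid(lambda x: x * x * nakagami_pdf(x, m, omega), 0.0, upper)

print(area)           # close to 1
print(second_moment)  # close to omega
```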

Log-normal Distribution

In probability theory, a log-normal (or lognormal) distribution is a continuous probability distribution of a random variable whose logarithm is normally distributed. Thus, if the random variable X is log-normally distributed, then Y = \ln(X) has a normal distribution. Equivalently, if Y has a normal distribution, then the exponential function of Y, X = \exp(Y), has a log-normal distribution. A random variable which is log-normally distributed takes only positive real values. It is a convenient and useful model for measurements in exact and engineering sciences, as well as medicine, economics and other topics (e.g., energies, concentrations, lengths, prices of financial instruments, and other metrics). The distribution is occasionally referred to as the Galton distribution or Galton's distribution, after Francis Galton. The log-normal distribution has also been associated with other names, such as McAlister, Gibrat and Cobb–Douglas. A l ...
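The relationship X = \exp(Y) can be demonstrated by exponentiating normal samples. This is a sketch with hypothetical parameters and a fixed seed so that the run is repeatable.

```python
import math
import random

rng = random.Random(42)
mu, sigma = 0.0, 0.5  # hypothetical parameters of the underlying normal Y

# Draw Y ~ Normal(mu, sigma) and exponentiate to get log-normal X.
normal_samples = [rng.gauss(mu, sigma) for _ in range(20000)]
lognormal_samples = [math.exp(y) for y in normal_samples]

# X is always positive, and log(X) recovers the normal samples.
all_positive = all(x > 0 for x in lognormal_samples)
mean_of_logs = sum(math.log(x) for x in lognormal_samples) / len(lognormal_samples)

print(all_positive)
print(mean_of_logs)  # close to mu
```

The standard library also offers `random.lognormvariate(mu, sigma)`, which draws from the same distribution in one call.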

Rician Distribution

In probability theory, the Rice distribution or Rician distribution (or, less commonly, Ricean distribution) is the probability distribution of the magnitude of a circularly-symmetric bivariate normal random variable, possibly with non-zero mean (noncentral). It was named after Stephen O. Rice (1907–1986).

Characterization. The probability density function is

: f(x\mid\nu,\sigma) = \frac{x}{\sigma^2}\exp\left(\frac{-(x^2+\nu^2)}{2\sigma^2}\right)I_0\left(\frac{x\nu}{\sigma^2}\right),

where I_0(z) is the modified Bessel function of the first kind with order zero. In the context of Rician fading, the distribution is often also rewritten using the ''shape parameter'' K = \frac{\nu^2}{2\sigma^2}, defined as the ratio of the power contributions by line-of-sight path to the remaining multipaths, and the ''scale parameter'' \Omega = \nu^2+2\sigma^2, defined as the total power received in all paths. The characteristic function of the Rice distribution is given as: ...
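The two parameterizations can be converted into each other, and \Omega can be checked against the mean power of simulated samples. This is a sketch; the function names and the values \nu = 2, \sigma = 1 are illustrative, and the inverse formulas follow by solving the two definitions for \nu and \sigma.

```python
import math
import random


def to_k_omega(nu, sigma):
    """(nu, sigma) -> (K, Omega) using K = nu^2/(2 sigma^2), Omega = nu^2 + 2 sigma^2."""
    return nu**2 / (2.0 * sigma**2), nu**2 + 2.0 * sigma**2


def from_k_omega(k, omega):
    """Inverse mapping: nu^2 = K*Omega/(1+K), sigma^2 = Omega/(2*(1+K))."""
    nu = math.sqrt(k * omega / (1.0 + k))
    sigma = math.sqrt(omega / (2.0 * (1.0 + k)))
    return nu, sigma


def sample_rician(nu, sigma, rng):
    # Magnitude of a bivariate normal with mean (nu, 0); by circular
    # symmetry the direction of the mean does not affect the magnitude.
    return math.hypot(rng.gauss(nu, sigma), rng.gauss(0.0, sigma))


k, omega = to_k_omega(2.0, 1.0)       # K = 2, Omega = 6
nu_back, sigma_back = from_k_omega(k, omega)

rng = random.Random(0)
samples = [sample_rician(2.0, 1.0, rng) for _ in range(20000)]
mean_power = sum(r * r for r in samples) / len(samples)

print(k, omega, nu_back, sigma_back)
print(mean_power)  # close to Omega
```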

Rayleigh Distribution

In probability theory and statistics, the Rayleigh distribution is a continuous probability distribution for nonnegative-valued random variables. Up to rescaling, it coincides with the chi distribution with two degrees of freedom. The distribution is named after Lord Rayleigh. A Rayleigh distribution is often observed when the overall magnitude of a vector in the plane is related to its directional components. One example where the Rayleigh distribution naturally arises is when wind velocity is analyzed in two dimensions. Assuming that each component is uncorrelated, normally distributed with equal variance, and zero mean, which is infrequent, then the overall wind speed (vector magnitude) will be characterized by a Rayleigh distribution. A second example of the distribution arises in the case of random complex numbers whose real and imaginary components are independently and identically distributed Gaussian with equal variance and zero mean. In that case, the absolute v ...
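The complex-number example can be simulated directly. This sketch draws the real and imaginary parts as independent zero-mean Gaussians with a common standard deviation \sigma and compares the sample mean of the magnitudes with the known Rayleigh mean \sigma\sqrt{\pi/2}; the seed and sample count are arbitrary.

```python
import math
import random

rng = random.Random(1)
sigma = 1.0      # common standard deviation of both components
count = 20000

# Magnitudes of complex numbers with i.i.d. zero-mean Gaussian parts.
magnitudes = [
    abs(complex(rng.gauss(0.0, sigma), rng.gauss(0.0, sigma)))
    for _ in range(count)
]

empirical_mean = sum(magnitudes) / count
theoretical_mean = sigma * math.sqrt(math.pi / 2.0)  # Rayleigh mean

print(empirical_mean, theoretical_mean)
```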