
Semantic Translation

Semantic translation is the process of using semantic information to aid in the translation of data in one representation or data model to another representation or data model. Semantic translation takes advantage of semantics that associate meaning with individual data elements in one dictionary to create an equivalent meaning in a second system. An example of semantic translation is the conversion of XML data from one data model to a second data model using formal ontologies for each system such as the Web Ontology Language (OWL). This is frequently required by intelligent agents that wish to perform searches on remote computer systems that use different data models to store their data elements. The process of allowing a single user to search multiple systems with a single search request is also known as federated search. Semantic translation should be differentiated from data mapping tools that do simple one-to-one translation of data from one system to another without actu ...

Semantic

Semantics is the study of linguistic meaning. It examines what meaning is, how words get their meaning, and how the meaning of a complex expression depends on its parts. Part of this process involves the distinction between sense and reference. Sense is given by the ideas and concepts associated with an expression, while reference is the object to which an expression points. Semantics contrasts with syntax, which studies the rules that dictate how to create grammatically correct sentences, and pragmatics, which investigates how people use language in communication. Lexical semantics is the branch of semantics that studies word meaning. It examines whether words have one or several meanings and in what lexical relations they stand to one another. Phrasal semantics studies the meaning of sentences by exploring the phenomenon of compositionality, or how new meanings can be created by arranging words. Formal semantics relies o ...

National Information Exchange Model

NIEMOpen, frequently referred to as NIEM, originated as an XML-based information exchange framework from the United States, but has transitioned to an OASIS Open Project. This initiative formalizes NIEM's designation as an official standard in national and international policy and procurement. NIEMOpen's Project Governing Board recently approved the first standard under this new project: the Conformance Targets Attribute Specification (CTAS) Version 3.0. A full collection of NIEMOpen standards is anticipated by the end of 2024. NIEM offers a common vocabulary that enables effective information exchanges across diverse public and private organizations. NIEM is currently developing the NIEM Metamodel and Common Model Format, which can be expressed in any data serialization that NIEM supports, including, but not limited to, JSON. Formed from an interagency partnership, NIEM has come to represent a collaborative partnership of agencies and organizations across all levels of governme ...

Data Management

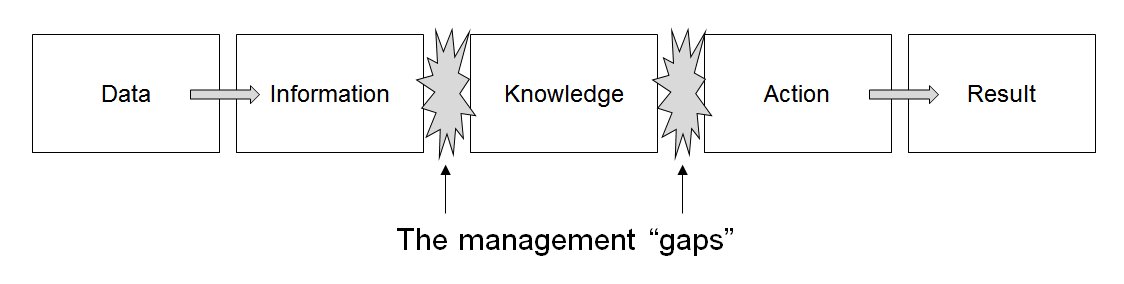

Data management comprises all disciplines related to handling data as a valuable resource. It is the practice of managing an organization's data so it can be analyzed for decision making.

Concept: The concept of data management emerged alongside the evolution of computing technology. In the 1950s, as computers became more prevalent, organizations began to grapple with the challenge of organizing and storing data efficiently. Early methods relied on punch cards and manual sorting, which were labor-intensive and prone to errors. The introduction of database management systems in the 1970s marked a significant milestone, enabling structured storage and retrieval of data. By the 1980s, relational database models revolutionized data management, emphasizing the importance of data as an asset and fostering a data-centric mindset in business. This era also saw the rise of data governance practices, which prioritized the organization and regulation of data to ensure quality and complian ...

Vocabulary-based Transformation

In metadata, a vocabulary-based transformation (VBT) is a transformation aided by the use of semantic equivalence statements within a controlled vocabulary. Many organizations today require communication between two or more computers. Although many standards exist to exchange data between computers, such as HTML or email, there is still much structured information that needs to be exchanged between computers that is not standardized. Mapping one source of data into another is often slow and labor-intensive. VBT is a possible way to avoid much of the time and cost of manual data mapping using traditional extract, transform, load technologies.

History: The term *vocabulary-based transformation* was first defined by Roy Schulte of the Gartner Group around May 2003 and appeared in the annual "hype cycle" for integration.

Application: VBT allows computer systems integrators to more automatically "look up" the definitions of data elements in a centrali ...

Semantic Web

The Semantic Web, sometimes known as Web 3.0, is an extension of the World Wide Web through standards set by the World Wide Web Consortium (W3C). The goal of the Semantic Web is to make Internet data machine-readable. To enable the encoding of semantics with the data, technologies such as Resource Description Framework (RDF) and Web Ontology Language (OWL) are used. These technologies are used to formally represent metadata. For example, an ontology can describe concepts, relationships between entities, and categories of things. These embedded semantics offer significant advantages such as reasoning over data and operating with heterogeneous data sources. These standards promote common data formats and exchange protocols on the Web, most fundamentally RDF. According to the W3C, "The Semantic Web provides a common framework that allows data to be shared and reused across application, enterprise, and commu ...

Semantic Mapper

A semantic mapper is a tool or service that aids in the transformation of data elements from one namespace into another namespace. A semantic mapper is an essential component of a semantic broker and one tool that is enabled by the Semantic Web technologies. Essentially, the problems arising in semantic mapping are the same as in data mapping for data integration purposes, with the difference that here the semantic relationships are made explicit through the use of semantic nets or ontologies, which play the role of data dictionaries in data mapping.

Structure: A semantic mapper must have access to three data sets:

1. List of data elements in the source namespace
2. List of data elements in the destination namespace
3. List of semantic equivalence statements between source and destination (e.g. owl:equivalentClass, owl:equivalentProperty or owl:sameAs in OWL)

A semantic mapper operates on a list of data elements in the source namespace. The semantic mapper will successively translate the data e ...
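The three data sets a semantic mapper needs can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions: the `src:` and `dst:` element names, the `Equivalence` class and both helper functions are hypothetical, and a real mapper would normally read its equivalence statements from an ontology rather than from a hard-coded list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Equivalence:
    """One semantic equivalence statement (hypothetical structure)."""
    source: str
    target: str
    relation: str = "owl:equivalentProperty"


def build_index(equivalences, source_elements, target_elements):
    """Index statements by source element, keeping only statements whose
    two ends exist in the declared source and destination namespaces."""
    index = {}
    for eq in equivalences:
        if eq.source in source_elements and eq.target in target_elements:
            index[eq.source] = eq.target
    return index


def map_record(record, index):
    """Translate each data element in turn. Elements without an
    equivalence statement are reported, not guessed."""
    mapped, unmapped = {}, []
    for name, value in record.items():
        target = index.get(name)
        if target is None:
            unmapped.append(name)
        else:
            mapped[target] = value
    return mapped, unmapped


# Data set 1: data elements in the source namespace (made-up names).
SOURCE = {"src:PersonGivenName", "src:PersonSurName", "src:ShoeSize"}
# Data set 2: data elements in the destination namespace (made-up names).
TARGET = {"dst:firstName", "dst:lastName"}
# Data set 3: semantic equivalence statements between the two.
EQUIVALENCES = [
    Equivalence("src:PersonGivenName", "dst:firstName"),
    Equivalence("src:PersonSurName", "dst:lastName"),
]

index = build_index(EQUIVALENCES, SOURCE, TARGET)
mapped, unmapped = map_record(
    {"src:PersonGivenName": "Ada", "src:PersonSurName": "Lovelace", "src:ShoeSize": 6},
    index,
)
print(mapped)    # elements that had an equivalence statement
print(unmapped)  # elements left for manual review
```

Returning the unmapped elements separately is a design choice in this sketch: it keeps the mapper from silently dropping data for which no equivalence has been asserted.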

ISO/IEC 11179

The ISO/IEC 11179 metadata registry (MDR) standard is an international ISO/IEC standard for representing metadata for an organization in a metadata registry. It documents the standardization and registration of metadata to make data understandable and shareable.

Structure of an ISO/IEC 11179 metadata registry: The ISO/IEC 11179 model is a result of two principles of semantic theory, combined with basic principles of data modelling. The first principle from semantic theory is the thesaurus-type relation between wider and narrower (or more specific) concepts, e.g. the wide concept "income" has a relation to the narrower concept "net income". The second principle from semantic theory is the relation between a concept and its representation, e.g. "buy" and "purchase" are the same concept although different terms are used. A basic principle of data modelling is the combination of an object c ...

Intelligent Agents

In artificial intelligence, an intelligent agent is an entity that perceives its environment, takes actions autonomously to achieve goals, and may improve its performance through machine learning or by acquiring knowledge. Leading AI textbooks define artificial intelligence as the "study and design of intelligent agents," emphasizing that goal-directed behavior is central to intelligence. A specialized subset of intelligent agents, agentic AI (also known as an AI agent or simply agent), expands this concept by proactively pursuing goals, making decisions, and taking actions over extended periods, thereby exemplifying a novel form of digital agency. Intelligent agents can range from simple to highly complex. A basic thermostat or control system is considered an intelligent agent, as is a human being, or any other system that meets the same criteria—such as a firm, a state, or a biome. Intelligent agents operate based on an objective function, which encapsulates their goals ...

Federated Search

Federated search retrieves information from a variety of sources via a search application built on top of one or more search engines. A user makes a single query request, which is distributed to the search engines, databases or other query engines participating in the federation. The federated search then aggregates the results that are received from the search engines for presentation to the user. Federated search can be used to integrate disparate information resources within a single large organization ("enterprise") or for the entire web. Federated search, unlike distributed search, requires centralized coordination of the searchable resources. This involves both coordination of the queries transmitted to the individual search engines and fusion of the search results returned by each of them.

Purpose: Federated search came about to meet the need of searching multiple disparate content sources with one query. This allows a user to search multiple databases at once in real time, ...
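The distribute-then-fuse pattern described above can be sketched in a few lines of Python. This is an illustrative sketch, not a reference implementation: the two engine functions and their document identifiers are hypothetical stand-ins for remote search back ends, and reciprocal rank fusion is used here as one common fusion method among several, since the text does not name one.

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


def library_catalog(query):
    """Hypothetical engine: returns document ids, best match first."""
    return ["doc-a", "doc-b", "doc-c"]


def intranet_wiki(query):
    """Hypothetical engine: returns document ids, best match first."""
    return ["doc-b", "doc-d"]


def federated_search(query, engines, k=60):
    # Distribute the single query to every engine in the federation.
    with ThreadPoolExecutor(max_workers=len(engines)) as pool:
        result_lists = list(pool.map(lambda engine: engine(query), engines))

    # Fuse the ranked lists with reciprocal rank fusion: each engine
    # contributes 1 / (k + rank) for every document it returned, so a
    # document found by several engines accumulates a higher score.
    scores = defaultdict(float)
    for results in result_lists:
        for rank, doc_id in enumerate(results, start=1):
            scores[doc_id] += 1.0 / (k + rank)

    # Highest fused score first; ties are broken by document id.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


merged = federated_search("semantic translation", [library_catalog, intranet_wiki])
print(merged)
```

Rank-based fusion is chosen in this sketch because raw relevance scores from different engines are generally not comparable, while rank positions are.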

Data Mapping

In computing and data management, data mapping is the process of creating data element mappings between two distinct data models. Data mapping is used as a first step for a wide variety of data integration tasks, including:

* Data transformation or data mediation between a data source and a destination
* Identification of data relationships as part of data lineage analysis
* Discovery of hidden sensitive data, such as the last four digits of a social security number hidden in another user id, as part of a data masking or de-identification project
* Consolidation of multiple databases into a single database and identifying redundant columns of data for consolidation or elimination

For example, a company that would like to transmit and receive purchases and invoices with other companies might use data mapping to create data maps from a company's data to standardized ANSI ASC X12 messages for items such as purchase orders and invoices.

Standards: X12 standards are generic E ...

Instance (Computer Science)

In computer science, an instance is an occurrence of a software element that is based on a type definition. When created, an occurrence is said to have been *instantiated*, and both the creation process and the result of creation are called *instantiation*.

Examples:

* Class instance: An object-oriented programming (OOP) object created from a class. Each instance of a class shares a data layout but has its own memory allocation.
* Computer instance: An occurrence of a virtual machine, which typically includes storage and a virtual CPU.
* Polygonal model: In computer graphics, it can be instantiated in order to be drawn several times in different locations in a scene, which can improve the performance of rendering since a portion of the work needed to display each instance is reused.
* Program instance: In a POSIX-oriented operating system, it refers to an executing process ...
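The class-instance case can be shown with a short Python sketch. The `Point` class is a hypothetical example, and Python's attribute dictionaries only approximate the notion of a shared "data layout", so the snippet is a loose illustration of the idea rather than a statement about memory layout in any particular language.

```python
class Point:
    """Type definition: every instance gets the same two fields."""

    def __init__(self, x, y):
        self.x = x
        self.y = y


# Instantiation: two occurrences created from the same class.
a = Point(1, 2)
b = Point(1, 2)

# Same layout: both instances carry the same set of attribute names.
same_layout = vars(a).keys() == vars(b).keys()

# Separate storage: changing one instance leaves the other untouched.
b.x = 10

print(isinstance(a, Point), isinstance(b, Point))
print(same_layout, a is b)
print(a.x, b.x)
```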