
Relative Likelihood

In statistics, when selecting a statistical model for given data, the relative likelihood compares the relative plausibilities of different candidate models or of different values of a parameter of a single model. Relative likelihood of parameter values: Assume that we are given some data x for which we have a statistical model with parameter \theta. Suppose that the maximum likelihood estimate for \theta is \hat{\theta}. Relative plausibilities of other \theta values may be found by comparing the likelihoods of those other values with the likelihood of \hat{\theta}. The relative likelihood of \theta is defined to be \frac{\mathcal{L}(\theta \mid x)}{\mathcal{L}(\hat{\theta} \mid x)}, where \mathcal{L}(\theta \mid x) denotes the likelihood function. Thus, the relative likelihood is the likelihood ratio with fixed denominator \mathcal{L}(\hat{\theta} \mid x). The function \theta \mapsto \frac{\mathcal{L}(\theta \mid x)}{\mathcal{L}(\hat{\theta} \mid x)} is the relative likelihood function. Likelihood region: A likelihood region is the set of all values of \theta whose relative likelihood is greater than or equal to a given threshold. In terms of perc ...
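The definition above can be sketched for a concrete case. The binomial model, the data (7 successes in 20 trials), the threshold of 0.15, and all function names below are assumptions chosen for illustration; they do not come from the summary itself.

```python
import math

def log_likelihood(theta: float, successes: int, trials: int) -> float:
    """Binomial log-likelihood up to a constant (the binomial coefficient cancels in ratios)."""
    return successes * math.log(theta) + (trials - successes) * math.log(1.0 - theta)

def relative_likelihood(theta: float, successes: int, trials: int) -> float:
    """L(theta | x) / L(theta_hat | x), where theta_hat = successes / trials is the MLE."""
    theta_hat = successes / trials
    return math.exp(
        log_likelihood(theta, successes, trials)
        - log_likelihood(theta_hat, successes, trials)
    )

def likelihood_region(successes: int, trials: int, threshold: float, grid_size: int = 999):
    """Approximate the likelihood region on a grid over (0, 1); returns its end points."""
    grid = [(i + 1) / (grid_size + 1) for i in range(grid_size)]
    inside = [t for t in grid if relative_likelihood(t, successes, trials) >= threshold]
    return min(inside), max(inside)

# Hypothetical data: 7 successes in 20 trials, so theta_hat = 0.35.
lower, upper = likelihood_region(7, 20, threshold=0.15)
print(f"relative likelihood at 0.5: {relative_likelihood(0.5, 7, 20):.3f}")
print(f"likelihood region at threshold 0.15: [{lower:.3f}, {upper:.3f}]")
```

Working with log-likelihoods and exponentiating only the difference avoids underflow when the likelihoods themselves are very small.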

Statistics

Statistics (from German: Statistik, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...

Statistical Model Validation

In statistics, model validation is the task of evaluating whether a chosen statistical model is appropriate or not. Oftentimes in statistical inference, inferences from models that appear to fit their data may be flukes, resulting in a misunderstanding by researchers of the actual relevance of their model. To combat this, model validation is used to test whether a statistical model can hold up to permutations in the data. Model validation is also called model criticism or model evaluation. This topic is not to be confused with the closely related task of model selection, the process of discriminating between multiple candidate models: model validation does not concern so much the conceptual design of models as it tests only the consistency between a chosen model and its stated outputs. There are many ways to validate a model. Residual plots plot the difference between the actual data and the model's predictions: correlations in the residual plots may indicate a flaw in the model. ...
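The residual check mentioned above can be illustrated numerically. In this minimal sketch a straight line is fitted to deliberately curved data, and the lag-1 autocorrelation of the residuals stands in for the visual inspection of a residual plot; the data and helper names are assumptions made for the example.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def residuals(xs, ys):
    """Differences between the observed values and the fitted line."""
    slope, intercept = fit_line(xs, ys)
    return [y - (intercept + slope * x) for x, y in zip(xs, ys)]

def lag1_autocorrelation(values):
    """Correlation between each value and its successor."""
    n = len(values)
    mean = sum(values) / n
    centered = [v - mean for v in values]
    denominator = sum(c * c for c in centered)
    return sum(a * b for a, b in zip(centered, centered[1:])) / denominator

# Hypothetical data: a quadratic trend that a straight line cannot capture.
xs = list(range(21))
ys = [float(x * x) for x in xs]
res = residuals(xs, ys)
print(f"lag-1 autocorrelation of residuals: {lag1_autocorrelation(res):.3f}")
```

A value far from zero indicates that neighbouring residuals move together, which is the kind of structure that suggests the functional form is wrong.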

Statistical Model Specification

In statistics, model specification is part of the process of building a statistical model: specification consists of selecting an appropriate functional form for the model and choosing which variables to include. For example, given personal income y together with years of schooling s and on-the-job experience x, we might specify a functional relationship y = f(s,x) as follows: \ln y = \ln y_0 + \rho s + \beta_1 x + \beta_2 x^2 + \varepsilon, where \varepsilon is the unexplained error term that is supposed to comprise independent and identically distributed Gaussian variables. The statistician Sir David Cox has said, "How [the] translation from subject-matter problem to statistical model is done is often the most critical part of an analysis". Specification error and bias: Specification error occurs when the functional form or the choice of independent variables poorly represents relevant aspects of the true data-generating process. In particular, bias (the expected value of th ...
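As a rough illustration of what fitting the specification above involves, the sketch below builds the regressors (1, s, x, x^2) and estimates \ln y_0, \rho, \beta_1 and \beta_2 by ordinary least squares through the normal equations. The data are synthetic and noise-free, the coefficient values are invented, and the helper names are assumptions; a numerical library would normally replace the hand-written solver.

```python
def design_row(s: float, x: float):
    """Regressors for ln y = ln y0 + rho*s + beta1*x + beta2*x^2."""
    return [1.0, s, x, x * x]

def solve(matrix, rhs):
    """Solve a small linear system by Gauss-Jordan elimination with partial pivoting."""
    n = len(rhs)
    m = [row[:] + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(n):
            if r != col:
                factor = m[r][col] / m[col][col]
                m[r] = [v - factor * p for v, p in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

def fit_ols(rows, targets):
    """Least-squares coefficients from the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(k)]
    return solve(xtx, xty)

# Synthetic, noise-free data generated from invented coefficients.
true_coefficients = [1.5, 0.08, 0.04, -0.0008]  # ln y0, rho, beta1, beta2
rows = [design_row(s, x) for s in (8, 10, 12, 14, 16) for x in (0, 5, 10, 15, 20)]
log_income = [sum(c * v for c, v in zip(true_coefficients, row)) for row in rows]
estimates = fit_ols(rows, log_income)
print([round(e, 6) for e in estimates])
```

Omitting the x^2 column from `design_row` would be an example of the specification error discussed above: the fitted coefficients on the remaining terms would then absorb part of the missing curvature.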

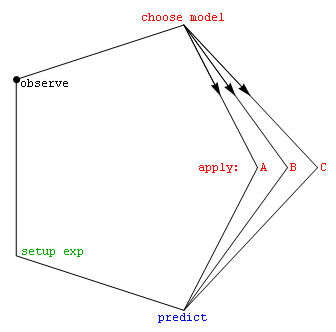

Statistical Model Selection

Model selection is the task of choosing the best model from among various candidates on the basis of a performance criterion. In the context of machine learning and more generally statistical analysis, this may be the selection of a statistical model from a set of candidate models, given data. In the simplest cases, a pre-existing set of data is considered. However, the task can also involve the design of experiments such that the data collected is well-suited to the problem of model selection. Given candidate models of similar predictive or explanatory power, the simplest model is most likely to be the best choice (Occam's razor). It has been stated that "The majority of the problems in statistical inference can be considered to be problems related to statistical modeling". Relatedly, Sir David Cox has said, "How [the] translation from subject-matter problem to statistical model is done is often the most critical part of an analysis". Model selection may also refer to the problem of selecting ...

Akaike Information Criterion

The Akaike information criterion (AIC) is an estimator of prediction error and thereby relative quality of statistical models for a given set of data. Given a collection of models for the data, AIC estimates the quality of each model, relative to each of the other models. Thus, AIC provides a means for model selection. AIC is founded on information theory. When a statistical model is used to represent the process that generated the data, the representation will almost never be exact; so some information will be lost by using the model to represent the process. AIC estimates the relative amount of information lost by a given model: the less information a model loses, the higher the quality of that model. In estimating the amount of information lost by a model, AIC deals with the trade-off between the goodness of fit of the model and the simplicity of the model. In other words, AIC deals with both the risk of overfitting and the risk of underfitting. The Akaike information crite ...
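The trade-off described above can be made concrete with the standard formula AIC = 2k - 2 \ln \hat{L}, where k is the number of estimated parameters and \hat{L} the maximized likelihood. The sketch below assumes Gaussian errors so that \ln \hat{L} follows from a residual sum of squares; the two candidate fits and their numbers are invented for illustration.

```python
import math

def gaussian_max_log_likelihood(rss: float, n: int) -> float:
    """Maximized Gaussian log-likelihood, using the ML variance estimate rss / n."""
    sigma2 = rss / n
    return -0.5 * n * (math.log(2.0 * math.pi * sigma2) + 1.0)

def aic(max_log_likelihood: float, num_params: int) -> float:
    """AIC = 2k - 2 ln(L_hat); lower values indicate less estimated information loss."""
    return 2.0 * num_params - 2.0 * max_log_likelihood

def relative_likelihoods(aic_values):
    """exp((AIC_min - AIC_i) / 2) for each model, relative to the best-scoring one."""
    best = min(aic_values)
    return [math.exp((best - a) / 2.0) for a in aic_values]

# Hypothetical fits to n = 50 points: (residual sum of squares, number of parameters).
n = 50
candidates = {"linear": (120.0, 3), "cubic": (115.0, 5)}
scores = {
    name: aic(gaussian_max_log_likelihood(rss, n), k)
    for name, (rss, k) in candidates.items()
}
for name, weight in zip(scores, relative_likelihoods(list(scores.values()))):
    print(f"{name}: AIC = {scores[name]:.2f}, relative likelihood = {weight:.3f}")
```

In this made-up case the cubic model fits slightly better, but its two extra parameters cost more than the fit improvement gains, so the linear model receives the lower AIC.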

Chi-squared Distribution

In probability theory and statistics, the \chi^2-distribution with k degrees of freedom is the distribution of a sum of the squares of k independent standard normal random variables. The chi-squared distribution \chi^2_k is a special case of the gamma distribution and the univariate Wishart distribution. Specifically, if X \sim \chi^2_k then X \sim \text{Gamma}(\alpha=\frac{k}{2}, \theta=2) (where \alpha is the shape parameter and \theta the scale parameter of the gamma distribution) and X \sim \text{W}_1(1,k). The scaled chi-squared distribution s^2 \chi^2_k is a reparametrization of the gamma distribution and the univariate Wishart distribution. Specifically, if X \sim s^2 \chi^2_k then X \sim \text{Gamma}(\alpha=\frac{k}{2}, \theta=2 s^2) and X \sim \text{W}_1(s^2,k). The chi-squared distribution is one of the most widely used probability distributions in inferential statistics, notably in hypothesis testing and in constru ...
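The defining construction can be checked by simulation. This sketch draws sums of k squared standard normal variables and compares the sample mean and variance with the known values k and 2k of the \chi^2_k distribution; the choice k = 3, the seed, and the sample size are arbitrary.

```python
import random
import statistics

def chi_squared_sample(k: int, rng: random.Random) -> float:
    """One draw from chi-squared with k degrees of freedom, built from its definition."""
    return sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(k))

rng = random.Random(0)  # fixed seed so the run is repeatable
k = 3
draws = [chi_squared_sample(k, rng) for _ in range(20000)]

sample_mean = statistics.fmean(draws)
sample_var = statistics.variance(draws)
print(f"sample mean {sample_mean:.3f} (theory: {k})")
print(f"sample variance {sample_var:.3f} (theory: {2 * k})")
```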

Wilks' Theorem

In statistics, Wilks' theorem offers an asymptotic distribution of the log-likelihood ratio statistic, which can be used to produce confidence intervals for maximum-likelihood estimates or as a test statistic for performing the likelihood-ratio test. Statistical tests (such as hypothesis testing) generally require knowledge of the probability distribution of the test statistic. This is often a problem for likelihood ratios, where the probability distribution can be very difficult to determine. A convenient result by Samuel S. Wilks says that as the sample size approaches \infty, the distribution of the test statistic -2 \log(\Lambda) asymptotically approaches the chi-squared (\chi^2) distribution under the null hypothesis H_0. Here, \Lambda denotes the likelihood ratio, and the \chi^2 distribution has degrees of freedom equal to the difference in dimensionality of \Theta and \Theta_0, where \Theta is the full parameter space and \Theta_0 is the subset of the parameter space ass ...
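A minimal sketch of how the result is used in practice, assuming a binomial model with a single free parameter: the null hypothesis fixes \theta = \theta_0, so \Theta has dimension 1, \Theta_0 has dimension 0, and -2 \log(\Lambda) is compared with \chi^2_1. The data and function names are hypothetical, and the sample is small, so the asymptotic p-value is only approximate.

```python
import math

def log_likelihood(theta: float, successes: int, trials: int) -> float:
    """Binomial log-likelihood up to a constant that cancels in the ratio."""
    return successes * math.log(theta) + (trials - successes) * math.log(1.0 - theta)

def lr_statistic(theta0: float, successes: int, trials: int) -> float:
    """-2 log(Lambda) for H0: theta = theta0 against an unrestricted theta."""
    theta_hat = successes / trials
    return 2.0 * (
        log_likelihood(theta_hat, successes, trials)
        - log_likelihood(theta0, successes, trials)
    )

def chi2_one_df_p_value(statistic: float) -> float:
    """P(chi-squared_1 > statistic), using chi-squared_1 = Z^2 for standard normal Z."""
    return math.erfc(math.sqrt(statistic / 2.0))

# Hypothetical data: 7 successes in 20 trials, testing H0: theta = 0.5.
statistic = lr_statistic(0.5, 7, 20)
p_value = chi2_one_df_p_value(statistic)
print(f"-2 log(Lambda) = {statistic:.3f}, approximate p-value = {p_value:.3f}")
```

The closed form with `math.erfc` works only for one degree of freedom; more degrees of freedom would need a general chi-squared survival function from a statistics library.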

Posterior Probability

The posterior probability is a type of conditional probability that results from updating the prior probability with information summarized by the likelihood via an application of Bayes' rule. From an epistemological perspective, the posterior probability contains everything there is to know about an uncertain proposition (such as a scientific hypothesis, or parameter values), given prior knowledge and a mathematical model describing the observations available at a particular time. After the arrival of new information, the current posterior probability may serve as the prior in another round of Bayesian updating. In the context of Bayesian statistics, the posterior probability distribution usually describes the epistemic uncertainty about statistical parameters conditional on a collection of observed data. From a given posterior distribution, various point and interval estimates can be derived, such as the maximum a posteriori (MAP) or the highest posterior density int ...
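The updating step described above can be sketched for a finite set of hypotheses, where Bayes' rule reduces to multiplying prior by likelihood and normalizing. The two-coin scenario and the names used are assumptions made for the example.

```python
def posterior(prior: dict, likelihood: dict) -> dict:
    """Bayes' rule over discrete hypotheses: posterior is prior * likelihood, normalized."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalized.values())
    return {h: value / evidence for h, value in unnormalized.items()}

# Hypothetical setup: a coin is either fair or biased towards heads.
prior = {"fair": 0.5, "biased": 0.5}
heads_likelihood = {"fair": 0.5, "biased": 0.8}  # P(heads | hypothesis)

after_one_head = posterior(prior, heads_likelihood)
# The current posterior serves as the prior for the next observation.
after_two_heads = posterior(after_one_head, heads_likelihood)

print(after_one_head)
print(after_two_heads)
```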

Coverage Probability

In statistical estimation theory, the coverage probability, or coverage for short, is the probability that a confidence interval or confidence region will include the true value (parameter) of interest. It can be defined as the proportion of instances where the interval surrounds the true value as assessed by long-run frequency. In statistical prediction, the coverage probability is the probability that a prediction interval will include an out-of-sample value of the random variable. The coverage probability can be defined as the proportion of instances where the interval surrounds an out-of-sample value as assessed by long-run frequency. Concept: The fixed degree of certainty pre-specified by the analyst, referred to as the confidence level or confidence coefficient of the constructed interval, is effectively the nominal coverage probability of the procedure for constructing confidence intervals. Hence, referring to a "nominal confidence level" or "nominal confi ...
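The long-run-frequency reading above lends itself to a simulation. This sketch repeatedly draws normal samples with known standard deviation, builds a nominal 95% z-interval for the mean each time, and counts how often the interval contains the true mean; the parameter values, the seed, and the function names are assumptions.

```python
import math
import random

def z_interval(sample, sigma: float, z: float = 1.96):
    """Nominal 95% confidence interval for a normal mean with known sigma."""
    n = len(sample)
    mean = sum(sample) / n
    half_width = z * sigma / math.sqrt(n)
    return mean - half_width, mean + half_width

def estimate_coverage(true_mean, sigma, n, repetitions, rng) -> float:
    """Fraction of simulated intervals that contain the true mean."""
    hits = 0
    for _ in range(repetitions):
        sample = [rng.gauss(true_mean, sigma) for _ in range(n)]
        lower, upper = z_interval(sample, sigma)
        if lower <= true_mean <= upper:
            hits += 1
    return hits / repetitions

rng = random.Random(1)  # fixed seed so the run is repeatable
coverage = estimate_coverage(true_mean=10.0, sigma=2.0, n=25, repetitions=4000, rng=rng)
print(f"estimated coverage: {coverage:.3f} (nominal: 0.95)")
```

Here the model assumptions hold exactly, so the estimate should sit close to the nominal level; applying the same interval to skewed or heavy-tailed data is one way to see actual coverage drift away from it.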

Credible Interval

In Bayesian statistics, a credible interval is an interval used to characterize a probability distribution. It is defined such that an unobserved parameter value has a particular probability \gamma to fall within it. For example, in an experiment that determines the distribution of possible values of the parameter \mu, if the probability that \mu lies between 35 and 45 is \gamma=0.95, then 35 \le \mu \le 45 is a 95% credible interval. Credible intervals are typically used to characterize posterior probability distributions or predictive probability distributions. Their generalization to disconnected or multivariate sets is called credible set or credible region. Credible intervals are a Bayesian analog to confidence intervals in frequentist statistics. The two concepts arise from different philosophies: Bayesian intervals treat their bounds as fixed and the estimated parameter as a random variable, whereas frequentist confidence intervals treat their bounds as random varia ...