
Pooling Layer

In neural networks, a pooling layer is a kind of network layer that downsamples and aggregates information that is dispersed among many vectors into fewer vectors. It has several uses: it removes redundant information, reducing the amount of computation and memory required; it makes the model more robust to small variations in the input; and it increases the receptive field of neurons in later layers of the network.

Convolutional neural network pooling

Pooling is most commonly used in convolutional neural networks (CNNs). Below is a description of pooling in 2-dimensional CNNs. The generalization to n dimensions is immediate. As notation, we consider a tensor $x \in \mathbb{R}^{H \times W \times C}$, where $H$ is height, $W$ is width, and $C$ is the number of channels. A pooling layer outputs a tensor $y \in \mathbb{R}^{H' \times W' \times C'}$. We define two variables $f, s$ called "filter size" (aka "kernel size") and "stride". Sometimes, it is necessary to use a different filter size and stride for horizontal and vertical directions. In such cases, we ...
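As an illustration of how a filter size f and a stride s determine the output, here is a minimal sketch of 2-D pooling in plain Python. It assumes a single channel, no padding, and the maximum as the aggregation; the function name `max_pool_2d` and these choices are illustrative assumptions, not taken from the excerpt above.

```python
def max_pool_2d(x, f, s):
    """Max-pool a 2-D grid (list of rows) with filter size f and stride s.

    Assumptions for this sketch: one channel, no padding, and windows
    that do not fit entirely inside the input are dropped.
    """
    height, width = len(x), len(x[0])
    out_h = (height - f) // s + 1
    out_w = (width - f) // s + 1
    return [
        [
            # Largest value inside the f-by-f window whose top-left
            # corner sits at row i * s, column j * s.
            max(x[i * s + di][j * s + dj] for di in range(f) for dj in range(f))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


x = [
    [1, 3, 2, 0],
    [4, 6, 5, 1],
    [7, 2, 9, 8],
    [0, 1, 3, 4],
]
print(max_pool_2d(x, f=2, s=2))  # [[6, 5], [7, 9]]
```

With f = 2 and s = 2 the 4x4 input shrinks to 2x2, so each output value summarizes four input values. Replacing `max` with an average would give average pooling under the same windowing.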

Neural Network (machine Learning)

In machine learning, a neural network (also artificial neural network or neural net, abbreviated ANN or NN) is a model inspired by the structure and function of biological neural networks in animal brains. An ANN consists of connected units or nodes called *artificial neurons*, which loosely model the neurons in a brain. These are connected by *edges*, which model the synapses in a brain. Each artificial neuron receives signals from connected neurons, then processes them and sends a signal to other connected neurons. The "signal" is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs, called the *activation function*. The strength of the signal at each connection is determined by a *weight*, which is adjusted during the learning process. Typically, neurons are aggregated into layers. Different layers may perform different transformations on their inputs. Signals travel from the first layer (the *input layer* ...
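The description of a single artificial neuron (weighted incoming signals, summed, then passed through a non-linear activation function) can be sketched in a few lines. The names `neuron_output` and `layer_output`, the bias term, and the choice of `tanh` as the activation are assumptions made for this illustration only.

```python
import math


def neuron_output(inputs, weights, bias=0.0):
    """Weighted sum of the incoming signals followed by a non-linearity.

    tanh and the bias term are illustrative choices; any non-linear
    activation function could be substituted.
    """
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return math.tanh(total)


def layer_output(inputs, weight_rows, biases):
    """A layer is a group of neurons that all read the same inputs."""
    return [neuron_output(inputs, w, b) for w, b in zip(weight_rows, biases)]


signals = [1.0, 2.0]
print(neuron_output(signals, weights=[0.5, -0.25]))  # 0.0, since 0.5 - 0.5 = 0
print(layer_output(signals, [[0.5, -0.25], [1.0, 1.0]], [0.0, 0.0]))
```

Learning then amounts to adjusting the entries of `weights` (and `bias`) so that the outputs move toward the desired values.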

BERT (language Model)

Bidirectional Encoder Representations from Transformers (BERT) is a transformer-based machine learning technique for natural language processing (NLP) pre-training developed by Google. BERT was created and published in 2018 by Jacob Devlin and his colleagues from Google. In 2019, Google announced that it had begun leveraging BERT in its search engine, and by late 2020 it was using BERT in almost every English-language query. A 2020 literature survey concluded that "in a little over a year, BERT has become a ubiquitous baseline in NLP experiments", counting over 150 research publications analyzing and improving the model. The original English-language BERT has two models: (1) BERT_BASE: 12 encoders with 12 bidirectional self-attention heads, and (2) BERT_LARGE: 24 encoders with 16 bidirectional self-attention heads. Both models are pre-trained from unlabeled data extracted from the BooksCorpus with 800M words and English Wikipedia with 2,500M words.

Architecture

BERT is ...

Convolutional Neural Network

In deep learning, a convolutional neural network (CNN, or ConvNet) is a class of artificial neural network (ANN), most commonly applied to analyze visual imagery. CNNs are also known as Shift Invariant or Space Invariant Artificial Neural Networks (SIANN), based on the shared-weight architecture of the convolution kernels or filters that slide along input features and provide translation-equivariant responses known as feature maps. Counter-intuitively, most convolutional neural networks are not invariant to translation, due to the downsampling operation they apply to the input. They have applications in image and video recognition, recommender systems, image classification, image segmentation, medical image analysis, natural language processing, brain–computer interfaces, and financial time series. CNNs are regularized versions of multilayer perceptrons. Multilayer perceptrons usually mean fully connected networks, that is, each neuron in one layer is connected to ...

Béla Julesz

Béla Julesz (also Bela Julesz in English; February 19, 1928 – December 31, 2003) was a Hungarian-born American visual neuroscientist and experimental psychologist in the fields of visual and auditory perception. Julesz was the originator of random dot stereograms, which led to the creation of autostereograms. He was also the first to study texture discrimination by constraining second-order statistics.

Biography

Béla Julesz was born in Budapest, Hungary, on February 19, 1928. He immigrated to the United States with his wife Margit after receiving his Ph.D. from the Hungarian Academy of Sciences in 1956. Although Béla is the Hungarian name with the accent, Julesz always used the non-accented version. In 1956, Julesz joined the renowned Bell Laboratories, where he headed the Sensory and Perceptual Processes Department (1964–1982), then the Visual Perception Research Department (1983–1989). Much of his research focused on physiological psychology topics including depth ...

Torsten Wiesel

Torsten Nils Wiesel (born 3 June 1924) is a Swedish neurophysiologist. With David H. Hubel, he received the 1981 Nobel Prize in Physiology or Medicine, for their discoveries concerning information processing in the visual system; the prize was shared with Roger W. Sperry for his independent research on the cerebral hemispheres.

Career

Wiesel was born in Uppsala, Sweden in 1924, the youngest of five children. In 1947, he began his scientific career in Carl Gustaf Bernhard's laboratory at the Karolinska Institute, where he received his medical degree in 1954. He went on to teach in the Institute's department of physiology and worked in the child psychiatry unit of the Karolinska Hospital. In 1955 he moved to the United States to work at Johns Hopkins School of Medicine under Stephen Kuffler. Wiesel began a fellowship in ophthalmology, and in 1958 he became an assistant professor. That same year, he met David Hubel, beginning a collaboration that would last over twenty years. ...

David H

David (Arabic: *Dāwūd*; Koine Greek: Δαυΐδ, *Dauíd*; Latin: *Davidus*, *David*; Ge'ez: ዳዊት, *Dawit*; Old Armenian: Դաւիթ, *Dawitʿ*; Church Slavonic: Давíдъ, *Davidŭ*; possibly meaning "beloved one") was, according to the Hebrew Bible, the third king of the United Kingdom of Israel. In the Books of Samuel, he is described as a young shepherd and harpist who gains fame by slaying Goliath, a champion of the Philistines, in southern Canaan. David becomes a favourite of Saul, the first king of Israel; he also forges a notably close friendship with Jonathan, a son of Saul. However, under the paranoia that David is seeking to usurp the throne, Saul attempts to kill David, forcing the latter to go into hiding and effectively operate as a fugitive for several years. After Saul and Jonathan are both killed in battle against the Philistines, a 30-year-old David is anointed king over all of Israel and Judah. Following his rise to power, D ...

Backpropagation

In machine learning, backpropagation (backprop, BP) is a widely used algorithm for training feedforward artificial neural networks. Generalizations of backpropagation exist for other artificial neural networks (ANNs), and for functions generally. These classes of algorithms are all referred to generically as "backpropagation". In fitting a neural network, backpropagation computes the gradient of the loss function with respect to the weights of the network for a single input–output example, and does so efficiently, unlike a naive direct computation of the gradient with respect to each weight individually. This efficiency makes it feasible to use gradient methods for training multilayer networks, updating weights to minimize loss; gradient descent, or variants such as stochastic gradient descent, are commonly used. The backpropagation algorithm works by computing the gradient of the loss function with respect to each weight by the chain rule, computing the gradient one laye ...
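To make the chain-rule computation concrete, the following sketch applies it to a deliberately tiny network with one hidden unit and two scalar weights. The network shape, the `tanh` activation, the squared-error loss, and the names `forward` and `loss_and_grads` are all assumptions chosen for illustration, not a general implementation.

```python
import math


def forward(w1, w2, x):
    """Two-layer scalar network: h = tanh(w1 * x), y = w2 * h."""
    h = math.tanh(w1 * x)
    y = w2 * h
    return h, y


def loss_and_grads(w1, w2, x, target):
    """Squared-error loss and its gradient for one input-output example.

    The backward pass starts at the loss and moves toward the input,
    reusing each intermediate derivative instead of recomputing it.
    """
    h, y = forward(w1, w2, x)
    loss = 0.5 * (y - target) ** 2

    dloss_dy = y - target                    # derivative of the loss w.r.t. the output
    grad_w2 = dloss_dy * h                   # output-layer weight
    dloss_dh = dloss_dy * w2                 # propagate back through y = w2 * h
    grad_w1 = dloss_dh * (1.0 - h * h) * x   # tanh'(a) = 1 - tanh(a)^2
    return loss, grad_w1, grad_w2


loss, g1, g2 = loss_and_grads(w1=0.5, w2=-1.5, x=2.0, target=1.0)
print(loss, g1, g2)
```

A gradient-descent update would then subtract a small multiple of `g1` and `g2` from `w1` and `w2`. Comparing the returned gradients against finite differences of the loss is a common sanity check for code like this.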

Sigmoid Function

A sigmoid function is a mathematical function having a characteristic "S"-shaped curve or sigmoid curve. A common example of a sigmoid function is the logistic function shown in the first figure and defined by the formula:

$$S(x) = \frac{1}{1 + e^{-x}} = \frac{e^{x}}{e^{x} + 1} = 1 - S(-x).$$

Other standard sigmoid functions are given in the Examples section. In some fields, most notably in the context of artificial neural networks, the term "sigmoid function" is used as an alias for the logistic function. Special cases of the sigmoid function include the Gompertz curve (used in modeling systems that saturate at large values of x) and the ogee curve (used in the spillway of some dams). Sigmoid functions have a domain of all real numbers, with a return (response) value that is commonly monotonically increasing but could be decreasing. Sigmoid functions most often show a return value (y axis) in the range 0 to 1. Another commonly used range is from −1 to 1. A wide variety of sigmoid functions including the logistic and ...
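The logistic function can be written either as 1 / (1 + e^(-x)) or as e^x / (e^x + 1). A small sketch shows why having both forms is useful in practice: choosing the form by the sign of x keeps the exponential from overflowing. The function name `logistic` and the branching strategy are illustrative assumptions.

```python
import math


def logistic(x):
    """Logistic sigmoid, picking the algebraic form that avoids overflow."""
    if x >= 0:
        # exp(-x) is at most 1 here, so this form is safe.
        return 1.0 / (1.0 + math.exp(-x))
    # For negative x, exp(x) is below 1, so use the equivalent second form.
    z = math.exp(x)
    return z / (z + 1.0)


print(logistic(0.0))                    # 0.5
print(logistic(2.0) + logistic(-2.0))   # approximately 1, by S(x) = 1 - S(-x)
```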

Matrix Multiplication

In mathematics, particularly in linear algebra, matrix multiplication is a binary operation that produces a matrix from two matrices. For matrix multiplication, the number of columns in the first matrix must be equal to the number of rows in the second matrix. The resulting matrix, known as the matrix product, has the number of rows of the first and the number of columns of the second matrix. The product of matrices $A$ and $B$ is denoted as $AB$. Matrix multiplication was first described by the French mathematician Jacques Philippe Marie Binet in 1812, to represent the composition of linear maps that are represented by matrices. Matrix multiplication is thus a basic tool of linear algebra, and as such has numerous applications in many areas of mathematics, as well as in applied mathematics, statistics, physics, economics, and engineering. Computing matrix products is a central operation in all computational applications of linear algebra.

Notation

This article will use the following n ...
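The shape rule stated above (columns of the first matrix must equal rows of the second, and the product takes rows from the first and columns from the second) can be checked with a short sketch. Matrices are represented here as lists of rows, and the function name `matmul` is an illustrative choice.

```python
def matmul(a, b):
    """Multiply matrix a (m x n) by matrix b (n x p), both given as lists of rows."""
    if len(a[0]) != len(b):
        raise ValueError("columns of the first matrix must equal rows of the second")
    inner, cols = len(b), len(b[0])
    return [
        # Entry (i, j) is the dot product of row i of a with column j of b.
        [sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
        for row in a
    ]


a = [[1, 2, 3],
     [4, 5, 6]]        # 2 x 3
b = [[7, 8],
     [9, 10],
     [11, 12]]         # 3 x 2
print(matmul(a, b))    # [[58, 64], [139, 154]]
```

The result is 2 x 2. Swapping the arguments gives a 3 x 3 matrix instead, which shows that matrix multiplication is not commutative in general.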

Projection (mathematics)

In mathematics, a projection is a mapping of a set (or other mathematical structure) into a subset (or sub-structure), which is equal to its square for mapping composition, i.e., which is idempotent. The restriction to a subspace of a projection is also called a *projection*, even if the idempotence property is lost. An everyday example of a projection is the casting of shadows onto a plane (sheet of paper): the projection of a point is its shadow on the sheet of paper, and the projection (shadow) of a point on the sheet of paper is that point itself (idempotency). The shadow of a three-dimensional sphere is a closed disk. Originally, the notion of projection was introduced in Euclidean geometry to denote the projection of the three-dimensional Euclidean space onto a plane in it, like the shadow example. The two main projections of this kind are: * The projection from a point onto a plane or central projection: If *C* is a point, called the center of projection, then th ...
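Idempotence can be seen directly in a small sketch of the shadow example. It assumes light falling straight down, so the shadow of a point (x, y, z) on the plane z = 0 is (x, y, 0); this is a parallel (orthogonal) projection, chosen here for simplicity, and the function name is hypothetical.

```python
def project_onto_xy_plane(point):
    """Shadow of a 3-D point on the plane z = 0 under vertical light."""
    x, y, _z = point
    return (x, y, 0.0)


p = (3.0, -1.0, 5.0)
shadow = project_onto_xy_plane(p)
print(shadow)                                    # (3.0, -1.0, 0.0)
print(project_onto_xy_plane(shadow) == shadow)   # True: projecting twice changes nothing
```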

Nearest Neighbor Graph

The nearest neighbor graph (NNG) is a directed graph defined for a set of points in a metric space, such as the plane with the Euclidean distance. The NNG has a vertex for each point, and a directed edge from *p* to *q* whenever *q* is a nearest neighbor of *p*, a point whose distance from *p* is minimum among all the given points other than *p* itself. In many uses of these graphs, the directions of the edges are ignored and the NNG is defined instead as an undirected graph. However, the nearest neighbor relation is not a symmetric one, i.e., *p* from the definition is not necessarily a nearest neighbor for *q*. In theoretical discussions of algorithms a kind of general position is often assumed, namely, the nearest (k-nearest) neighbor is unique for each object. In implementations of the algorithms it is necessary to bear in mind that this is not always the case. For situations in which it is necessary to make the nearest neighbor for each object unique, the set ...
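A brute-force sketch of the directed nearest neighbor graph follows, using Euclidean distance. The function name `nearest_neighbor_graph` is illustrative, and ties are broken by taking the candidate with the lowest index, which is one possible convention assumed here rather than part of the definition. At least two points are required.

```python
import math


def nearest_neighbor_graph(points):
    """Return directed edges (i, j) where point j is a nearest neighbor of point i.

    Brute force over all pairs; ties go to the lowest index (an assumption).
    """
    edges = []
    for i, p in enumerate(points):
        candidates = (k for k in range(len(points)) if k != i)
        j = min(candidates, key=lambda k: math.dist(p, points[k]))
        edges.append((i, j))
    return edges


points = [(0.0, 0.0), (1.0, 0.0), (3.0, 0.0)]
print(nearest_neighbor_graph(points))  # [(0, 1), (1, 0), (2, 1)]
```

The output illustrates the asymmetry of the relation: point 1 is the nearest neighbor of point 2, but the nearest neighbor of point 1 is point 0.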

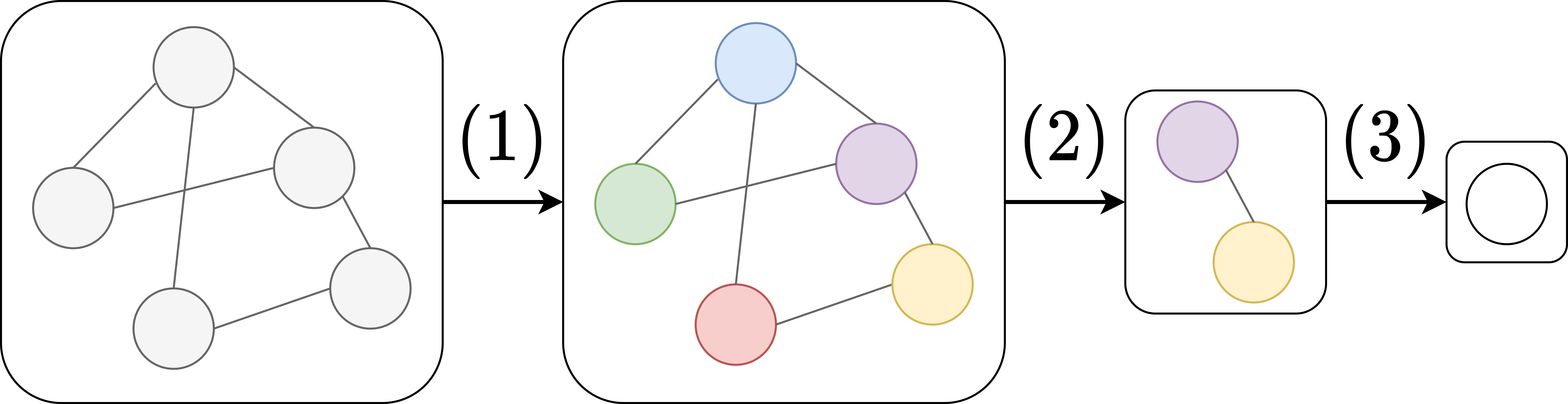

Graph Neural Network

A graph neural network (GNN) belongs to a class of artificial neural networks for processing data that can be represented as graphs. In the more general subject of "geometric deep learning", certain existing neural network architectures can be interpreted as GNNs operating on suitably defined graphs. A convolutional neural network layer, in the context of computer vision, can be seen as a GNN applied to graphs whose nodes are pixels and only adjacent pixels are connected by edges in the graph. A transformer layer, in natural language processing, can be seen as a GNN applied to complete graphs whose nodes are words or tokens in a passage of natural language text. The key design element of GNNs is the use of *pairwise message passing*, such that graph nodes iteratively update their representations by exchanging information with their neighbors. Since their inception, several different GNN architectures have been proposed, which implement different flavors of message passing, s ...
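The idea of nodes iteratively updating their representations from their neighbors can be sketched without any learned parameters. In this simplified illustration each node holds a single number and replaces it with the mean of its own value and its neighbors' values; real GNN layers use vector features and trainable transformations, so the function `message_passing_step` and its averaging rule are assumptions made only to show the flow of information.

```python
def message_passing_step(features, neighbors):
    """One round of message passing with mean aggregation (no learned weights)."""
    updated = {}
    for node, value in features.items():
        incoming = [features[n] for n in neighbors[node]]   # messages from neighbors
        updated[node] = (value + sum(incoming)) / (1 + len(incoming))
    return updated


# Path graph a - b - c, with a signal that starts only at node a.
neighbors = {"a": ["b"], "b": ["a", "c"], "c": ["b"]}
features = {"a": 1.0, "b": 0.0, "c": 0.0}

after_one = message_passing_step(features, neighbors)
after_two = message_passing_step(after_one, neighbors)
print(after_one)
print(after_two)
```

After one round node c is still at zero because it is two hops from a; after the second round it becomes positive, which shows how each additional round widens the neighborhood a node can draw information from.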