
One-way Analysis Of Variance

In statistics, one-way analysis of variance (or one-way ANOVA) is a technique to compare whether two or more samples' means are significantly different (using the F distribution). This analysis of variance technique requires a numeric response variable "Y" and a single explanatory variable "X", hence "one-way". The ANOVA tests the null hypothesis, which states that samples in all groups are drawn from populations with the same mean values. To do this, two estimates are made of the population variance. These estimates rely on various assumptions (see Assumptions). The ANOVA produces an F-statistic, the ratio of the variance calculated among the means to the variance within the samples. If the group means are drawn from populations with the same mean values, the variance between the group means should be lower than the variance of the samples, following the central limit theorem. A higher ratio therefore implies that the samples were draw ...
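The F-statistic described above can be sketched in a few lines of Python. This is a minimal illustration using the textbook between-group and within-group mean squares; the function name `one_way_f` and the sample data are assumptions made for this example, not part of the source.

```python
from statistics import mean


def one_way_f(groups):
    """Return (F, df_between, df_within) for a list of numeric samples.

    F is the ratio of the between-group mean square to the
    within-group mean square.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [mean(g) for g in groups]

    # Variation of the group means around the grand mean.
    ss_between = sum(
        len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means)
    )
    # Variation of the observations around their own group mean.
    ss_within = sum(
        (x - m) ** 2 for g, m in zip(groups, group_means) for x in g
    )

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical data: three groups of three observations each.
samples = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
f_stat, df_b, df_w = one_way_f(samples)
```

A large `f_stat` relative to the F distribution with `df_b` and `df_w` degrees of freedom is evidence against the null hypothesis of equal population means.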


Statistics

Statistics (from German: ''Statistik'', "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ...


Robust Statistics

Robust statistics are statistics that maintain their properties even if the underlying distributional assumptions are incorrect. Robust statistical methods have been developed for many common problems, such as estimating location, scale, and regression parameters. One motivation is to produce statistical methods that are not unduly affected by outliers. Another motivation is to provide methods with good performance when there are small departures from a parametric distribution. For example, robust methods work well for mixtures of two normal distributions with different standard deviations; under this model, non-robust methods like a t-test work poorly. Robust statistics seek to provide methods that emulate popular statistical methods, but are not unduly affected by outliers or other small departures from model assumptions. In statistics, classical e ...
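As a small illustration of sensitivity to outliers, the sketch below compares how far the mean and the median move when one observation is replaced by an extreme value. The data are invented for this example, and the median stands in as one simple robust location estimator.

```python
from statistics import mean, median

# Hypothetical data: the second list replaces one value with an outlier.
clean = [1, 2, 3, 4, 5]
contaminated = [1, 2, 3, 4, 100]

# The mean is pulled strongly toward the outlier ...
mean_shift = mean(contaminated) - mean(clean)

# ... while the median, a robust location estimate, does not move here.
median_shift = median(contaminated) - median(clean)
```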


Mixed Model

A mixed model, mixed-effects model or mixed error-component model is a statistical model containing both fixed effects and random effects. These models are useful in a wide variety of disciplines in the physical, biological and social sciences. They are particularly useful in settings where repeated measurements are made on the same statistical units (see also longitudinal study), or where measurements are made on clusters of related statistical units. Mixed models are often preferred over traditional analysis of variance regression models because they don't rely on the independent observations assumption. Further, they are flexible in dealing with missing values and uneven spacing of repeated measurements. Mixed model analysis allows measurements to be explicitly modeled in a wider variety of correlation and variance-covariance structures, avoiding biased estimations. This page will discuss mainly linear mixed-effects models rathe ...


F Test

An F-test is a statistical test that compares variances. It is used to determine if the variances of two samples, or if the ratios of variances among multiple samples, are significantly different. The test calculates a statistic, represented by the random variable F, and checks if it follows an F-distribution. This check is valid if the null hypothesis is true and standard assumptions about the errors (ε) in the data hold. F-tests are frequently used to compare different statistical models and find the one that best describes the population the data came from. When models are created using the least squares method, the resulting F-tests are often called "exact" F-tests. The F-statistic was developed by Ronald Fisher in the 1920s as the variance ratio and was later named in his honor by George W. Snedecor. Common examples of the use of ''F''-tests include the study of the following cases: the hypothesis that the means of a given set of normally distributed po ...


Analysis Of Variance

Analysis of variance (ANOVA) is a family of statistical methods used to compare the means of two or more groups by analyzing variance. Specifically, ANOVA compares the amount of variation ''between'' the group means to the amount of variation ''within'' each group. If the between-group variation is substantially larger than the within-group variation, it suggests that the group means are likely different. This comparison is done using an F-test. The underlying principle of ANOVA is based on the law of total variance, which states that the total variance in a dataset can be broken down into components attributable to different sources. In the case of ANOVA, these sources are the variation between groups and the variation within groups. ANOVA was developed by the statistician Ronald Fisher. In its simplest form, it provides a statistical test of whether two or more population means are equal, and therefore generalizes the ''t''- ...


F-distribution

In probability theory and statistics, the ''F''-distribution or ''F''-ratio, also known as Snedecor's ''F'' distribution or the Fisher–Snedecor distribution (after Ronald Fisher and George W. Snedecor), is a continuous probability distribution that arises frequently as the null distribution of a test statistic, most notably in the analysis of variance (ANOVA) and other ''F''-tests. The ''F''-distribution with $d_1$ and $d_2$ degrees of freedom is the distribution of

$$X = \frac{U_1/d_1}{U_2/d_2}$$

where $U_1$ and $U_2$ are independent random variables with chi-square distributions with respective degrees of freedom $d_1$ and $d_2$. It can be shown to follow that the probability density function (pdf) for $X$ is given by

$$\begin{aligned} f(x; d_1,d_2) &= \frac{\sqrt{\dfrac{(d_1 x)^{d_1}\, d_2^{d_2}}{(d_1 x+d_2)^{d_1+d_2}}}}{x\,\mathrm{B}\!\left(\frac{d_1}{2},\frac{d_2}{2}\right)} \\ &= \frac{1}{\mathrm{B}\!\left(\frac{d_1}{2},\frac{d_2}{2}\right)} \left(\frac{d_1}{d_2}\right)^{\frac{d_1}{2}} x^{\frac{d_1}{2}-1} \left(1+\frac{d_1}{d_2}\, x \right)^{-\frac{d_1+d_2}{2}} \end{aligned}$$

for real $x > 0$. Here $\mathrm{B}$ is the beta function. In many applications, the parameters $d_1$ and $d_2$ are positive integers, but the distribution is wel ...
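The density can be evaluated numerically with the standard library alone. The sketch below assumes the usual closed form of the ''F'' pdf, with the beta function written through log-gamma terms, and works in log space for stability. The function name `f_pdf` is an assumption made for this example.

```python
import math


def f_pdf(x, d1, d2):
    """Density of the F-distribution with d1 and d2 degrees of freedom.

    Uses f(x) = (d1/d2)^(d1/2) * x^(d1/2 - 1)
                * (1 + d1*x/d2)^(-(d1+d2)/2) / B(d1/2, d2/2),
    evaluated in log space. Returns 0.0 for x <= 0.
    """
    if x <= 0:
        return 0.0
    # log B(a, b) = lgamma(a) + lgamma(b) - lgamma(a + b)
    log_beta = (
        math.lgamma(d1 / 2) + math.lgamma(d2 / 2) - math.lgamma((d1 + d2) / 2)
    )
    log_pdf = (
        (d1 / 2) * math.log(d1 / d2)
        + (d1 / 2 - 1) * math.log(x)
        - ((d1 + d2) / 2) * math.log1p(d1 * x / d2)
        - log_beta
    )
    return math.exp(log_pdf)


# With d1 = d2 = 2 the density simplifies to 1 / (1 + x)^2.
density_at_one = f_pdf(1.0, 2, 2)
```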


Expected Value

In probability theory, the expected value (also called expectation, expectancy, expectation operator, mathematical expectation, mean, expectation value, or first moment) is a generalization of the weighted average. Informally, the expected value is the mean of the possible values a random variable can take, weighted by the probability of those outcomes. Since it is obtained through arithmetic, the expected value sometimes may not even be included in the sample data set; it is not the value you would expect to get in reality. The expected value of a random variable with a finite number of outcomes is a weighted average of all possible outcomes. In the case of a continuum of possible outcomes, the expectation is defined by integration. In the axiomatic foundation for probability provided by measure theory, the expectation is given by Lebesgue integration. The expected value of a random variable $X$ is often denoted by $\operatorname{E}(X)$, $\operatorname{E}[X]$, or $\operatorname{E}X$, with a ...
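For a finite set of outcomes, the weighted average can be computed directly. The sketch below uses a fair six-sided die as an assumed example and exact fractions to avoid rounding. The helper name `expected_value` is hypothetical.

```python
from fractions import Fraction


def expected_value(distribution):
    """Expected value of a finite distribution given as {outcome: probability}."""
    if sum(distribution.values()) != 1:
        raise ValueError("probabilities must sum to 1")
    # Weighted average: each outcome times its probability.
    return sum(value * prob for value, prob in distribution.items())


# A fair die: each face 1..6 has probability 1/6.
die = {face: Fraction(1, 6) for face in range(1, 7)}
ev = expected_value(die)
```

Here `ev` equals 7/2, which is not a face of the die, matching the remark that the expected value need not be an attainable outcome.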


Standard Error

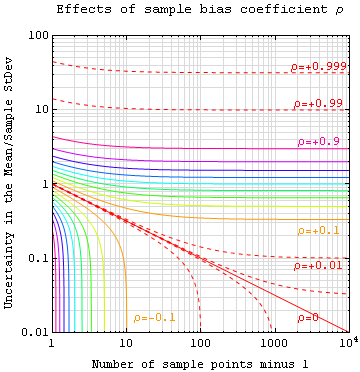

The standard error (SE) of a statistic (usually an estimator of a parameter, like the average or mean) is the standard deviation of its sampling distribution or an estimate of that standard deviation. In other words, it is the standard deviation of the statistic's values across samples, where each sample is a set of observations drawn from the same population. If the statistic is the sample mean, it is called the standard error of the mean (SEM). The standard error is a key ingredient in producing confidence intervals. The sampling distribution of a mean is generated by repeated sampling from the same population and recording the sample mean per sample. This forms a distribution of different means, and this distribution has its own mean and variance. Mathematically, the variance of the sampling mean distribution obtained is equal to the variance of the population divided by the sample size. This is because as the sample size increases, sample means cluster more closely arou ...
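Because the variance of the sample mean is the population variance divided by the sample size, the SEM is commonly estimated as the sample standard deviation divided by the square root of the sample size. A minimal sketch follows, with an invented sample and a hypothetical function name.

```python
import math
from statistics import stdev


def standard_error_of_mean(sample):
    """Estimate the SEM as s / sqrt(n), with s the sample standard deviation."""
    n = len(sample)
    if n < 2:
        raise ValueError("need at least two observations")
    return stdev(sample) / math.sqrt(n)


# Hypothetical sample of eight observations.
sample = [2, 4, 4, 4, 5, 5, 7, 9]
sem = standard_error_of_mean(sample)
```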


P-value

In null-hypothesis significance testing, the ''p''-value is the probability of obtaining test results at least as extreme as the result actually observed, under the assumption that the null hypothesis is correct. A very small ''p''-value means that such an extreme observed outcome would be very unlikely ''under the null hypothesis''. Even though reporting ''p''-values of statistical tests is common practice in academic publications of many quantitative fields, misinterpretation and misuse of p-values is widespread and has been a major topic in mathematics and metascience. In 2016, the American Statistical Association (ASA) made a formal statement that "''p''-values do not measure the probability that the studied hypothesis is true, or the probability that the data were produced by random chance alone" and that "a ''p''-value, or statistical significance, does not measure the size of an effect or the importance of a result" or "evidence regarding a model or hypothesis". That ...


Statistical Significance

In statistical hypothesis testing, a result has statistical significance when a result at least as "extreme" would be very infrequent if the null hypothesis were true. More precisely, a study's defined significance level, denoted by $\alpha$, is the probability of the study rejecting the null hypothesis, given that the null hypothesis is true; and the ''p''-value of a result, ''p'', is the probability of obtaining a result at least as extreme, given that the null hypothesis is true. The result is said to be ''statistically significant'', by the standards of the study, when $p \le \alpha$. The significance level for a study is chosen before data collection, and is typically set to 5% or much lower, depending on the field of study. In any experiment or observation that involves drawing a sample from a population, there is always the possibility that an observed effect would have occurred due to sampling error al ...
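The decision rule can be illustrated with an exact one-sided binomial test, chosen here only because its ''p''-value is easy to compute by hand. The scenario (9 heads in 10 tosses, null hypothesis of a fair coin), the function name, and the 5% level are all assumptions made for this example.

```python
from math import comb


def binomial_upper_p(k, n):
    """One-sided p-value: P(X >= k) for X ~ Binomial(n, 1/2)."""
    return sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n


alpha = 0.05                 # significance level, fixed before seeing data
p = binomial_upper_p(9, 10)  # outcomes at least as extreme: 9 or 10 heads
significant = p <= alpha     # reject the null hypothesis when p <= alpha
```

Here `p` is 11/1024, roughly 0.011, so the result is statistically significant at the assumed 5% level.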


ANOVA On Ranks

In statistics, one purpose for the analysis of variance (ANOVA) is to analyze differences in means between groups. The test statistic, ''F'', assumes independence of observations, homogeneous variances, and population normality. ANOVA on ranks is a statistic designed for situations when the normality assumption has been violated. The ''F'' statistic is a ratio of a numerator to a denominator. Consider randomly selected subjects that are subsequently randomly assigned to groups A, B, and C. Under the truth of the null hypothesis, the variability (or sum of squares) of scores on some dependent variable will be the same within each group. When divided by the degrees of freedom (i.e., based on the number of subjects per group), the denominator of the ''F'' ratio is obtained. Treat the mean for each group as a score, and compute the variability (again, the sum of squares) of those three scores. When divided by its degrees of freedom (i.e., based on the ...
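One common way to carry out an analysis on ranks is to replace every observation by its rank in the pooled data and then run the usual ''F'' computation on those ranks. The sketch below shows only the rank-transform step. Averaging the ranks of tied values is an assumption of this example, as are the function name and the data.

```python
def rank_transform(groups):
    """Replace each observation by its rank in the pooled data.

    Ranks are 1-based; tied values share the average of their positions.
    The group structure of the input is preserved in the output.
    """
    pooled = sorted(x for g in groups for x in g)

    # Collect the 1-based positions occupied by each distinct value.
    positions = {}
    for i, x in enumerate(pooled, start=1):
        positions.setdefault(x, []).append(i)

    # Tied values receive the mean of their positions (midrank).
    midrank = {x: sum(p) / len(p) for x, p in positions.items()}
    return [[midrank[x] for x in g] for g in groups]


# Hypothetical data with one tie (the value 20 appears in both groups).
ranked = rank_transform([[10, 20], [20, 30]])
```

The resulting `ranked` groups can then be passed to any ordinary one-way ''F'' computation in place of the raw scores.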


Journal Of The American Statistical Association

The ''Journal of the American Statistical Association'' is a quarterly peer-reviewed scientific journal published by Taylor & Francis on behalf of the American Statistical Association. It covers work primarily focused on the application of statistics, statistical theory and methods in economic, social, physical, engineering, and health sciences. The journal also includes reviews of books which are relevant to the field. The journal was established in 1888 as the ''Publications of the American Statistical Association''. It was renamed ''Quarterly Publications of the American Statistical Association'' in 1912, obtaining its current title in 1922. According to the ''Journal Citation Reports'', the journal has a 2023 impac ...