
Inductive Bias

The inductive bias (also known as learning bias) of a learning algorithm is the set of assumptions that the learner uses to predict outputs for inputs that it has not encountered. Inductive bias is anything that makes the algorithm learn one pattern instead of another (e.g., step functions in decision trees instead of continuous functions in linear regression models). Learning involves searching a space of solutions for one that provides a good explanation of the data. However, in many cases, there may be multiple equally appropriate solutions. An inductive bias allows a learning algorithm to prioritize one solution (or interpretation) over another, independently of the observed data. In machine learning, the aim is to construct algorithms that are able to learn to predict a certain target output. To achieve this, the learning algorithm is presented with some training examples that demonstrate the intended relation of input and output values. Then the learner is s ...
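The contrast between step functions and continuous functions can be made concrete with a small sketch. The two learners below (`fit_line` and `fit_stump`, both hypothetical names chosen for this illustration) see exactly the same four training points, yet they disagree on an unseen input because each one assumes a different shape for the answer.

```python
def fit_line(xs, ys):
    """Least-squares line y = a*x + b. Bias: the relation is linear."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = my - a * mx
    return lambda x: a * x + b


def fit_stump(xs, ys):
    """One-split step function. Bias: the relation is piecewise constant."""
    pts = sorted(zip(xs, ys))
    best = None
    for i in range(1, len(pts)):
        left = [y for _, y in pts[:i]]
        right = [y for _, y in pts[i:]]
        ml, mr = sum(left) / len(left), sum(right) / len(right)
        err = sum((y - ml) ** 2 for y in left) + sum((y - mr) ** 2 for y in right)
        thr = (pts[i - 1][0] + pts[i][0]) / 2  # midpoint between neighbours
        if best is None or err < best[0]:
            best = (err, thr, ml, mr)
    _, thr, ml, mr = best
    return lambda x: ml if x < thr else mr


# Illustrative data: four points lying on y = x.
xs = [0.0, 1.0, 2.0, 3.0]
ys = [0.0, 1.0, 2.0, 3.0]
line = fit_line(xs, ys)
stump = fit_stump(xs, ys)
print(line(10.0), stump(10.0))  # the line extrapolates, the stump stays flat
```

Neither prediction is determined by the data alone; the difference comes entirely from the assumptions built into each learner.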

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalise to unseen data, and thus perform tasks without explicit instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ...

No Free Lunch Theorem

In mathematical folklore, the "no free lunch" (NFL) theorem (sometimes pluralized) of David Wolpert and William Macready alludes to the saying "no such thing as a free lunch", that is, there are no easy shortcuts to success. It appeared in the 1997 "No Free Lunch Theorems for Optimization". Wolpert had previously derived no free lunch theorems for machine learning (statistical inference) (Wolpert, David (1996), "The Lack of A Priori Distinctions between Learning Algorithms", *Neural Computation*, pp. 1341–1390). In 2005, Wolpert and Macready themselves indicated that the first theorem in their paper "state that any two optimization algorithms are equivalent when their performance is averaged across all possible problems". The "no free lunch" (NFL) theorem is an easily stated and easily understood consequence of theorems Wolpert and Macready actually prove. It is objectively weaker than the proven theorems, and thus does not encapsulate them. Various investigators have exte ...

No Free Lunch In Search And Optimization

In computational complexity and optimization the no free lunch theorem is a result that states that for certain types of mathematical problems, the computational cost of finding a solution, averaged over all problems in the class, is the same for any solution method. The name alludes to the saying "no such thing as a free lunch", that is, no method offers a "short cut". This is under the assumption that the search space is a probability density function. It does not apply to the case where the search space has underlying structure (e.g., is a differentiable function) that can be exploited more efficiently (e.g., Newton's method in optimization) than random search or even has closed-form solutions (e.g., the extrema of a quadratic polynomial) that can be determined without search at all. For such probabilistic assumptions, the outputs of all procedures solving a particular type of problem are statistically identical. A colourful way of describing such a circumstance, introduc ...
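A toy enumeration can illustrate the averaging claim. The sketch below is an illustration under stated assumptions, not the construction used in the theorem's proof: the search space is three points, objective values are 0 or 1, every one of the 8 possible objective functions is weighted equally, and performance is measured as the best value seen after a fixed number of evaluations. The two strategies (`fixed_order` and `adaptive`) are hypothetical examples of non-revisiting search methods.

```python
from itertools import product

DOMAIN = (0, 1, 2)


def fixed_order(history):
    """Visit points 0, 1, 2 in order, ignoring the observed values."""
    return len(history)


def adaptive(history):
    """Start at point 2, then pick the next point based on what was observed."""
    if not history:
        return 2
    if len(history) == 1:
        return 0 if history[0][1] == 1 else 1
    visited = {x for x, _ in history}
    return next(x for x in DOMAIN if x not in visited)


def best_after(strategy, f, budget):
    """Best objective value found after `budget` evaluations of f."""
    history = []
    for _ in range(budget):
        x = strategy(history)
        history.append((x, f[x]))
    return max(y for _, y in history)


def average_performance(strategy, budget):
    """Average over all 2**3 functions from DOMAIN to {0, 1}."""
    functions = list(product((0, 1), repeat=len(DOMAIN)))
    return sum(best_after(strategy, f, budget) for f in functions) / len(functions)


for budget in (1, 2, 3):
    print(budget, average_performance(fixed_order, budget),
          average_performance(adaptive, budget))
```

For every budget the two averages coincide, even though the strategies behave differently on individual functions. The equality disappears as soon as the set of functions is restricted to ones with exploitable structure.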

Cognitive Bias

A cognitive bias is a systematic pattern of deviation from norm or rationality in judgment. Individuals create their own "subjective reality" from their perception of the input. An individual's construction of reality, not the objective input, may dictate their behavior in the world. Thus, cognitive biases may sometimes lead to perceptual distortion, inaccurate judgment, illogical interpretation, and irrationality. While cognitive biases may initially appear to be negative, some are adaptive. They may lead to more effective actions in a given context. Furthermore, allowing cognitive biases enables faster decisions which can be desirable when timeliness is more valuable than accuracy, as illustrated in heuristics. Other cognitive biases are a "by-product" of human processing limitations, resulting from a lack of appropriate mental mechanisms (bounded rationality), the impact of an individual's constitution and bi ...

Algorithmic Bias

Algorithmic bias describes a systematic and repeatable harmful tendency in a computerized sociotechnical system to create "unfair" outcomes, such as "privileging" one category over another in ways different from the intended function of the algorithm. Bias can emerge from many factors, including but not limited to the design of the algorithm or the unintended or unanticipated use or decisions relating to the way data is coded, collected, selected or used to train the algorithm. For example, algorithmic bias has been observed in search engine results and social media platforms. This bias can have impacts ranging from inadvertent privacy violations to reinforcing social biases of race, gender, sexuality, and ethnicity. The study of algorithmic bias is most concerned with algorithms that reflect "systematic and unfair" discrimination. This bias has only recently been addressed in legal frameworks, such as the European Union's General Data Protection Regulation (proposed 2018) ...

K-nearest Neighbors Algorithm

In statistics, the *k*-nearest neighbors algorithm (*k*-NN) is a non-parametric supervised learning method. It was first developed by Evelyn Fix and Joseph Hodges in 1951, and later expanded by Thomas Cover. Most often, it is used for classification, as a *k*-NN classifier, the output of which is a class membership. An object is classified by a plurality vote of its neighbors, with the object being assigned to the class most common among its *k* nearest neighbors (*k* is a positive integer, typically small). If *k* = 1, then the object is simply assigned to the class of that single nearest neighbor. The *k*-NN algorithm can also be generalized for regression. In *k*-NN regression, also known as *nearest neighbor smoothing*, the output is the property value for the object. This value is the average of the values of *k* nearest neighbo ...
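The plurality vote described above can be sketched in a few lines. This is a minimal illustration that assumes Euclidean distance and a brute-force scan of the training set; the function name `knn_classify` and the sample points are made up for the example.

```python
from collections import Counter
from math import dist


def knn_classify(train, query, k=3):
    """Assign `query` the most common label among its k nearest training points.

    `train` is a list of (point, label) pairs. Distance is Euclidean
    (an assumption of this sketch). On a tied vote, the label whose
    neighbour is closest to the query wins, because Counter keeps
    insertion order among equal counts.
    """
    neighbors = sorted(train, key=lambda item: dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Illustrative data: two well-separated clusters.
train = [
    ((0.0, 0.0), "a"), ((0.0, 1.0), "a"), ((1.0, 0.0), "a"),
    ((5.0, 5.0), "b"), ((5.0, 6.0), "b"), ((6.0, 5.0), "b"),
]
print(knn_classify(train, (0.5, 0.5)))       # near the "a" cluster
print(knn_classify(train, (4.0, 4.0), k=1))  # single nearest neighbour
```

With `k=1` the query simply inherits the label of its closest training point, matching the special case mentioned in the text.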

Feature Selection

In machine learning, feature selection is the process of selecting a subset of relevant features (variables, predictors) for use in model construction. Feature selection techniques are used for several reasons:
* simplification of models to make them easier to interpret,
* shorter training times,
* to avoid the curse of dimensionality,
* improve the compatibility of the data with a certain learning model class,
* to encode inherent symmetries present in the input space.

The central premise when using feature selection is that data sometimes contains features that are *redundant* or *irrelevant*, and can thus be removed without incurring much loss of information. Redundancy and irrelevance are two distinct notions, since one relevant feature may be redundant in the presence of another relevant feature with which it is strongly correlated. Feature extraction creates new features from functions of the original features, whereas feat ...
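The distinction between irrelevant and redundant features can be illustrated with a simple correlation filter. This is one possible sketch, not a method named in the text: it assumes numeric columns, uses Pearson correlation as the measure of both relevance and redundancy, and the thresholds, the function name `select_features`, and the sample columns are all made up for illustration.

```python
def pearson(a, b):
    """Pearson correlation of two equal-length numeric sequences.

    Assumes neither sequence is constant (otherwise the denominator is zero).
    """
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5


def select_features(columns, target, min_relevance=0.3, max_redundancy=0.95):
    """Greedy filter: keep relevant features, skip ones that duplicate a kept one."""
    ranked = sorted(columns, key=lambda name: abs(pearson(columns[name], target)),
                    reverse=True)
    selected = []
    for name in ranked:
        if abs(pearson(columns[name], target)) < min_relevance:
            continue  # irrelevant: barely related to the target
        if any(abs(pearson(columns[name], columns[kept])) > max_redundancy
               for kept in selected):
            continue  # redundant: relevant, but nearly a copy of a kept feature
        selected.append(name)
    return selected


# Illustrative data only.
target = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
columns = {
    "metres": [1.1, 1.9, 3.2, 3.9, 5.1, 6.0],        # relevant
    "metres_noisy": [1.2, 1.8, 3.3, 3.8, 5.2, 5.9],  # relevant but redundant
    "noise": [3.0, 1.0, 4.0, 1.0, 5.0, 2.0],         # irrelevant
}
print(select_features(columns, target))
```

Here `metres_noisy` is dropped even though it correlates strongly with the target, because it adds little beyond `metres`; `noise` is dropped for a different reason, namely that it carries almost no information about the target.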

Feature Space

Feature may refer to:

Computing
* Feature recognition, could be a hole, pocket, or notch
* Feature (computer vision), could be an edge, corner or blob
* Feature (machine learning), in statistics: individual measurable properties of the phenomena being observed
* Software feature, a distinguishing characteristic of a software program

Science and analysis
* Feature data, in geographic information systems, comprise information about an entity with a geographic location
* Features, in audio signal processing, an aim to capture specific aspects of audio signals in a numeric way
* Feature (archaeology), any dug, built, or dumped evidence of human activity

Media
* Feature film, a film with a running time long enough to be considered the principal or sole film to fill a program
  * Feature length, the standardized length of such films
* Feature story, a piece of non-fiction writing about news
* Radio documentary (feature), a radio program devoted to covering a particular topic ...

Minimum Description Length

Minimum Description Length (MDL) is a model selection principle where the shortest description of the data is the best model. MDL methods learn through a data compression perspective and are sometimes described as mathematical applications of Occam's razor. The MDL principle can be extended to other forms of inductive inference and learning, for example to estimation and sequential prediction, without explicitly identifying a single model of the data. MDL has its origins mostly in information theory and has been further developed within the general fields of statistics, theoretical computer science and machine learning, and more narrowly computational learning theory. Historically, there are different, yet interrelated, usages of the definite noun phrase "*the* minimum description length *principle*" that vary in what is meant by *description*:
* Within Jorma Rissanen's theory of learning, a central concept of information theory, models are statistical hypotheses and descri ...

Support Vector Machines

In machine learning, support vector machines (SVMs, also support vector networks) are supervised max-margin models with associated learning algorithms that analyze data for classification and regression analysis. Developed at AT&T Bell Laboratories, SVMs are one of the most studied models, being based on statistical learning frameworks of VC theory proposed by Vapnik (1982, 1995) and Chervonenkis (1974). In addition to performing linear classification, SVMs can efficiently perform non-linear classification using the *kernel trick*, representing the data only through a set of pairwise similarity comparisons between the original data points using a kernel function, which transforms them into coordinates in a higher-dimensional feature space. Thus, SVMs use the kernel trick to implicitly map their inputs into high-dimensional feature spaces, where linear classification can be performed. Being max-margin models, SVMs are resilient to noisy data (e.g., misclassified examples). ...
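The idea of an implicit mapping can be checked numerically for one small case. The sketch below assumes two-dimensional inputs and the homogeneous degree-2 polynomial kernel k(x, z) = (x · z)²; the explicit map `phi` is the standard one for that kernel. It shows only the kernel identity, not SVM training, and the function names are chosen for this example.

```python
from math import isclose, sqrt


def dot(u, v):
    """Ordinary dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def poly_kernel(x, z):
    """Degree-2 polynomial kernel, computed in the original 2-D space."""
    return dot(x, z) ** 2


def phi(x):
    """Explicit feature map into 3-D space for the kernel above."""
    x1, x2 = x
    return (x1 * x1, sqrt(2) * x1 * x2, x2 * x2)


x, z = (1.0, 2.0), (3.0, 4.0)
implicit = poly_kernel(x, z)      # never leaves the 2-D input space
explicit = dot(phi(x), phi(z))    # dot product in the 3-D feature space
print(implicit, explicit, isclose(implicit, explicit))
```

Both routes give the same similarity value, so an algorithm that only needs pairwise dot products can work in the higher-dimensional space without ever computing `phi`.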

Cross-validation (statistics)

Cross-validation, sometimes called rotation estimation or out-of-sample testing, is any of various similar model validation techniques for assessing how the results of a statistical analysis will generalize to an independent data set. Cross-validation includes resampling and sample splitting methods that use different portions of the data to test and train a model on different iterations. It is often used in settings where the goal is prediction, and one wants to estimate how accurately a predictive model will perform in practice. It can also be used to assess the quality of a fitted model and the stability of its parameters. In a prediction problem, a model is usually given a dataset of *known data* on which training is run (*training dataset*), and a dataset of *unknown data* (or *first seen* data) against which the model is tested (called the validation dataset o ...
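One common sample-splitting scheme is k-fold cross-validation, sketched below as a minimal illustration. It assumes contiguous folds with no shuffling, and the helper names (`k_fold_splits`, `cross_validate`, `fit_mean`) and the data are hypothetical; real use would typically shuffle the data first.

```python
def k_fold_splits(n_samples, k):
    """Yield (train_indices, test_indices) for k contiguous folds."""
    indices = list(range(n_samples))
    # Spread the remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = indices[start:start + size]
        train = indices[:start] + indices[start + size:]
        yield train, test
        start += size


def cross_validate(xs, ys, fit, error, k=5):
    """Average per-fold test error of models trained on the remaining folds."""
    scores = []
    for train, test in k_fold_splits(len(xs), k):
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        scores.append(sum(error(model(xs[i]), ys[i]) for i in test) / len(test))
    return sum(scores) / len(scores)


def fit_mean(train_xs, train_ys):
    """Trivial model: always predict the mean of the training targets."""
    mean = sum(train_ys) / len(train_ys)
    return lambda x: mean


xs = [0.0, 1.0, 2.0, 3.0]
ys = [1.0, 2.0, 3.0, 4.0]
print(cross_validate(xs, ys, fit_mean, lambda pred, true: abs(pred - true), k=2))
```

Each sample is used for testing exactly once and never appears in the training set of the fold that tests it, which is what makes the resulting error an out-of-sample estimate.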

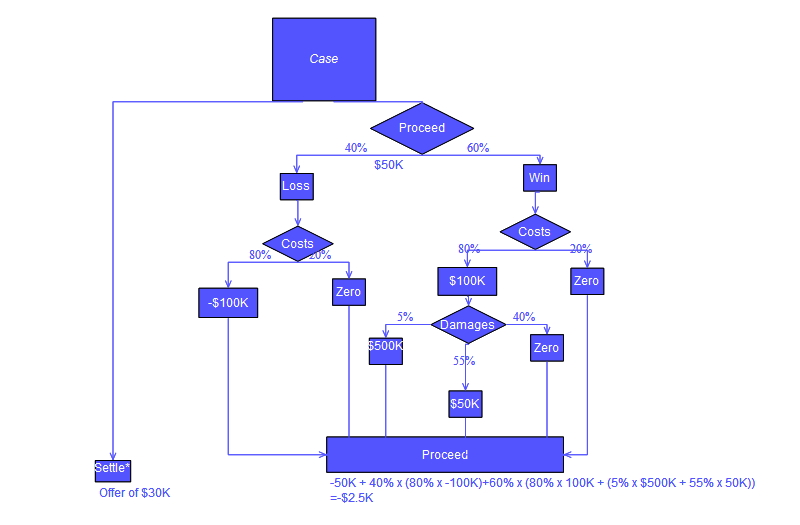

Decision Tree

A decision tree is a decision support recursive partitioning structure that uses a tree-like model of decisions and their possible consequences, including chance event outcomes, resource costs, and utility. It is one way to display an algorithm that only contains conditional control statements. Decision trees are commonly used in operations research, specifically in decision analysis, to help identify a strategy most likely to reach a goal, but are also a popular tool in machine learning.

Overview

A decision tree is a flowchart-like structure in which each internal node represents a test on an attribute (e.g. whether a coin flip comes up heads or tails), each branch represents the outcome of the test, and each leaf node represents a class label (decision taken after computing all attributes). The paths from root to leaf represent classification rules. In decision analysis, a de ...
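The flowchart structure described in the overview maps naturally onto nested data. The sketch below is one possible encoding, assumed for illustration: internal nodes are dictionaries holding the tested attribute and one branch per outcome, and leaves are plain class labels. The tree contents and the function names are invented for the example.

```python
# Internal node: {"attribute": name, "branches": {outcome: subtree}}.
# Leaf: a class label (any non-dict value).
tree = {
    "attribute": "outlook",
    "branches": {
        "sunny": {
            "attribute": "windy",
            "branches": {True: "stay in", False: "play"},
        },
        "rainy": "stay in",
        "overcast": "play",
    },
}


def classify(node, example):
    """Follow the branch matching each tested attribute until a leaf is reached."""
    while isinstance(node, dict):
        node = node["branches"][example[node["attribute"]]]
    return node


def rules(node, path=()):
    """List every root-to-leaf path as (conditions, label): one rule per leaf."""
    if not isinstance(node, dict):
        return [(path, node)]
    found = []
    for outcome, child in node["branches"].items():
        found.extend(rules(child, path + ((node["attribute"], outcome),)))
    return found


print(classify(tree, {"outlook": "sunny", "windy": False}))
for conditions, label in rules(tree):
    print(conditions, "->", label)
```

Because classification only ever follows one branch per test, the whole tree is equivalent to a set of nested conditional statements, and `rules` makes that set explicit.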