Hardware Cache

In computing, a cache is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A cache hit occurs when the requested data can be found in a cache, while a cache miss occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs. To be cost-effective, caches must be relatively small. Nevertheless, caches are effective in many areas of computing because typical computer applications access data with a high degree of locality of reference. Such access patterns exhibit temporal locality, where data is requested that has been recently requested, and spatial locality, where data is requested that is stored near data that has already be ...
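
The hit and miss behaviour described above can be illustrated with a minimal sketch. The class `CountingCache` and the function `square` are hypothetical names chosen for this example; `square` merely stands in for a slower data store or an expensive computation, and the cache is left unbounded for brevity even though real caches are kept small.

```python
class CountingCache:
    """Dictionary-backed cache that records hits and misses (illustrative only)."""

    def __init__(self, slow_lookup):
        self._slow_lookup = slow_lookup  # stands in for the slower data store
        self._store = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._store:
            self.hits += 1               # cache hit: served from the cache
            return self._store[key]
        self.misses += 1                 # cache miss: fall back to the slow path
        value = self._slow_lookup(key)
        self._store[key] = value         # keep a copy for future requests
        return value


def square(n):
    # Hypothetical "expensive" computation.
    return n * n


cache = CountingCache(square)
# Temporal locality: the same keys are requested again shortly after first use.
results = [cache.get(k) for k in [3, 4, 3, 3, 4, 5]]
print(results, cache.hits, cache.misses)
```

In this trace only the first request for each distinct key misses; every repeated request is a hit, which is the effect temporal locality has on a cache.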

Web Browser

A web browser, often shortened to browser, is an application for accessing websites. When a user requests a web page from a particular website, the browser retrieves its files from a web server and then displays the page on the user's screen. Browsers can also display content stored locally on the user's device. Browsers are used on a range of devices, including desktops, laptops, tablets, smartphones, smartwatches and consoles. As of 2024, the most used browsers worldwide are Google Chrome (~66% market share), Safari (~16%), Edge (~6%), Firefox (~3%), Samsung Internet (~2%), and Opera (~2%). As of 2023, an estimated 5.4 billion people had used a browser.

Function

The purpose of a web browser is to fetch content and display it on the user's device. This process begins when the user inputs a Uniform Resource Locator (URL), such as https://en.wikipedia.org/, into the browser's address bar. Virtually all URLs on the Web start with either http: or h ...

Prefetcher

The Prefetcher is a component of Microsoft Windows which was introduced in Windows XP. It is a component of the Memory Manager that can speed up the Windows boot process and shorten the amount of time it takes to start up programs. It accomplishes this by caching files that are needed by an application to RAM as the application is launched, thus consolidating disk reads and reducing disk seeks. This feature was covered by US patent 6,633,968. Since Windows Vista, the Prefetcher has been extended by SuperFetch and ReadyBoost. SuperFetch attempts to accelerate application launch times by monitoring and adapting to application usage patterns over periods of time, and caching the majority of the files and data needed by them into memory in advance so that they can be accessed very quickly when needed. ReadyBoost (when enabled) uses external memory like a USB flash drive to extend the syste ...

Page Cache

In computing, a page cache, sometimes also called disk cache, is a transparent cache for the pages originating from a secondary storage device such as a hard disk drive (HDD) or a solid-state drive (SSD). The operating system keeps a page cache in otherwise unused portions of the main memory (RAM), resulting in quicker access to the contents of cached pages and overall performance improvements. A page cache is implemented in kernels with paging memory management, and is mostly transparent to applications. Usually, all physical memory not directly allocated to applications is used by the operating system for the page cache. Since the memory would otherwise be idle and is easily reclaimed when applications request it, there is generally no associated performance penalty and the operating system might even report such memory as "free" or "available". When compared to main memory, hard disk drive reads and writes are slow and random accesses require expensive disk seeks; as a ...

Loader (computing)

In computer systems, a loader is the part of an operating system that is responsible for loading programs and libraries. It is one of the essential stages in the process of starting a program, as it places programs into memory and prepares them for execution. Loading a program involves either memory-mapping or copying the contents of the executable file containing the program instructions into memory, and then carrying out other required preparatory tasks to prepare the executable for running. Once loading is complete, the operating system starts the program by passing control to the loaded program code. All operating systems that support program loading have loaders, apart from highly specialized computer systems that only have a fixed set of specialized programs. Embedded systems typically do not have loaders, and instead, the code executes directly from ROM or similar. In order to load the operatin ...

Disk Storage

Disc or disk may refer to:

* Disk (mathematics), a two-dimensional shape, the interior of a circle
* Disk storage
* Optical disc
* Floppy disk

Music

* Disc (band), an American experimental music band
* Disk (album), a 1995 EP by Moby

Other uses

* Disc harrow, a farm implement
* Discus throw or disc throw, a track and field event involving a heavy disc
* Intervertebral disc, a cartilage between vertebrae
* Disk (functional analysis), a subset of a vector space
* Disc (magazine), a British music magazine
* Disk, a part of a flower
* Disc number, numbers assigned to Inuit by the Government of Canada
* Galactic disc, a disc-shaped group of stars

Abbreviations

* Death-inducing signaling complex
* DISC assessmen ...

Prefetch Input Queue

Fetching instruction opcodes from program memory well in advance is known as prefetching, and it is served by a prefetch input queue (PIQ). The prefetched instructions are stored in a queue. Fetching opcodes well in advance, prior to their need for execution, increases the overall efficiency of the processor, boosting its speed. The processor no longer has to wait for the memory access operations for the subsequent instruction opcode to complete. This architecture was prominently used in the Intel 8086 microprocessor.

Introduction

Pipelining was brought to the forefront of computing architecture design during the 1960s due to the need for faster and more efficient computing. Pipelining is the broader concept, and most modern processors load their instructions some clock cycles before they execute them. This is achieved by pre-loading machine code from memory into a prefetch input queue. This behavior only applies to von Neumann computers (that is, not H ...

Demand Paging

In computer operating systems, demand paging (as opposed to anticipatory paging) is a method of virtual memory management. In a system that uses demand paging, the operating system copies a disk page into physical memory only when an attempt is made to access it and that page is not already in memory (i.e., if a page fault occurs). It follows that a process begins execution with none of its pages in physical memory, and triggers many page faults until most of its working set of pages are present in physical memory. This is an example of a lazy loading technique.

Basic concept

Demand paging only brings pages into memory when an executing process demands them. This is often referred to as lazy loading, as only those pages demanded by the process are swapped from secondary storage to main memory. Contrast this to pure swapping, where all memory for a process is swapped from secondary storage to main memory when the process starts up or resumes execution. Commonly, to achieve this ...
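
The lazy-loading behaviour described above can be sketched as a small simulation. The class `DemandPagedMemory` and the dictionary `disk` are hypothetical stand-ins for physical memory and secondary storage; the sketch assumes unlimited physical memory, so it never evicts a page, and it does not model real operating-system mechanisms such as page tables.

```python
class DemandPagedMemory:
    """Toy model: a page is copied from the backing store only on first access."""

    def __init__(self, backing_store):
        self.backing_store = backing_store  # page number -> contents ("disk")
        self.resident = {}                  # pages currently in "physical memory"
        self.page_faults = 0

    def read(self, page_number):
        if page_number not in self.resident:
            # Page fault: the page is not in memory yet, so load it now.
            self.page_faults += 1
            self.resident[page_number] = self.backing_store[page_number]
        return self.resident[page_number]


# Hypothetical backing store with eight pages.
disk = {n: f"page-{n}" for n in range(8)}
memory = DemandPagedMemory(disk)

# The process starts with no resident pages and touches only three of them.
accesses = [0, 1, 0, 2, 1, 0]
data = [memory.read(p) for p in accesses]
print(memory.page_faults, sorted(memory.resident))
```

Only the pages that are actually touched become resident, and each one faults exactly once; the remaining pages never leave the backing store.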

Cache Coherence

In computer architecture, cache coherence is the uniformity of shared resource data that is stored in multiple local caches. In a cache coherent system, if multiple clients have a cached copy of the same region of a shared memory resource, all copies are the same. Without cache coherence, a change made to the region by one client may not be seen by others, and errors can result when the data used by different clients is mismatched. A cache coherence protocol is used to maintain cache coherency. The two main types are snooping and directory-based protocols. Cache coherence is of particular relevance in multiprocessing systems, where each CPU may have its own local cache of a shared memory resource.

Overview

In a shared memory multiprocessor system with a separate cache memory for each processor, it is possible to have many copies of shared data: one copy in the main memory and one in the local cache of each processor that requested it. When one of the copies of data is c ...

Write-back With Write-allocation

In computing, a cache is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A cache hit occurs when the requested data can be found in a cache, while a cache miss occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs. To be cost-effective, caches must be relatively small. Nevertheless, caches are effective in many areas of computing because typical computer applications access data with a high degree of locality of reference. Such access patterns exhibit temporal locality, where data is requested that has been recently requested, and spatial locality, where data is requested that is stored near dat ...
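
The excerpt above does not describe the policy named in this entry's title, so the following is only a rough sketch under stated assumptions: "write-back" is taken to mean that a write updates the cache alone and the backing store is updated when a modified ("dirty") entry is evicted, and "write-allocation" is taken to mean that a write miss first loads the entry into the cache. The class `WriteBackCache`, its first-in-first-out eviction, and the `memory` dictionary are hypothetical choices made for illustration.

```python
class WriteBackCache:
    """Toy write-back, write-allocate cache in front of a dict 'backing store'."""

    def __init__(self, backing, capacity):
        self.backing = backing    # address -> value (the slower store)
        self.capacity = capacity
        self.lines = {}           # address -> [value, dirty flag], insertion-ordered
        self.writebacks = 0

    def _allocate(self, address):
        if len(self.lines) >= self.capacity:
            # Assumption: evict the oldest line (FIFO) to keep the sketch short.
            victim = next(iter(self.lines))
            value, dirty = self.lines.pop(victim)
            if dirty:
                # Write-back: the store is updated only when a dirty line leaves.
                self.backing[victim] = value
                self.writebacks += 1
        self.lines[address] = [self.backing.get(address, 0), False]

    def read(self, address):
        if address not in self.lines:
            self._allocate(address)
        return self.lines[address][0]

    def write(self, address, value):
        if address not in self.lines:
            self._allocate(address)       # write-allocate: load the line on a write miss
        self.lines[address][0] = value
        self.lines[address][1] = True     # mark dirty; the backing store is now stale


memory = {0: 10, 1: 20, 2: 30}
cache = WriteBackCache(memory, capacity=2)
cache.write(0, 11)
stale = memory[0]          # the backing store has not been updated yet
cache.write(1, 21)
value = cache.read(2)      # cache is full: address 0 is evicted and written back
print(stale, memory[0], memory[1], cache.writebacks, value)
```

The design trade-off this illustrates is that writes stay fast because they touch only the cache, at the cost of the backing store holding stale values until eviction.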

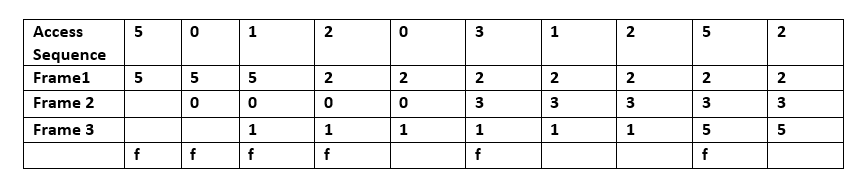

Cache Replacement Policies

In computing, cache replacement policies (also known as cache replacement algorithms or cache algorithms) are optimizing instructions or algorithms which a computer program or hardware-maintained structure can utilize to manage a cache of information. Caching improves performance by keeping recent or often-used data items in memory locations which are faster, or computationally cheaper to access, than normal memory stores. When the cache is full, the algorithm must choose which items to discard to make room for new data.

Overview

The average memory reference time is

$$T = m \times T_m + T_h + E$$

where

* $m$ = miss ratio = 1 - (hit ratio)
* $T_m$ = time to make main-memory access when there is a miss (or, with a multi-level cache, average memory reference time for the next-lower cache)
* $T_h$ = latency: time to reference the cache (should be the same for hits and misses)
* $E$ = secondary effects, such as queuing effects in multiprocessor systems

A cache has two primary figures ...
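
As a worked illustration of the formula above, the sketch below measures a miss ratio on a short access trace and substitutes it into T = m × T_m + T_h + E. The excerpt does not name a specific policy, so least-recently-used (LRU) eviction is an assumption made here; the class `LRUCache`, the trace, and the timing values (100 and 1 in arbitrary units, with E = 0) are likewise hypothetical.

```python
from collections import OrderedDict


def average_reference_time(miss_ratio, t_miss, t_hit, secondary=0.0):
    """T = m * T_m + T_h + E, using the symbols defined in the text."""
    return miss_ratio * t_miss + t_hit + secondary


class LRUCache:
    """Fixed-capacity cache that discards the least recently used key when full."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._items = OrderedDict()   # oldest entry first, most recent last
        self.hits = 0
        self.misses = 0

    def access(self, key):
        if key in self._items:
            self.hits += 1
            self._items.move_to_end(key)      # mark as most recently used
            return
        self.misses += 1
        if len(self._items) >= self.capacity:
            self._items.popitem(last=False)   # discard the least recently used key
        self._items[key] = True


cache = LRUCache(capacity=2)
for key in ["a", "b", "a", "c", "b", "a"]:
    cache.access(key)

miss_ratio = cache.misses / (cache.hits + cache.misses)
# Assumed timings in arbitrary units: T_m = 100, T_h = 1, E = 0.
avg_time = average_reference_time(miss_ratio, t_miss=100.0, t_hit=1.0)
print(cache.hits, cache.misses, round(avg_time, 2))
```

With a capacity of two, this trace produces one hit and five misses, so the miss term dominates the average; a larger capacity or a trace with more reuse would lower m and therefore T.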