
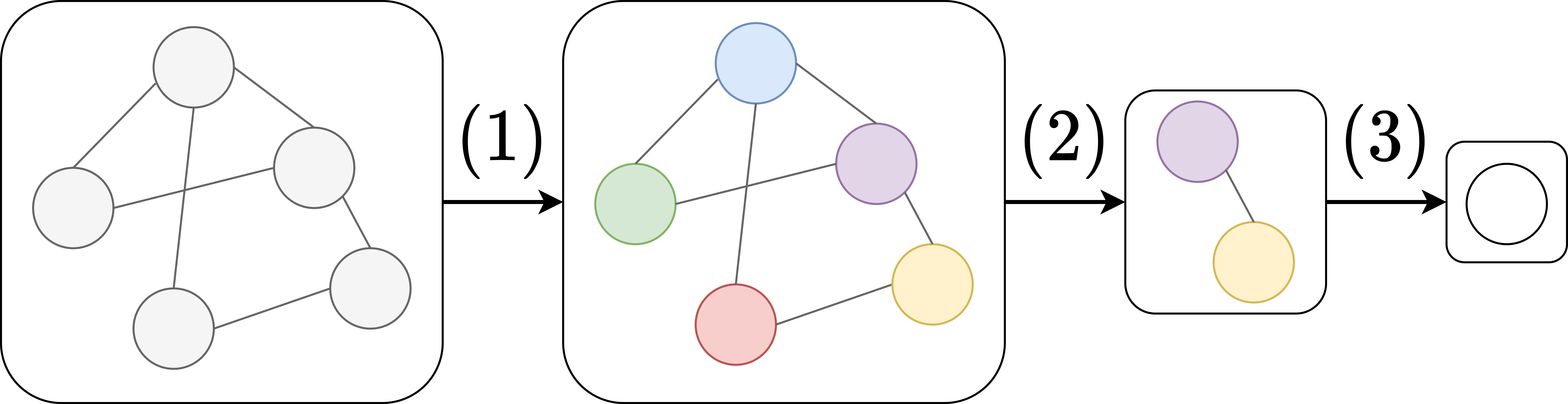

Graph Neural Network

Graph neural networks (GNNs) are specialized artificial neural networks designed for tasks whose inputs are graphs. One prominent example is molecular drug design. Each input sample is a graph representation of a molecule, where atoms form the nodes and chemical bonds between atoms form the edges. In addition to the graph representation, the input also includes known chemical properties for each of the atoms. Dataset samples may thus differ in length, reflecting the varying numbers of atoms in molecules and the varying numbers of bonds between them. The task is to predict the efficacy of a given molecule for a specific medical application, such as eliminating ''E. coli'' bacteria. The key design element of GNNs is the use of ''pairwise message passing'', in which graph nodes iteratively update their representations by exchanging information with their neighbors. Several GNN architectures have been proposed, which implement different flavors of message passing, started ...
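The message-passing idea described above can be illustrated with a minimal sketch in plain Python. The function name `message_passing_round`, the mean aggregation, and the 50/50 update rule are assumptions chosen for illustration; real GNN architectures use learned update functions and differ in how they aggregate messages.

```python
def message_passing_round(features, neighbors):
    """One round of pairwise message passing with mean aggregation.

    features:  dict mapping node -> list of floats (the node's representation)
    neighbors: dict mapping node -> list of adjacent nodes
    """
    updated = {}
    for node, own in features.items():
        # Collect the current representations of all neighbours.
        msgs = [features[n] for n in neighbors[node]]
        if msgs:
            # Element-wise mean over the incoming messages.
            agg = [sum(vals) / len(msgs) for vals in zip(*msgs)]
        else:
            agg = [0.0] * len(own)
        # Hypothetical update rule: blend own state with the aggregate.
        updated[node] = [0.5 * a + 0.5 * b for a, b in zip(own, agg)]
    return updated


# Three "atoms" in a chain: 0 - 1 - 2, each with a one-dimensional feature.
neighbors = {0: [1], 1: [0, 2], 2: [1]}
features = {0: [1.0], 1: [0.0], 2: [3.0]}
after_one_round = message_passing_round(features, neighbors)
print(after_one_round)  # {0: [0.5], 1: [1.0], 2: [1.5]}
```

Calling the function repeatedly lets information travel further: after two rounds, node 0 is influenced by node 2 even though they share no edge.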

Artificial Neural Network

In machine learning, a neural network (also artificial neural network or neural net, abbreviated ANN or NN) is a computational model inspired by the structure and functions of biological neural networks. A neural network consists of connected units or nodes called ''artificial neurons'', which loosely model the neurons in the brain. Artificial neuron models that mimic biological neurons more closely have also been recently investigated and shown to significantly improve performance. The neurons are connected by ''edges'', which model the synapses in the brain. Each artificial neuron receives signals from connected neurons, then processes them and sends a signal to other connected neurons. The "signal" is a real number, and the output of each neuron is computed by some non-linear function of the sum of its inputs, called the ''activation function''. The strength of the signal at each connection is determined by a ''weight'', which is adjusted during the learning process. Typically, ne ...
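As a rough illustration of the neuron computation described above, the following sketch computes a weighted sum of inputs and passes it through an activation function. The logistic sigmoid and the optional `bias` term are assumptions; the text only says that some non-linear function is applied to the summed inputs.

```python
import math


def neuron_output(inputs, weights, bias=0.0):
    """Output of a single artificial neuron.

    Each input signal is scaled by the weight of its connection, the
    results are summed, and a non-linear activation is applied.
    """
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    # Logistic sigmoid, chosen here only as one common activation function.
    return 1.0 / (1.0 + math.exp(-total))


# Weighted sum is 0.5 * 1.0 + (-0.25) * 2.0 = 0.0, and sigmoid(0) = 0.5.
print(neuron_output([1.0, 2.0], [0.5, -0.25]))  # 0.5
```

Learning then amounts to adjusting `weights` (and `bias`) so that the outputs move closer to the desired values.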

Physics

Physics is the scientific study of matter, its fundamental constituents, its motion and behavior through space and time, and the related entities of energy and force. "Physical science is that department of knowledge which relates to the order of nature, or, in other words, to the regular succession of events." It is one of the most fundamental scientific disciplines. "Physics is one of the most fundamental of the sciences. Scientists of all disciplines use the ideas of physics, including chemists who study the structure of molecules, paleontologists who try to reconstruct how dinosaurs walked, and climatologists who study how human activities affect the atmosphere and oceans. Physics is also the foundation of all engineering and technology. No engineer could design a flat-screen TV, an interplanetary spacecraft, or even a better mousetrap without first understanding the basic laws of physics. (...) You will come to see physics as a towering achievement of ...

Downsampling (signal Processing)

In digital signal processing, downsampling, compression, and decimation are terms associated with the process of ''resampling'' in a multi-rate digital signal processing system. Both ''downsampling'' and ''decimation'' can be synonymous with ''compression'', or they can describe an entire process of bandwidth reduction (filtering) and sample-rate reduction. When the process is performed on a sequence of samples of a ''signal'' or a continuous function, it produces an approximation of the sequence that would have been obtained by sampling the signal at a lower rate (or density, as in the case of a photograph). ''Decimation'' is a term that historically means the ''removal of every tenth one''. But in signal processing, ''decimation by a factor of 10'' actually means ''keeping'' only every tenth sample. This factor multiplies the sampling interval or, equivalently, divides the sampling rate. For example, i ...
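The sample-rate reduction step can be sketched in a few lines of Python. This is only the "keep every M-th sample" part; the function name `downsample` is hypothetical, and the bandwidth-reduction (lowpass filtering) step that normally precedes it is deliberately omitted.

```python
def downsample(samples, factor):
    """Keep only every `factor`-th sample, starting with the first.

    No anti-aliasing filter is applied here; in practice the signal is
    usually lowpass-filtered before samples are discarded.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    return samples[::factor]


signal = list(range(30))          # 30 samples: 0, 1, ..., 29
print(downsample(signal, 10))     # [0, 10, 20]
```

Decimating by a factor of 10 leaves one tenth of the samples, so the sampling interval is multiplied by 10 and the sampling rate is divided by 10.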

Pooling Layer

In neural networks, a pooling layer is a kind of network layer that downsamples and aggregates information that is dispersed among many vectors into fewer vectors. It has several uses. It removes redundant information, reducing the amount of computation and memory required, makes the model more robust to small variations in the input, and increases the receptive field of neurons in later layers in the network. Convolutional neural network pooling: Pooling is most commonly used in convolutional neural networks (CNN). Below is a description of pooling in 2-dimensional CNNs. The generalization to n-dimensions is immediate. As notation, we consider a tensor $x \in \R^{H \times W \times C}$, where $H$ is height, $W$ is width, and $C$ is the number of channels. A pooling layer outputs a tensor $y \in \R^{H' \times W' \times C'}$. We define two variables $f, s$ called "filter size" (aka "kernel size") and "stride". Sometimes, it is necessary to use a different filter size and stride for horizontal and vertical directions. In such cases, we ...
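To make the roles of the filter size f and stride s concrete, here is a minimal sketch of 2-dimensional pooling on a single channel. Max aggregation, the absence of padding, and the output-size formula `(H - f) // s + 1` are assumptions of this sketch; the name `max_pool_2d` is hypothetical.

```python
def max_pool_2d(x, f, s):
    """Max pooling over one channel.

    x: list of H rows, each a list of W numbers
    f: filter (kernel) size, used for both directions
    s: stride, used for both directions
    """
    height, width = len(x), len(x[0])
    out_h = (height - f) // s + 1   # no padding assumed
    out_w = (width - f) // s + 1
    return [
        [
            # Maximum over the f-by-f window whose top-left corner is (i*s, j*s).
            max(x[i * s + di][j * s + dj] for di in range(f) for dj in range(f))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


x = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
print(max_pool_2d(x, f=2, s=2))  # [[6, 8], [14, 16]]
```

With f = 2 and s = 2 the 4-by-4 input shrinks to 2-by-2, which shows how pooling reduces the number of values passed to later layers.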

Map (mathematics)

In mathematics, a map or mapping is a function in its general sense. These terms may have originated from the process of making a geographical map: ''mapping'' the Earth's surface to a sheet of paper. The term ''map'' may be used to distinguish some special types of functions, such as homomorphisms. For example, a linear map is a homomorphism of vector spaces, while the term linear function may have this meaning or it may mean a linear polynomial. In category theory, a map may refer to a morphism. The term ''transformation'' can be used interchangeably, but ''transformation'' often refers to a function from a set to itself. There are also a few less common uses in logic and graph theory. Maps as functions: In many branches of mathematics, the term ''map'' is used to mean a function, sometimes with a specific property of particular importance to that branch. For instance, a "map" is a "continuous f ...

Layer (deep Learning)

A layer in a deep learning model is a structure or network topology in the model's architecture, which takes information from the previous layers and then passes it to the next layer. Layer types: The first type of layer is the Dense layer, also called the fully-connected layer, and is used for abstract representations of input data. In this layer, neurons connect to every neuron in the preceding layer. In multilayer perceptron networks, these layers are stacked together. The Convolutional layer is typically used for image analysis tasks. In this layer, the network detects edges, textures, and patterns. The outputs from this layer are then fed into a fully-connected layer for further processing. See also: CNN model. The Pooling layer is used to reduce the size of data input. The Recurrent layer is used for text processing with a memory function. Similar to the Convolutional layer, the output of recurrent layers is usually fed into a fully-connected layer for further proces ...

Flux (machine-learning Framework)

Flux is an open-source machine-learning software library and ecosystem written in Julia. Its current stable release is v. It has a layer-stacking-based interface for simpler models, and has strong support for interoperability with other Julia packages rather than a monolithic design. For example, GPU support is implemented transparently by CuArrays.jl. This is in contrast to some other machine learning frameworks which are implemented in other languages with Julia bindings, such as TensorFlow.jl (the unofficial wrapper, now deprecated), and thus are more limited by the functionality present in the underlying implementation, which is often in C or C++. Flux joined NumFOCUS as an affiliated project in December of 2021. Flux's focus on interoperability has enabled, for example, support for Neural Differential Equations, by fusing Flux.jl and DifferentialEquations.jl into DiffEqFlux.jl. Flux supports recurrent and convolutional networks. It is also capable of differentiable program ...

Julia (programming Language)

Julia is a high-level, general-purpose dynamic programming language, designed to be fast and productive, for e.g. data science, artificial intelligence, machine learning, modeling and simulation, most commonly used for numerical analysis and computational science. Distinctive aspects of Julia's design include a type system with parametric polymorphism and the use of multiple dispatch as a core programming paradigm, a default just-in-time (JIT) compiler (with support for ahead-of-time compilation) and an efficient (multi-threaded) garbage collection implementation. Notably Julia does not support classes with encapsulated methods and instead it relies on structs with generic methods/functions not tied to them. By default, Julia is run similarly to scripting languages, using its runtime, and allows for read–eval–print loop, i ...

Google JAX

JAX is a Python library for accelerator-oriented array computation and program transformation, designed for high-performance numerical computing and large-scale machine learning. It is developed by Google with contributions from Nvidia and other community contributors. It is described as bringing together a modified version of autograd (automatic obtaining of the gradient function through differentiation of a function) and OpenXLA's XLA (Accelerated Linear Algebra). It is designed to follow the structure and workflow of NumPy as closely as possible and works with various existing frameworks such as TensorFlow and PyTorch. The primary features of JAX are:
1. Providing a unified NumPy-like interface to computations that run on CPU, GPU, or TPU, in local or distributed settings.
2. Built-in Just-In-Time (JIT) compilation via OpenXLA, an open-source machine learning compiler ecosystem.
3. Efficient evaluation of gradients via its automatic differentiation transformations.
4. Automatically ...

TensorFlow

TensorFlow is a software library for machine learning and artificial intelligence. It can be used across a range of tasks, but is used mainly for training and inference of neural networks. "It is machine learning software being used for various kinds of perceptual and language understanding tasks" – Jeffrey Dean, minute 0:47 / 2:17 from YouTube clip It is one of the most popular deep learning frameworks, alongside others such as PyTorch. It is free and open-source software released under the Apache License 2.0. It was developed by the Google Brain team for Google's internal use in research and production. The initial version was released under the Apache License 2.0 in 2015. Google released an updated version, TensorFlow 2.0, in September 2019. TensorFlow can be used in a wide variety of programming languages, including Python ...

PyTorch

PyTorch is a machine learning library based on the Torch library, used for applications such as computer vision and natural language processing, originally developed by Meta AI and now part of the Linux Foundation umbrella. It is one of the most popular deep learning frameworks, alongside others such as TensorFlow, offering free and open-source software released under the modified BSD license. Although the Python interface is more polished and the primary focus of development, PyTorch also has a C++ interface. A number of pieces of deep learning software are built on top of PyTorch, including Tesla Autopilot, Uber's Pyro, Hugging Face's Transformers, and Catalyst. PyTorch provides two high-level features:
* Tensor computing (like NumPy) with strong acceleration via graphics processing units (GPU)
* Deep neural networks built on a tape-based automatic differentiation system
History: In 2001, Torch was written and released under a GPL license. It was a machine-learning li ...

Library (computing)

In computing, a library is a collection of resources that can be leveraged during software development to implement a computer program. Commonly, a library consists of executable code such as compiled functions and classes, or a library can be a collection of source code. A resource library may contain data such as images and text. A library can be used by multiple, independent consumers (programs and other libraries). This differs from resources defined in a program which can usually only be used by that program. When a consumer uses a library resource, it gains the value of the library without having to implement it itself. Libraries encourage software reuse in a modular fashion. Libraries can use other libraries resulting in a hierarchy of libraries in a program. When writing code that uses a library, a programmer only needs to know how to use it, not its internal d ...