
Fuzzy Hashing

Fuzzy hashing, also known as similarity hashing, is a technique for detecting data that is similar, but not identical, to other data. This is in contrast to cryptographic hash functions, which are designed to produce significantly different hashes for even minor differences. Fuzzy hashing has been used to identify malware and has potential for other applications, such as data loss prevention and detecting multiple versions of code.

Background. A hash function is a mathematical algorithm which maps arbitrary-sized data to a fixed-size output. Many solutions use cryptographic hash functions like SHA-256 to detect duplicates or check for known files within large collections of files. However, cryptographic hash functions cannot be used for determining if a file is ''similar'' to a known file, because one of the requirements of a cryptographic hash function is that a small change to the input should change the hash value so extensively that the new hash value appears uncorrelated wi ...
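The contrast with cryptographic hashing described above can be illustrated with a minimal sketch. It uses Python's standard `hashlib` module; the helper name `bit_difference` and the two sample strings are assumptions made for illustration, not part of any fuzzy hashing tool.

```python
import hashlib


def bit_difference(a: bytes, b: bytes) -> int:
    """Count the bit positions in which two equal-length digests differ."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


# Two inputs that differ in a single character (hypothetical sample data).
original = b"The quick brown fox jumps over the lazy dog"
modified = b"The quick brown fox jumps over the lazy cog"

d1 = hashlib.sha256(original).digest()
d2 = hashlib.sha256(modified).digest()

# For a cryptographic hash, roughly half of the digest bits are expected
# to flip, so the digests say nothing about how similar the inputs were.
differing = bit_difference(d1, d2)
print(f"{differing} of {len(d1) * 8} digest bits differ")
```

A fuzzy hash aims for the opposite behaviour: near-identical inputs should yield digests that are themselves close under some comparison function.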

Content Similarity Detection

Plagiarism detection or content similarity detection is the process of locating instances of plagiarism or copyright infringement within a work or document. The widespread use of computers and the advent of the Internet have made it easier to plagiarize the work of others. [Bretag, T., & Mahmud, S. (2009). A model for determining student plagiarism: Electronic detection and academic judgement. ''Journal of University Teaching & Learning Practice, 6''(1). Retrieved from http://ro.uow.edu.au/jutlp/vol6/iss1/6]

Detection of plagiarism can be undertaken in a variety of ways. Human detection is the most traditional form of identifying plagiarism in written work. This can be a lengthy and time-consuming task for the reader and can also result in inconsistencies in how plagiarism is identified within an organization. Text-matching software (TMS), which is also referred to as "plagiarism detection software" or "anti-plagiarism" software, has become widely available, in the form of both com ...

Andrew Tridgell

Andrew "Tridge" Tridgell (born 28 February 1967) is an Australian computer programmer. He is the author of and a contributor to the Samba file server, and co-inventor of the rsync algorithm. He has analysed complex proprietary protocols and algorithms to allow compatible free and open source software implementations.

Projects. Tridgell was a major developer of the Samba software, analysing the Server Message Block protocol used for workgroup and network file sharing by Microsoft Windows products. He developed a hierarchical memory allocator, originally as part of Samba. For his PhD dissertation, he co-developed rsync, including the rsync algorithm, a highly efficient file transfer and synchronisation tool. He was also the original author of rzip, which uses a similar algorithm to rsync. He developed spamsum, based on locality-sensitive hashing algorithms. He is the author of KnightCap, a reinforcement l ...

Computer Security

Computer security (also cybersecurity, digital security, or information technology (IT) security) is a subdiscipline within the field of information security. It consists of the protection of computer software, systems and networks from threats that can lead to unauthorized information disclosure, theft or damage to hardware, software, or data, as well as from the disruption or misdirection of the services they provide. The significance of the field stems from the expanded reliance on computer systems, the Internet, and wireless network standards. Its importance is further amplified by the growth of smart devices, including smartphones, televisions, and the various devices that constitute the Internet of things (IoT). Cybersecurity has emerged as one of the most significant new challenges facing the contemporary world, due to both the complexity of information systems and the societi ...

Anti-spam

Various anti-spam techniques are used to prevent email spam (unsolicited bulk email). No technique is a complete solution to the spam problem, and each has trade-offs between incorrectly rejecting legitimate email (false positives) and failing to reject all spam email (false negatives), along with the associated costs in time and effort and the cost of wrongfully obstructing good mail. Anti-spam techniques can be broken into four broad categories: those that require actions by individuals, those that can be automated by email administrators, those that can be automated by email senders, and those employed by researchers and law enforcement officials.

End-user techniques. There are a number of techniques that individuals can use to restrict the availability of their email addresses, with the goal of reducing their chance of receiving spam.

Discretion. Sharing an email address only among a limited group of correspondents is one way to limit the chance that the address will be "harv ...

Locality-sensitive Hashing

In computer science, locality-sensitive hashing (LSH) is a fuzzy hashing technique that hashes similar input items into the same "buckets" with high probability. (The number of buckets is much smaller than the universe of possible input items.) Since similar items end up in the same buckets, this technique can be used for data clustering and nearest neighbor search. It differs from conventional hashing techniques in that hash collisions are maximized, not minimized. Alternatively, the technique can be seen as a way to reduce the dimensionality of high-dimensional data; high-dimensional input items can be reduced to low-dimensional versions while preserving relative distances between items.

Hashing-based approximate nearest-neighbor search algorithms generally use one of two main categories of hashing methods: either data-independent methods, such as locality-sensitive hashing (LSH); or data-dependent methods, such as locality-preserving hashing (LPH). Locality-preserving hashin ...
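The bucketing idea can be sketched with MinHash signatures split into bands, one well-known LSH scheme for set similarity. This is an illustrative sketch rather than the method of any particular library: the function names (`shingles`, `minhash_signature`, `band_keys`), the use of seeded SHA-256 as the hash family, and the parameter values are all assumptions.

```python
import hashlib


def shingles(text: str, k: int = 3) -> set:
    """Return the set of overlapping k-character substrings of text."""
    return {text[i:i + k] for i in range(max(len(text) - k + 1, 1))}


def minhash_signature(items: set, num_hashes: int = 32) -> list:
    """For each seeded hash function, keep the minimum hash value over the set."""
    signature = []
    for seed in range(num_hashes):
        prefix = seed.to_bytes(4, "big")
        signature.append(min(
            int.from_bytes(hashlib.sha256(prefix + s.encode()).digest()[:8], "big")
            for s in items
        ))
    return signature


def band_keys(signature: list, rows_per_band: int = 4) -> set:
    """Split the signature into bands; each (band index, values) pair names a bucket."""
    return {
        (start // rows_per_band, tuple(signature[start:start + rows_per_band]))
        for start in range(0, len(signature), rows_per_band)
    }


def estimated_similarity(sig_a: list, sig_b: list) -> float:
    """Fraction of matching signature positions, an estimate of Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


sig_a = minhash_signature(shingles("locality sensitive hashing groups similar items"))
sig_b = minhash_signature(shingles("locality sensitive hashing groups similar things"))

# Items that share at least one bucket become candidates for a closer comparison.
shared_buckets = band_keys(sig_a) & band_keys(sig_b)
print(len(shared_buckets), estimated_similarity(sig_a, sig_b))
```

Two items become candidate matches when they share any bucket, so fewer rows per band makes collisions between moderately similar items more likely, at the cost of more false candidates.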

Bloom Filter

In computing, a Bloom filter is a space-efficient probabilistic data structure, conceived by Burton Howard Bloom in 1970, that is used to test whether an element is a member of a set. False positive matches are possible, but false negatives are not – in other words, a query returns either "possibly in set" or "definitely not in set". Elements can be added to the set, but not removed (though this can be addressed with the counting Bloom filter variant); the more items added, the larger the probability of false positives.

Bloom proposed the technique for applications where the amount of source data would require an impractically large amount of memory if "conventional" error-free hashing techniques were applied. He gave the example of a hyphenation algorithm for a dictionary of 500,000 words, out of which 90% follow simple hyphenation rules, but the remaining 10% require expensive disk accesses to retrieve specific hyphenation patterns. With sufficient core memory, an error- ...
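The "possibly in set" versus "definitely not in set" behaviour can be seen in a minimal sketch. The class name `BloomFilter`, its method names, the default sizes, and the choice of seeded SHA-256 for the hash functions are assumptions made for illustration; production implementations typically use faster non-cryptographic hashes and size the bit array from a target false-positive rate.

```python
import hashlib


class BloomFilter:
    """A fixed-size bit array with several hash functions per item (illustrative)."""

    def __init__(self, num_bits: int = 1024, num_hashes: int = 3) -> None:
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((num_bits + 7) // 8)

    def _positions(self, item: str):
        # Derive num_hashes bit positions by hashing the item with different seeds.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item: str) -> bool:
        # False means "definitely not in set"; True means "possibly in set".
        return all(
            self.bits[pos // 8] & (1 << (pos % 8))
            for pos in self._positions(item)
        )


words = BloomFilter()
words.add("hyphenation")
print(words.might_contain("hyphenation"))  # True: added items are always reported
```

Because bits are only ever set, an added item can never be reported as absent, while an item that was never added is reported as present only if all of its positions happen to have been set by other items.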

Nilsimsa Hash

Nilsimsa is an anti-spam focused locality-sensitive hashing algorithm originally proposed by the cmeclax remailer operator in 2001 and then reviewed by Ernesto Damiani et al. in their 2004 paper titled "An Open Digest-based Technique for Spam Detection". The goal of Nilsimsa is to generate a hash digest of an email message such that the digests of two similar messages are similar to each other. In contrast to cryptographic hash functions such as SHA-1 or MD5, making a small modification to a document does not substantially change the resulting hash of the document. The paper suggests that Nilsimsa satisfies three requirements:

1. The digest identifying each message should not vary significantly (sic) for changes that can be produced automatically.
2. The encoding must be robust against intentional attacks.
3. The encoding should support an extremely low risk of false positives.

Subsequent testing on a range of file types identified the Nilsimsa hash as having a significantly hi ...

Nearest Neighbor Search

Nearest neighbor search (NNS), as a form of proximity search, is the optimization problem of finding the point in a given set that is closest (or most similar) to a given point. Closeness is typically expressed in terms of a dissimilarity function: the less similar the objects, the larger the function values.

Formally, the nearest-neighbor (NN) search problem is defined as follows: given a set ''S'' of points in a space ''M'' and a query point ''q'' ∈ ''M'', find the closest point in ''S'' to ''q''. Donald Knuth in vol. 3 of ''The Art of Computer Programming'' (1973) called it the post-office problem, referring to an application of assigning to a residence the nearest post office. A direct generalization of this problem is a ''k''-NN search, where we need to find the ''k'' closest points. Most commonly ''M'' is a metric space and dissimilarity is expressed as a distance metric, which is symmetric and satisfies the triangle inequality. Even more common, ''M'' is take ...
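The formal definition corresponds directly to a linear scan over ''S''. The sketch below assumes points are coordinate tuples and that dissimilarity is Euclidean distance; the function name `k_nearest` and the sample points are hypothetical.

```python
import math


def k_nearest(points, query, k=1):
    """Return the k points closest to query by Euclidean distance (exact linear scan)."""
    return sorted(points, key=lambda p: math.dist(p, query))[:k]


# Hypothetical post-office locations and a residence to assign.
post_offices = [(0.0, 0.0), (3.0, 4.0), (1.0, 1.0)]
residence = (0.9, 1.2)

print(k_nearest(post_offices, residence))        # [(1.0, 1.0)]
print(k_nearest(post_offices, residence, k=2))   # [(1.0, 1.0), (0.0, 0.0)]
```

The scan examines every point for every query, which is why approximate methods such as locality-sensitive hashing are used when ''S'' is large or high-dimensional.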

Cryptographic Hash Function

A cryptographic hash function (CHF) is a hash algorithm (a map of an arbitrary binary string to a binary string with a fixed size of $n$ bits) that has special properties desirable for a cryptographic application:

* the probability of a particular $n$-bit output result (hash value) for a random input string ("message") is $2^{-n}$ (as for any good hash), so the hash value can be used as a representative of the message;
* finding an input string that matches a given hash value (a ''pre-image'') is infeasible, ''assuming all input strings are equally likely.'' The ''resistance'' to such search is quantified as security strength: a cryptographic hash with $n$ bits of hash value is expected to have a ''preimage resistance'' strength of $n$ bits, unless the space of possible input values is significantly smaller than $2^{n}$ (a practical example can be found in );
* a ''second preimage'' resistance strength, with the same expectations, refers to a similar problem of f ...

Cluster Analysis

Cluster analysis, or clustering, is a data analysis technique that groups a set of objects in such a way that objects in the same group (called a cluster) are more similar (in some specific sense defined by the analyst) to each other than to those in other groups (clusters). It is a main task of exploratory data analysis, and a common technique for statistical data analysis, used in many fields, including pattern recognition, image analysis, information retrieval, bioinformatics, data compression, computer graphics and machine learning.

Cluster analysis refers to a family of algorithms and tasks rather than one specific algorithm. It can be achieved by various algorithms that differ significantly in their understanding of what constitutes a cluster and how to efficiently find them. Popular notions of clusters include groups with small distances between cluster members, dense areas of the data space, intervals or pa ...

Locality Sensitive Hashing

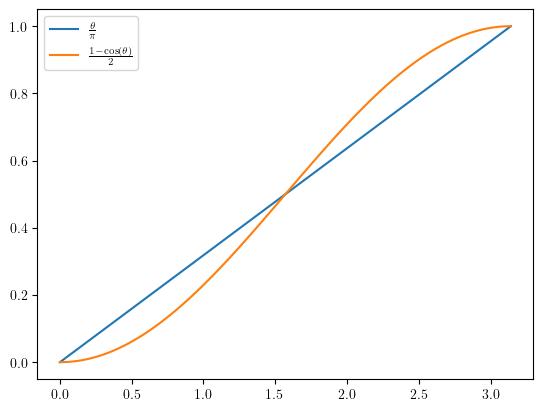

In computer science, locality-sensitive hashing (LSH) is a fuzzy hashing technique that hashes similar input items into the same "buckets" with high probability. (The number of buckets is much smaller than the universe of possible input items.) Since similar items end up in the same buckets, this technique can be used for data clustering and nearest neighbor search. It differs from conventional hashing techniques in that hash collisions are maximized, not minimized. Alternatively, the technique can be seen as a way to reduce the dimensionality of high-dimensional data; high-dimensional input items can be reduced to low-dimensional versions while preserving relative distances between items. Hashing-based approximate nearest-neighbor search algorithms generally use one of two main categories of hashing methods: either data-independent methods, such as locality-sensitive hashing (LSH); or data-dependent methods, such as locality-preserving hashing (LPH). Locality-preserving hashin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |