
Formal Calculation

In mathematical logic, a formal calculation, or formal operation, is a calculation that is systematic but lacks a rigorous justification. It manipulates the symbols of an expression by a generic substitution without proving that the necessary conditions hold; in essence, it works with the form of an expression without considering its underlying meaning. Such reasoning can serve either as positive evidence that a statement is true when a proof is difficult or unnecessary, or as inspiration for new (completely rigorous) definitions. This interpretation of the term formal is not universally accepted, however, and some take it to mean quite the opposite: a completely rigorous argument, as in formal mathematical logic. Examples: Formal calculations can lead to results that are wrong in one context but correct in another. The equation $\sum_{n=0}^{\infty} q^n = \frac{1}{1-q}$ holds if $q$ has an absolute value less than 1. Ignoring this restricti ...
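The geometric series example can be checked numerically. The following is a minimal sketch, not part of the source entry: the helper names `partial_sum` and `closed_form` are illustrative assumptions. It compares exact partial sums with the closed form 1/(1 - q) for a value of q inside the region of convergence and for one outside it.

```python
from fractions import Fraction


def partial_sum(q, terms):
    """Return 1 + q + q**2 + ... + q**(terms - 1) as an exact fraction."""
    total = Fraction(0)
    power = Fraction(1)
    for _ in range(terms):
        total += power
        power *= q
    return total


def closed_form(q):
    """Evaluate 1 / (1 - q) formally, without checking that |q| < 1."""
    return 1 / (1 - Fraction(q))


# Inside the region of convergence the partial sums approach the closed form.
q_small = Fraction(1, 2)
gap = abs(partial_sum(q_small, 40) - closed_form(q_small))

# Outside it, the closed form still yields a value (-1 for q = 2),
# while the partial sums over the real numbers grow without bound.
q_large = Fraction(2)
formal_value = closed_form(q_large)
diverging = [partial_sum(q_large, n) for n in (5, 10, 20)]

print(gap, formal_value, diverging)
```

For q = 2 the substitution is purely formal: the expression 1/(1 - q) can be evaluated, but nothing in the calculation shows that the series actually sums to that value in the real numbers.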

Mathematical Logic

Mathematical logic is the study of formal logic within mathematics. Major subareas include model theory, proof theory, set theory, and recursion theory (also known as computability theory). Research in mathematical logic commonly addresses the mathematical properties of formal systems of logic, such as their expressive or deductive power. However, it can also include uses of logic to characterize correct mathematical reasoning or to establish foundations of mathematics. Since its inception, mathematical logic has both contributed to and been motivated by the study of foundations of mathematics. This study began in the late 19th century with the development of axiomatic frameworks for geometry, arithmetic, and analysis. In the early 20th century it was shaped by David Hilbert's program to prove the consistency of foundational theories. Results of Kurt Gödel, Gerhard Gentzen, and others provided partial resolution to th ...

Algebraic Geometry

Algebraic geometry is a branch of mathematics that uses abstract algebraic techniques, mainly from commutative algebra, to solve geometrical problems. Classically, it studies zeros of multivariate polynomials; the modern approach generalizes this in a few different aspects. The fundamental objects of study in algebraic geometry are algebraic varieties, which are geometric manifestations of solutions of systems of polynomial equations. Examples of the most studied classes of algebraic varieties are lines, circles, parabolas, ellipses, hyperbolas, cubic curves like elliptic curves, and quartic curves like lemniscates and Cassini ovals. These are plane algebraic curves. A point of the plane lies on an algebraic curve if its coordinates satisfy a given polynomial equation. Basic questions involve the study of points of special interest like singular p ...

Euclidean Vector Space

Euclidean space is the fundamental space of geometry, intended to represent physical space. Originally, in Euclid's Elements, it was the three-dimensional space of Euclidean geometry, but in modern mathematics there are Euclidean spaces of any positive integer dimension n, which are called Euclidean n-spaces when one wants to specify their dimension. For n equal to one or two, they are commonly called Euclidean lines and Euclidean planes, respectively. The qualifier "Euclidean" is used to distinguish Euclidean spaces from other spaces that were later considered in physics and modern mathematics. Ancient Greek geometers introduced Euclidean space to model physical space. Their work was collected by the ancient Greek mathematician Euclid in his Elements, with the great innovation of proving all properties of the space as theorems, starting from a few fundamental properties, called postulates, which either were considered as evident (f ...

Orientation (Vector Space)

The orientation of a real vector space, or simply orientation of a vector space, is the arbitrary choice of which ordered bases are "positively" oriented and which are "negatively" oriented. In three-dimensional Euclidean space, right-handed bases are typically declared to be positively oriented, but the choice is arbitrary, as they may also be assigned a negative orientation. A vector space with an orientation selected is called an oriented vector space, while one without an orientation selected is called unoriented. In mathematics, orientability is a broader notion that, in two dimensions, allows one to say when a cycle goes around clockwise or counterclockwise, and in three dimensions when a figure is left-handed or right-handed. In linear algebra over the real numbers, the notion of orientation makes sense in arbitrary finite dimension, and is a kind of asymmetry that makes a reflection impossible to replicate by means of a simple displacement. Thus, in three dimensi ...

Determinant

In mathematics, the determinant is a scalar-valued function of the entries of a square matrix. The determinant of a matrix $A$ is commonly denoted $\det(A)$, $\det A$, or $|A|$. Its value characterizes some properties of the matrix and of the linear map represented, on a given basis, by the matrix. In particular, the determinant is nonzero if and only if the matrix is invertible and the corresponding linear map is an isomorphism. If the determinant is zero, the matrix is called singular, meaning it does not have an inverse. The determinant is completely determined by the two following properties: the determinant of a product of matrices is the product of their determinants, and the determinant of a triangular matrix is the product of its diagonal entries. The determinant of a 2 × 2 matrix is $\begin{vmatrix} a & b \\ c & d \end{vmatrix} = ad - bc$, and the determinant of a 3 × 3 matrix is \begin{vmatrix} a & b & c \\ d & e ...
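The 2 × 2 formula and the two characterizing properties can be spot-checked on small integer matrices. This is an illustrative sketch only: the helpers `det2` and `matmul2` and the sample matrices are assumptions chosen for the example, not taken from the source entry.

```python
def det2(m):
    """Determinant of a 2 x 2 matrix [[a, b], [c, d]], computed as ad - bc."""
    (a, b), (c, d) = m
    return a * d - b * c


def matmul2(x, y):
    """Product of two 2 x 2 matrices given as nested lists."""
    return [
        [sum(x[i][k] * y[k][j] for k in range(2)) for j in range(2)]
        for i in range(2)
    ]


A = [[2, 1], [5, 3]]
B = [[1, 4], [0, 7]]  # upper triangular
S = [[1, 2], [2, 4]]  # second row is twice the first, so S is singular

# Multiplicativity: det(AB) equals det(A) * det(B).
product_matches = det2(matmul2(A, B)) == det2(A) * det2(B)

# Triangular case: the determinant is the product of the diagonal entries.
triangular_matches = det2(B) == B[0][0] * B[1][1]

print(det2(A), det2(B), det2(S), product_matches, triangular_matches)
```

A single example does not prove either property; it only shows the formula behaving as the text describes on these particular inputs.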

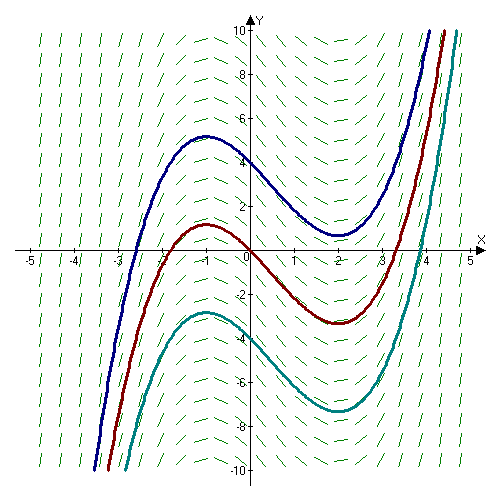

Antiderivative

In calculus, an antiderivative, inverse derivative, primitive function, primitive integral or indefinite integral of a continuous function $f$ is a differentiable function $F$ whose derivative is equal to the original function $f$. This can be stated symbolically as $F' = f$. The process of solving for antiderivatives is called antidifferentiation (or indefinite integration), and its opposite operation is called differentiation, which is the process of finding a derivative. Antiderivatives are often denoted by capital Roman letters such as $F$ and $G$. Antiderivatives are related to definite integrals through the second fundamental theorem of calculus: the definite integral of a function over a closed interval where the function is Riemann integrable is equal to the difference between the values of an antiderivative evaluated at the endpoints of the interval. In physics, antiderivatives arise in the context of rectilinear motion (e.g., in explaining the relationship between position, veloc ...

Commutative Algebra

Commutative algebra, first known as ideal theory, is the branch of algebra that studies commutative rings, their ideals, and modules over such rings. Both algebraic geometry and algebraic number theory build on commutative algebra. Prominent examples of commutative rings include polynomial rings; rings of algebraic integers, including the ordinary integers $\mathbb{Z}$; and p-adic integers. Commutative algebra is the main technical tool of algebraic geometry, and many results and concepts of commutative algebra are strongly related to geometrical concepts. The study of rings that are not necessarily commutative is known as noncommutative algebra; it includes ring theory, representation theory, and the theory of Banach algebras. Overview: Commutative algebra is essentially the study of the rings occurring in algebraic number theory and algebraic geometry. Several concepts of commutative algebras have been developed in ...

Real Analysis

In mathematics, the branch of real analysis studies the behavior of real numbers, sequences and series of real numbers, and real functions. Some particular properties of real-valued sequences and functions that real analysis studies include convergence, limits, continuity, smoothness, differentiability and integrability. Real analysis is distinguished from complex analysis, which deals with the study of complex numbers and their functions. Scope: Construction of the real numbers. The theorems of real analysis rely on the properties of the (established) real number system. The real number system consists of an uncountable set ($\mathbb{R}$), together with two binary operations denoted $+$ and $\cdot$, and a total order denoted $\le$. The operations make the real numbers a field, and, along with the order, an ordered field. The real number system is the unique complete ordered field, in the sense that any other complete ordered field is isomorphic to it. Intuitively, completenes ...

P-adic Number

In number theory, given a prime number $p$, the $p$-adic numbers form an extension of the rational numbers which is distinct from the real numbers, though with some similar properties; $p$-adic numbers can be written in a form similar to (possibly infinite) decimals, but with digits based on a prime number $p$ rather than ten, and extending to the left rather than to the right. For example, comparing the expansion of the rational number $\tfrac15$ in base 3 vs. the 3-adic expansion,
$$\begin{aligned} \tfrac15 &= 0.01210121\ldots \ (\text{base } 3) &&= 0\cdot 3^0 + 0\cdot 3^{-1} + 1\cdot 3^{-2} + 2\cdot 3^{-3} + \cdots \\ \tfrac15 &= \dots 121012102 \ \ (\text{3-adic}) &&= \cdots + 2\cdot 3^3 + 1 \cdot 3^2 + 0\cdot 3^1 + 2 \cdot 3^0. \end{aligned}$$
Formally, given a prime number $p$, a $p$-adic number can be defined as a series $s=\sum_{i=k}^\infty a_i p^i = a_k p^k + a_{k+1} p^{k+1} + a_{k+2} p^{k+2} + \cdots$ where $k$ is an integer (possibly negative), and each $a_i$ is an integer such that $0\le a_i < p$. A $p$-adic integer is a $p$-adic number such that ...
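The 3-adic expansion of 1/5 quoted above can be reproduced digit by digit. The sketch below is one possible approach, not taken from the source entry: the function name `padic_digits` is hypothetical, it handles only rationals whose denominator is not divisible by p, and it relies on the three-argument `pow` with a negative exponent (Python 3.8 or later) for modular inverses.

```python
from fractions import Fraction


def padic_digits(x, p, count):
    """Return the first `count` p-adic digits a_0, a_1, ... of a rational x.

    Assumes the denominator of x is not divisible by p, so that x has no
    negative powers of p in its expansion.
    """
    x = Fraction(x)
    if x.denominator % p == 0:
        raise ValueError("denominator must not be divisible by p")
    digits = []
    for _ in range(count):
        # The next digit is the residue of x modulo p.
        digit = (x.numerator * pow(x.denominator, -1, p)) % p
        digits.append(digit)
        # Remove that digit and shift the expansion one place to the right.
        x = (x - digit) / p
    return digits


digits = padic_digits(Fraction(1, 5), 3, 9)

# p-adic expansions are written with the lowest power on the right.
written = "".join(str(d) for d in reversed(digits))
print(written)
```

Reading the digit list from the end gives the string with the units digit on the right, which is the convention used in the displayed expansion.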

Power Series

In mathematics, a power series (in one variable) is an infinite series of the form $\sum_{n=0}^\infty a_n \left(x - c\right)^n = a_0 + a_1 (x - c) + a_2 (x - c)^2 + \dots$ where $a_n$ represents the coefficient of the $n$th term and $c$ is a constant called the center of the series. Power series are useful in mathematical analysis, where they arise as Taylor series of infinitely differentiable functions. In fact, Borel's theorem implies that every power series is the Taylor series of some smooth function. In many situations, the center $c$ is equal to zero, for instance for Maclaurin series. In such cases, the power series takes the simpler form $\sum_{n=0}^\infty a_n x^n = a_0 + a_1 x + a_2 x^2 + \dots$. The partial sums of a power series are polynomials, the partial sums of the Taylor series of an analytic function are a sequence of converging polynomial approximations to the function at the center, and a converging power series can be seen as a kind of generalized polynom ...

Formal Power Series

In mathematics, a formal series is an infinite sum that is considered independently from any notion of convergence, and can be manipulated with the usual algebraic operations on series (addition, subtraction, multiplication, division, partial sums, etc.). A formal power series is a special kind of formal series, of the form $\sum_{n=0}^\infty a_n x^n = a_0 + a_1 x + a_2 x^2 + \cdots$, where the $a_n$, called coefficients, are numbers or, more generally, elements of some ring, and the $x^n$ are formal powers of the symbol $x$ that is called an indeterminate or, commonly, a variable. Hence, formal power series can be viewed as a generalization of polynomials where the number of terms is allowed to be infinite, and they differ from usual power series by the absence of convergence requirements, which implies that a formal power series may not represent a function of its variable. Formal power series are in one-to-one correspondence with their sequences of coefficients, but the two concepts must not be confused, sin ...
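Because a formal power series is handled through its coefficients alone, multiplication can be sketched on truncated coefficient lists. The code below is an illustrative assumption, not the source's construction: `multiply` and `ORDER` are hypothetical names, and each series is cut off after a fixed number of coefficients, which is safe because the coefficient of x^n in a product depends only on input coefficients of degree at most n.

```python
def multiply(a, b, order):
    """Cauchy product of two coefficient lists, kept up to x**(order - 1)."""
    result = [0] * order
    for i, ai in enumerate(a[:order]):
        for j, bj in enumerate(b[:order - i]):
            result[i + j] += ai * bj
    return result


ORDER = 8

# (1 - x) * (1 + x + x^2 + ...) = 1 holds coefficient by coefficient,
# with no condition on the size of x.
one_minus_x = [1, -1]
geometric = [1] * ORDER
product = multiply(one_minus_x, geometric, ORDER)

# The series with coefficients n! converges only at x = 0 as an ordinary
# power series, yet its formal square is perfectly well defined.
factorials = [1, 1, 2, 6]
square = multiply(factorials, factorials, 4)

print(product, square)
```

The first product is the formal counterpart of the geometric series identity: the series 1 + x + x^2 + ... is the multiplicative inverse of 1 - x in the ring of formal power series, regardless of convergence.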