|

Flattening Transformation

The flattening transformation is an algorithm that transforms nested data parallelism into flat data parallelism. It was pioneered by Guy Blelloch as part of the NESL programming language. The flattening transformation is also sometimes called vectorization, but is completely unrelated to automatic vectorization. The original flattening algorithm was concerned solely with first-order multidimensional arrays containing primitive types, but was extended to handle higher-order and recursive data types in the work on Data Parallel Haskell. Overview Flattening works by ''lifting'' functions to operate on arrays instead of on single values. For example, a function f : A \rightarrow B is lifted to a function f' : \rightarrow /math>. This means an expression map~f~x can be replaced with an application of the lifted function: f'~x. Intuitively, flattening thus works by replacing all function applications with applications of the corresponding lifted function. After flattening, ar ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Algorithm

In mathematics and computer science, an algorithm () is a finite sequence of rigorous instructions, typically used to solve a class of specific problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can perform automated deductions (referred to as automated reasoning) and use mathematical and logical tests to divert the code execution through various routes (referred to as automated decision-making). Using human characteristics as descriptors of machines in metaphorical ways was already practiced by Alan Turing with terms such as "memory", "search" and "stimulus". In contrast, a heuristic is an approach to problem solving that may not be fully specified or may not guarantee correct or optimal results, especially in problem domains where there is no well-defined correct or optimal result. As an effective method, an algorithm can be expressed within a finite amount of spac ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

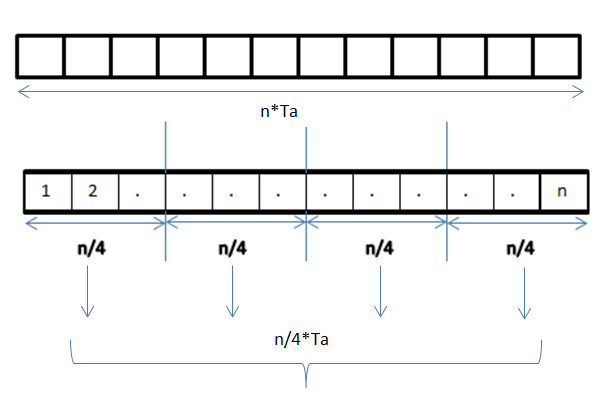

Data Parallelism

Data parallelism is parallelization across multiple processors in parallel computing environments. It focuses on distributing the data across different nodes, which operate on the data in parallel. It can be applied on regular data structures like arrays and matrices by working on each element in parallel. It contrasts to task parallelism as another form of parallelism. A data parallel job on an array of ''n'' elements can be divided equally among all the processors. Let us assume we want to sum all the elements of the given array and the time for a single addition operation is Ta time units. In the case of sequential execution, the time taken by the process will be ''n''×Ta time units as it sums up all the elements of an array. On the other hand, if we execute this job as a data parallel job on 4 processors the time taken would reduce to (''n''/4)×Ta + merging overhead time units. Parallel execution results in a speedup of 4 over sequential execution. One important thing to no ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

NESL

NESL is a parallel programming language developed at Carnegie Mellon by the SCandAL project and released in 1993. It integrates various ideas from parallel algorithms, functional programming, and array programming languages. The most important new ideas behind NESL are *Nested data parallelism: this feature offers the benefits of data parallelism, concise code that is easy to understand and debug, while being well suited for irregular algorithms, such as algorithms on trees, graphs or sparse matrices. *A language based performance model: this gives a formal way to calculate the work and depth of a program. These measures can be related to running time on parallel machines. The main design guideline for NESL was to make parallel programming easy and portable. Algorithms are typically significantly more concise in NESL than in most other parallel programming languages, and the code closely resembles high-level pseudocode. NESL nested data parallelism by using the flattening transf ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Automatic Vectorization

Automatic vectorization, in parallel computing, is a special case of automatic parallelization, where a computer program is converted from a scalar implementation, which processes a single pair of operands at a time, to a vector implementation, which processes one operation on multiple pairs of operands at once. For example, modern conventional computers, including specialized supercomputers, typically have vector operations that simultaneously perform operations such as the following four additions (via SIMD or SPMD hardware): :\begin c_1 & = a_1 + b_1 \\ c_2 & = a_2 + b_2 \\ c_3 & = a_3 + b_3 \\ c_4 & = a_4 + b_4 \end However, in most programming languages one typically writes loops that sequentially perform additions of many numbers. Here is an example of such a loop, written in C: for (i = 0; i < n; i++) c = a + b [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Parallel Haskell

In the pursuit of knowledge, data (; ) is a collection of discrete values that convey information, describing quantity, quality, fact, statistics, other basic units of meaning, or simply sequences of symbols that may be further interpreted. A datum is an individual value in a collection of data. Data is usually organized into structures such as tables that provide additional context and meaning, and which may themselves be used as data in larger structures. Data may be used as variables in a computational process. Data may represent abstract ideas or concrete measurements. Data is commonly used in scientific research, economics, and in virtually every other form of human organizational activity. Examples of data sets include price indices (such as consumer price index), unemployment rates, literacy rates, and census data. In this context, data represents the raw facts and figures which can be used in such a manner in order to capture the useful information out of it. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

1,2,3], [4,5], [], [6

Onekama ( ) is a village in Manistee County in the U.S. state of Michigan. The population was 411 at the 2010 census. The village is located on the shores of Portage Lake and is surrounded by Onekama Township. The town's name is derived from "Ona-ga-maa," an Anishinaabe word which means "singing water." Geography According to the United States Census Bureau, the village has a total area of , all land. The M-22 highway runs through downtown Onekama. History The predecessor of the village of Onekama was the settlement of Portage at Portage Point, first established in 1845, at the western end of Portage, at the outlet of Portage Creek. In 1871, when landowners around the land-locked lake became exasperated with the practices of the Portage Sawmill, they took the solution into their own hands and dug a channel through the narrow isthmus, opening a waterway that lowered the lake by 12 to 14 feet and brought it to the same level as Lake Michigan. When this action dried out Portage ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Prefix Sum

In computer science, the prefix sum, cumulative sum, inclusive scan, or simply scan of a sequence of numbers is a second sequence of numbers , the sums of prefixes (running totals) of the input sequence: : : : :... For instance, the prefix sums of the natural numbers are the triangular numbers: : Prefix sums are trivial to compute in sequential models of computation, by using the formula to compute each output value in sequence order. However, despite their ease of computation, prefix sums are a useful primitive in certain algorithms such as counting sort,. and they form the basis of the scan higher-order function in functional programming languages. Prefix sums have also been much studied in parallel algorithms, both as a test problem to be solved and as a useful primitive to be used as a subroutine in other parallel algorithms.. Abstractly, a prefix sum requires only a binary associative operator ⊕, making it useful for many applications from calculating well-separated pair ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Segmented Scan

In computer science, a segmented scan is a modification of the prefix sum with an equal-sized array of flag bits to denote segment boundaries on which the scan should be performed.Blelloch, Guy E. "Scans as primitive parallel operations." Computers, IEEE Transactions on 38.11 (1989): 1526-1538. Example In the following, the '1' flag bits indicate the beginning of each segment. : \begin 1 & 2 & 3 & 4 & 5& 6 &\text\\ \hline 1 & 0 & 0 & 1 & 0 & 1 &\text\\ \hline 1 & 3 & 6 & 4& 9 & 6 &\text\\ \end ;Group1 * 1 = 1 * 3 = 1 + 2 * 6 = 1 + 2 + 3 ;Group2 * 4 = 4 * 9 = 4 + 5 ;Group3 * 6 = 6 An alternative method used by High Performance Fortran is to begin a new segment at every transition of flag value. An advantage of this representation is that it is useful with both prefix and suffix (backwards) scans without changing its interpretation. In HPF, Fortran logical data type is used to represent segments. So the equivalent flag array for the above example would be as follows: \begin 1 ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

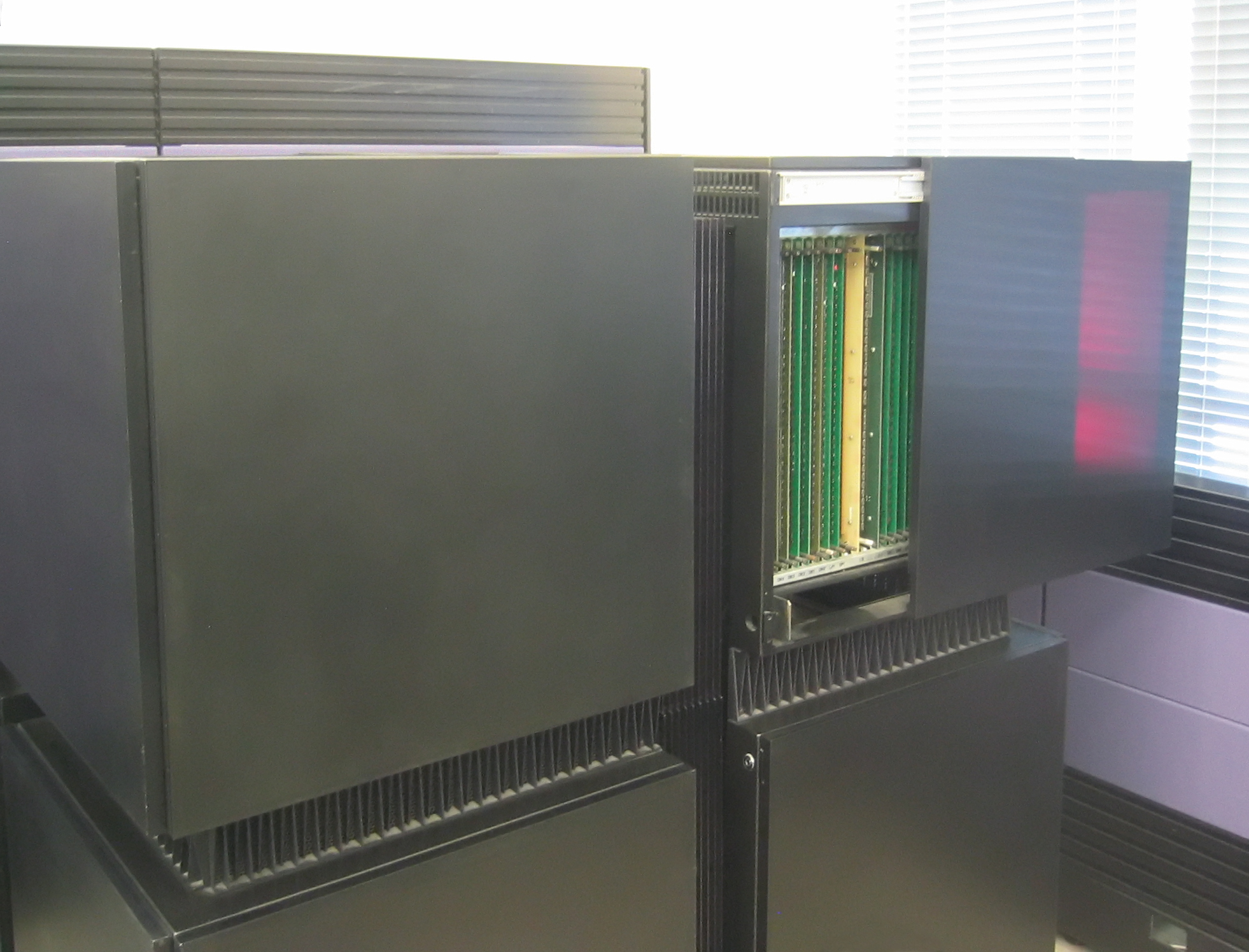

Connection Machine

A Connection Machine (CM) is a member of a series of massively parallel supercomputers that grew out of doctoral research on alternatives to the traditional von Neumann architecture of computers by Danny Hillis at Massachusetts Institute of Technology (MIT) in the early 1980s. Starting with CM-1, the machines were intended originally for applications in artificial intelligence (AI) and symbolic processing, but later versions found greater success in the field of computational science. Origin of idea Danny Hillis and Sheryl Handler founded Thinking Machines Corporation (TMC) in Waltham, Massachusetts, in 1983, moving in 1984 to Cambridge, MA. At TMC, Hillis assembled a team to develop what would become the CM-1 Connection Machine, a design for a massively parallel hypercube-based arrangement of thousands of microprocessors, springing from his PhD thesis work at MIT in Electrical Engineering and Computer Science (1985). The dissertation won the ACM Distinguished Dissertation ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Multicore

A multi-core processor is a microprocessor on a single integrated circuit with two or more separate processing units, called cores, each of which reads and executes program instructions. The instructions are ordinary CPU instructions (such as add, move data, and branch) but the single processor can run instructions on separate cores at the same time, increasing overall speed for programs that support multithreading or other parallel computing techniques. Manufacturers typically integrate the cores onto a single integrated circuit die (known as a chip multiprocessor or CMP) or onto multiple dies in a single chip package. The microprocessors currently used in almost all personal computers are multi-core. A multi-core processor implements multiprocessing in a single physical package. Designers may couple cores in a multi-core device tightly or loosely. For example, cores may or may not share caches, and they may implement message passing or shared-memory inter-core comm ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Google Jax

Google JAX is a machine learning framework for transforming numerical functions. It is described as bringing together a modified version oautograd(automatic obtaining of the gradient function through differentiation of a function) and TensorFlow'XLA(Accelerated Linear Algebra). It is designed to follow the structure and workflow of NumPy as closely as possible and works with various existing frameworks such as TensorFlow and PyTorch. The primary functions of JAX are: # grad: automatic differentiation # jit: compilation # vmap: auto-vectorization # pmap: SPMD programming grad The below code demonstrates the grad function's automatic differentiation. # imports from jax import grad import jax.numpy as jnp # define the logistic function def logistic(x): return jnp.exp(x) / (jnp.exp(x) + 1) # obtain the gradient function of the logistic function grad_logistic = grad(logistic) # evaluate the gradient of the logistic function at x = 1 grad_log_out = grad_logistic(1.0) ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Compiler Optimizations

In computing, an optimizing compiler is a compiler that tries to minimize or maximize some attributes of an executable computer program. Common requirements are to minimize a program's execution time, memory footprint, storage size, and power consumption (the last three being popular for portable computers). Compiler optimization is generally implemented using a sequence of ''optimizing transformations'', algorithms which take a program and transform it to produce a semantically equivalent output program that uses fewer resources or executes faster. It has been shown that some code optimization problems are NP-complete, or even undecidable. In practice, factors such as the programmer's willingness to wait for the compiler to complete its task place upper limits on the optimizations that a compiler might provide. Optimization is generally a very CPU- and memory-intensive process. In the past, computer memory limitations were also a major factor in limiting which optimizations coul ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |