|

Error-driven Learning

In reinforcement learning, error-driven learning is a method for adjusting a model's (intelligent agent's) parameters based on the difference between its output results and the ground truth. These models stand out as they depend on environmental feedback, rather than explicit labels or categories. They are based on the idea that language acquisition involves the minimization of the prediction error (MPSE). By leveraging these prediction errors, the models consistently refine expectations and decrease computational complexity. Typically, these algorithms are operated by the GeneRec algorithm. Error-driven learning has widespread applications in cognitive sciences and computer vision. These methods have also found successful application in natural language processing (NLP), including areas like part-of-speech tagging,Mohammad, Saif, and Ted Pedersen.Combining lexical and syntactic features for supervised word sense disambiguation. Proceedings of the Eighth Conference on Computational ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Reinforcement Learning

Reinforcement learning (RL) is an interdisciplinary area of machine learning and optimal control concerned with how an intelligent agent should take actions in a dynamic environment in order to maximize a reward signal. Reinforcement learning is one of the three basic machine learning paradigms, alongside supervised learning and unsupervised learning. Reinforcement learning differs from supervised learning in not needing labelled input-output pairs to be presented, and in not needing sub-optimal actions to be explicitly corrected. Instead, the focus is on finding a balance between exploration (of uncharted territory) and exploitation (of current knowledge) with the goal of maximizing the cumulative reward (the feedback of which might be incomplete or delayed). The search for this balance is known as the exploration–exploitation dilemma. The environment is typically stated in the form of a Markov decision process (MDP), as many reinforcement learning algorithms use dyn ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Backpropagation

In machine learning, backpropagation is a gradient computation method commonly used for training a neural network to compute its parameter updates. It is an efficient application of the chain rule to neural networks. Backpropagation computes the gradient of a loss function with respect to the weights of the network for a single input–output example, and does so efficiently, computing the gradient one layer at a time, iterating backward from the last layer to avoid redundant calculations of intermediate terms in the chain rule; this can be derived through dynamic programming. Strictly speaking, the term ''backpropagation'' refers only to an algorithm for efficiently computing the gradient, not how the gradient is used; but the term is often used loosely to refer to the entire learning algorithm – including how the gradient is used, such as by stochastic gradient descent, or as an intermediate step in a more complicated optimizer, such as Adaptive Moment Estimation. The ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

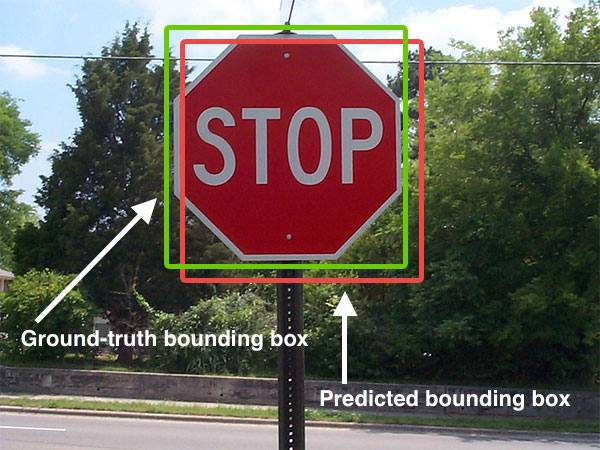

Computer Vision

Computer vision tasks include methods for image sensor, acquiring, Image processing, processing, Image analysis, analyzing, and understanding digital images, and extraction of high-dimensional data from the real world in order to produce numerical or symbolic information, e.g. in the form of decisions. "Understanding" in this context signifies the transformation of visual images (the input to the retina) into descriptions of the world that make sense to thought processes and can elicit appropriate action. This image understanding can be seen as the disentangling of symbolic information from image data using models constructed with the aid of geometry, physics, statistics, and learning theory. The scientific discipline of computer vision is concerned with the theory behind artificial systems that extract information from images. Image data can take many forms, such as video sequences, views from multiple cameras, multi-dimensional data from a 3D scanning, 3D scanner, 3D point clouds ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Reservoir Computing

Reservoir computing is a framework for computation derived from recurrent neural network theory that maps input signals into higher dimensional computational spaces through the dynamics of a fixed, non-linear system called a reservoir. After the input signal is fed into the reservoir, which is treated as a "black box," a simple readout mechanism is trained to read the state of the reservoir and map it to the desired output. The first key benefit of this framework is that training is performed only at the readout stage, as the reservoir dynamics are fixed. The second is that the computational power of naturally available systems, both classical and quantum mechanical, can be used to reduce the effective computational cost. History The first examples of reservoir neural networks demonstrated that randomly connected recurrent neural networks could be used for sensorimotor sequence learning, and simple forms of interval and speech discrimination. In these early models the memory in th ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Spiking Neural Network

Spiking neural networks (SNNs) are artificial neural networks (ANN) that mimic natural neural networks. These models leverage timing of discrete spikes as the main information carrier. In addition to Artificial neuron, neuronal and Electrical synapse, synaptic state, SNNs incorporate the concept of time into their operating model. The idea is that Artificial neuron, neurons in the SNN do not transmit information at each propagation cycle (as it happens with typical multi-layer perceptron, perceptron networks), but rather transmit information only when a membrane potential—an intrinsic quality of the neuron related to its membrane electrical charge—reaches a specific value, called the threshold. When the membrane potential reaches the threshold, the neuron fires, and generates a signal that travels to other neurons which, in turn, increase or decrease their potentials in response to this signal. A neuron model that fires at the moment of threshold crossing is also called a sp ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Deep Belief Network

In machine learning, a deep belief network (DBN) is a generative graphical model, or alternatively a class of deep neural network, composed of multiple layers of latent variables ("hidden units"), with connections between the layers but not between units within each layer. When trained on a set of examples without supervision, a DBN can learn to probabilistically reconstruct its inputs. The layers then act as feature detectors. After this learning step, a DBN can be further trained with supervision to perform classification. DBNs can be viewed as a composition of simple, unsupervised networks such as restricted Boltzmann machines (RBMs) or autoencoders, where each sub-network's hidden layer serves as the visible layer for the next. An RBM is an undirected, generative energy-based model with a "visible" input layer and a hidden layer and connections between but not within layers. This composition leads to a fast, layer-by-layer unsupervised training procedure, where contr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Big O Notation

Big ''O'' notation is a mathematical notation that describes the asymptotic analysis, limiting behavior of a function (mathematics), function when the Argument of a function, argument tends towards a particular value or infinity. Big O is a member of a #Related asymptotic notations, family of notations invented by German mathematicians Paul Gustav Heinrich Bachmann, Paul Bachmann, Edmund Landau, and others, collectively called Bachmann–Landau notation or asymptotic notation. The letter O was chosen by Bachmann to stand for '':wikt:Ordnung#German, Ordnung'', meaning the order of approximation. In computer science, big O notation is used to Computational complexity theory, classify algorithms according to how their run time or space requirements grow as the input size grows. In analytic number theory, big O notation is often used to express a bound on the difference between an arithmetic function, arithmetical function and a better understood approximation; one well-known exam ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Decision-making

In psychology, decision-making (also spelled decision making and decisionmaking) is regarded as the Cognition, cognitive process resulting in the selection of a belief or a course of action among several possible alternative options. It could be either Rationality, rational or irrational. The decision-making process is a reasoning process based on assumptions of value (ethics and social sciences), values, preferences and beliefs of the decision-maker. Every decision-making process produces a final choice, which may or may not prompt action. Research about decision-making is also published under the label problem solving, particularly in European psychological research. Overview Decision-making can be regarded as a Problem solving, problem-solving activity yielding a solution deemed to be optimal, or at least satisfactory. It is therefore a process which can be more or less Rationality, rational or Irrationality, irrational and can be based on explicit knowledge, explicit or tacit ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Memory

Memory is the faculty of the mind by which data or information is encoded, stored, and retrieved when needed. It is the retention of information over time for the purpose of influencing future action. If past events could not be remembered, it would be impossible for language, relationships, or personal identity to develop. Memory loss is usually described as forgetfulness or amnesia. Memory is often understood as an informational processing system with explicit and implicit functioning that is made up of a sensory processor, short-term (or working) memory, and long-term memory. This can be related to the neuron. The sensory processor allows information from the outside world to be sensed in the form of chemical and physical stimuli and attended to various levels of focus and intent. Working memory serves as an encoding and retrieval processor. Information in the form of stimuli is encoded in accordance with explicit or implicit functions by the working memory p ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Attention

Attention or focus, is the concentration of awareness on some phenomenon to the exclusion of other stimuli. It is the selective concentration on discrete information, either subjectively or objectively. William James (1890) wrote that "Attention is the taking possession by the mind, in clear and vivid form, of one out of what seem several simultaneously possible objects or trains of thought. Focalization, concentration, of consciousness are of its essence." Attention has also been described as the allocation of limited cognitive processing resources. Attention is manifested by an attentional bottleneck, in terms of the amount of data the brain can process each second; for example, in human vision, less than 1% of the visual input data stream of 1MByte/sec can enter the bottleneck, leading to inattentional blindness. Attention remains a crucial area of investigation within education, psychology, neuroscience, cognitive neuroscience, and neuropsychology. Areas of activ ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |