|

Dimensional Modelling

Dimensional modeling (DM) is part of the '' Business Dimensional Lifecycle'' methodology developed by Ralph Kimball which includes a set of methods, techniques and concepts for use in data warehouse design. The approach focuses on identifying the key business processes within a business and modelling and implementing these first before adding additional business processes, as a bottom-up approach. An alternative approach from Inmon advocates a top down design of the model of all the enterprise data using tools such as entity-relationship modeling (ER). Description Dimensional modeling always uses the concepts of facts (measures), and dimensions (context). Facts are typically (but not always) numeric values that can be aggregated, and dimensions are groups of hierarchies and descriptors that define the facts. For example, sales amount is a fact; timestamp, product, register#, store#, etc. are elements of dimensions. Dimensional models are built by business process area, e.g. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

The Kimball Lifecycle

The Kimball lifecycle is a methodology for developing data warehouses, and has been developed by Ralph Kimball and a variety of colleagues. The methodology "covers a sequence of high level tasks for the effective design, development and deployment" of a data warehouse or business intelligence system. It is considered a "bottom-up" approach to data warehousing as pioneered by Ralph Kimball, in contrast to the older "top-down" approach pioneered by Bill Inmon. Program or project planning phase According to Ralph Kimball et al., the planning phase is the start of the lifecycle. It is a planning phase in which project is a single iteration of the lifecycle while program is the broader coordination of resources. When launching a project or program Kimball et al. suggests following three focus areas: * Defining and scoping the project * Plan the project * Manage the project Program and project management This is an ongoing discipline in the project. The purpose is to keep the ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Bitmap Index

A bitmap index is a special kind of database index that uses bitmaps. Bitmap indexes have traditionally been considered to work well for ''low-cardinality columns'', which have a modest number of distinct values, either absolutely, or relative to the number of records that contain the data. The extreme case of low cardinality is Boolean data (e.g., does a resident in a city have internet access?), which has two values, True and False. Bitmap indexes use bit arrays (commonly called bitmaps) and answer queries by performing bitwise logical operations on these bitmaps. Bitmap indexes have a significant space and performance advantage over other structures for query of such data. Their drawback is they are less efficient than the traditional B-tree indexes for columns whose data is frequently updated: consequently, they are more often employed in read-only systems that are specialized for fast query - e.g., data warehouses, and generally unsuitable for online transaction processing a ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Massively Parallel

Massively parallel is the term for using a large number of computer processors (or separate computers) to simultaneously perform a set of coordinated computations in parallel. GPUs are massively parallel architecture with tens of thousands of threads. One approach is grid computing, where the processing power of many computers in distributed, diverse administrative domains is opportunistically used whenever a computer is available.''Grid computing: experiment management, tool integration, and scientific workflows'' by Radu Prodan, Thomas Fahringer 2007 pages 1–4 An example is BOINC, a volunteer-based, opportunistic grid system, whereby the grid provides power only on a best effort basis.''Parallel and Distributed Computational Intelligence'' by Francisco Fernández de Vega 2010 pages 65–68 Another approach is grouping many processors in close proximity to each other, as in a computer cluster. In such a centralized system the speed and flexibility of the interconnect ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Select (SQL)

The SQL SELECT statement returns a result set of rows, from one or more tables. A SELECT statement retrieves zero or more rows from one or more database tables or database views. In most applications, SELECT is the most commonly used data manipulation language (DML) command. As SQL is a declarative programming language, SELECT queries specify a result set, but do not specify how to calculate it. The database translates the query into a " query plan" which may vary between executions, database versions and database software. This functionality is called the " query optimizer" as it is responsible for finding the best possible execution plan for the query, within applicable constraints. The SELECT statement has many optional clauses: * SELECT list is the list of columns or SQL expressions to be returned by the query. This is approximately the relational algebra projection operation. * AS optionally provides an alias for each column or expression in the SELECT list. Thi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

View (SQL)

In a database, a view is the result set of a stored query that presents a limited perspective of the database to a user. This pre-established query command is kept in the data dictionary. Unlike ordinary '' base tables'' in a relational database, a view does not form part of the physical schema: as a result set, it is a virtual table computed or collated dynamically from data in the database when access to that view is requested. Changes applied to the data in a relevant ''underlying table'' are reflected in the data shown in subsequent invocations of the view. Views can provide advantages over tables: * Views can represent a subset of the data contained in a table. Consequently, a view can limit the degree of exposure of the underlying tables to the outer world: a given user may have permission to query the view, while denied access to the rest of the base table. * Views can join and simplify multiple tables into a single virtual table. * Views can act as aggregated tables, wh ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Slowly Changing Dimension

In data management and data warehousing, a slowly changing dimension (SCD) is a dimension that stores data which, while generally stable, may change over time, often in an unpredictable manner. This contrasts with a rapidly changing dimension, such as transactional parameters like customer ID, product ID, quantity, and price, which undergo frequent updates. Common examples of SCDs include geographical locations, customer details, or product attributes. Various methodologies address the complexities of SCD management. The Kimball Toolkit has popularized a categorization of techniques for handling SCD attributes as Types 1 through 6. These range from simple overwrites (Type 1), to creating new rows for each change (Type 2), adding new attributes (Type 3), maintaining separate history tables (Type 4), or employing hybrid approaches (Type 6 and 7). Type 0 is available to model an attribute as not really changing at all. Each type offers a trade-off between historical accuracy, data ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Immutable Object

In object-oriented (OO) and functional programming, an immutable object (unchangeable object) is an object whose state cannot be modified after it is created.Goetz et al. ''Java Concurrency in Practice''. Addison Wesley Professional, 2006, Section 3.4. Immutability This is in contrast to a mutable object (changeable object), which can be modified after it is created. In some cases, an object is considered immutable even if some internally used attributes change, but the object's state appears unchanging from an external point of view. For example, an object that uses to cache the results of expensive computations could still be considered an immutable object. Strings and other concrete objects are typically expressed as immutable objects to improve readability and runtime efficiency in object-oriented programming. Immutable objects are also useful because they are inherently thread-safe. Other benefits are that they are simpler to understand and reason about and offer higher ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

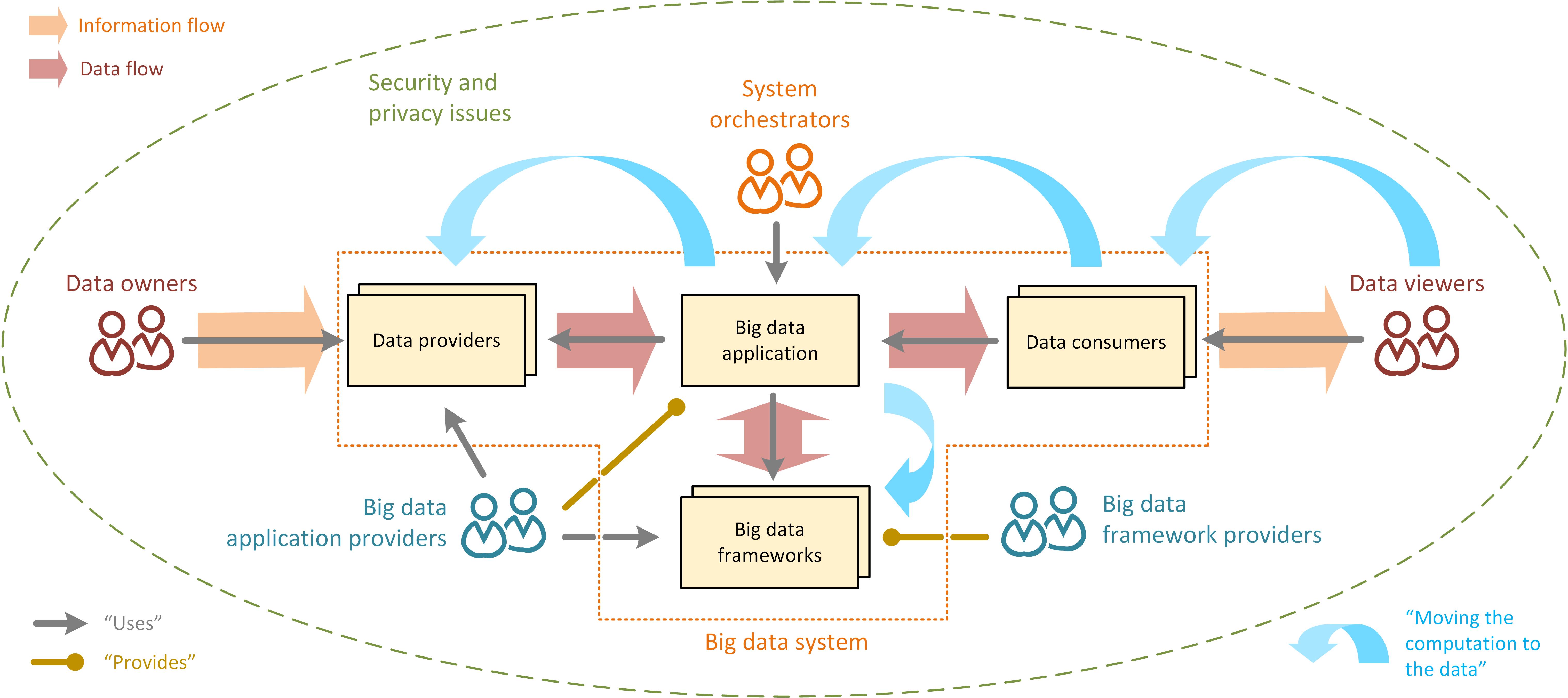

Big Data

Big data primarily refers to data sets that are too large or complex to be dealt with by traditional data processing, data-processing application software, software. Data with many entries (rows) offer greater statistical power, while data with higher complexity (more attributes or columns) may lead to a higher false discovery rate. Big data analysis challenges include Automatic identification and data capture, capturing data, Computer data storage, data storage, data analysis, search, Data sharing, sharing, Data transmission, transfer, Data visualization, visualization, Query language, querying, updating, information privacy, and data source. Big data was originally associated with three key concepts: ''volume'', ''variety'', and ''velocity''. The analysis of big data presents challenges in sampling, and thus previously allowing for only observations and sampling. Thus a fourth concept, ''veracity,'' refers to the quality or insightfulness of the data. Without sufficient investm ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Apache Hadoop

Apache Hadoop () is a collection of open-source software utilities for reliable, scalable, distributed computing. It provides a software framework for distributed storage and processing of big data using the MapReduce programming model. Hadoop was originally designed for computer clusters built from commodity hardware, which is still the common use. It has since also found use on clusters of higher-end hardware. All the modules in Hadoop are designed with a fundamental assumption that hardware failures are common occurrences and should be automatically handled by the framework. Overview The core of Apache Hadoop consists of a storage part, known as Hadoop Distributed File System (HDFS), and a processing part which is a MapReduce programming model. Hadoop splits files into large blocks and distributes them across nodes in a cluster. It then transfers packaged code into nodes to process the data in parallel. This approach takes advantage of data locality, where nodes manipulate ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Query Optimization

Query optimization is a feature of many relational database management systems and other databases such as NoSQL and graph databases. The query optimizer attempts to determine the most efficient way to execute a given query by considering the possible query plans. Generally, the query optimizer cannot be accessed directly by users: once queries are submitted to the database server, and parsed by the parser, they are then passed to the query optimizer where optimization occurs. However, some database engines allow guiding the query optimizer with hints. A query is a request for information from a database. It can be as simple as "find the address of a person with Social Security number 123-45-6789," or more complex like "find the average salary of all the employed married men in California between the ages 30 to 39 who earn less than their spouses." The result of a query is generated by processing the rows in a database in a way that yields the requested information. Since data ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Third Normal Form

Third normal form (3NF) is a database schema design approach for relational databases which uses normalizing principles to reduce the duplication of data, avoid data anomalies, ensure referential integrity, and simplify data management. It was defined in 1971 by Edgar F. Codd, an English computer scientist who invented the relational model for database management. A database relation (e.g. a database table) is said to meet third normal form standards if all the attributes (e.g. database columns) are functionally dependent on solely a key, except the case of functional dependency whose right hand side is a prime attribute (an attribute which is strictly included into some key). Codd defined this as a relation in second normal form where all non-prime attributes depend only on the candidate keys and do not have a transitive dependency on another key. A hypothetical example of a failure to meet third normal form would be a hospital database having a table of patients which ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

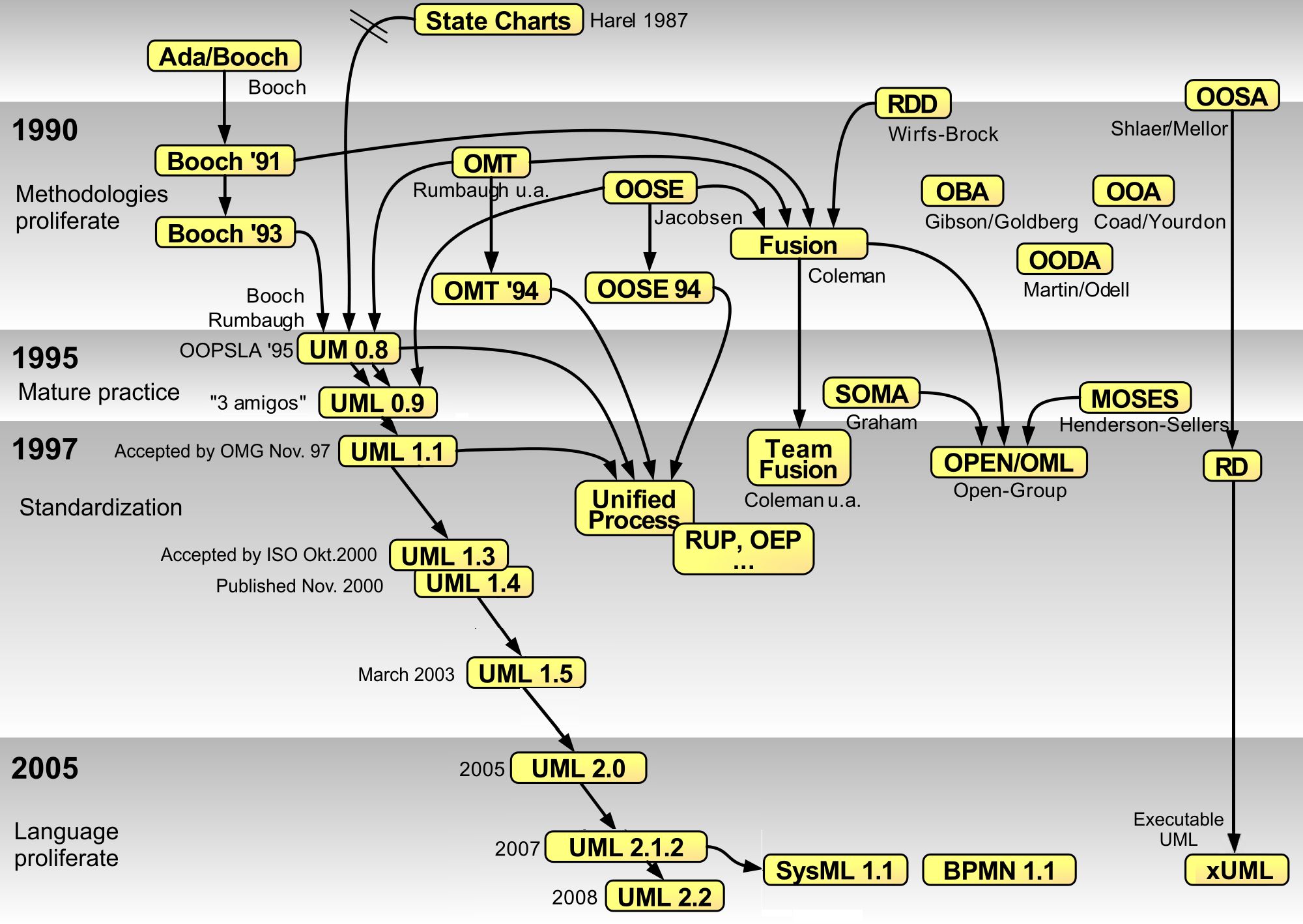

Unified Modeling Language

The Unified Modeling Language (UML) is a general-purpose visual modeling language that is intended to provide a standard way to visualize the design of a system. UML provides a standard notation for many types of diagrams which can be roughly divided into three main groups: behavior diagrams, interaction diagrams, and structure diagrams. The creation of UML was originally motivated by the desire to standardize the disparate notational systems and approaches to software design. It was developed at Rational Software in 1994–1995, with further development led by them through 1996. In 1997, UML was adopted as a standard by the Object Management Group (OMG) and has been managed by this organization ever since. In 2005, UML was also published by the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC) as the ISO/IEC 19501 standard. Since then the standard has been periodically revised to cover the latest revision of UML. In ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |