Diehard Tests

The diehard tests are a battery of statistical tests for measuring the quality of a random number generator. They were developed by George Marsaglia over several years and first published in 1995 on a CD-ROM of random numbers.

Test overview:

Birthday spacings: Choose random points on a large interval. The spacings between the points should be asymptotically exponentially distributed. The name is based on the birthday paradox.

Overlapping permutations: Analyze sequences of five consecutive random numbers. The 120 possible orderings should occur with statistically equal probability.

Ranks of matrices: Select some number of bits from some number of random numbers to form a matrix over {0,1}, then determine the rank of the matrix. Count the ranks.

Monkey tests: Treat sequences of some number of bits as "words". Count the overlapping words in a stream. The number of "words" that do not appear should follow a known distribution. The name is based on the infinite monkey theor ...
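The permutation idea can be sketched in a few lines of Python. This is a simplified illustration, not Marsaglia's actual test: it uses non-overlapping windows of five values (so the windows are independent) and a plain Pearson-style statistic over the 120 orderings. The function name `ordering_counts`, the seed, and the sample size are assumptions chosen for the example.

```python
import random
from collections import Counter
from itertools import permutations


def ordering_counts(values, width=5):
    """Count the relative orderings seen in non-overlapping windows of `width` values."""
    counts = Counter()
    for start in range(0, len(values) - width + 1, width):
        window = values[start:start + width]
        # The ordering is the tuple of positions that sorts the window.
        ordering = tuple(sorted(range(width), key=window.__getitem__))
        counts[ordering] += 1
    return counts


rng = random.Random(12345)  # fixed seed so the run is repeatable
windows = 60_000
samples = [rng.random() for _ in range(5 * windows)]
counts = ordering_counts(samples)

# Under the null hypothesis each of the 5! = 120 orderings is equally likely.
expected = windows / 120
chi_square = sum(
    (counts[p] - expected) ** 2 / expected for p in permutations(range(5))
)
print(f"distinct orderings seen: {len(counts)}, chi-square: {chi_square:.1f}")
```

With 120 categories the statistic has 119 degrees of freedom, so values far above that would suggest the orderings are not equally likely.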

Statistical Test

A statistical hypothesis test is a method of statistical inference used to decide whether the data at hand sufficiently support a particular hypothesis. Hypothesis testing allows us to make probabilistic statements about population parameters.

History, early use: While hypothesis testing was popularized early in the 20th century, early forms were used in the 1700s. The first use is credited to John Arbuthnot (1710), followed by Pierre-Simon Laplace (1770s), in analyzing the human sex ratio at birth.

Modern origins and early controversy: Modern significance testing is largely the product of Karl Pearson (p-value, Pearson's chi-squared test), William Sealy Gosset (Student's t-distribution), and Ronald Fisher ("null hypothesis", analysis of variance, "significance test"), while hypothesis testing was developed by Jerzy Neyman and Egon Pearson (son of Karl). Ronald Fisher began his life in statistics as a Bayesian (Zabell 1992), but Fisher soon grew disenchanted ...

Exponential Distribution

In probability theory and statistics, the exponential distribution is the probability distribution of the time between events in a Poisson point process, i.e., a process in which events occur continuously and independently at a constant average rate. It is a particular case of the gamma distribution. It is the continuous analogue of the geometric distribution, and it has the key property of being memoryless. In addition to being used for the analysis of Poisson point processes, it is found in various other contexts.

The exponential distribution is not the same as the class of exponential families of distributions. This is a large class of probability distributions that includes the exponential distribution as one of its members, but also includes many other distributions, like the normal, binomial, gamma, and Poisson distributions.

Definitions, probability density function: The probability density function (pdf) of an exponential distribution is : f(x;\lambda) = \begin \l ...
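The memoryless property mentioned above can be checked numerically. The sketch below assumes the standard survival function P(X > x) = exp(-rate * x) for x >= 0; the function name `survival` and the values of `rate`, `s`, and `t` are arbitrary choices for illustration.

```python
import math


def survival(x, rate):
    """P(X > x) for an exponential distribution with the given rate."""
    return math.exp(-rate * x) if x >= 0 else 1.0


rate, s, t = 0.5, 2.0, 3.0

# Memorylessness: having already waited s, the chance of waiting t more
# is the same as the chance of waiting t from scratch.
conditional = survival(s + t, rate) / survival(s, rate)
print(math.isclose(conditional, survival(t, rate)))
```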

TestU01

TestU01 is a software library, implemented in the ANSI C language, that offers a collection of utilities for the empirical randomness testing of random number generators (RNGs). The library was first introduced in 2007 by Pierre L’Ecuyer and Richard Simard of the Université de Montréal (L’Ecuyer & Simard 2007, "TestU01: A Software Library in ANSI C for Empirical Testing of Random Number Generators").

Randomness Test

A randomness test (or test for randomness), in data evaluation, is a test used to analyze the distribution of a set of data to see if it can be described as random (patternless). In stochastic modeling, as in some computer simulations, the hoped-for randomness of potential input data can be verified, by a formal test for randomness, to show that the data are valid for use in simulation runs. In some cases, data reveals an obvious non-random pattern, as with so-called "runs in the data" (such as expecting random 0–9 but finding "4 3 2 1 0 4 3 2 1..." and rarely going above 4). If a selected set of data fails the tests, then parameters can be changed or other randomized data can be used which does pass the tests for randomness. Background The issue of randomness is an important philosophical and theoretical question. Tests for randomness can be used to determine whether a data set has a recognisable pattern, which would indicate that the process that generated it is significa ...

Chi-square Test

A chi-squared test (also chi-square or χ² test) is a statistical hypothesis test used in the analysis of contingency tables when the sample sizes are large. In simpler terms, this test is primarily used to examine whether two categorical variables (the two dimensions of the contingency table) are independent in influencing the test statistic (the values within the table). The test is valid when the test statistic is chi-squared distributed under the null hypothesis, specifically Pearson's chi-squared test and variants thereof. Pearson's chi-squared test is used to determine whether there is a statistically significant difference between the expected frequencies and the observed frequencies in one or more categories of a contingency table. For contingency tables with smaller sample sizes, Fisher's exact test is used instead. In the standard applications of this test, the observations are classified into mutually exclusive classes. If the null hypothesis that there are no differ ...
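As a small worked example of comparing observed with expected frequencies, the sketch below computes Pearson's statistic, the sum of (observed - expected)² / expected over the categories. The die-roll counts are hypothetical data invented for the illustration, and `pearson_chi_square` is a name chosen here, not a library function.

```python
def pearson_chi_square(observed, expected):
    """Pearson's statistic: sum of (observed - expected)^2 / expected per category."""
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


# Hypothetical counts for faces 1..6 from 120 rolls of a die.
observed = [22, 17, 20, 26, 22, 13]
expected = [20] * 6  # a fair die gives 120 / 6 rolls per face

statistic = pearson_chi_square(observed, expected)
print(f"chi-square = {statistic:.2f} with {len(observed) - 1} degrees of freedom")
```

For 5 degrees of freedom the 5% critical value is about 11.07, so a statistic of this size would not lead to rejecting the hypothesis that the die is fair.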

Chi-square Goodness Of Fit Test

A chi-squared test (also chi-square or χ² test) is a statistical hypothesis test used in the analysis of contingency tables when the sample sizes are large. In simpler terms, this test is primarily used to examine whether two categorical variables (the two dimensions of the contingency table) are independent in influencing the test statistic (the values within the table). The test is valid when the test statistic is chi-squared distributed under the null hypothesis, specifically Pearson's chi-squared test and variants thereof. Pearson's chi-squared test is used to determine whether there is a statistically significant difference between the expected frequencies and the observed frequencies in one or more categories of a contingency table. For contingency tables with smaller sample sizes, Fisher's exact test is used instead. In the standard applications of this test, the observations are classified into mutually exclusive classes. If the null hypothesis that there are no diff ...

Poisson-distributed

In probability theory and statistics, the Poisson distribution is a discrete probability distribution that expresses the probability of a given number of events occurring in a fixed interval of time or space if these events occur with a known constant mean rate and independently of the time since the last event. It is named after French mathematician Siméon Denis Poisson. The Poisson distribution can also be used for the number of events in other specified interval types such as distance, area, or volume. For instance, a call center receives an average of 180 calls per hour, 24 hours a day. The calls are independent; receiving one does not change the probability of when the next one will arrive. The number of calls received during any minute has a Poisson probability distribution with mean 3: the most likely numbers are 2 and 3, but 1 and 4 are also likely, and there is a small probability of it being as low as zero and a very small probability it could be 10. ...
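The call-center figures can be reproduced with the Poisson probability mass function, P(k) = exp(-mean) * mean^k / k!. The sketch below is illustrative only; `poisson_pmf` is a helper defined here rather than a library call.

```python
import math


def poisson_pmf(k, mean):
    """Probability of exactly k events when the expected count is `mean`."""
    return math.exp(-mean) * mean ** k / math.factorial(k)


mean_per_minute = 180 / 60  # 180 calls per hour is 3 calls per minute
probabilities = {k: poisson_pmf(k, mean_per_minute) for k in range(11)}

for k, p in probabilities.items():
    print(f"P({k} calls in a minute) = {p:.4f}")
```

The values for 2 and 3 calls come out equal and largest, while the values for 0 and 10 calls are small, matching the description in the text.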

Craps

Craps is a dice game in which players bet on the outcomes of the roll of a pair of dice. Players can wager money against each other (playing "street craps") or against a bank ("casino craps"). Because it requires little equipment, "street craps" can be played in informal settings. While shooting craps, players may use slang terminology to place bets and actions. History In 1788, "Krabs" (later spelled crabs) was an English variation on the dice game hazard (also spelled hasard). Craps developed in the United States from a simplification of the western European game of hazard. The origins of hazard are obscure and may date to the Crusades. Hazard was brought from London to New Orleans in approximately 1805 by the returning Bernard Xavier Philippe de Marigny de Mandeville, the young gambler and scion of a family of wealthy landowners in colonial Louisiana. Although in hazard the dice shooter may choose any number from five to nine to be his main number, de Marigny si ...

Normal Distribution

In statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is

f(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}

The parameter \mu is the mean or expectation of the distribution (and also its median and mode), while the parameter \sigma is its standard deviation. The variance of the distribution is \sigma^2. A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable whose distribution converges to a normal dist ...
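The central limit theorem can be illustrated with a short simulation. The sketch below averages uniform random numbers, which have mean 1/2 and variance 1/12, and compares the spread of those averages with the value the theorem predicts. The seed, the sample size of 30, and the 20,000 repetitions are arbitrary assumptions for the example.

```python
import math
import random
import statistics

rng = random.Random(2024)  # fixed seed so the run is repeatable
sample_size = 30
repetitions = 20_000

# Each entry is the average of `sample_size` uniform values on [0, 1).
averages = [
    statistics.fmean(rng.random() for _ in range(sample_size))
    for _ in range(repetitions)
]

mean_of_averages = statistics.fmean(averages)
spread = statistics.pstdev(averages)
# Standard deviation of an average of n values: sqrt(variance / n).
predicted_spread = math.sqrt((1 / 12) / sample_size)

# For a normal distribution roughly 68% of values lie within one
# standard deviation of the mean.
within_one_sd = sum(
    abs(a - 0.5) <= predicted_spread for a in averages
) / repetitions

print(f"mean {mean_of_averages:.4f}, spread {spread:.4f}, "
      f"predicted {predicted_spread:.4f}, within one sd {within_one_sd:.3f}")
```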

Random Number Generator

Random number generation is a process by which, often by means of a random number generator (RNG), a sequence of numbers or symbols that cannot be reasonably predicted better than by random chance is generated. This means that the particular outcome sequence will contain some patterns detectable in hindsight but unpredictable to foresight. True random number generators can be hardware random-number generators (HRNGs), in which each generated value is a function of the current value of a physical environment's attribute that is constantly changing in a manner that is practically impossible to model. This contrasts with pseudorandom number generators (PRNGs), which generate numbers that only look random but are in fact pre-determined; their output can be reproduced simply by knowing the state of the PRNG. Various applications of randomness have led to the development of several different met ...
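The point that a PRNG's output can be reproduced from its state is easy to demonstrate with Python's standard `random` module, whose `getstate` and `setstate` methods save and restore the generator's internal state. This is a minimal sketch; the variable names are chosen for the example.

```python
import random

rng = random.Random()        # seeded from an OS entropy source by default
saved_state = rng.getstate() # snapshot of the generator's internal state

first_run = [rng.random() for _ in range(5)]

rng.setstate(saved_state)    # rewind the generator to the snapshot
second_run = [rng.random() for _ in range(5)]

# Knowing the state is enough to replay exactly the same "random" numbers.
print(first_run == second_run)
```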

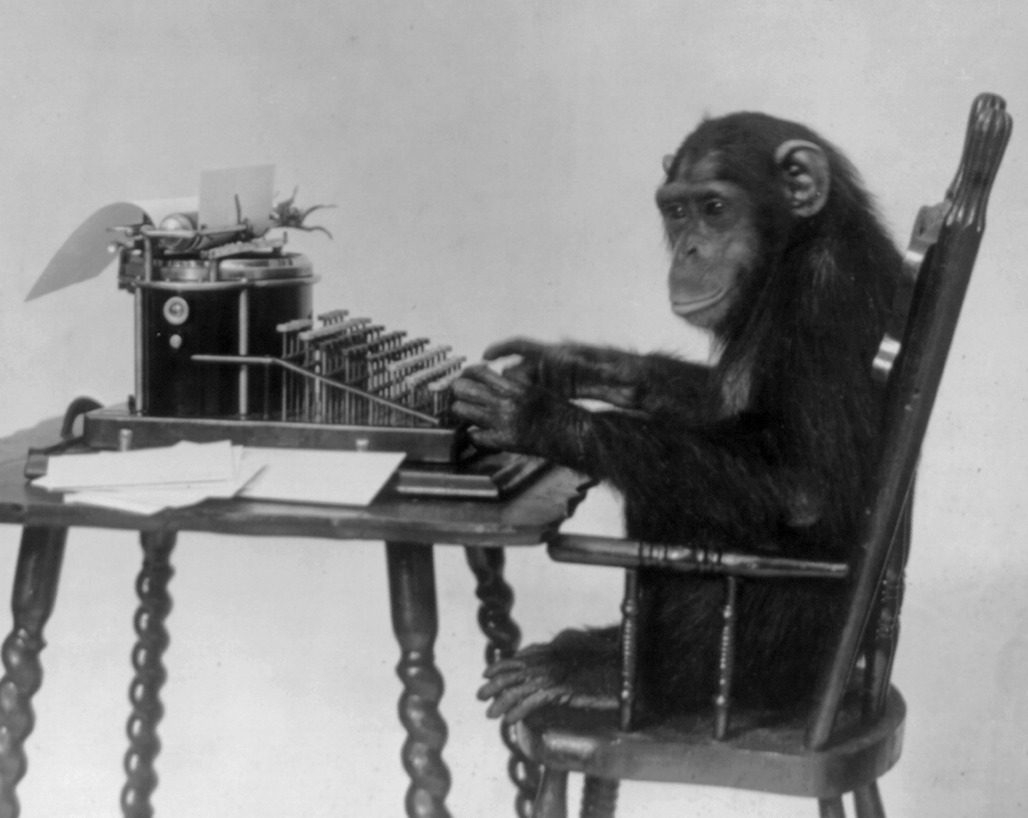

Infinite Monkey Theorem

The infinite monkey theorem states that a monkey hitting keys at random on a typewriter keyboard for an infinite amount of time will almost surely type any given text, such as the complete works of William Shakespeare. In fact, the monkey would almost surely type every possible finite text an infinite number of times. However, the probability that monkeys filling the entire observable universe would type a single complete work, such as Shakespeare's Hamlet, is so tiny that the chance of it occurring during a period of time hundreds of thousands of orders of magnitude longer than the age of the universe is extremely low (but technically not zero). The theorem can be generalized to state that any sequence of events which has a non-zero probability of happening will almost certainly eventually occur, given enough time. In this context, "almost surely" is a mathematical term meaning the event happens with probability 1, and the "monkey" is not an actual monkey, but a metaph ...