
Data Space

A dataspace is an abstraction in data management that aims to overcome some of the problems encountered in a data integration system. A dataspace is defined as a set of "participants", or data sources, and the relations between them: for example, that dataset A is a duplicate of dataset B. It can contain all data sources of an organization regardless of their format, physical location, or data model. The dataspace then provides a unified interface to query data regardless of format, sometimes in a "best-effort" fashion, and ways to further integrate the data when necessary. This is very different from a traditional relational database, which requires that all data be in the same format. The aim of the concept is to reduce the effort required to set up a data integration system by relying on existing matching and mapping generation techniques, and to improve the system in a "pay-as-you-go" fashion as it is used. Labor-intensive aspects of data integration are postponed until they are a ...
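The participant-and-relation model described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class names `Participant` and `Dataspace`, the `keyword_query` method, the `overlaps_with` relation label, and the sample records are all hypothetical and do not come from any particular dataspace system.

```python
from dataclasses import dataclass, field


@dataclass
class Participant:
    """A data source in the dataspace, kept in its native shape."""
    name: str
    records: list  # list of dicts; schemas may differ between participants


@dataclass
class Dataspace:
    """Hypothetical container: participants plus relations between them."""
    participants: dict = field(default_factory=dict)
    relations: list = field(default_factory=list)  # (source, relation, target)

    def add(self, participant):
        self.participants[participant.name] = participant

    def relate(self, source, relation, target):
        self.relations.append((source, relation, target))

    def keyword_query(self, term):
        """Best-effort search: match the term against any value of any record."""
        term = term.lower()
        hits = []
        for p in self.participants.values():
            for record in p.records:
                if any(term in str(v).lower() for v in record.values()):
                    hits.append((p.name, record))
        return hits


space = Dataspace()
# Two sources with different field names; no common schema is required.
space.add(Participant("crm", [{"customer": "Ada Lovelace", "city": "London"}]))
space.add(Participant("billing", [{"payer_name": "ada lovelace", "amount": 42}]))
space.relate("billing", "overlaps_with", "crm")

results = space.keyword_query("lovelace")
```

The query returns matches from both sources even though neither was mapped to a shared schema first, which is the "pay-as-you-go" idea: tighter integration can be added later, only where it is needed.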

European Commission

The European Commission (EC) is the primary executive arm of the European Union (EU). It operates as a cabinet government, with a number of members of the Commission (informally known as "commissioners") corresponding to two thirds of the number of member states, unless the European Council, acting unanimously, decides to alter this number. The current number of commissioners is 27, including the president. It includes an administrative body of about 32,000 European civil servants. The commission is divided into departments known as Directorates-General (DGs) that can be likened to departments or ministries, each headed by a director-general who is responsible to a commissioner. Currently, there is one member per member state, but members are bound by their oath of office to represent the genera ...

Semantic Integration

Semantic integration is the process of interrelating information from diverse sources, for example calendars and to-do lists, email archives, presence information (physical, psychological, and social), documents of all sorts, contacts (including social graphs), search results, and advertising and marketing relevance derived from them. In this regard, semantics focuses on the organization of and action upon information by acting as an intermediary between heterogeneous data sources, which may conflict not only by structure but also context or value.

Applications and methods: In enterprise application integration (EAI), semantic integration can facilitate or even automate the communication between computer systems using metadata publishing. Metadata publishing potentially offers the ability to automatically link ontologies. One approach to (semi-)automated ontology mapping requires the definition of a semantic distance or its inverse, semantic similarit ...

Linked Data

In computing, linked data is structured data which is interlinked with other data so it becomes more useful through semantic queries. It builds upon standard Web technologies such as HTTP, RDF and URIs, but rather than using them to serve web pages only for human readers, it extends them to share information in a way that can be read automatically by computers. Part of the vision of linked data is for the Internet to become a global database. Tim Berners-Lee, director of the World Wide Web Consortium (W3C), coined the term in a 2006 design note about the Semantic Web project. Linked data may also be open data, in which case it is usually described as Linked Open Data.

Principles: In his 2006 "Linked Data" note, Tim Berners-Lee outlined four principles of linked data, paraphrased along the following lines:

1. Uniform Resource Identifiers (URIs) should be used to name and identify individual things.
2. HTTP URIs should be used to allow these things to be looked up, interpreted, and ...
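As a rough illustration of naming things with HTTP URIs and linking them to each other, the sketch below stores subject-predicate-object statements as plain Python tuples. It deliberately avoids any RDF library; the `http://example.org/` URIs and the helper `follow` are assumptions made for this example only.

```python
# Triples are (subject, predicate, object); the URIs are illustrative only.
EX = "http://example.org/"

triples = [
    (EX + "alice", EX + "knows", EX + "bob"),
    (EX + "alice", EX + "name", "Alice"),
    (EX + "bob", EX + "name", "Bob"),
]


def follow(uri, predicate, graph):
    """Return every object linked from `uri` through `predicate`."""
    return [o for s, p, o in graph if s == uri and p == predicate]


# Follow a link from one named thing to another, then read its name.
friends = follow(EX + "alice", EX + "knows", triples)
names = [n for f in friends for n in follow(f, EX + "name", triples)]
```

Because the object of one statement is itself a URI, a consumer can hop from `alice` to `bob` and discover further statements, which is the kind of traversal linked data is meant to enable.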

Information Integration

Information integration (II) is the merging of information from heterogeneous sources with differing conceptual, contextual and typographical representations. It is used in data mining and consolidation of data from unstructured or semi-structured resources. Typically, information integration refers to textual representations of knowledge but is sometimes applied to rich-media content. Information fusion, which is a related term, involves the combination of information into a new set of information towards reducing redundancy and uncertainty. Examples of technologies available to integrate information include deduplication and string metrics, which allow the detection of similar text in different data sources by fuzzy matching. A host of methods for these research areas is available, such as those presented by the International Society of Information Fusion. Other methods rely on causal estimates of the ou ...
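Fuzzy matching with a string metric can be sketched using Python's standard `difflib` module. This is a minimal example, not a recommended production approach: the `similarity` and `find_duplicates` helpers, the 0.85 threshold, and the sample company names are assumptions chosen for illustration.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Return a ratio in [0, 1]; 1.0 means the normalized strings are identical."""
    return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()


def find_duplicates(left, right, threshold=0.85):
    """Pair up entries from two sources whose similarity meets the threshold."""
    return [(l, r) for l in left for r in right if similarity(l, r) >= threshold]


# Hypothetical records from two different data sources.
source_a = ["Acme Corporation", "Globex Inc."]
source_b = ["ACME Corporaton", "Initech"]

pairs = find_duplicates(source_a, source_b)
```

Here the misspelled "ACME Corporaton" is paired with "Acme Corporation" while unrelated names fall below the threshold. Comparing every pair is quadratic, so larger datasets usually need some form of pre-grouping before a comparison like this.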

Data Mapping

In computing and data management, data mapping is the process of creating data element mappings between two distinct data models. Data mapping is used as a first step for a wide variety of data integration tasks, including:

* Data transformation or data mediation between a data source and a destination
* Identification of data relationships as part of data lineage analysis
* Discovery of hidden sensitive data, such as the last four digits of a social security number hidden in another user ID, as part of a data masking or de-identification project
* Consolidation of multiple databases into a single database and identifying redundant columns of data for consolidation or elimination

For example, a company that would like to transmit and receive purchases and invoices with other companies might use data mapping to create data maps from its own data to standardized ANSI ASC X12 messages for items such as purchase orders and invoices.

Standards: X12 standards are generic E ...
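A data element mapping between two models can be expressed as a small lookup table that names, for each target field, the source field and a conversion. The sketch below is a generic illustration: the field names, the `FIELD_MAP` table, and the `apply_mapping` helper are hypothetical and are not taken from X12 or any other standard.

```python
# Each entry maps a target field to (source field, converter).
FIELD_MAP = {
    "order_id": ("OrderNo", str),
    "customer_name": ("CustName", str.strip),
    "total_cents": ("Total", lambda v: round(float(v) * 100)),
}


def apply_mapping(source_record, field_map):
    """Build a target record by pulling and converting each mapped field."""
    return {
        target: convert(source_record[source])
        for target, (source, convert) in field_map.items()
    }


# A record in the (hypothetical) source model.
legacy_order = {"OrderNo": 1001, "CustName": "  Ada Lovelace ", "Total": "19.99"}
mapped = apply_mapping(legacy_order, FIELD_MAP)
```

Keeping the mapping as data rather than hard-coded logic means the same `apply_mapping` function can serve several source models, each with its own table.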

Data Integration

Data integration refers to the process of combining, sharing, or synchronizing data from multiple sources to provide users with a unified view. There is a wide range of possible applications for data integration, from commercial (such as when a business merges multiple databases) to scientific (combining research data from different bioinformatics repositories). The decision to integrate data tends to arise when the volume and complexity of data (that is, big data) and the need to share existing data explode. It has become the focus of extensive theoretical work, and numerous open problems remain unsolved. Data integration encourages collaboration between internal as well as external users. The data being integrated must be received from a heterogeneous database system and transformed to a single coherent data store that provides synchronous data across a network of files for clients. A common use of data integration is in data mining when analyzing and extracting information from exist ...

European Green Deal

The European Green Deal, approved in 2020, is a set of policy initiatives by the European Commission with the overarching aim of making the European Union (EU) climate neutral in 2050. The plan is to review each existing law on its climate merits, and also introduce new legislation on the circular economy (CE), building renovation, biodiversity, farming and innovation. The president of the European Commission, Ursula von der Leyen, stated that the European Green Deal would be Europe's "man on the moon moment". On 13 December 2019, the European Council decided to press ahead with the plan, with an opt-out for Poland. On 15 January 2020, the European Parliament voted to support the deal as well, with requests for higher ambition. A year later, the European Climate Law was passed, which legislated that greenhouse gas emissions should be 55% lower in 2030 compared to 1990. The Fit for 55 package is a large set of proposed legislation detailing how the European Union plans to rea ...

Data Mining

Data mining is the process of extracting and finding patterns in massive data sets involving methods at the intersection of machine learning, statistics, and database systems. Data mining is an interdisciplinary subfield of computer science and statistics with an overall goal of extracting information (with intelligent methods) from a data set and transforming the information into a comprehensible structure for further use. Data mining is the analysis step of the "knowledge discovery in databases" process, or KDD. Aside from the raw analysis step, it also involves database and data management aspects, data pre-processing, model and inference considerations, interestingness metrics, complexity considerations, post-processing of discovered structures, visualization, and online updating. The term "data mining" is a misnomer because the goal is the extraction of patterns and knowledge from large amounts of data, not the extraction (mining) of data itself. It also is a buzzwo ...

Data Management

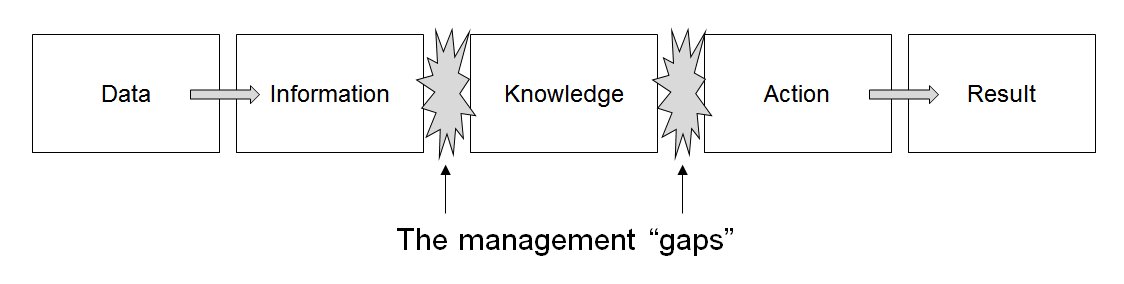

Data management comprises all disciplines related to handling data as a valuable resource; it is the practice of managing an organization's data so it can be analyzed for decision making.

Concept: The concept of data management emerged alongside the evolution of computing technology. In the 1950s, as computers became more prevalent, organizations began to grapple with the challenge of organizing and storing data efficiently. Early methods relied on punch cards and manual sorting, which were labor-intensive and prone to errors. The introduction of database management systems in the 1970s marked a significant milestone, enabling structured storage and retrieval of data. By the 1980s, relational database models revolutionized data management, emphasizing the importance of data as an asset and fostering a data-centric mindset in business. This era also saw the rise of data governance practices, which prioritized the organization and regulation of data to ensure quality and complian ...

Keyword Search

In computing, a search engine is an information retrieval software system designed to help find information stored on one or more computer systems. Search engines discover, crawl, transform, and store information for retrieval and presentation in response to user queries. The search results are usually presented in a list and are commonly called "hits". The most widely used type of search engine is a web search engine, which searches for information on the World Wide Web. A search engine normally consists of four components, as follows: a search interface, a crawler (also known as a spider or bot), an indexer, and a database. The crawler traverses a document collection, deconstructs document text, and assigns surrogates for storage in the search engine index. Online search engines store images, link data and metadata for the document.

How search engines work: Search engines provide an interface to a group of items that enables users to specify criteria about an item of interest ...
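The indexer and query steps described above are commonly built around an inverted index, which maps each term to the documents that contain it. The sketch below is one simple way to do this, assuming an in-memory document collection; the function names, the AND-only query semantics, and the sample documents are choices made for this example.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(documents):
    """Map each term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def search(index, query):
    """Return ids of documents containing every query term (AND semantics)."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


# A tiny stand-in for the crawled document collection.
docs = {
    1: "Data integration combines data from multiple sources.",
    2: "A dataspace offers best-effort queries over many sources.",
    3: "Data mining finds patterns in large data sets.",
}
index = build_index(docs)
hits = search(index, "data sources")
```

Only document 1 contains both "data" and "sources" as whole terms; document 2 contains "dataspace", which this tokenizer treats as a different term. Real engines add ranking, stemming, and persistent storage on top of this basic structure.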