Data Profiling

Data profiling is the process of examining the data available from an existing information source (e.g. a database or a file) and collecting statistics or informative summaries about that data. The purpose of these statistics may be to:

1. Find out whether existing data can be easily used for other purposes
2. Improve the ability to search data by tagging it with keywords or descriptions, or by assigning it to a category
3. Assess data quality, including whether the data conforms to particular standards or patterns
4. Assess the risk involved in integrating data in new applications, including the challenges of joins
5. Discover metadata of the source database, including value patterns and distributions, key candidates, foreign-key candidates, and functional dependencies
6. Assess whether known metadata accurately describes the actual values in the source database
7. Understand data challenges early in any data-intensive project, so that late project surprises are avoided

Finding dat ...
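As a minimal sketch of the kind of statistics listed above, the following Python example profiles a small in-memory table. The function names `profile` and `value_pattern`, the sample rows, and the pattern convention (digits become `9`, letters become `A`) are assumptions made for illustration, not part of any particular profiling tool.

```python
from collections import Counter
import re


def value_pattern(value):
    """Abstract a value into a shape: digits become 9, letters become A."""
    text = re.sub(r"[0-9]", "9", str(value))
    return re.sub(r"[A-Za-z]", "A", text)


def profile(rows):
    """Collect per-column summaries for a list of dict-shaped rows."""
    columns = sorted({name for row in rows for name in row})
    report = {}
    for name in columns:
        values = [row.get(name) for row in rows]
        present = [v for v in values if v is not None]
        distinct = set(present)
        report[name] = {
            "count": len(values),
            "nulls": len(values) - len(present),
            "distinct": len(distinct),
            "patterns": Counter(value_pattern(v) for v in present),
            # A key candidate has no missing values and no repeated values.
            "key_candidate": bool(values)
            and len(present) == len(values)
            and len(distinct) == len(values),
        }
    return report


# Hypothetical sample data.
rows = [
    {"id": 1, "zip": "12345", "city": "Springfield"},
    {"id": 2, "zip": "1234", "city": "Springfield"},
    {"id": 3, "zip": None, "city": "Shelbyville"},
]
report = profile(rows)
for column, stats in report.items():
    print(column, stats)
```

In this sample, `id` is reported as a key candidate, while `zip` shows one missing value and two different value patterns, which is the sort of finding that would prompt a data quality check.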

Computer File

A computer file is a resource for recording data on a computer storage device, primarily identified by its filename. Just as words can be written on paper, so too can data be written to a computer file. Files can be shared with and transferred between computers and mobile devices via removable media, networks, or the Internet. Different types of computer files are designed for different purposes. A file may be designed to store a written message, a document, a spreadsheet, an image, a video, a program, or any of a wide variety of other kinds of data. Certain files can store multiple data types at once. By using computer programs, a person can open, read, change, save, and close a computer file. Computer files may be reopened, modified, and copied an arbitrary number of times. Files are typically organized in a file syst ...

Master Data Management

Master data management (MDM) is a discipline in which business and information technology collaborate to ensure the uniformity, accuracy, stewardship, semantic consistency, and accountability of the enterprise's official shared master data assets.

Reasons for master data management

* Data consistency and accuracy: MDM ensures that the organization's critical data is consistent and accurate across all systems, reducing discrepancies and errors caused by multiple, siloed copies of the same data.
* Improved decision-making: By providing a single version of the truth, MDM aims to have business leaders make informed, data-driven decisions, and improve overall business performance.
* Operational efficiency: With consistent and accurate data, operational processes such as reporting, inventory management, and customer service become more efficient.
* Regulatory compliance: MDM tries to help organizations comply with industry ...

Data Analysis

Data analysis is the process of inspecting, cleansing, transforming, and modeling data with the goal of discovering useful information, informing conclusions, and supporting decision-making. Data analysis has multiple facets and approaches, encompassing diverse techniques under a variety of names, and is used in different business, science, and social science domains. In today's business world, data analysis plays a role in making decisions more scientific and helping businesses operate more effectively. Data mining is a particular data analysis technique that focuses on statistical modeling and knowledge discovery for predictive rather than purely descriptive purposes, while business intelligence covers data analysis that relies heavily on aggregation, focusing mainly on business information. In statistical applications, data analysis can be divided into descriptive statistics, exploratory data analysis (EDA), and Statistical h ...

Analysis Paralysis

Analysis paralysis (or paralysis by analysis) describes an individual or group process where overanalyzing or overthinking a situation can cause forward motion or decision-making to become "paralyzed", meaning that no solution or course of action is decided upon within a natural time frame. A situation may be deemed too complicated and a decision is never made, or made much too late, due to anxiety that a potentially larger problem may arise. A person may desire a perfect solution, but may fear making a decision that could result in error, while on the way to a better solution. Equally, a person may hold that a superior solution is a short step away, and stall in its endless pursuit, with no concept of diminishing returns. On the opposite end of the time spectrum is the phrase extinct by instinct, which is making a fatal decision based on hasty judgment or a gut reaction. Analysis paralysis is when the fear of either making an error or forgoing a superior solution outweighs th ...

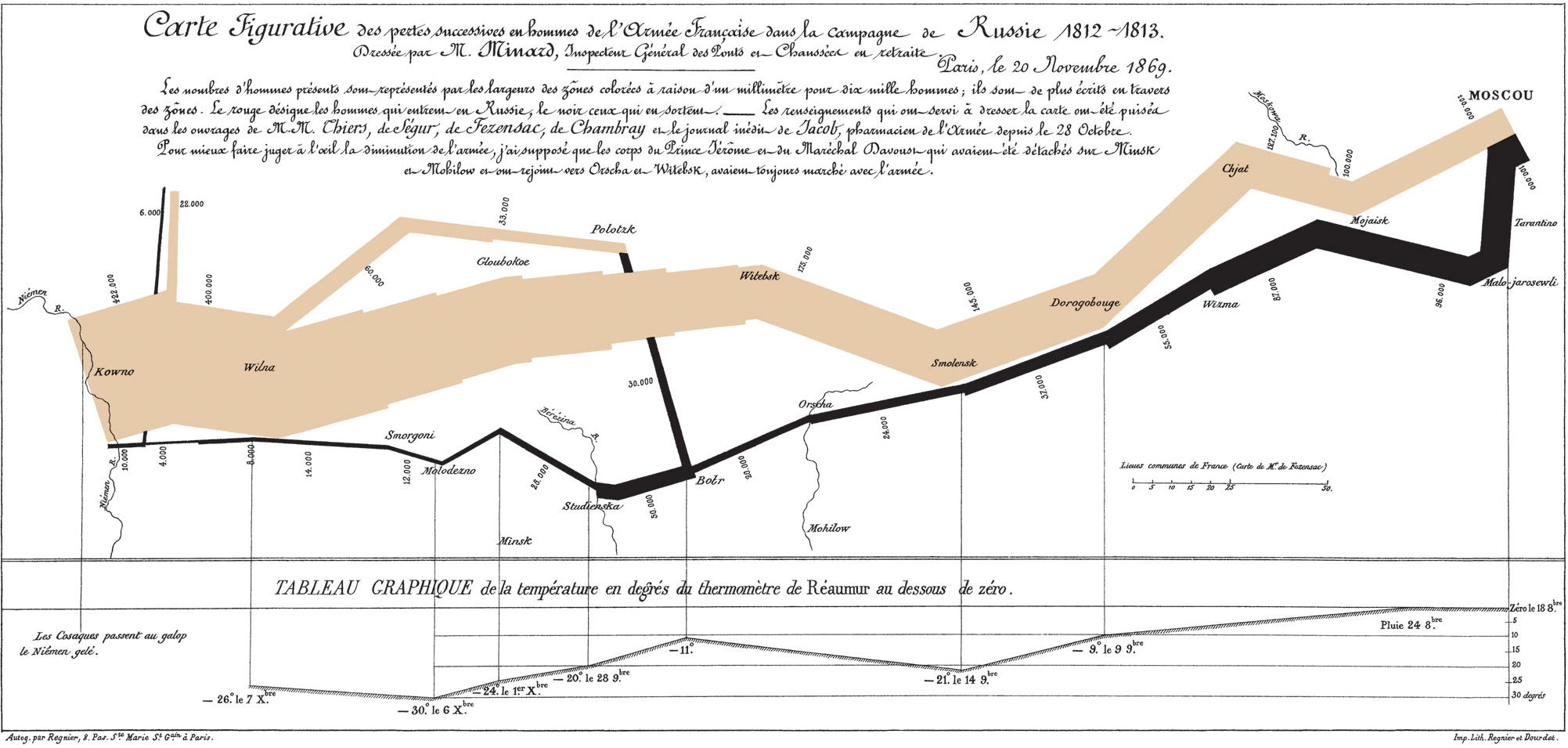

Data Visualization

Data and information visualization (data viz/vis or info viz/vis) is the practice of designing and creating graphic or visual representations of a large amount of complex quantitative and qualitative data and information with the help of static, dynamic or interactive visual items. Typically based on data and information collected from a certain domain of expertise, these visualizations are intended for a broader audience to help them visually explore and discover, quickly understand, interpret and gain important insights into otherwise difficult-to-identify structures, relationships, correlations, local and global patterns, trends, variations, constancy, clusters, outliers and unusual groupings within data (exploratory visualization). When intended for the general public (mass communication) to convey a concise version of known, specific information in a clear and engaging manner (presentational or explanatory visualization), it is t ...

Database Normalization

Database normalization is the process of structuring a relational database in accordance with a series of so-called normal forms in order to reduce data redundancy and improve data integrity. It was first proposed by British computer scientist Edgar F. Codd as part of his relational model. Normalization entails organizing the columns (attributes) and tables (relations) of a database to ensure that their dependencies are properly enforced by database integrity constraints. It is accomplished by applying some formal rules either by a process of synthesis (creating a new database design) or decomposition (improving an existing database design).

Objectives

A basic objective of the first normal form defined by Codd in 1970 was to permit data to be queried and manipulated using a "universal data sub-language" grounded in first-order logic. An example of such a language is SQL, though it is one that Codd regarded as seriously flawed. The objectives of normalization ...
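To illustrate how decomposition can reduce redundancy, the following Python sketch splits one table into two and checks that joining them restores the original rows. The table, its column names, and the helper functions `project` and `natural_join` are hypothetical; the example assumes each customer has exactly one city, and it is a toy illustration rather than a formal normalization procedure.

```python
def project(rows, attrs):
    """Keep only the given attributes and drop duplicate rows."""
    result = []
    for row in rows:
        item = {a: row[a] for a in attrs}
        if item not in result:
            result.append(item)
    return result


def natural_join(left, right):
    """Combine rows that agree on every attribute the two tables share."""
    joined = []
    for l_row in left:
        for r_row in right:
            shared = set(l_row) & set(r_row)
            if all(l_row[a] == r_row[a] for a in shared):
                joined.append({**l_row, **r_row})
    return joined


# Hypothetical table: the city is repeated for every order by the same customer.
orders_wide = [
    {"order_id": 1, "customer": "Ada", "customer_city": "London"},
    {"order_id": 2, "customer": "Ada", "customer_city": "London"},
    {"order_id": 3, "customer": "Bob", "customer_city": "Leeds"},
]

# Decompose into two tables so that each customer's city is stored once.
orders = project(orders_wide, ["order_id", "customer"])
customers = project(orders_wide, ["customer", "customer_city"])

# The decomposition loses no information: the join restores the original rows.
restored = natural_join(orders, customers)
print(len(customers), restored == orders_wide)
```

After the split, a change to a customer's city touches a single row in `customers` instead of every matching order, which is the kind of update problem that normalization is meant to avoid.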

Data Quality

Data quality refers to the state of qualitative or quantitative pieces of information. There are many definitions of data quality, but data is generally considered high quality if it is "fit for its intended uses in operations, decision making and planning". Data is also deemed of high quality if it correctly represents the real-world construct to which it refers. Apart from these definitions, as the number of data sources increases, the question of internal data consistency becomes significant, regardless of fitness for use for any particular external purpose. People's views on data quality can often be in disagreement, even when discussing the same set of data used for the same purpose. When this is the case, businesses may adopt recognised international standards for data quality. Data governance can also be used to form agreed-upon definitions and standards, including international standards, for data quality. In such cases, ...

Data Warehouse

In computing, a data warehouse (DW or DWH), also known as an enterprise data warehouse (EDW), is a system used for reporting and data analysis and is a core component of business intelligence. Data warehouses are central repositories of data integrated from disparate sources. They store current and historical data organized in a way that is optimized for data analysis, generation of reports, and developing insights across the integrated data. They are intended to be used by analysts and managers to help make organizational decisions. The data stored in the warehouse is uploaded from operational systems (such as marketing or sales). The data may pass through an operational data store and may require data cleansing for additional operations to ensure data quality before it is used in the data warehouse for reporting. The two main workflows for building a data warehouse system are extract, transform, load (ETL) and extract, load, ...

Functional Dependency

In relational database theory, a functional dependency is the following constraint between two attribute sets in a relation: Given a relation $R$ and attribute sets $X, Y \subseteq R$, $X$ is said to functionally determine $Y$ (written $X \to Y$) if each $X$ value is associated with precisely one $Y$ value. $R$ is then said to satisfy the functional dependency $X \to Y$. Equivalently, the projection $\Pi_{X,Y}(R)$ is a function, that is, $Y$ is a function of $X$. In simple words, if the values for the $X$ attributes are known (say they are $x$), then the values for the $Y$ attributes corresponding to $x$ can be determined by looking them up in any tuple of $R$ containing $x$. Customarily $X$ is called the determinant set and $Y$ the dependent set. A functional dependency FD: $X \to Y$ is called trivial if $Y$ is a subset of $X$. In other words, a dependency FD: $X \to Y$ means that the values of $Y$ ar ...
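The definition can be checked mechanically against a concrete set of tuples. The Python sketch below tests whether a functional dependency holds in a relation stored as a list of dicts; the function name `holds` and the sample relation are assumptions made for this example. Note that such a check only shows that the data at hand satisfies the dependency, not that the dependency is a rule of the schema.

```python
def holds(rows, lhs, rhs):
    """Return True if every lhs value is paired with exactly one rhs value."""
    seen = {}
    for row in rows:
        x = tuple(row[a] for a in lhs)
        y = tuple(row[a] for a in rhs)
        # setdefault stores y the first time x appears, then returns the
        # stored value, so a mismatch means x maps to two different y values.
        if seen.setdefault(x, y) != y:
            return False
    return True


# Hypothetical relation: each department has a single head.
staff = [
    {"employee": "Ada", "dept": "R&D", "dept_head": "Grace"},
    {"employee": "Bob", "dept": "R&D", "dept_head": "Grace"},
    {"employee": "Cy", "dept": "Sales", "dept_head": "Dana"},
]

print(holds(staff, ["dept"], ["dept_head"]))         # dept determines dept_head
print(holds(staff, ["dept"], ["employee"]))          # R&D has two employees
print(holds(staff, ["employee", "dept"], ["dept"]))  # trivial dependency
```

The third call illustrates a trivial dependency: because the dependent set is a subset of the determinant set, it holds for any relation.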