|

Data-oriented Design

In computing, data-oriented design is a program optimization approach motivated by efficient usage of the CPU cache, often used in video game development. The approach is to focus on the data layout, separating and sorting fields according to when they are needed, and to think about transformations of data. Proponents include Mike Acton, Scott Meyers, and Jonathan Blow. The parallel array (or structure of arrays) is the main example of data-oriented design. It is contrasted with the ''array of structures'' typical of object-oriented designs. The definition of data-oriented design as a programming paradigm can be seen as contentious as many believe that it can be used side by side with another paradigm, but due to the emphasis on data layout, it is also incompatible with most other paradigms. Motives These methods became especially popular in the mid to late 2000s during the seventh generation of video game consoles that included the IBM PowerPC based PlayStation 3 (PS3) and X ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Computing

Computing is any goal-oriented activity requiring, benefiting from, or creating computer, computing machinery. It includes the study and experimentation of algorithmic processes, and the development of both computer hardware, hardware and software. Computing has scientific, engineering, mathematical, technological, and social aspects. Major computing disciplines include computer engineering, computer science, cybersecurity, data science, information systems, information technology, and software engineering. The term ''computing'' is also synonymous with counting and calculation, calculating. In earlier times, it was used in reference to the action performed by Mechanical computer, mechanical computing machines, and before that, to Computer (occupation), human computers. History The history of computing is longer than the history of computing hardware and includes the history of methods intended for pen and paper (or for chalk and slate) with or without the aid of tables. ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Transistor Budget

A transistor is a semiconductor device used to Electronic amplifier, amplify or electronic switch, switch electrical signals and electric power, power. It is one of the basic building blocks of modern electronics. It is composed of semiconductor material, usually with at least three terminal (electronics), terminals for connection to an electronic circuit. A voltage or Electric current, current applied to one pair of the transistor's terminals controls the current through another pair of terminals. Because the controlled (output) power can be higher than the controlling (input) power, a transistor can amplify a signal. Some transistors are packaged individually, but many more in miniature form are found embedded in integrated circuits. Because transistors are the key active components in practically all modern electronics, many people consider them one of the 20th century's greatest inventions. Physicist Julius Edgar Lilienfeld proposed the concept of a field-effect transisto ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Itanium

Itanium (; ) is a discontinued family of 64-bit computing, 64-bit Intel microprocessors that implement the Intel Itanium architecture (formerly called IA-64). The Itanium architecture originated at Hewlett-Packard (HP), and was later jointly developed by HP and Intel. Launching in June 2001, Intel initially marketed the processors for enterprise servers and high-performance computing systems. In the concept phase, engineers said "we could run circles around PowerPC...we could kill the x86". Early predictions were that IA-64 would expand to the lower-end servers, supplanting Xeon, and eventually penetrate into the personal computers, eventually to supplant Reduced instruction set computer, reduced instruction set computing (RISC) and complex instruction set computing (CISC) architectures for all general-purpose applications. When first released in 2001 after a decade of development, Itanium's performance was disappointing compared to better-established RISC and CISC processors. Em ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Memory Access Pattern

In computing, a memory access pattern or IO access pattern is the pattern with which a system or program reads and writes memory on secondary storage. These patterns differ in the level of locality of reference and drastically affect cache performance, and also have implications for the approach to parallelism and distribution of workload in shared memory systems. Further, cache coherency issues can affect multiprocessor performance, which means that certain memory access patterns place a ceiling on parallelism (which manycore approaches seek to break). Computer memory is usually described as " random access", but traversals by software will still exhibit patterns that can be exploited for efficiency. Various tools exist to help system designers and programmers understand, analyse and improve the memory access pattern, including VTune and Vectorization Advisor, including tools to address GPU memory access patterns. Memory access patterns also have implications for securi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Locality Of Reference

In computer science, locality of reference, also known as the principle of locality, is the tendency of a processor to access the same set of memory locations repetitively over a short period of time. There are two basic types of reference locality temporal and spatial locality. Temporal locality refers to the reuse of specific data and/or resources within a relatively small time duration. Spatial locality (also termed ''data locality'')"NIST Big Data Interoperability Framework: Volume 1"urn:doi:10.6028/NIST.SP.1500-1r2 refers to the use of data elements within relatively close storage locations. Sequential locality, a special case of spatial locality, occurs when data elements are arranged and accessed linearly, such as traversing the elements in a one-dimensional Array data structure, array. Locality is a type of predictability, predictable behavior that occurs in computer systems. Systems which exhibit strong ''locality of reference'' are good candidates for performance optimiza ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Von Neumann Architecture

The von Neumann architecture—also known as the von Neumann model or Princeton architecture—is a computer architecture based on the '' First Draft of a Report on the EDVAC'', written by John von Neumann in 1945, describing designs discussed with John Mauchly and J. Presper Eckert at the University of Pennsylvania's Moore School of Electrical Engineering. The document describes a design architecture for an electronic digital computer made of "organs" that were later understood to have these components: * A processing unit with both an arithmetic logic unit and processor registers * A control unit that includes an instruction register and a program counter * Memory that stores data and instructions * External mass storage * Input and output mechanisms.. The attribution of the invention of the architecture to von Neumann is controversial, not least because Eckert and Mauchly had done a lot of the required design work and claim to have had the idea for stored programs ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

System Bus

A system bus is a single computer bus that connects the major components of a computer system, combining the functions of a data bus to carry information, an address bus to determine where it should be sent or read from, and a control bus to determine its operation. The technique was developed to reduce costs and improve modularity, and although popular in the 1970s and 1980s, more modern computers use a variety of separate buses adapted to more specific needs. The system level bus (as distinct from a CPU's internal datapath busses) connects the CPU to memory and I/O devices. Typically a system level bus is designed for use as a backplane. Background scenario Many of the computers were based on the ''First Draft of a Report on the EDVAC'' report published in 1945. In what became known as the Von Neumann architecture, a central control unit and arithmetic logic unit (ALU, which he called the central arithmetic part) were combined with computer memory and input and output functi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Cache Misses

In computing, a cache ( ) is a hardware or software component that stores data so that future requests for that data can be served faster; the data stored in a cache might be the result of an earlier computation or a copy of data stored elsewhere. A cache hit occurs when the requested data can be found in a cache, while a cache miss occurs when it cannot. Cache hits are served by reading data from the cache, which is faster than recomputing a result or reading from a slower data store; thus, the more requests that can be served from the cache, the faster the system performs. To be cost-effective, caches must be relatively small. Nevertheless, caches are effective in many areas of computing because typical computer applications access data with a high degree of locality of reference. Such access patterns exhibit temporal locality, where data is requested that has been recently requested, and spatial locality, where data is requested that is stored near data that has already bee ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Processing Element

This glossary of computer hardware terms is a list of definitions of terms and concepts related to computer hardware, i.e. the physical and structural components of computers, architectural issues, and peripheral devices. A B C D E F G H I J K L M N O P R S T U V W Z See also *List of computer term etymologies *Glossary of backup terms *Glossary of computer graphics *Glossary of computer science *Glossary of computer software terms *wikt:Appendi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Clock Cycle

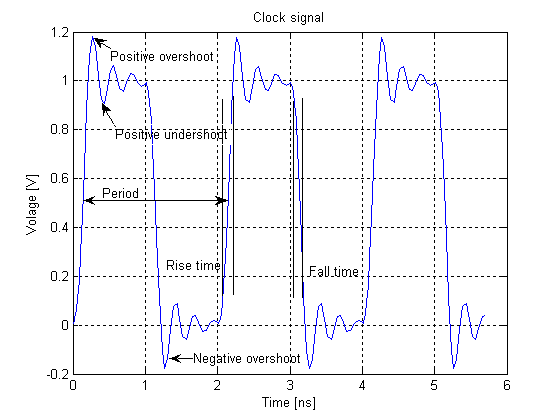

In electronics and especially synchronous digital circuits, a clock signal (historically also known as ''logic beat'') is an electronic logic signal (voltage or current) which oscillates between a high and a low state at a constant frequency and is used like a metronome to synchronize actions of digital circuits. In a synchronous logic circuit, the most common type of digital circuit, the clock signal is applied to all storage devices, flip-flops and latches, and causes them all to change state simultaneously, preventing race conditions. A clock signal is produced by an electronic oscillator called a clock generator. The most common clock signal is in the form of a square wave with a 50% duty cycle. Circuits using the clock signal for synchronization may become active at either the rising edge, falling edge, or, in the case of double data rate, both in the rising and in the falling edges of the clock cycle. Digital circuits Most integrated circuits (ICs) of suffi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Main Memory

Computer data storage or digital data storage is a technology consisting of computer components and recording media that are used to retain digital data. It is a core function and fundamental component of computers. The central processing unit (CPU) of a computer is what manipulates data by performing computations. In practice, almost all computers use a storage hierarchy, which puts fast but expensive and small storage options close to the CPU and slower but less expensive and larger options further away. Generally, the fast technologies are referred to as "memory", while slower persistent technologies are referred to as "storage". Even the first computer designs, Charles Babbage's Analytical Engine and Percy Ludgate's Analytical Machine, clearly distinguished between processing and memory (Babbage stored numbers as rotations of gears, while Ludgate stored numbers as displacements of rods in shuttles). This distinction was extended in the Von Neumann architecture, wh ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |

Pipeline (computing)

In computing, a pipeline, also known as a data pipeline, is a set of data processing elements connected in series, where the output of one element is the input of the next one. The elements of a pipeline are often executed in parallel or in time-sliced fashion. Some amount of buffer storage is often inserted between elements. Concept and motivation Pipelining is a commonly used concept in everyday life. For example, in the assembly line of a car factory, each specific task—such as installing the engine, installing the hood, and installing the wheels—is often done by a separate work station. The stations carry out their tasks in parallel, each on a different car. Once a car has had one task performed, it moves to the next station. Variations in the time needed to complete the tasks can be accommodated by "buffering" (holding one or more cars in a space between the stations) and/or by "stalling" (temporarily halting the upstream stations), until the next station becomes avai ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] [Amazon] |