
Chunking (computing)

In computer programming, chunking has multiple meanings.

In memory management: Typical modern software systems allocate memory dynamically from structures known as heaps. Calls are made to heap-management routines to allocate and free memory. Heap management involves some computation time and can be a performance issue. Chunking refers to strategies for improving performance by using special knowledge of a situation to aggregate related memory-allocation requests. For example, if it is known that a certain kind of object will typically be required in groups of eight, then instead of allocating and freeing each object individually, which takes sixteen calls to the heap manager, one could allocate and free an array of eight of the objects, reducing the number of calls to two.

In HTTP message transmission: Chunking is a specific feature of the HTTP 1.1 protocol. Here, the meaning is the opposite of that used in memory management. It refers to a facility that allows inconveniently large message ...
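The memory-management arithmetic in this entry (sixteen heap calls versus two for a group of eight objects) can be modeled with a toy call counter. This is only a sketch: `CountingHeap`, `allocate_individually`, `allocate_as_chunk`, and the 32-byte object size are hypothetical names and values chosen for illustration, and the class does not manage real memory.

```python
class CountingHeap:
    """Toy stand-in for a heap manager; it only counts calls (hypothetical)."""

    def __init__(self):
        self.calls = 0
        self._live = set()   # handles of blocks that are currently allocated
        self._next_handle = 0

    def allocate(self, size):
        self.calls += 1
        handle = self._next_handle
        self._next_handle += 1
        self._live.add(handle)
        return handle

    def free(self, handle):
        self.calls += 1
        self._live.remove(handle)


def allocate_individually(heap, count, object_size):
    # One allocate call and one free call per object.
    handles = [heap.allocate(object_size) for _ in range(count)]
    for handle in handles:
        heap.free(handle)


def allocate_as_chunk(heap, count, object_size):
    # One allocate call and one free call for the whole group.
    block = heap.allocate(count * object_size)
    heap.free(block)


GROUP_SIZE = 8     # objects are needed in groups of eight, as in the text
OBJECT_SIZE = 32   # assumed object size in bytes, for illustration only

individual_heap = CountingHeap()
allocate_individually(individual_heap, GROUP_SIZE, OBJECT_SIZE)

chunked_heap = CountingHeap()
allocate_as_chunk(chunked_heap, GROUP_SIZE, OBJECT_SIZE)

print(individual_heap.calls, chunked_heap.calls)  # prints: 16 2
```

The saving comes purely from the number of calls; the total amount of memory requested is the same in both cases.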


Computer Programming

Computer programming or coding is the composition of sequences of instructions, called programs, that computers can follow to perform tasks. It involves designing and implementing algorithms, step-by-step specifications of procedures, by writing code in one or more programming languages. Programmers typically use high-level programming languages that are more easily intelligible to humans than machine code, which is directly executed by the central processing unit. Proficient programming usually requires expertise in several different subjects, including knowledge of the application domain, details of programming languages and generic code libraries, specialized algorithms, and formal logic. Auxiliary tasks accompanying and related to programming include analyzing requirements, testing, debugging (investigating and fixing problems), imple ...


Software

Software consists of computer programs that instruct the execution of a computer. Software also includes design documents and specifications. The history of software is closely tied to the development of digital computers in the mid-20th century. Early programs were written in the machine language specific to the hardware. The introduction of high-level programming languages in 1958 allowed for more human-readable instructions, making software development easier and more portable across different computer architectures. Software in a programming language is run through a compiler or interpreter to execute on the architecture's hardware. Over time, software has become complex, owing to developments in networking, operating systems, and databases. Software can generally be categorized into two main types: (1) operating systems, which manage hardware resources and provide services for applicat ...


Computer Memory

Computer memory stores information, such as data and programs, for immediate use in the computer. The term "memory" is often synonymous with the terms "RAM", "main memory", or "primary storage". Archaic synonyms for main memory include "core" (for magnetic core memory) and "store". Main memory operates at a high speed compared to mass storage, which is slower but less expensive per bit and higher in capacity. Besides storing opened programs and data being actively processed, computer memory serves as a mass storage cache and write buffer to improve both reading and writing performance. Operating systems borrow RAM capacity for caching so long as it is not needed by running software. If needed, contents of the computer memory can be transferred to storage; a common way of doing this is through a memory management technique called virtual memory. Modern computer memory is implemented as semiconductor memory, where data is stored within memory cell (com ...


Dynamic Memory Allocation

Memory management (also dynamic memory management, dynamic storage allocation, or dynamic memory allocation) is a form of resource management applied to computer memory. The essential requirement of memory management is to provide ways to dynamically allocate portions of memory to programs at their request, and free it for reuse when no longer needed. This is critical to any advanced computer system where more than a single process might be underway at any time. Several methods have been devised that increase the effectiveness of memory management. Virtual memory systems separate the memory addresses used by a process from actual physical addresses, allowing separation of processes and increasing the size of the virtual address space beyond the available amount of RAM using paging or swapping to secondary storage. The quality of the virtual memory manager can have an extensive effect on overall system performance. The system allows a computer to appear as if it may have more ...


HTTP

HTTP (Hypertext Transfer Protocol) is an application layer protocol in the Internet protocol suite model for distributed, collaborative, hypermedia information systems. HTTP is the foundation of data communication for the World Wide Web, where hypertext documents include hyperlinks to other resources that the user can easily access, for example by a mouse click or by tapping the screen in a web browser. Development of HTTP was initiated by Tim Berners-Lee at CERN in 1989 and summarized in a simple document describing the behavior of a client and a server using the first HTTP version, named 0.9. That version was subsequently developed, eventually becoming the public 1.0. Development of early HTTP Requests for Comments (RFCs) started a few years later in a coordinated effort by the Internet Engineering Task Force (IETF) and the World Wide Web Consortium (W3C), with work later moving to the IETF. HTTP/1 was finalized and fully documented (as version 1.0) in 1996 ...


Data Deduplication

In computing, data deduplication is a technique for eliminating duplicate copies of repeating data. Successful implementation of the technique can improve storage utilization, which may in turn lower capital expenditure by reducing the overall amount of storage media required to meet storage capacity needs. It can also be applied to network data transfers to reduce the number of bytes that must be sent. The deduplication process requires comparison of data 'chunks' (also known as 'byte patterns') which are unique, contiguous blocks of data. These chunks are identified and stored during a process of analysis, and compared to other chunks within existing data. Whenever a match occurs, the redundant chunk is replaced with a small reference that points to the stored chunk. Given that the same byte pattern may occur dozens, hundreds, or even thousands of times (the match frequency is dependent on the chunk size), the amount of data that must be stored or transferred can be greatly reduce ...
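The chunk-and-reference idea described in this entry can be sketched as follows. The sketch assumes fixed-size chunks and SHA-256 digests as the "small reference"; both are illustrative choices rather than anything the entry prescribes, and `deduplicate` and `restore` are hypothetical helper names.

```python
import hashlib


def deduplicate(data: bytes, chunk_size: int):
    """Split data into fixed-size chunks and store each distinct chunk once."""
    store = {}       # digest -> chunk bytes (each unique chunk stored once)
    references = []  # one digest per chunk position, in original order
    for start in range(0, len(data), chunk_size):
        chunk = data[start:start + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)  # keep only the first copy
        references.append(digest)
    return store, references


def restore(store, references) -> bytes:
    """Rebuild the original data by following the references."""
    return b"".join(store[digest] for digest in references)


data = b"ABCDABCDABCDWXYZ"
store, references = deduplicate(data, chunk_size=4)

print(len(references), len(store))  # prints: 4 2
```

Here the four chunks collapse to two stored chunks because `ABCD` repeats three times. Choosing a different chunk size changes how often chunks match, which is the dependence on chunk size that the entry mentions.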


Data Synchronization

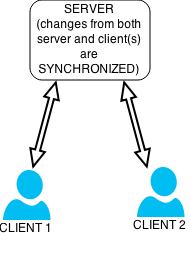

Data synchronization is the process of establishing consistency between source and target data stores, and the continuous harmonization of the data over time. It is fundamental to a wide variety of applications, including file synchronization and mobile device synchronization. Data synchronization can also be useful in encryption for synchronizing public key servers. Data synchronization is needed to update and keep multiple copies of a set of data coherent with one another or to maintain data integrity (Figure 3). For example, database replication is used to keep multiple copies of data synchronized with database servers that store data in different locations.

Examples include:
* File synchronization, such as syncing a hand-held MP3 player to a desktop computer;
* Cluster file systems, which are file systems that maintain data or indexes in a coherent fashion across a whole computing cluster;
* Cache coherency, maintaining multiple copies of data in sync across ...


Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction or line coding, the means for mapping data onto a sig ...
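As a small illustration of the lossless case, the sketch below uses Python's standard `zlib` module as the encoder and decoder. The input is deliberately repetitive so that there is statistical redundancy to remove; the sample bytes are an arbitrary choice for the example.

```python
import zlib

# Highly redundant input: the same 16 bytes repeated 500 times.
original = b"chunk of data..." * 500

encoded = zlib.compress(original)    # encoder: fewer bytes than the input
decoded = zlib.decompress(encoded)   # decoder: reverses the process exactly

print(len(original), len(encoded) < len(original), decoded == original)
```

The round trip returns the input byte for byte, which is what distinguishes lossless from lossy compression.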


Rolling Hash

Rolling is a type of motion that combines rotation (commonly, of an axially symmetric object) and translation of that object with respect to a surface (either one or the other moves), such that, if ideal conditions exist, the two are in contact with each other without sliding. Rolling where there is no sliding is referred to as ''pure rolling''. By definition, there is no sliding when there is a frame of reference in which all points of contact on the rolling object have the same velocity as their counterparts on the surface on which the object rolls; in particular, for a frame of reference in which the rolling plane is at rest (see animation), the instantaneous velocity of all the points of contact (for instance, a generating line segment of a cylinder) of the rolling object is zero. In practice, due to small deformations near the contact area, some sliding and energy dissipation occurs. Nevertheless, the resulting rolling resistance is much lower than sliding friction, and ...


Chunk (information)

A chunk is a fragment of information which is used in many multimedia file formats, such as PNG, IFF, MP3 and AVI. Each chunk contains a header which indicates some parameters (e.g. the type of chunk, comments, size etc.). Following the header is a variable area containing data, which is decoded by the program from the parameters in the header. Chunks may also be fragments of information which are downloaded or managed by P2P programs. In distributed computing, a chunk is a set of data which is sent to a processor or one of the parts of a computer for processing.

See also: Chunking (computing), a procedure for memory allocation or message transmission in computer programming.
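The header-then-data layout described in this entry can be illustrated with the chunk layout used by PNG: a 4-byte big-endian data length, a 4-byte type code, the data itself, and a 4-byte CRC computed over the type and data. The sketch below builds and parses such chunks in memory; `build_chunk` and `parse_chunks` are hypothetical helper names, and the stream omits the PNG file signature, so it is not a valid image file.

```python
import struct
import zlib


def build_chunk(chunk_type: bytes, data: bytes) -> bytes:
    """Serialize one chunk: length, type, data, CRC over type + data."""
    crc = zlib.crc32(chunk_type + data)
    return struct.pack(">I", len(data)) + chunk_type + data + struct.pack(">I", crc)


def parse_chunks(stream: bytes):
    """Read the header of each chunk and use it to find and check the data."""
    chunks = []
    offset = 0
    while offset < len(stream):
        (length,) = struct.unpack_from(">I", stream, offset)
        chunk_type = stream[offset + 4:offset + 8]
        data = stream[offset + 8:offset + 8 + length]
        (crc,) = struct.unpack_from(">I", stream, offset + 8 + length)
        if crc != zlib.crc32(chunk_type + data):
            raise ValueError("CRC mismatch in chunk %r" % chunk_type)
        chunks.append((chunk_type, data))
        offset += 12 + length  # 4 (length) + 4 (type) + data + 4 (CRC)
    return chunks


stream = build_chunk(b"tEXt", b"hello") + build_chunk(b"IEND", b"")
parsed = parse_chunks(stream)

print(parsed)  # prints: [(b'tEXt', b'hello'), (b'IEND', b'')]
```

Because each header carries the size of its data area, a reader can skip chunk types it does not understand by advancing the offset without interpreting the data.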