Analysis Of Covariance

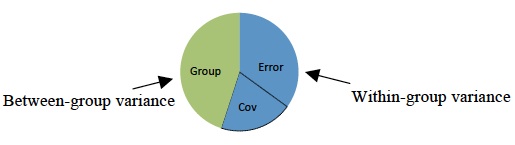

Analysis of covariance (ANCOVA) is a general linear model that blends ANOVA and regression. ANCOVA evaluates whether the means of a dependent variable (DV) are equal across levels of one or more categorical independent variables (IV) and across one or more continuous variables. For example, the categorical variable(s) might describe treatment and the continuous variable(s) might be covariates (CVs), typically nuisance variables; or vice versa. Mathematically, ANCOVA decomposes the variance in the DV into variance explained by the CV(s), variance explained by the categorical IV, and residual variance. Intuitively, ANCOVA can be thought of as 'adjusting' the DV by the group means of the CV(s). The ANCOVA model assumes a linear relationship between the response (DV) and covariate (CV): $y_{ij} = \mu + \tau_i + \beta(x_{ij} - \overline{x}) + \epsilon_{ij}$. In this equation, the DV, $y_{ij}$, is the $j$th observation under the $i$th categorical group; the CV, $x_{ij}$, is ...
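The 'adjusting' idea can be sketched in a few lines. Under the model above, the least-squares estimate of $\beta$ is the pooled within-group slope, and each group's adjusted mean is its raw DV mean moved along that common slope to the grand covariate mean $\overline{x}$. This is a minimal sketch assuming a single covariate and equal slopes across groups; the function name `ancova_adjusted_means` is hypothetical, and no significance test is included.

```python
from collections import defaultdict


def ancova_adjusted_means(groups, x, y):
    """Pooled within-group slope and covariate-adjusted group means.

    groups, x, y are parallel sequences: group label, covariate (CV), DV.
    Assumes one covariate and a common slope beta for every group.
    """
    by_group = defaultdict(list)
    for g, xi, yi in zip(groups, x, y):
        by_group[g].append((xi, yi))

    grand_x = sum(x) / len(x)  # x-bar in the ANCOVA model

    # Pooled within-group slope: cross-products and squares of deviations
    # from each group's own means, summed over all groups.
    sxy = sxx = 0.0
    means = {}
    for g, pairs in by_group.items():
        gx = sum(p[0] for p in pairs) / len(pairs)
        gy = sum(p[1] for p in pairs) / len(pairs)
        means[g] = (gx, gy)
        for xi, yi in pairs:
            sxy += (xi - gx) * (yi - gy)
            sxx += (xi - gx) ** 2
    beta = sxy / sxx

    # Adjusted mean: estimate of mu + tau_i, i.e. the group's DV mean
    # evaluated at the grand covariate mean.
    adjusted = {g: gy - beta * (gx - grand_x) for g, (gx, gy) in means.items()}
    return beta, adjusted


labels = ["A"] * 3 + ["B"] * 3
cv = [1, 2, 3, 4, 5, 6]
dv = [12, 14, 16, 28, 30, 32]  # y = 10 + 2x in A, y = 20 + 2x in B
print(ancova_adjusted_means(labels, cv, dv))  # (2.0, {'A': 17.0, 'B': 27.0})
```

In this toy data the raw DV means (14 and 30) differ by 16, partly because group B also has larger covariate values; after adjustment the difference shrinks to 10, which is the group effect built into the data.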

General Linear Model

The general linear model or general multivariate regression model is a compact way of simultaneously writing several multiple linear regression models. In that sense it is not a separate statistical linear model. The various multiple linear regression models may be compactly written as $\mathbf{Y} = \mathbf{X}\mathbf{B} + \mathbf{U}$, where $\mathbf{Y}$ is a matrix with series of multivariate measurements (each column being a set of measurements on one of the dependent variables), $\mathbf{X}$ is a matrix of observations on independent variables that might be a design matrix (each column being a set of observations on one of the independent variables), $\mathbf{B}$ is a matrix containing parameters that are usually to be estimated, and $\mathbf{U}$ is a matrix containing errors (noise). The errors are usually assumed to be uncorrelated across measurements and to follow a multivariate normal distribution. If the errors do not follow a multivariate normal distribution, generalized li ...
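To make "several regressions written at once" concrete, here is a sketch of the ordinary least-squares estimate $\hat{\mathbf{B}} = (\mathbf{X}^\mathsf{T}\mathbf{X})^{-1}\mathbf{X}^\mathsf{T}\mathbf{Y}$ in plain Python. Each column of $\hat{\mathbf{B}}$ is exactly what a separate regression of the corresponding column of $\mathbf{Y}$ on $\mathbf{X}$ would give. The helper names are hypothetical, and solving the normal equations directly is a teaching choice; numerical libraries typically prefer QR or SVD.

```python
def transpose(m):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(col) for col in zip(*m)]


def matmul(a, b):
    """Plain matrix product of two list-of-rows matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def solve(a, b):
    """Solve A Z = B (B may have several columns) by Gauss-Jordan elimination."""
    n = len(a)
    aug = [list(a[i]) + list(b[i]) for i in range(n)]
    for col in range(n):
        # Partial pivoting: move the largest remaining entry onto the diagonal.
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("X^T X is singular; B is not uniquely determined")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def fit_general_linear_model(X, Y):
    """Least-squares B_hat = (X^T X)^{-1} X^T Y for the model Y = X B + U."""
    Xt = transpose(X)
    return solve(matmul(Xt, X), matmul(Xt, Y))


# Design matrix: intercept column plus one predictor; Y holds two DVs.
X = [[1, 0], [1, 1], [1, 2], [1, 3]]
Y = [[1, 4], [3, 3], [5, 2], [7, 1]]   # y1 = 1 + 2x, y2 = 4 - x
print(fit_general_linear_model(X, Y))  # approximately [[1, 4], [2, -1]]
```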

Multicollinearity

In statistics, multicollinearity or collinearity is a situation where the predictors in a regression model are linearly dependent. Perfect multicollinearity refers to a situation where the predictor variables have an *exact* linear relationship. When there is perfect collinearity, the design matrix $X$ has less than full rank, and therefore the moment matrix $X^\mathsf{T}X$ cannot be inverted. In this situation, the parameter estimates of the regression are not well-defined, as the system of equations has infinitely many solutions. Imperfect multicollinearity refers to a situation where the predictor variables have a *nearly* exact linear relationship. Contrary to popular belief, neither the Gauss–Markov theorem nor the more common maximum likelihood justification for ordinary least squares relies on any kind of correlation structure between dependent predictors (although perfect collinearity can cause problems with some software). There is no justification for the pra ...
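The rank argument can be checked directly. The sketch below builds $X^\mathsf{T}X$ for a design with an intercept and two predictors and computes its determinant in exact rational arithmetic, so that "cannot be inverted" shows up as a determinant of exactly zero rather than a rounding artifact. The data and helper names are illustrative assumptions.

```python
from fractions import Fraction


def moment_matrix(X):
    """X^T X for a design matrix given as a list of rows."""
    cols = list(zip(*X))
    return [[sum(a * b for a, b in zip(ci, cj)) for cj in cols] for ci in cols]


def exact_det(m):
    """Determinant by Gaussian elimination in exact rational arithmetic."""
    a = [[Fraction(v) for v in row] for row in m]
    n = len(a)
    det = Fraction(1)
    for col in range(n):
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:          # no usable pivot: the matrix is singular
            return Fraction(0)
        if pivot != col:           # a row swap flips the sign
            a[col], a[pivot] = a[pivot], a[col]
            det = -det
        det *= a[col][col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            a[r] = [vr - f * vc for vr, vc in zip(a[r], a[col])]
    return det


x1 = [1, 2, 3, 4]
perfect = [[1, v, 2 * v] for v in x1]                 # x2 = 2 * x1 exactly
near = [[1, v, w] for v, w in zip(x1, [2, 4, 6, 9])]  # x2 close to 2 * x1

print(exact_det(moment_matrix(perfect)))  # 0: X^T X cannot be inverted
print(exact_det(moment_matrix(near)))     # 6: invertible, though nearly collinear
```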

MANCOVA

Multivariate analysis of covariance (MANCOVA) is an extension of analysis of covariance (ANCOVA) methods to cover cases where there is more than one dependent variable and where the control of concomitant continuous independent variables (covariates) is required. The most prominent benefit of the MANCOVA design over the simple MANOVA is the 'factoring out' of noise or error that has been introduced by the covariate. A commonly used multivariate version of the ANOVA F-statistic is Wilks' Lambda (Λ), which compares error variation with total variation: it is the ratio of the determinant of the error sums-of-squares-and-cross-products (SSCP) matrix to the determinant of the sum of the error and effect SSCP matrices, so values near zero indicate a large effect (Statsoft Textbook, ANOVA/MANOVA). Goals: Similarly to all tests in the family, the prim ...
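Wilks' Λ itself can be illustrated on a plain one-way MANOVA with two dependent variables, computing $\Lambda = \det(\mathbf{E}) / \det(\mathbf{E} + \mathbf{H})$ from the error (within-group) and effect (between-group) SSCP matrices. This sketch deliberately omits the covariate adjustment that distinguishes MANCOVA, where $\mathbf{E}$ and $\mathbf{H}$ would be computed after partialling out the covariates; the function names are hypothetical.

```python
def mean_vector(rows):
    """Mean of a list of (y1, y2) observations."""
    n = len(rows)
    return (sum(r[0] for r in rows) / n, sum(r[1] for r in rows) / n)


def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def wilks_lambda(groups):
    """Wilks' Lambda = det(E) / det(E + H) for two dependent variables.

    groups: list of groups, each a list of (y1, y2) observations.
    E is the within-group (error) SSCP matrix, H the between-group (effect) one.
    """
    grand = mean_vector([r for g in groups for r in g])
    E = [[0.0, 0.0], [0.0, 0.0]]
    H = [[0.0, 0.0], [0.0, 0.0]]
    for g in groups:
        m = mean_vector(g)
        for i in range(2):
            for j in range(2):
                # Within-group cross-products about the group mean.
                E[i][j] += sum((r[i] - m[i]) * (r[j] - m[j]) for r in g)
                # Group-size-weighted cross-products of group-mean deviations.
                H[i][j] += len(g) * (m[i] - grand[i]) * (m[j] - grand[j])
    T = [[E[i][j] + H[i][j] for j in range(2)] for i in range(2)]
    return det2(E) / det2(T)


same_means = [[(1, 2), (3, 4)], [(0, 3), (4, 3)]]   # both group means are (2, 3)
shifted = [[(1, 2), (3, 4)], [(5, 8), (9, 8)]]      # second group moved by (4, 5)
print(wilks_lambda(same_means))  # 1.0: no group effect
print(wilks_lambda(shifted))     # much smaller than 1: strong group effect
```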

Degrees Of Freedom (statistics)

In statistics, the number of degrees of freedom is the number of values in the final calculation of a statistic that are free to vary. Estimates of statistical parameters can be based upon different amounts of information or data. The number of independent pieces of information that go into the estimate of a parameter is called the degrees of freedom. In general, the degrees of freedom of an estimate of a parameter are equal to the number of independent scores that go into the estimate minus the number of parameters used as intermediate steps in the estimation of the parameter itself. For example, if the variance is to be estimated from a random sample of $N$ independent scores, then the degrees of freedom is equal to the number of independent scores ($N$) minus the number of parameters estimated as intermediate steps (one, namely, the sample mean) and is therefore equal to $N-1$. Mathematically, degrees of freedom is the number of dimensions of the domain of a random vector, or e ...
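The $N-1$ in the variance example can be seen in code: deviations from the sample mean always sum to zero, so once $N-1$ of them are known the last one is determined. A minimal sketch (function names are illustrative); its result should agree with Python's `statistics.variance`, which also divides by $N-1$.

```python
import statistics


def deviations(scores):
    """Deviations of each score from the sample mean."""
    mean = sum(scores) / len(scores)
    return [s - mean for s in scores]


def sample_variance(scores):
    """Sum of squared deviations divided by N - 1 degrees of freedom."""
    d = deviations(scores)
    return sum(v * v for v in d) / (len(scores) - 1)


data = [2, 4, 4, 4, 5, 5, 7, 9]
d = deviations(data)
print(-sum(d[:-1]), d[-1])  # 4.0 4.0: the last deviation is not free to vary
print(sample_variance(data), statistics.variance(data))  # both 32/7
```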

Factor Analysis

Factor analysis is a statistical method used to describe variability among observed, correlated variables in terms of a potentially lower number of unobserved variables called factors. For example, it is possible that variations in six observed variables mainly reflect the variations in two unobserved (underlying) variables. Factor analysis searches for such joint variations in response to unobserved latent variables. The observed variables are modelled as linear combinations of the potential factors plus "error" terms, hence factor analysis can be thought of as a special case of errors-in-variables models. Simply put, the factor loading of a variable quantifies the extent to which the variable is related to a given factor. A common rationale behind factor analytic methods is that the information gained about the interdependencies between observed variables can be used later to reduce the set of variables in a dataset. Factor analysis is commonly used in psychometrics, pers ...
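"Linear combinations of the factors plus error terms" implies a specific covariance structure. Under the usual orthogonal factor model assumptions (factors uncorrelated with unit variance, errors mutually uncorrelated and uncorrelated with the factors), the observed covariance matrix is $\Sigma = LL^\mathsf{T} + \Psi$, where $L$ holds the loadings and $\Psi$ is the diagonal matrix of error (unique) variances. The sketch below computes that implied matrix for three variables and one factor; the loadings are made-up numbers, chosen so each variable has unit variance.

```python
def implied_covariance(loadings, uniquenesses):
    """Sigma = L L^T + Psi under the orthogonal common factor model.

    loadings: p x k list, row i = loadings of observed variable i on k factors.
    uniquenesses: length-p list, the diagonal of Psi (error variances).
    """
    p = len(loadings)
    # Shared (common-factor) part: inner products of loading rows.
    sigma = [[sum(a * b for a, b in zip(loadings[i], loadings[j]))
              for j in range(p)] for i in range(p)]
    # Each variable's unique error variance adds only to its own diagonal entry.
    for i in range(p):
        sigma[i][i] += uniquenesses[i]
    return sigma


L = [[0.9], [0.8], [0.7]]        # hypothetical loadings on a single factor
psi = [0.19, 0.36, 0.51]         # hypothetical unique variances
for row in implied_covariance(L, psi):
    print([round(v, 2) for v in row])
# Off-diagonal entries (0.72, 0.63, 0.56) come entirely from the shared factor.
```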

Interaction (statistics)

In statistics, an interaction may arise when considering the relationship among three or more variables, and describes a situation in which the effect of one causal variable on an outcome depends on the state of a second causal variable (that is, when effects of the two causes are not additive). Although commonly thought of in terms of causal relationships, the concept of an interaction can also describe non-causal associations (then also called *moderation* or *effect modification*). Interactions are often considered in the context of regression analyses or factorial experiments. The presence of interactions can have important implications for the interpretation of statistical models. If two variables of interest interact, the relationship between each of the interacting variables and a third "dependent variable" depends on the value of the other interacting variable. In practice, th ...
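In a regression with a product term, $y = b_0 + b_1 x_1 + b_2 x_2 + b_3 x_1 x_2$, the effect of a one-unit increase in $x_1$ is $b_1 + b_3 x_2$, so it changes with $x_2$ unless $b_3 = 0$ (the additive case). The coefficients below are hypothetical, chosen only to make the dependence visible.

```python
def predict(x1, x2, b0, b1, b2, b3):
    """Linear model with an interaction (product) term."""
    return b0 + b1 * x1 + b2 * x2 + b3 * x1 * x2


def effect_of_x1(x2, b1, b3):
    """Change in predicted y per unit increase in x1, at a given value of x2."""
    return b1 + b3 * x2


b = dict(b0=1.0, b1=2.0, b2=0.5, b3=3.0)  # hypothetical coefficients
print(effect_of_x1(0, b["b1"], b["b3"]))  # 2.0
print(effect_of_x1(1, b["b1"], b["b3"]))  # 5.0
# Same answer from the model directly: the effect of x1 depends on x2.
print(predict(1, 1, **b) - predict(0, 1, **b))  # 5.0
```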

Main Effect

In the design of experiments and analysis of variance, a main effect is the effect of an independent variable on a dependent variable averaged across the levels of any other independent variables. The term is frequently used in the context of factorial designs and regression models to distinguish main effects from interaction effects. In a factorial design analyzed by analysis of variance, a main effect test evaluates a null hypothesis $H_0$ that the factor's level means, averaged over the other factors, are equal. Testing a main effect therefore shows whether there is evidence of an effect of different treatments. However, a main effect test is nonspecific and does not localize which specific pairwise mean comparisons (simple effects) differ. A mai ...
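"Averaged across the levels of any other independent variables" can be shown with cell means from a two-factor design: the main effect of factor A compares A's marginal means, each averaged over the levels of B. This sketch assumes a balanced design (equal cell sizes), so unweighted averages of cell means are appropriate; the cell means are made up.

```python
def marginal_means(cell_means):
    """Marginal means for a two-factor design from its table of cell means.

    cell_means[a][b] is the DV mean at level a of factor A and level b of factor B.
    Assumes a balanced design, so marginal means are plain averages of cells.
    """
    n_a, n_b = len(cell_means), len(cell_means[0])
    a_marg = [sum(row) / n_b for row in cell_means]  # average over B
    b_marg = [sum(cell_means[i][j] for i in range(n_a)) / n_a for j in range(n_b)]
    return a_marg, b_marg


cells = [[10, 20],
         [30, 40]]
a_marg, b_marg = marginal_means(cells)
print(a_marg, b_marg)          # [15.0, 35.0] [20.0, 30.0]
print(a_marg[1] - a_marg[0])   # 20.0: main effect of A in this 2x2 example
```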

Mean Squared Error

In statistics, the mean squared error (MSE) or mean squared deviation (MSD) of an estimator (of a procedure for estimating an unobserved quantity) measures the average of the squares of the errors, that is, the average squared difference between the estimated values and the true value. MSE is a risk function, corresponding to the expected value of the squared error loss. MSE is almost always strictly positive (and not zero) because of randomness or because the estimator does not account for information that could produce a more accurate estimate. In machine learning, specifically empirical risk minimization, MSE may refer to the *empirical* risk (the average loss on an observed data set), as an estimate of the true MSE (the true risk: the average loss on the actual population distribution). The MSE is a measure of the quality of an estimator. As it is derived from the square of Euclidean distance, it is always a non-negative value that decreases as the erro ...
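The empirical version is a one-liner: average the squared differences between estimates and the values they target. A minimal sketch; the numbers are illustrative, standing in for three estimates of a true value of 10.

```python
def mean_squared_error(estimates, true_values):
    """Average of squared differences between estimates and true values."""
    if len(estimates) != len(true_values):
        raise ValueError("estimates and true_values must have the same length")
    return sum((e - t) ** 2 for e, t in zip(estimates, true_values)) / len(estimates)


print(mean_squared_error([9.5, 10.5, 11.0], [10, 10, 10]))  # 0.5
print(mean_squared_error([10, 10, 10], [10, 10, 10]))       # 0.0 only when every estimate is exact
```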

Mediation (statistics)

In statistics, a mediation model seeks to identify and explain the mechanism or process that underlies an observed relationship between an independent variable and a dependent variable via the inclusion of a third hypothetical variable, known as a mediator variable (also a mediating variable, intermediary variable, or intervening variable). Rather than a direct causal relationship between the independent variable and the dependent variable, a mediation model proposes that the independent variable influences the mediator variable, which in turn influences the dependent variable. Thus, the mediator variable serves to clarify the nature of the causal relationship between the independent and dependent variables. Mediation analyses are employed to understand a known relationship by exploring the underlying mechanism or process by which one variable influences another variable through a mediator variable (Cohen, J.; Cohen, P.; West, S. G.; Aiken, L. S. (2003), *Applied Mul* ...)
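One common way to quantify this with linear regressions is the product-of-coefficients approach: regress the mediator $M$ on $X$ (slope $a$), regress $Y$ on both $X$ and $M$ (slopes $c'$ and $b$), and take $ab$ as the indirect effect. For ordinary least squares with intercepts, the total effect $c$ from regressing $Y$ on $X$ alone equals $c' + ab$ exactly. This sketch estimates point values only (no standard errors, bootstrap, or significance test), and the data are made up.

```python
def mean(v):
    return sum(v) / len(v)


def slope(x, y):
    """OLS slope of y on x (intercept included)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def two_predictor_slopes(x1, x2, y):
    """OLS slopes of y on x1 and x2 (intercept included), via centered normal equations."""
    d1 = [a - mean(x1) for a in x1]
    d2 = [a - mean(x2) for a in x2]
    dy = [a - mean(y) for a in y]
    s11 = sum(a * a for a in d1)
    s22 = sum(a * a for a in d2)
    s12 = sum(a * b for a, b in zip(d1, d2))
    s1y = sum(a * b for a, b in zip(d1, dy))
    s2y = sum(a * b for a, b in zip(d2, dy))
    det = s11 * s22 - s12 * s12   # zero if x1 and x2 are perfectly collinear
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


def mediation(x, m, y):
    a = slope(x, m)                             # X -> M
    c_prime, b = two_predictor_slopes(x, m, y)  # direct effect of X, and M -> Y
    c = slope(x, y)                             # total effect of X on Y
    return {"a": a, "b": b, "indirect": a * b, "direct": c_prime, "total": c}


res = mediation([1, 2, 3, 4, 5], [2, 1, 4, 3, 6], [1, 3, 2, 5, 6])
print(res)
print(res["total"], res["direct"] + res["indirect"])  # equal up to rounding
```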

Moderation (statistics)

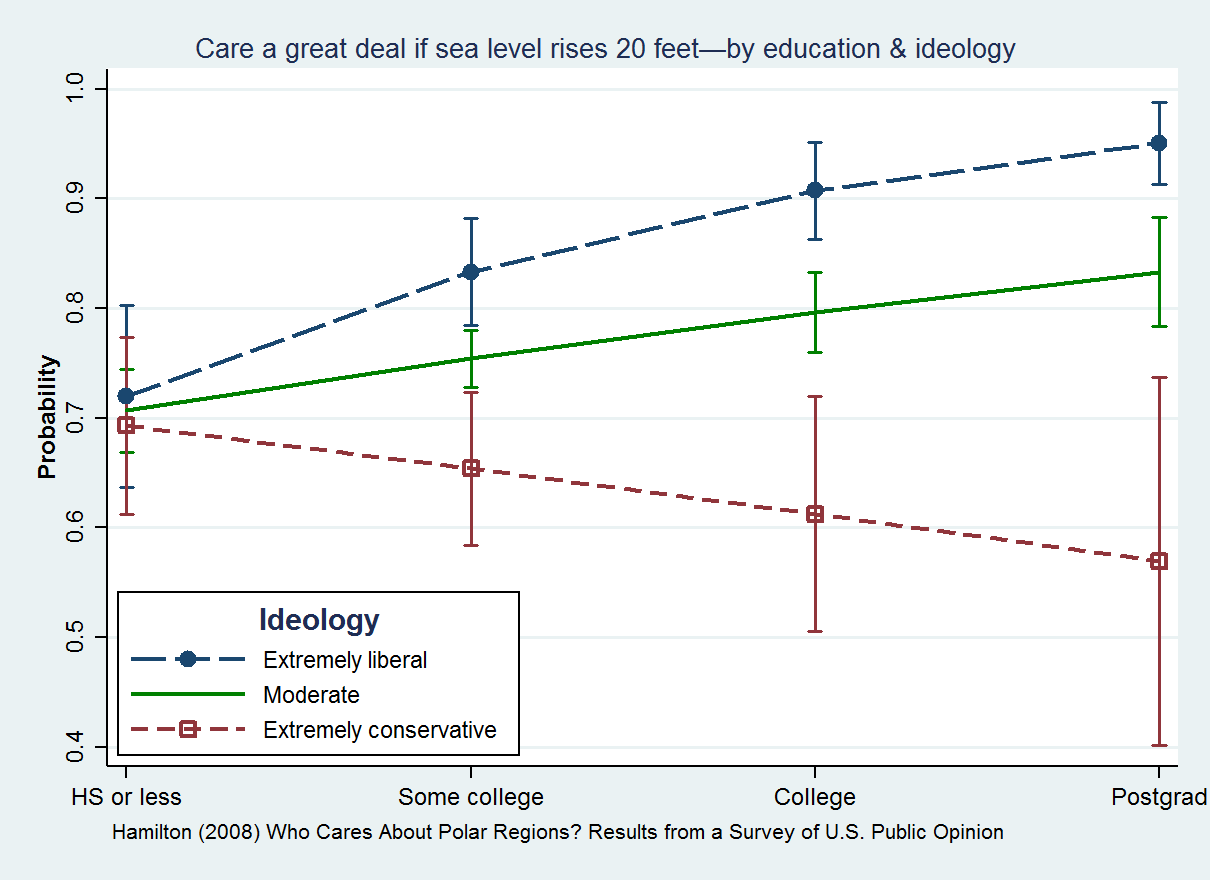

In statistics and regression analysis, moderation (also known as effect modification) occurs when the relationship between two variables depends on a third variable. The third variable is referred to as the moderator variable (or effect modifier) or simply the moderator (or modifier). The effect of a moderating variable is characterized statistically as an interaction; that is, a categorical (e.g., sex, ethnicity, class) or continuous (e.g., age, level of reward) variable that is associated with the direction and/or magnitude of the relation between dependent and independent variables. Specifically within a correlational analysis framework, a moderator is a third variable that affects the zero-order correlation between two other variables, or the value of the slope of the dependent variable on the independent variable. In analysis of variance (ANOVA) terms, a basic moderator effect can be represented as an interaction between a focal independent variable and a factor that spec ...
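The correlational view of moderation can be illustrated by computing the zero-order correlation of $X$ and $Y$ separately within each level of a categorical moderator. In the made-up data below the moderator reverses the direction of the relation, and pooling the groups hides it completely (pooled $r = 0$). Function names are hypothetical.

```python
import math


def pearson_r(x, y):
    """Zero-order (Pearson) correlation of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_by_moderator(moderator, x, y):
    """Correlation of x and y computed separately within each moderator level."""
    groups = {}
    for g, xi, yi in zip(moderator, x, y):
        gx, gy = groups.setdefault(g, ([], []))
        gx.append(xi)
        gy.append(yi)
    return {g: pearson_r(gx, gy) for g, (gx, gy) in groups.items()}


mod = ["low"] * 3 + ["high"] * 3
x = [1, 2, 3, 1, 2, 3]
y = [2, 4, 6, 6, 4, 2]
print(correlation_by_moderator(mod, x, y))  # {'low': 1.0, 'high': -1.0}
print(pearson_r(x, y))                      # 0.0 when the groups are pooled
```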

Levene's Test

In statistics, Levene's test is an inferential statistic used to assess the equality of variances for a variable calculated for two or more groups. This test is used because some common statistical procedures assume that variances of the populations from which different samples are drawn are equal. Levene's test assesses this assumption. It tests the null hypothesis that the population variances are equal (called *homogeneity of variance* or *homoscedasticity*). If the resulting *p*-value of Levene's test is less than some significance level (typically 0.05), the obtained differences in sample variances are unlikely to have occurred based on random sampling from a population with equal variances. Thus, the null hypothesis of equal variances is rejected and it is concluded that there is a difference between the variances in the population. Levene's test has been used in the past before a comparison of means to inform the decision on whether to use a pooled t-test or the ...
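The test statistic is essentially a one-way ANOVA F on absolute deviations $Z_{ij} = |Y_{ij} - \bar{Y}_i|$: $W = \frac{N-k}{k-1} \cdot \frac{\sum_i n_i(\bar{Z}_i - \bar{Z})^2}{\sum_i \sum_j (Z_{ij} - \bar{Z}_i)^2}$, compared against an F distribution with $k-1$ and $N-k$ degrees of freedom. The sketch below computes $W$ only (no *p*-value) and uses group means as centers, as in Levene's original form; the median-centered Brown–Forsythe variant is also common, and it is the default of `scipy.stats.levene`, which should give the same statistic as this sketch when called with `center='mean'`.

```python
def levene_w(*groups):
    """Levene's W statistic using absolute deviations from each group mean."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    # Absolute deviations of each observation from its own group mean.
    z = [[abs(v - sum(g) / len(g)) for v in g] for g in groups]
    z_means = [sum(zi) / len(zi) for zi in z]
    z_grand = sum(sum(zi) for zi in z) / n
    between = sum(len(zi) * (zm - z_grand) ** 2 for zi, zm in zip(z, z_means))
    within = sum((v - zm) ** 2 for zi, zm in zip(z, z_means) for v in zi)
    return (n - k) / (k - 1) * between / within


print(levene_w([1, 2, 3], [5, 6, 7]))   # 0.0: identical spreads
print(levene_w([1, 2, 3], [0, 6, 12]))  # about 2.70: second group is more spread out
```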