
Victim Cache

A victim cache is a small, usually fully associative cache placed in the refill path of a CPU cache; it stores all the blocks evicted from that level of cache. The technique was originally proposed in 1990. In modern architectures, this function is typically performed by Level 3 or Level 4 caches.

Overview

Victim caching is a hardware technique, proposed by Norman Jouppi, for improving cache performance. As described in his paper, a victim cache is a hardware cache designed to decrease conflict misses and improve hit latency for direct-mapped caches. It sits in the refill path of a Level 1 cache, so that any cache line evicted from the Level 1 cache is stored in the victim cache. The victim cache is therefore populated only when data is evicted from the Level 1 cache. On a Level 1 miss, the missed entry is looked up in the victim cache. If that lookup hits, the contents of the Level 1 cache line and the matching victim cache line are swapped. Though initially ...
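The lookup-and-swap behaviour described above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the class name `DirectMappedWithVictim`, the block-address model, and the oldest-first replacement in the victim cache are all hypothetical choices made for illustration, not details taken from Jouppi's design.

```python
from collections import OrderedDict


class DirectMappedWithVictim:
    """Toy model: a direct-mapped L1 plus a small fully associative victim cache."""

    def __init__(self, num_sets=4, victim_entries=2):
        self.num_sets = num_sets
        self.l1 = [None] * num_sets        # one resident block address per set
        self.victim = OrderedDict()        # block address -> True, oldest first
        self.victim_entries = victim_entries

    def _insert_victim(self, block):
        # Blocks evicted from L1 enter the victim cache. Dropping the oldest
        # entry when full is an assumption of this sketch.
        self.victim[block] = True
        self.victim.move_to_end(block)
        if len(self.victim) > self.victim_entries:
            self.victim.popitem(last=False)

    def access(self, block):
        """Return 'l1_hit', 'victim_hit', or 'miss' for a block address."""
        index = block % self.num_sets
        resident = self.l1[index]
        if resident == block:
            return "l1_hit"
        if block in self.victim:
            # Swap: the requested block moves into L1 and the displaced
            # L1 block takes a slot in the victim cache.
            del self.victim[block]
            self.l1[index] = block
            if resident is not None:
                self._insert_victim(resident)
            return "victim_hit"
        # Miss in both: refill from the next level; the evicted L1 block
        # is kept in the victim cache.
        self.l1[index] = block
        if resident is not None:
            self._insert_victim(resident)
        return "miss"


cache = DirectMappedWithVictim(num_sets=4, victim_entries=2)
trace = [0, 4, 0, 4, 0]                    # blocks 0 and 4 map to the same set
results = [cache.access(b) for b in trace]
print(results)
```

In this trace, blocks 0 and 4 conflict in the direct-mapped L1. After the two initial misses, each further access is served by the victim cache instead of the next memory level, which is the conflict-miss reduction the text describes.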

Fully Associative

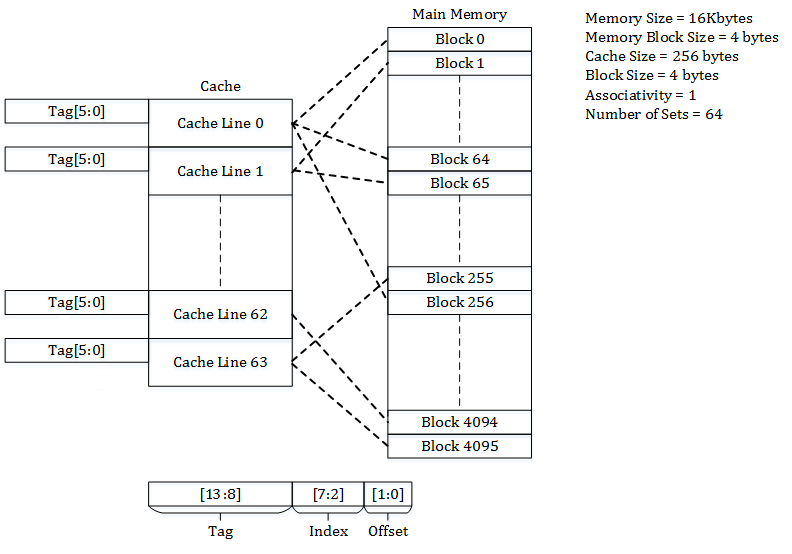

A CPU cache is a memory that holds data recently used by the processor. A block of memory cannot necessarily be placed anywhere in the cache; the cache placement policy may restrict it to a single cache line or a set of cache lines. In other words, the cache placement policy determines where a particular memory block can be placed when it goes into the cache. There are three placement policies: direct-mapped, fully associative, and set-associative. Originally this space of cache organizations was described using the term "congruence mapping".

Direct-mapped cache

In a direct-mapped cache structure, the cache is organized into multiple sets with a single cache line per set. Based on its address, a memory block can occupy only a single cache line. The cache can be framed as a column matrix.

To place a block in the cache:

* The set is determined by the index bits derived from the address of the me ...
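As a rough illustration of how index bits select a set in a direct-mapped cache, the sketch below splits an address into tag, index, and block offset. The function name `split_address` and the default geometry (64-byte blocks, 256 sets) are assumptions chosen for the example, and both sizes are assumed to be powers of two.

```python
def split_address(addr, block_size=64, num_sets=256):
    """Split an address into (tag, index, offset) for a direct-mapped cache.

    block_size and num_sets are hypothetical and must be powers of two.
    """
    if block_size & (block_size - 1) or num_sets & (num_sets - 1):
        raise ValueError("block_size and num_sets must be powers of two")
    offset_bits = block_size.bit_length() - 1   # log2(block_size)
    index_bits = num_sets.bit_length() - 1      # log2(num_sets)
    offset = addr & (block_size - 1)            # byte within the block
    index = (addr >> offset_bits) & (num_sets - 1)  # which set (line) to use
    tag = addr >> (offset_bits + index_bits)    # identifies the block in that set
    return tag, index, offset


a = split_address(0x12345)
# An address exactly one cache size (block_size * num_sets) further on
# maps to the same set with a different tag, so the two blocks conflict.
b = split_address(0x12345 + 64 * 256)
print(a, b)
```

Because each set holds a single line, two blocks with the same index but different tags evict each other; that is the kind of conflict miss that higher associativity avoids.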

CPU Cache

A CPU cache is a hardware cache used by the central processing unit (CPU) of a computer to reduce the average cost (time or energy) to access data from the main memory. A cache is a smaller, faster memory, located closer to a processor core, which stores copies of the data from frequently used main memory locations. Most CPUs have a hierarchy of multiple cache levels (L1, L2, often L3, and rarely even L4), with separate instruction-specific and data-specific caches at level 1. Cache memory is typically implemented with static random-access memory (SRAM); in modern CPUs the cache is by far the largest part of the chip by area. SRAM is not always used for every level (of I- or D-cache), or even for any level; sometimes the later levels, or all levels, are implemented with eDRAM. Other types of caches exist (that are not counted towards the "cache size" of the most important caches mentioned above), such as the translation lookaside buffer (TLB) which is part of the memory management unit ...
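The idea of reducing the average access cost can be made concrete with the standard average-memory-access-time relation (hit time plus miss rate times miss penalty). The sketch below is illustrative only: the function name `average_access_time` and every cycle count and miss rate in it are hypothetical values, not measurements of any real CPU.

```python
def average_access_time(hit_time, miss_rate, miss_penalty):
    """Average cost per access = hit time + miss rate * miss penalty."""
    if not 0.0 <= miss_rate <= 1.0:
        raise ValueError("miss_rate must be between 0 and 1")
    return hit_time + miss_rate * miss_penalty


# Hypothetical numbers, in cycles, chosen only for illustration.
no_cache = average_access_time(hit_time=0, miss_rate=1.0, miss_penalty=200)
with_l1 = average_access_time(hit_time=4, miss_rate=0.05, miss_penalty=200)
print(no_cache, with_l1)
```

Under these assumed numbers, most accesses pay only the short hit time, so the average cost falls well below the cost of going to main memory on every access.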

Norman Jouppi

Norman Paul Jouppi is an American electrical engineer and computer scientist.

Career

Jouppi was one of the computer architects at the MIPS Stanford University Project (under John L. Hennessy), an early RISC project. He received his master's degree in electrical engineering from Northwestern University in 1980 and was awarded a PhD in 1984 from Stanford University. In 1984 he joined Digital Equipment Corporation's Western Research Laboratory. He worked at Compaq and, in 2002, at Hewlett-Packard, where he ran the Advanced Architecture Lab at HP Labs in Palo Alto from 2006 to 2008, the Exascale Computing Lab from 2008 to 2010, and the Intelligent Infrastructure Lab from 2010 to 2011. After that, he became a computer engineer at Google. He pioneered developments in the field of memory hierarchies (victim buffers, prefetching stream buffers, multi-level exclusive caching), heterogeneous architectures (single ISA heterogeneous architectures) and the introduction of the CACT ...

Cache-line

A CPU cache is a hardware cache used by the central processing unit (CPU) of a computer to reduce the average cost (time or energy) to access data from the main memory. A cache is a smaller, faster memory, located closer to a processor core, which stores copies of the data from frequently used main memory locations. Most CPUs have a hierarchy of multiple cache levels (L1, L2, often L3, and rarely even L4), with separate instruction-specific and data-specific caches at level 1. Cache memory is typically implemented with static random-access memory (SRAM); in modern CPUs the cache is by far the largest part of the chip by area. SRAM is not always used for every level (of I- or D-cache), or even for any level; sometimes the later levels, or all levels, are implemented with eDRAM. Other types of caches exist (that are not counted towards the "cache size" of the most important caches mentioned above), such as the translation lookaside buffer (TLB) which is part of the memory management unit (MMU) wh ...

Crystal Well

Intel Graphics Technology (GT) is the collective name for a series of integrated graphics processors (IGPs) produced by Intel that are manufactured on the same package or die as the central processing unit (CPU). It was first introduced in 2010 as Intel HD Graphics and renamed in 2017 as Intel UHD Graphics. Intel Iris Graphics and Intel Iris Pro Graphics are the IGP series introduced in 2013 with some models of Haswell processors as the high-performance versions of HD Graphics. Iris Pro Graphics was the first in the series to incorporate embedded DRAM. Since the release of Kaby Lake in 2016, Intel has referred to the technology as Intel Iris Plus Graphics. In the fourth quarter of 2013, Intel integrated graphics represented, in units, 65% of all PC graphics processor shipments. However, this percentage does not represent actual adoption, as a number of these shipped units end up in systems with discrete graphics cards.

History

Before the introduction of Intel HD Graphics, Int ...

Direct Mapped

A CPU cache is a hardware cache use ...