
TOP500

The TOP500 project ranks and details the 500 most powerful non-distributed computer systems in the world. The project was started in 1993 and publishes an updated list of supercomputers twice a year. The first of these updates always coincides with the International Supercomputing Conference in June, and the second is presented at the ACM/IEEE Supercomputing Conference in November. The project aims to provide a reliable basis for tracking and detecting trends in high-performance computing and bases its rankings on HPL, a portable implementation of the high-performance LINPACK benchmark written in Fortran for distributed-memory computers. The most recent edition, the 65th, was published in June 2025; the 66th edition will be published in November 2025. As of June 2025, the United States' El Capitan is the most powerful supercomputer in the TOP500, reaching 1742 petaFlops (1.742 exaFlops) on t ...

Jack Dongarra

Jack Joseph Dongarra (born July 18, 1950) is an American computer scientist and mathematician. He is a University Distinguished Professor Emeritus of Computer Science in the Electrical Engineering and Computer Science Department at the University of Tennessee. He is also a Distinguished Research Staff member in the Computer Science and Mathematics Division at Oak Ridge National Laboratory, holds a Turing Fellowship in the School of Mathematics at the University of Manchester, and is an adjunct professor and teacher in the Computer Science Department at Rice University. He served as a faculty fellow at the Texas A&M University Institute for Advanced Study (2014–2018). Dongarra is the founding director of the Innovative Computing Laboratory at the University of Tennessee. He received the Turing Award in 2021. Education: Dongarra received a BSc degree in mathematics from Chicago State University in 1972 and an MSc degree in Computer Science from the Illinois In ...

University Of Mannheim

The University of Mannheim (German: ''Universität Mannheim''), abbreviated UMA, is a public research university in Mannheim, Baden-Württemberg, Germany. Founded in 1967, the university has its origins in the ''Palatine Academy of Sciences'', which was established by Elector Carl Theodor at Mannheim Palace in 1763, as well as the ''Handelshochschule'' (Commercial College Mannheim), which was founded in 1907. The university offers undergraduate, graduate and doctoral programs in business administration, economics, law, social sciences, humanities, mathematics, computer science and information systems. The university's campus is located in the city center of Mannheim, with its main campus in Mannheim Palace. In the academic year 2020/2021 the university had 11,640 full-time students, 1,600 academic staff, including 194 professors, and a total income of around €121 mill ...

Intel

Intel Corporation is an American multinational corporation and technology company headquartered in Santa Clara, California, and incorporated in Delaware. Intel designs, manufactures, and sells computer components such as central processing units (CPUs) and related products for business and consumer markets. It is one of the world's largest semiconductor chip manufacturers by revenue, and ranked in the ''Fortune'' 500 list of the largest United States corporations by revenue for nearly a decade, from the 2007 to 2016 fiscal years, until it was removed from the ranking in 2018. In 2020, it was reinstated and ranked 45th, making it the 7th-largest technology company in the ranking. It was one of the first companies listed on Nasdaq. Intel supplies List of I ...

Instruction Set Architecture

In computer science, an instruction set architecture (ISA) is an abstract model that generally defines how software controls the CPU in a computer or a family of computers. A device or program that executes instructions described by that ISA, such as a central processing unit (CPU), is called an ''implementation'' of that ISA. In general, an ISA defines the supported instructions, data types, registers, the hardware support for managing main memory, fundamental features (such as memory consistency, addressing modes, and virtual memory), and the input/output model of implementations of the ISA. An ISA specifies the behavior of machine code running on implementations of that ISA in a fashion that does not depend on the characteristics of that implementation, providing binary compatibility between implementations. This enables multiple implementations of an ISA that differ in characteristics such as performance, physical size, and monetary cost (among other things), but t ...

X86-64

x86-64 (also known as x64, x86_64, AMD64, and Intel 64) is a 64-bit extension of the x86 instruction set. It was announced in 1999 and first became available in the AMD Opteron family in 2003. It introduces two new operating modes: 64-bit mode and compatibility mode, along with a new four-level paging mechanism. In 64-bit mode, x86-64 supports significantly larger amounts of virtual memory and physical memory compared to its 32-bit predecessors, allowing programs to utilize more memory for data storage. The architecture expands the number of general-purpose registers from 8 to 16, all fully general-purpose, and extends their width to 64 bits. Floating-point arithmetic is supported through mandatory SSE2 instructions in 64-bit mode. While the older x87 FPU and MMX registers are still available, they are generally superseded by a set of sixteen 128-bit vector registers (XMM registers). Each of these vector registers ...
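The four-level paging mentioned above can be illustrated with a minimal Python sketch. It assumes the common layout for 48-bit virtual addresses with 4 KiB pages, namely four 9-bit table indices followed by a 12-bit page offset; these widths are not stated in the summary above, and the function name `split_virtual_address` is hypothetical.

```python
# Assumed layout (not stated in the summary above): 48-bit virtual address,
# 4 KiB pages, four table levels indexed by 9 bits each.
INDEX_BITS = 9
OFFSET_BITS = 12
LEVELS = 4


def split_virtual_address(addr: int) -> tuple[int, int, int, int, int]:
    """Split the low 48 bits of addr into four table indices and a page offset.

    The indices are returned from the top-level table down to the last level.
    """
    offset = addr & ((1 << OFFSET_BITS) - 1)
    indices = []
    for level in range(LEVELS):
        # Level 0 is the lowest table (bits 12..20); level 3 is the top table.
        shift = OFFSET_BITS + level * INDEX_BITS
        indices.append((addr >> shift) & ((1 << INDEX_BITS) - 1))
    top, third, second, first = reversed(indices)
    return top, third, second, first, offset
```

With 9 bits per index, each table holds 512 entries, and 4 × 9 + 12 accounts for all 48 translated address bits.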

64-bit Computing

In computer architecture, 64-bit integers, memory addresses, or other data units are those that are 64 bits wide. Also, 64-bit central processing units (CPU) and arithmetic logic units (ALU) are those that are based on processor registers, address buses, or data buses of that size. A computer that uses such a processor is a 64-bit computer. From the software perspective, 64-bit computing means the use of machine code with 64-bit virtual memory addresses. However, not all 64-bit instruction sets support full 64-bit virtual memory addresses; x86-64 and AArch64, for example, support only 48 bits of virtual address, with the remaining 16 bits of the virtual address required to be all zeros (000...) or all ones (111...), and several 64-bit instruction sets support fewer than 64 bits of physical memory address. The term ''64-bit'' also describes a generation of computers in which 64-bit processors are the norm. 64 bits is a word size that defines ...
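The 48-bit restriction described above can be checked in a few lines of Python. This is a minimal sketch of the rule exactly as the summary states it (upper 16 bits all zeros or all ones); it does not model any additional constraints a specific architecture may impose, and the function name `upper_bits_ok` is hypothetical.

```python
VA_BITS = 48  # virtual address bits actually used, per the text above


def upper_bits_ok(addr: int, va_bits: int = VA_BITS) -> bool:
    """Return True if the unused upper bits of a 64-bit address are all 0s or all 1s."""
    if not 0 <= addr < (1 << 64):
        raise ValueError("address must fit in 64 bits")
    unused_bits = 64 - va_bits
    upper = addr >> va_bits  # the 16 bits above the usable range
    return upper == 0 or upper == (1 << unused_bits) - 1
```

For example, `0x00007FFFFFFFFFFF` and `0xFFFF800000000000` both pass, while `0x0001000000000000` does not, because its upper 16 bits are neither all zeros nor all ones.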

Processor Families In TOP500 Supercomputers

Processor may refer to:

Computing (hardware):
* Processor (computing)
** Central processing unit (CPU), the hardware within a computer that executes a program
*** Microprocessor, a central processing unit contained on a single integrated circuit (IC)
**** Application-specific instruction set processor (ASIP), a component used in system-on-a-chip design
**** Graphics processing unit (GPU), a processor designed for doing dedicated graphics-rendering computations
**** Physics processing unit (PPU), a dedicated microprocessor designed to handle the calculations of physics
**** Digital signal processor (DSP), a specialized microprocessor designed specifically for digital signal processing
***** Image processor, a specialized DSP used for image processing in digital cameras, mobile phones or other devices
**** Neural processing unit (NPU), a class of specialized hardware accelerator or computer system designed to accelerate artificial intelligence and machine learning applications, inc ...

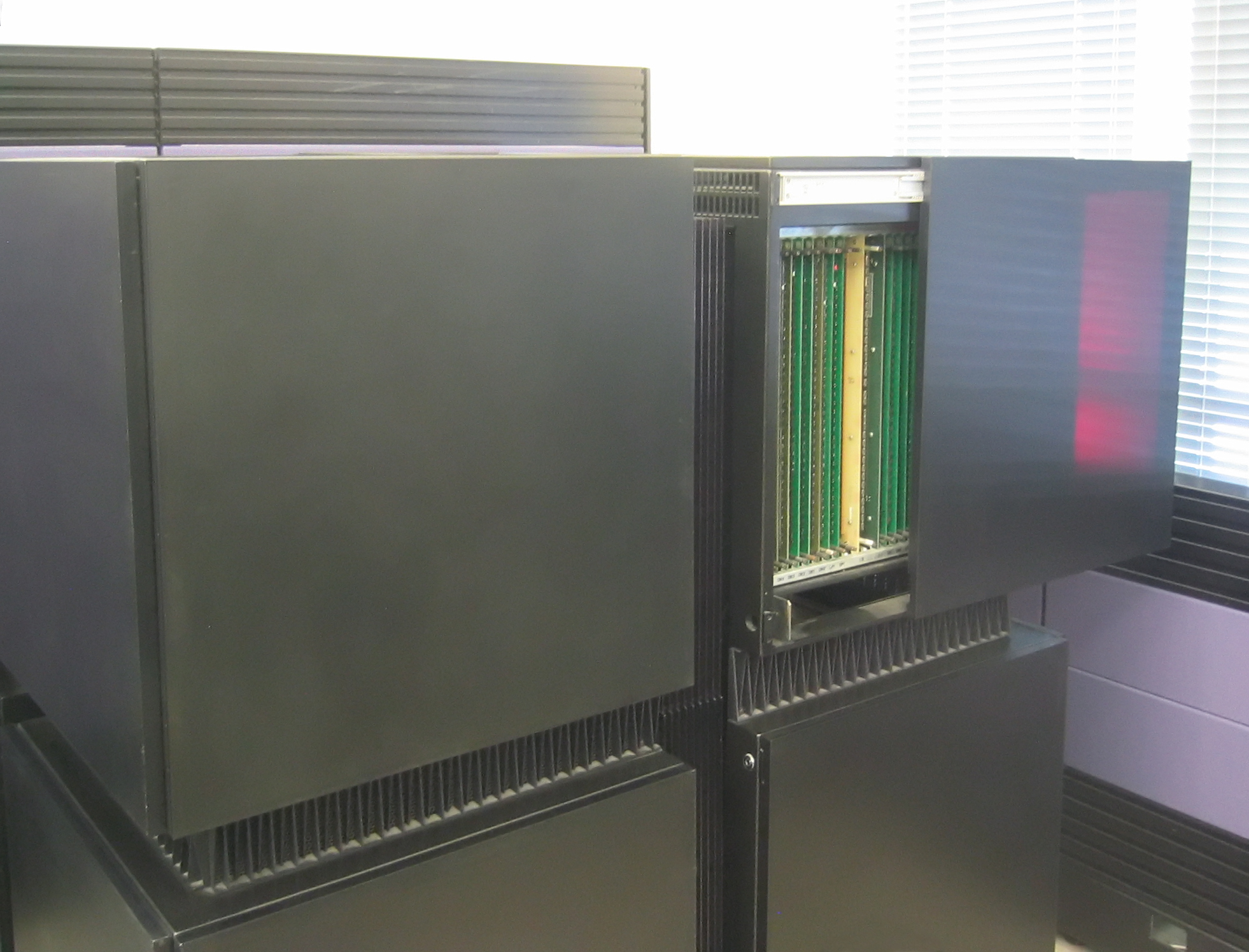

Connection Machine

The Connection Machine (CM) is a member of a series of massively parallel supercomputers sold by Thinking Machines Corporation. The idea for the Connection Machine grew out of doctoral research on alternatives to the traditional von Neumann architecture of computers by Danny Hillis at the Massachusetts Institute of Technology (MIT) in the early 1980s. Starting with CM-1, the machines were intended originally for applications in artificial intelligence (AI) and symbolic processing, but later versions found greater success in the field of computational science. Origin of idea: Danny Hillis and Sheryl Handler founded Thinking Machines Corporation (TMC) in Waltham, Massachusetts, in 1983, moving in 1984 to Cambridge, MA. At TMC, Hillis assembled a team to develop what would become the CM-1 Connection Machine, a design for a massively parallel hypercube-based arrangement of thousands of microprocessors, springing from his PhD thesis work at MIT in Electric ...

FLOPS

Floating point operations per second (FLOPS, flops or flop/s) is a measure of computer performance, useful in fields of scientific computation that require floating-point calculations. For such cases, it is a more accurate measure than instructions per second.

Floating-point arithmetic is needed for very large or very small real numbers, or computations that require a large dynamic range. Floating-point representation is similar to scientific notation, except computers use base two (with rare exceptions), rather than base ten. The encoding scheme stores the sign, the exponent (in base two for Cray and VAX, base two or ten for IEEE floating point formats, and base 16 for IBM Floating Point Architecture) and the significand (number after the radix point). While several similar formats are in use, the most common is ANSI/IEEE Std. 754-1985. This standard defines the format for 32-bit numbers called ''single precision'', a ...
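The sign, exponent and significand fields described above can be inspected with a short Python sketch. It assumes the IEEE 754 single-precision layout of 1 sign bit, 8 exponent bits and 23 stored significand bits, which the truncated summary does not spell out; the function name `decode_float32` is hypothetical.

```python
import struct


def decode_float32(x: float) -> tuple[int, int, int]:
    """Return (sign, biased exponent, stored fraction) of x as IEEE 754 single precision."""
    # Pack as a big-endian 32-bit float, then reinterpret the bytes as an unsigned integer.
    (bits,) = struct.unpack(">I", struct.pack(">f", x))
    sign = bits >> 31                # 1 bit
    exponent = (bits >> 23) & 0xFF   # 8 bits, stored with a bias of 127
    fraction = bits & 0x7FFFFF       # 23 bits after the radix point
    return sign, exponent, fraction
```

For instance, `decode_float32(1.0)` yields a sign of 0, a biased exponent of 127 (that is, 2 to the power 0) and a zero fraction, since the leading 1 of a normalized significand is implicit rather than stored.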

Summit (supercomputer)

Summit or OLCF-4 was a supercomputer developed by IBM for use at Oak Ridge Leadership Computing Facility (OLCF), a facility at the Oak Ridge National Laboratory, United States of America. It held the number 1 position on the TOP500 list from June 2018 to June 2020. As of June 2024, its LINPACK benchmark was clocked at 148.6 petaFLOPS. Summit was decommissioned on November 15, 2024. As of November 2019, the supercomputer ranked as the 5th most energy-efficient in the world, with a measured power efficiency of 14.668 gigaFLOPS/watt. Summit was the first supercomputer to reach exaflop (a quintillion operations per second) speed, on a non-standard metric, achieving 1.88 exaflops during a genomic analysis, and was expected to reach 3.3 exaflops using mixed-precision calculations. History: The United States Department of Energy awarded a $325 million contract in November 2014 to IBM, Nvidia and Mellanox. The effort resulted in construction of Summit ...

Moore's Law

Moore's law is the observation that the number of transistors in an integrated circuit (IC) doubles about every two years. Moore's law is an observation and projection of a historical trend. Rather than a law of physics, it is an empirical relationship. It is an experience-curve law, a type of law quantifying efficiency gains from experience in production. The observation is named after Gordon Moore, the co-founder of Fairchild Semiconductor and Intel and former CEO of the latter, who in 1965 noted that the number of components per integrated circuit had been doubling every year, and projected this rate of growth would continue for at least another decade. In 1975, looking forward to the next decade, he revised the forecast to doubling every two years, a compound annual growth rate (CAGR) of 41%. Moore's empirical evidence did not directly imply that the historical trend would continue; nevertheless, his prediction has held si ...
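The 41% figure follows directly from the doubling period: a quantity that doubles every n years grows by a factor of 2^(1/n) per year. A minimal Python sketch of that arithmetic is given here; the function names are hypothetical and chosen only for illustration.

```python
def cagr_from_doubling(years_to_double: float) -> float:
    """Compound annual growth rate implied by doubling every `years_to_double` years."""
    return 2 ** (1 / years_to_double) - 1


def growth_factor(years: float, years_to_double: float) -> float:
    """Total multiplication of the quantity after `years` at the given doubling period."""
    return 2 ** (years / years_to_double)
```

Doubling every two years gives `cagr_from_doubling(2)`, about 0.414, which rounds to the 41% quoted above, while the original 1965 rate of doubling every year corresponds to 100% annual growth. Over a decade, `growth_factor(10, 2)` is a factor of 32.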