T5 (language Model)

T5 (Text-to-Text Transfer Transformer) is a series of large language models developed by Google AI and introduced in 2019. Like the original Transformer model, T5 models are encoder-decoder Transformers, where the encoder processes the input text and the decoder generates the output text. T5 models are usually pretrained on a massive dataset of text and code, after which they can perform text-based tasks similar to their pretraining tasks. They can also be fine-tuned to perform other tasks. T5 models have been employed in various applications, including chatbots, machine translation systems, text summarization tools, code generation, and robotics.

Training: The original T5 models are pretrained on the Colossal Clean Crawled Corpus (C4), containing text and code scraped from the internet. This pretraining process enables the models to learn general language understanding and generation abiliti ...

Google AI

Google AI is a division of Google dedicated to artificial intelligence. It was announced at Google I/O 2017 by CEO Sundar Pichai.

Projects:

* Serving cloud-based TPUs (tensor processing units) in order to develop machine learning software.
* Development of TensorFlow.
* The TPU Research Cloud, which provides free access to a cluster of cloud TPUs to researchers engaged in open-source machine learning research.
* A portal to over 5,500 (as of September 2019) research publications by Google staff.
* Magenta: a deep learning research team exploring the role of machine learning as a tool in the creative process. The team has released many open-source projects allowing artists and musicians to extend their processes using AI.
* Sycamore: a new 54-qubit programmable quantum processor.
* LaMDA: a family of conversational neural language models.

Mixture Of Experts

Mixture of experts (MoE) refers to a machine learning technique where multiple expert networks (learners) are used to divide a problem space into homogeneous regions. It differs from ensemble techniques in that typically only one or a few expert models are run for a given input, rather than combining results from all models. An example from computer vision is combining one neural network model for human detection with another for pose estimation.

Hierarchical mixture: If the output is conditioned on multiple levels of (probabilistic) gating functions, the mixture is called a hierarchical mixture of experts. A gating network decides which expert to use for each input region. Learning thus consists of learning the parameters of the individual learners and of the gating network.

Applications: Meta ...
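The routing idea in the mixture-of-experts entry (a gating network picks which expert handles each input region, and only that expert runs) can be sketched in a few lines. This is a minimal illustration, not a trained model: the two experts, the hand-written gate, and the `sharpness` parameter are all hypothetical, and in practice both the experts and the gate would have learned parameters.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Two hypothetical experts, each intended for one region of the input space.
def expert_negative(x):
    return -x          # meant for inputs x < 0

def expert_positive(x):
    return 2.0 * x     # meant for inputs x > 0

EXPERTS = [expert_negative, expert_positive]

def gate(x, sharpness=5.0):
    """Hypothetical gating network: one probability per expert, given x."""
    return softmax([-sharpness * x, sharpness * x])

def moe_predict(x):
    """Top-1 routing: evaluate only the expert the gate prefers."""
    probs = gate(x)
    chosen = max(range(len(EXPERTS)), key=lambda i: probs[i])
    return chosen, EXPERTS[chosen](x)

if __name__ == "__main__":
    for x in (-3.0, 2.0):
        index, y = moe_predict(x)
        print(f"x={x}: routed to expert {index}, output {y}")
```

Unlike an ensemble, `moe_predict` never calls the expert that was not selected, which is where the compute saving comes from.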

Large Language Models

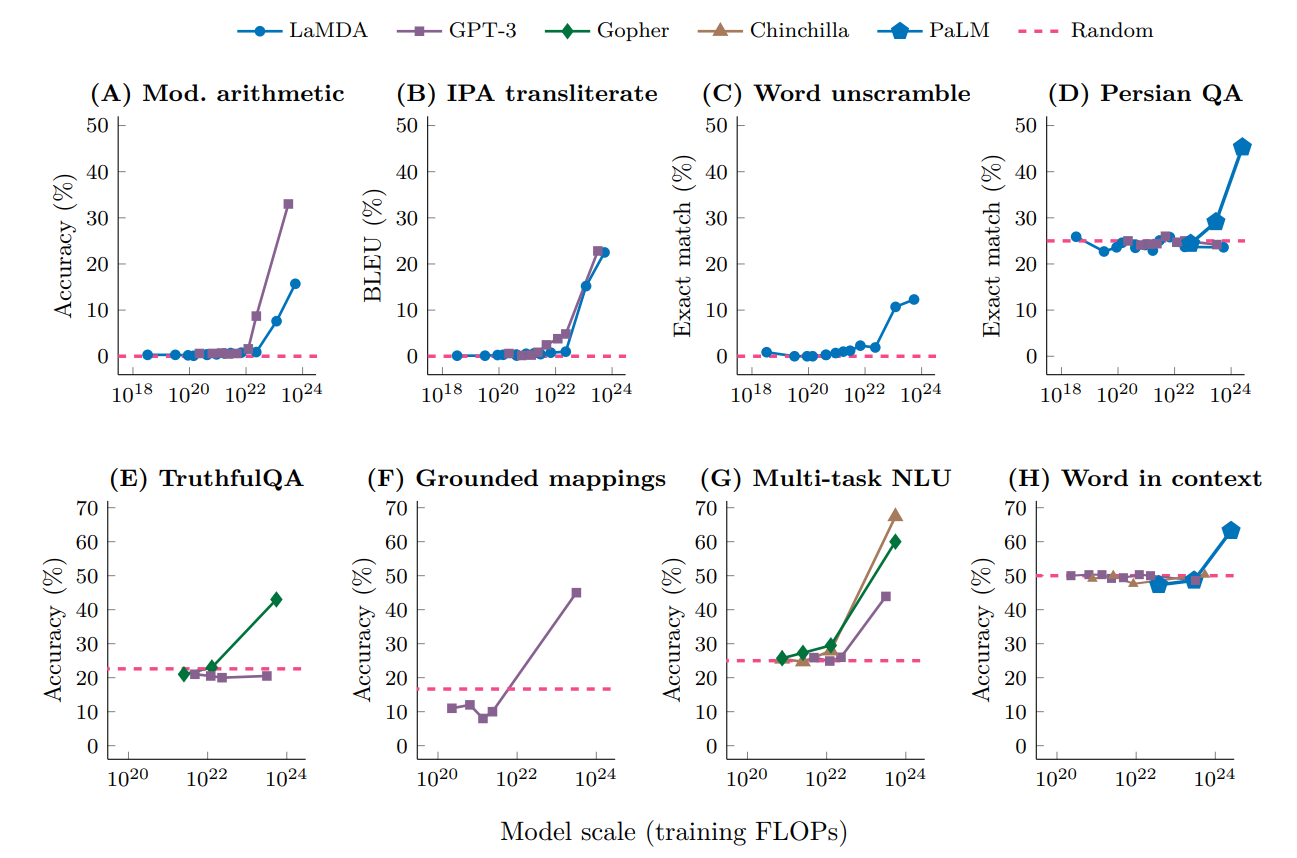

A large language model (LLM) is a language model consisting of a neural network with many parameters (typically billions of weights or more), trained on large quantities of unlabelled text using self-supervised learning. LLMs emerged around 2018 and perform well at a wide variety of tasks. This has shifted the focus of natural language processing research away from the previous paradigm of training specialized supervised models for specific tasks.

Properties: Though the term "large language model" has no formal definition, it often refers to deep learning models having a parameter count on the order of billions or more. LLMs are general-purpose models which excel at a wide range of tasks, as opposed to being trained for one specific task (such as sentiment analysis, named entity recognition, or mathematical reasoning). The skill with which they accomplish tasks, and the range of tasks of which they are capable, seem to be a function of the amount of resources (data, parameter-si ...

Google Software

Google LLC is an American multinational technology company focusing on search engine technology, online advertising, cloud computing, computer software, quantum computing, e-commerce, artificial intelligence, and consumer electronics. It has been referred to as "the most powerful company in the world" and one of the world's most valuable brands due to its market dominance, data collection, and technological advantages in the area of artificial intelligence. Its parent company Alphabet is considered one of the Big Five American information technology companies, alongside Amazon, Apple, Meta, and Microsoft. Google was founded on September 4, 1998, by Larry Page and Sergey Brin while they were PhD students at Stanford University in California. Together they own about 14% of its publicl ...

Diffusion Model

In machine learning, diffusion models, also known as diffusion probabilistic models, are a class of latent variable models. They are Markov chains trained using variational inference. The goal of diffusion models is to learn the latent structure of a dataset by modeling the way in which data points diffuse through the latent space. In computer vision, this means that a neural network is trained to denoise images blurred with Gaussian noise by learning to reverse the diffusion process. Three examples of generic diffusion modeling frameworks used in computer vision are denoising diffusion probabilistic models, noise conditioned score networks, and stochastic differential equations. Diffusion models were introduced in 2015 with a motivation from non-equilibrium thermodynamics. Diffusion models can be applied to a variety of tasks, including image denoising, inpainting, super-resolution, and image generation. For example, an image generation model would start with a random noise image ...
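To make the "diffusion process" in the entry above concrete, here is a minimal sketch of the forward (noising) direction only, which is the process a denoising network would be trained to reverse. It assumes the variance-preserving update used by denoising diffusion probabilistic models, x_t = sqrt(1 - beta_t) * x_{t-1} + sqrt(beta_t) * noise; the function name, the toy two-value "image", and the constant noise schedule are illustrative assumptions, not taken from the entry.

```python
import math
import random

def forward_diffusion(x0, betas, rng):
    """Return [x_0, x_1, ..., x_T], adding Gaussian noise at each step.

    Assumed update (DDPM-style, variance preserving):
        x_t = sqrt(1 - beta_t) * x_{t-1} + sqrt(beta_t) * eps,  eps ~ N(0, 1)
    """
    trajectory = [list(x0)]
    x = list(x0)
    for beta in betas:
        x = [
            math.sqrt(1.0 - beta) * xi + math.sqrt(beta) * rng.gauss(0.0, 1.0)
            for xi in x
        ]
        trajectory.append(x)
    return trajectory

if __name__ == "__main__":
    rng = random.Random(0)            # seeded for reproducibility
    betas = [0.05] * 100              # hypothetical constant noise schedule
    steps = forward_diffusion([1.0, -1.0], betas, rng)
    print("start:", steps[0])
    print("after", len(betas), "steps:", steps[-1])
```

After enough steps the sample is close to pure Gaussian noise regardless of the starting point; generation runs the learned reverse process starting from such noise.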

The Pile (dataset)

The Pile is an 886.03 GB diverse, open-source dataset of English text created as a training dataset for large language models (LLMs). It was constructed by EleutherAI in 2020 and publicly released on December 31 of that year. It is composed of 22 smaller datasets, including 14 new ones.

Creation: Training LLMs requires such vast amounts of data that, before the introduction of the Pile, most data used for training LLMs was taken from the Common Crawl. However, LLMs trained on more diverse datasets are better able to handle a wider range of situations after training. The creation of the Pile was motivated by the need for a large enough dataset that contained data from a wide variety of sources and styles of writing. Compared to other datasets, the Pile's main distinguishing features are that it is a curated selection of data chosen by researchers at EleutherAI to contain information they thought language models should learn and that it is the only such dataset that is thoro ...

Llama (language Model)

Llama (Large Language Model Meta AI, formerly stylized as LLaMA) is a family of autoregressive large language models (LLMs) released by Meta AI starting in February 2023. The latest version is Llama 3.3, released in December 2024. Llama models are trained at different parameter sizes, ranging between 1B and 405B. Originally, Llama was only available as a foundation model. Starting with Llama 2, Meta AI started releasing instruction fine-tuned versions alongside foundation models. Model weights for the first version of Llama were made available to the research community under a non-commercial license, and access was granted on a case-by-case basis. Unauthorized copies of the first model were shared via BitTorrent. Subsequent versions of Llama were made accessible outside academia and released under licenses that permitted some commercial use. Alongside the release of Llama 3, Meta added virtual assistant features to Facebook and WhatsApp in select regions, and a standalone webs ...

TensorFlow

TensorFlow is a free and open-source software library for machine learning and artificial intelligence. It can be used across a range of tasks but has a particular focus on training and inference of deep neural networks. "It is machine learning software being used for various kinds of perceptual and language understanding tasks" (Jeffrey Dean, minute 0:47 / 2:17 from YouTube clip). TensorFlow was developed by the Google Brain team for internal Google use in research and production. The initial version was released under the Apache License 2.0 in 2015. Google released the updated version of TensorFlow, named TensorFlow 2.0, in September 2019. TensorFlow can be used in a wide variety of programming languages, including Python, JavaScript, C++, and Java. This flexibility lends itself to a range of applications in many different sectors.

History (DistBelief): Starting in 2011, Google Brain built DistBelief as a proprietary machine learning system based on deep learning neur ...

Google JAX

Google JAX is a machine learning framework for transforming numerical functions. It is described as bringing together a modified version of autograd (automatic obtaining of the gradient function through differentiation of a function) and TensorFlow's XLA (Accelerated Linear Algebra). It is designed to follow the structure and workflow of NumPy as closely as possible and works with various existing frameworks such as TensorFlow and PyTorch. The primary functions of JAX are:

1. grad: automatic differentiation
2. jit: compilation
3. vmap: auto-vectorization
4. pmap: SPMD programming

grad: The code below demonstrates the grad function's automatic differentiation.

```python
# imports
from jax import grad
import jax.numpy as jnp

# define the logistic function
def logistic(x):
    return jnp.exp(x) / (jnp.exp(x) + 1)

# obtain the gradient function of the logistic function
grad_logistic = grad(logistic)

# evaluate the gradient of the logistic function at x = 1
grad_log_out = grad_logistic(1.0)
```

...
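Two of the other transformations named in the JAX entry, jit and vmap, compose with grad. The sketch below reuses the entry's logistic function and is illustrative only; the variable names `fast_grad`, `batched_grad`, and `xs` are assumptions for the example, and pmap is omitted because it needs multiple devices.

```python
import jax
import jax.numpy as jnp

def logistic(x):
    return jnp.exp(x) / (jnp.exp(x) + 1)

# grad: derivative of a scalar-valued function of a scalar
grad_logistic = jax.grad(logistic)

# jit: compile the gradient function with XLA; same results, compiled execution
fast_grad = jax.jit(grad_logistic)

# vmap: map the scalar gradient function over the leading axis of an array
batched_grad = jax.vmap(grad_logistic)

xs = jnp.array([-1.0, 0.0, 1.0])
slopes = batched_grad(xs)

if __name__ == "__main__":
    print(fast_grad(0.0))   # slope of the logistic curve at its midpoint
    print(slopes)           # one slope per entry of xs
```

Because grad is defined for scalar outputs, vmap is the usual way to get per-element derivatives over a batch without writing an explicit loop.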

Instruction Tuning

A large language model (LLM) is a language model consisting of a neural network with many parameters (typically billions of weights or more), trained on large quantities of unlabelled text using self-supervised learning. LLMs emerged around 2018 and perform well at a wide variety of tasks. This has shifted the focus of natural language processing research away from the previous paradigm of training specialized supervised models for specific tasks.

Properties: Though the term "large language model" has no formal definition, it often refers to deep learning models having a parameter count on the order of billions or more. LLMs are general-purpose models which excel at a wide range of tasks, as opposed to being trained for one specific task (such as sentiment analysis, named entity recognition, or mathematical reasoning). The skill with which they accomplish tasks, and the range of tasks of which they are capable, seem to be a function of the amount of resources (data, parameter-si ...

UTF-8

UTF-8 is a variable-length character encoding used for electronic communication. Defined by the Unicode Standard, the name is derived from Unicode (or Universal Coded Character Set) Transformation Format 8-bit. UTF-8 is capable of encoding all 1,112,064 valid character code points in Unicode using one to four one-byte (8-bit) code units. Code points with lower numerical values, which tend to occur more frequently, are encoded using fewer bytes. It was designed for backward compatibility with ASCII: the first 128 characters of Unicode, which correspond one-to-one with ASCII, are encoded using a single byte with the same binary value as ASCII, so that valid ASCII text is valid UTF-8-encoded Unicode as well. UTF-8 was designed as a superior alternative to UTF-1, a proposed variable-length encoding with partial ASCII compatibility which lacked some features including self-synchronization and fully ASCII-compatible handling of characters such as slashes. Ken Thompson ...
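The variable-length property described in the UTF-8 entry is easy to check with Python's built-in `str.encode`. The sample characters below are chosen for illustration, and the helper name `utf8_length` is hypothetical. The last lines reproduce the 1,112,064 figure as the full code point range up to U+10FFFF minus the 2,048 surrogate code points (U+D800 to U+DFFF).

```python
def utf8_length(ch):
    """Number of bytes (8-bit code units) UTF-8 uses for one character."""
    return len(ch.encode("utf-8"))

# Escapes keep this source file pure ASCII.
samples = {
    "A": "Latin capital A (ASCII)",
    "\u00e9": "e with acute accent",
    "\u20ac": "euro sign",
    "\U0001F600": "emoji",
}

if __name__ == "__main__":
    for ch, label in samples.items():
        encoded = ch.encode("utf-8")
        print(f"U+{ord(ch):04X} {label}: {len(encoded)} byte(s), hex {encoded.hex(' ')}")

# ASCII text is byte-for-byte identical when encoded as UTF-8.
ascii_text = "plain ASCII"
same_bytes = ascii_text.encode("ascii") == ascii_text.encode("utf-8")

# All code points up to U+10FFFF, minus the surrogate range U+D800..U+DFFF.
valid_code_points = 0x110000 - (0xDFFF - 0xD800 + 1)
```

Lower code points need fewer bytes: the four samples take one, two, three, and four bytes respectively.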

Zero-shot Learning

Zero-shot learning (ZSL) is a problem setup in machine learning where, at test time, a learner observes samples from classes which were not observed during training, and needs to predict the class that they belong to. Zero-shot methods generally work by associating observed and non-observed classes through some form of auxiliary information, which encodes observable distinguishing properties of objects. For example, given a set of images of animals to be classified, along with auxiliary textual descriptions of what animals look like, an artificial intelligence model which has been trained to recognize horses, but has never been given a zebra, can still recognize a zebra when it also knows that zebras look like striped horses. This problem is widely studied in computer vision, natural language processing, and machine perception.

Background and history: The first paper on zero-shot learning in natural language processing appeared in 2008 at AAAI'08, but the name given to the ...
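The zebra example in the zero-shot learning entry can be sketched as attribute matching: each class, seen or unseen, is described by auxiliary attributes, and a sample is assigned to the class whose description best matches the attributes detected in it. Everything here is a toy assumption: the attribute names, the extra "tiger" class, and the premise that some upstream model (trained only on seen classes) has already produced the observed attributes.

```python
# Auxiliary information: attribute descriptions for every class,
# including classes with no training examples (here: zebra).
CLASS_DESCRIPTIONS = {
    "horse": {"horse_shaped": 1, "striped": 0},
    "zebra": {"horse_shaped": 1, "striped": 1},   # unseen during training
    "tiger": {"horse_shaped": 0, "striped": 1},   # hypothetical extra class
}

def classify(observed):
    """Return the class whose description disagrees least with `observed`."""
    def mismatches(description):
        return sum(
            1 for name, value in description.items()
            if observed.get(name) != value
        )
    return min(
        CLASS_DESCRIPTIONS,
        key=lambda label: mismatches(CLASS_DESCRIPTIONS[label]),
    )

if __name__ == "__main__":
    # Attributes an upstream detector might report for a zebra photo.
    print(classify({"horse_shaped": 1, "striped": 1}))
```

The classifier never needs a labelled zebra image; it only needs the description "striped and horse-shaped" plus detectors for those attributes.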