|

Standardized Testing (statistics)

A ''Z''-test is any statistical test for which the distribution of the test statistic under the null hypothesis can be approximated by a normal distribution. ''Z''-tests test the mean of a distribution. For each significance level, the ''Z''-test has a single critical value (for example, 1.96 for 5% two-tailed), which makes it more convenient than the Student's ''t''-test, whose critical values depend on the sample size (through the corresponding degrees of freedom). The ''Z''-test and Student's ''t''-test are similar in that both help determine the significance of a set of data. However, the ''Z''-test is rarely used in practice because the population standard deviation is difficult to determine. Applicability: Because of the central limit theorem, many test statistics are approximately normally distributed for large samples. Therefore, many statistical tests can be conveniently performed as approximate ''Z''-tests if the sample size is l ... |
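As a rough illustration of the single critical value mentioned above, the following is a minimal sketch of a one-sample, two-tailed ''Z''-test. It assumes the population standard deviation is known; the function name `z_test_two_tailed` and the example numbers are hypothetical and chosen only for illustration.

```python
import math


def z_test_two_tailed(sample_mean, mu0, sigma, n):
    """Return (z, p) for a one-sample, two-tailed Z-test.

    Assumes sigma is the known population standard deviation
    (an assumption of this sketch, not something estimated here).
    """
    se = sigma / math.sqrt(n)            # standard error of the mean
    z = (sample_mean - mu0) / se         # standardized test statistic
    # For standard normal Z: P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2))
    return z, p


# Hypothetical data: n = 100 observations, sample mean 102, H0 mean 100.
z, p = z_test_two_tailed(sample_mean=102.0, mu0=100.0, sigma=10.0, n=100)
print(f"z = {z:.3f}, two-tailed p = {p:.4f}")
print("reject H0 at 5%:", abs(z) > 1.96)
```

Comparing `abs(z)` with 1.96 and comparing `p` with 0.05 lead to the same decision, which is why one critical value per significance level suffices.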

|

Graphics Pipeline

The computer graphics pipeline, also known as the rendering pipeline or graphics pipeline, is a framework within computer graphics that outlines the necessary procedures for transforming a three-dimensional (3D) scene into a two-dimensional (2D) representation on a screen. Once a 3D model is generated, the graphics pipeline converts the model into a visually perceivable format on the computer display. Because it depends on specific software, hardware configurations, and desired display attributes, a universally applicable graphics pipeline does not exist. Nevertheless, graphics application programming interfaces (APIs), such as Direct3D, OpenGL and Vulkan, were developed to standardize common procedures and oversee the graphics pipeline of a given hardware accelerator. These APIs provide an abstraction layer over the underlying hardware, relieving programmers from the need to write code explicitly targeting various graphics hardware accelerators like AMD, Intel, Nvidia, a ... |

|

|

P-values

In null-hypothesis significance testing, the ''p''-value is the probability of obtaining test results at least as extreme as the result actually observed, under the assumption that the null hypothesis is correct. A very small ''p''-value means that such an extreme observed outcome would be very unlikely ''under the null hypothesis''. Even though reporting ''p''-values of statistical tests is common practice in academic publications of many quantitative fields, misinterpretation and misuse of ''p''-values are widespread and have been a major topic in mathematics and metascience. In 2016, the American Statistical Association (ASA) made a formal statement that "''p''-values do not measure the probability that the studied hypothesis is true, or the probability that the data were produced by random chance alone" and that "a ''p''-value, or statistical significance, does not measure the size of an effect or the importance of a result" or "evidence regarding a model or hypothesis". That ... |

|

Effect Size

In statistics, an effect size is a value measuring the strength of the relationship between two variables in a population, or a sample-based estimate of that quantity. It can refer to the value of a statistic calculated from a sample of data, the value of one parameter for a hypothetical population, or to the equation that operationalizes how statistics or parameters lead to the effect size value. Examples of effect sizes include the correlation between two variables, the regression coefficient in a regression, the mean difference, or the risk of a particular event (such as a heart attack) happening. Effect sizes are a complementary tool for statistical hypothesis testing, and play an important role in power analyses to assess the sample size required for new experiments. Effect sizes are fundamental in meta-analyses, which aim to provide the combined effect size based on data from multiple studies. The cluster of data-analysis methods concerning effect sizes is referred to as estima ... |

|

|

One-tailed

In statistical significance testing, a one-tailed test and a two-tailed test are alternative ways of computing the statistical significance of a parameter inferred from a data set, in terms of a test statistic. A two-tailed test is appropriate if the estimated value may be greater or less than a certain range of values, for example, whether a test taker may score above or below a specific range of scores. This method is used for null hypothesis testing: if the estimated value falls in the critical regions, the alternative hypothesis is accepted over the null hypothesis. A one-tailed test is appropriate if the estimated value may depart from the reference value in only one direction, left or right, but not both. An example is testing whether a machine produces more than one percent defective products. In this situation, if the estimated value falls in one of the one-sided critical regions, depending on the direction of interest (greater than or less than), the alternative hypothesis is ... |
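The difference between the two kinds of test can be sketched numerically. The snippet below assumes a test statistic that is standard normal under the null hypothesis; the helper names `normal_sf` and `tail_p_values` are hypothetical.

```python
import math


def normal_sf(z):
    """Upper-tail probability P(Z >= z) for a standard normal Z."""
    return 0.5 * math.erfc(z / math.sqrt(2))


def tail_p_values(z):
    """Return one-tailed and two-tailed p-values for an observed statistic z."""
    return {
        "greater": normal_sf(z),               # H1: parameter above the reference value
        "less": normal_sf(-z),                 # H1: parameter below the reference value
        "two_sided": 2.0 * normal_sf(abs(z)),  # H1: parameter differs in either direction
    }


# Hypothetical observed statistic.
for name, p in tail_p_values(1.8).items():
    print(f"{name:>9}: p = {p:.4f}")
```

For the same observed statistic, the two-sided p-value is twice the smaller of the two one-sided values, so a result can be significant in a one-tailed test and not in a two-tailed test at the same level.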

|

|

Standard Error (statistics)

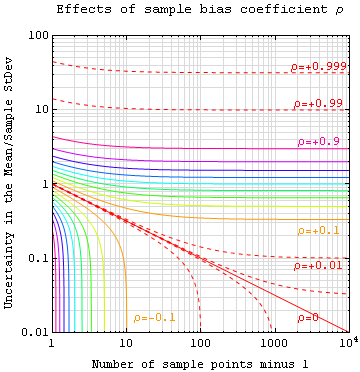

The standard error (SE) of a statistic (usually an estimator of a parameter, like the average or mean) is the standard deviation of its sampling distribution or an estimate of that standard deviation. In other words, it is the standard deviation of the statistic's values across samples (one value per sample, where each sample is a set of observations drawn from the same population). If the statistic is the sample mean, it is called the standard error of the mean (SEM). The standard error is a key ingredient in producing confidence intervals. The sampling distribution of a mean is generated by repeated sampling from the same population and recording the sample mean of each sample. This forms a distribution of different means, and this distribution has its own mean and variance. Mathematically, the variance of the sampling distribution of the mean is equal to the variance of the population divided by the sample size. This is because as the sample size increases, sample means cluster more closely aro ... |
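The relationship between the population variance and the variance of the sample mean can be checked with a small simulation. This is a sketch under stated assumptions: the population is taken to be normal with mean 0, and the function names, sample size, and replicate count are hypothetical choices.

```python
import math
import random
import statistics


def standard_error_of_mean(sample):
    """Estimate the SEM as s / sqrt(n), with s the sample standard deviation."""
    return statistics.stdev(sample) / math.sqrt(len(sample))


def simulate_sample_means(sigma, n, replicates, seed=0):
    """Draw `replicates` samples of size n from N(0, sigma^2); return their means."""
    rng = random.Random(seed)
    return [
        statistics.fmean([rng.gauss(0.0, sigma) for _ in range(n)])
        for _ in range(replicates)
    ]


sigma, n = 2.0, 25
means = simulate_sample_means(sigma, n, replicates=5000)
# The spread of the simulated means should be close to sigma / sqrt(n).
print("empirical SD of sample means:", statistics.pstdev(means))
print("theoretical sigma / sqrt(n): ", sigma / math.sqrt(n))
```

In practice only one sample is available, so `standard_error_of_mean` estimates the same quantity from that single sample.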

|

Sampling (statistics)

In statistics, quality assurance, and survey methodology, sampling is the selection of a subset or a statistical sample (termed sample for short) of individuals from within a statistical population to estimate characteristics of the whole population. The subset is meant to reflect the whole population, and statisticians attempt to collect samples that are representative of the population. Sampling has lower costs and faster data collection compared to recording data from the entire population (in many cases, collecting the whole population is impossible, like getting sizes of all stars in the universe), and thus, it can provide insights in cases where it is infeasible to measure an entire population. Each observation measures one or more properties (such as weight, location, colour or mass) of independent objects or individuals. In survey sampling, weights can be applied to the data to adjust for the sample design, particularly in stratified samplin ... |

|

|

Consistent Estimator

In statistics, a consistent estimator or asymptotically consistent estimator is an estimator (a rule for computing estimates of a parameter ''θ''₀) having the property that as the number of data points used increases indefinitely, the resulting sequence of estimates converges in probability to ''θ''₀. This means that the distributions of the estimates become more and more concentrated near the true value of the parameter being estimated, so that the probability of the estimator being arbitrarily close to ''θ''₀ converges to one. In practice one constructs an estimator as a function of an available sample of size ''n'', and then imagines being able to keep collecting data and expanding the sample ''ad infinitum''. In this way one would obtain a sequence of estimates indexed by ''n'', and consistency is a property of what occurs as the sample size "grows to infinity". If the sequence of estimates can be mathematically shown to converge in probability to the true value '' ... |
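Convergence in probability can be made concrete with a simulation. The sketch below uses the sample mean as the estimator and assumes normally distributed data with unit variance; the function name `miss_rate`, the tolerance `eps`, and the sample sizes are hypothetical.

```python
import random
import statistics


def miss_rate(n, theta0=1.0, eps=0.1, replicates=500, seed=0):
    """Fraction of replicates in which the sample mean of n draws from
    N(theta0, 1) lands farther than eps from theta0."""
    rng = random.Random(seed)
    misses = 0
    for _ in range(replicates):
        estimate = statistics.fmean([rng.gauss(theta0, 1.0) for _ in range(n)])
        if abs(estimate - theta0) > eps:
            misses += 1
    return misses / replicates


# For a consistent estimator, this fraction should shrink toward 0 as n grows.
for n in (10, 100, 1000):
    print(f"n = {n:>4}: estimated P(|estimate - theta0| > eps) = {miss_rate(n):.3f}")
```

A simulation like this only suggests consistency for one data model; it does not replace a mathematical proof of convergence.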

|

|

Slutsky's Theorem

In probability theory, Slutsky's theorem extends some properties of algebraic operations on convergent sequences of real numbers to sequences of random variables. The theorem was named after Eugen Slutsky. Slutsky's theorem is also attributed to Harald Cramér. Statement: Let X_n, Y_n be sequences of scalar/vector/matrix random elements. If X_n converges in distribution to a random element X and Y_n converges in probability to a constant c, then X_n + Y_n \xrightarrow{d} X + c; X_n Y_n \xrightarrow{d} Xc; and X_n / Y_n \xrightarrow{d} X/c, provided that c is invertible, where \xrightarrow{d} denotes convergence in distribution. Notes: The requirement that Y_n converges to a constant is important: if it were to converge to a non-degenerate random variable, the theorem would no longer be valid. For example, let X_n \sim \mathrm{Uniform}(0,1) and Y_n = -X_n. The sum X_n + Y_n = 0 for all values of n. Moreover, Y_n \xrightarrow{d} \mathrm{Uniform}(-1,0), but X_n + Y_n does not converge ... |

|

|

Nuisance Parameter

In statistics, a nuisance parameter is any parameter which is unspecified but which must be accounted for in the hypothesis testing of the parameters which are of interest. The classic example of a nuisance parameter comes from the normal distribution, a member of the location–scale family. For at least one normal distribution, the variance(s), ''σ''², is often not specified or known, but one desires to test a hypothesis about the mean(s). Another example might be linear regression with unknown variance in the explanatory variable (the independent variable): its variance is a nuisance parameter that must be accounted for to derive an accurate interval estimate of the regression slope, calculate ''p''-values, or test a hypothesis about the slope's value; see regression dilution. Nuisance parameters are often scale parameters, but not always; for example in errors-in-variables models, the unknown true location of each observation is a nuisance parameter. A parameter may also cease to be a " ... |

|

|

Paired Difference Test

A paired difference test, better known as a paired comparison, is a type of location test that is used when comparing two sets of paired measurements to assess whether their population means differ. A paired difference test is designed for situations where there is dependence between pairs of measurements (in which case a test designed for comparing two independent samples would not be appropriate). That applies in a within-subjects study design, i.e., in a study where the same set of subjects undergo both of the conditions being compared. Specific methods for carrying out paired difference tests include the paired-samples ''t''-test, the paired ''Z''-test, the Wilcoxon signed-rank test and others. Use in reducing variance: Paired difference tests for reducing variance are a specific type of blocking. To illustrate the idea, suppose we are assessing the performance of a drug for treating high cholesterol. Under the design of our stu ... |
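A paired-samples ''t'' statistic can be sketched by reducing each pair to a single difference and testing whether the mean difference is zero. The cholesterol readings below are invented for illustration, and the function name `paired_t_statistic` is hypothetical.

```python
import math
import statistics


def paired_t_statistic(before, after):
    """Return (t, degrees_of_freedom) for a paired-samples t-test of
    H0: mean(after - before) = 0.

    Assumes the two lists are aligned by subject and that the
    differences are not all identical (otherwise the SE is zero).
    """
    if len(before) != len(after):
        raise ValueError("paired samples must have the same length")
    diffs = [a - b for a, b in zip(after, before)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)   # standard error of the mean difference
    return statistics.fmean(diffs) / se, n - 1


# Made-up cholesterol readings for five subjects, before and after treatment.
before = [220, 240, 210, 250, 230]
after = [210, 225, 205, 235, 220]
t, df = paired_t_statistic(before, after)
print(f"t = {t:.3f} with {df} degrees of freedom")
```

Working with within-subject differences removes between-subject variability from the standard error, which is the variance reduction that pairing is meant to provide.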

|

|

T-test

Student's ''t''-test is a statistical test used to test whether the difference between the response of two groups is statistically significant or not. It is any statistical hypothesis test in which the test statistic follows a Student's ''t''-distribution under the null hypothesis. It is most commonly applied when the test statistic would follow a normal distribution if the value of a scaling term in the test statistic were known (typically, the scaling term is unknown and is therefore a nuisance parameter). When the scaling term is estimated based on the data, the test statistic, under certain conditions, follows a Student's ''t''-distribution. The ''t''-test's most common application is to test whether the means of two populations are significantly different. In many cases, a ''Z''-test will yield very similar results to a ''t''-test because the latter converges to the fo ... |
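The two-population case can be sketched as follows. This version assumes two independent samples with equal population variances (the classic pooled-variance form); the function name `pooled_t_statistic` and the data are hypothetical.

```python
import math
import statistics


def pooled_t_statistic(x, y):
    """Return (t, degrees_of_freedom) for a two-sample t-test of
    H0: the two population means are equal.

    Assumes independent samples and equal population variances.
    """
    nx, ny = len(x), len(y)
    df = nx + ny - 2
    # The unknown common variance (the nuisance scaling term) is
    # estimated from the data by pooling both sample variances.
    pooled_var = (
        (nx - 1) * statistics.variance(x) + (ny - 1) * statistics.variance(y)
    ) / df
    se = math.sqrt(pooled_var * (1.0 / nx + 1.0 / ny))
    return (statistics.fmean(x) - statistics.fmean(y)) / se, df


# Made-up responses for two groups.
group_a = [5.1, 4.9, 5.6, 5.8, 6.0]
group_b = [4.1, 4.5, 4.0, 4.8, 4.6]
t, df = pooled_t_statistic(group_a, group_b)
print(f"t = {t:.3f} with {df} degrees of freedom")
```

The resulting statistic is compared with a critical value from the ''t''-distribution with `df` degrees of freedom, which is where the dependence on sample size enters.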