
Quasiconvexity (Calculus Of Variations)

In mathematics, a quasiconvex function is a real-valued function defined on an interval or on a convex subset of a real vector space such that the inverse image of any set of the form $(-\infty, a)$ is a convex set. For a function of a single variable, along any stretch of the curve the highest point is one of the endpoints. The negative of a quasiconvex function is said to be quasiconcave. Quasiconvexity is a more general property than convexity: all convex functions are quasiconvex, but not all quasiconvex functions are convex. ''Univariate'' unimodal functions are quasiconvex or quasiconcave; however, this is not necessarily the case for functions with multiple arguments. For example, the 2-dimensional Rosenbrock function is unimodal but not quasiconvex, and functions with star-convex sublevel sets can be unimodal without being quasiconvex. Def ...
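The definition above is equivalent to the segment condition $f(\lambda x + (1-\lambda) y) \leq \max(f(x), f(y))$ for $\lambda \in [0, 1]$. The following is a minimal sketch of a sampled check of that condition for one-variable functions; the helper name `is_quasiconvex_on_grid` and the test functions are illustrative assumptions, and a sampled check can only refute quasiconvexity, not prove it.

```python
import itertools
import math


def is_quasiconvex_on_grid(f, points, lambdas=(0.25, 0.5, 0.75), tol=1e-12):
    """Return False if any sampled segment violates f(z) <= max(f(x), f(y)).

    Hypothetical helper: passing this check is only evidence of
    quasiconvexity, because finitely many points are tested.
    """
    for x, y in itertools.combinations(points, 2):
        bound = max(f(x), f(y))
        for lam in lambdas:
            z = lam * x + (1 - lam) * y  # point on the segment between x and y
            if f(z) > bound + tol:
                return False
    return True


def sqrt_abs(x):
    """sqrt(|x|): quasiconvex (sublevel sets are intervals) but not convex."""
    return math.sqrt(abs(x))


def neg_square(x):
    """-x^2: quasiconcave, and not quasiconvex on an interval around 0."""
    return -x * x


grid = [i / 10 for i in range(-30, 31)]
sqrt_abs_passes = is_quasiconvex_on_grid(sqrt_abs, grid)
neg_square_passes = is_quasiconvex_on_grid(neg_square, grid)
```

Here `sqrt_abs` passes the sampled check while `neg_square` fails it, because the midpoint of the segment from -1 to 1 has a larger value than both endpoints.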

Monotonicity Example2

In mathematics, a monotonic function (or monotone function) is a function between ordered sets that preserves or reverses the given order. This concept first arose in calculus, and was later generalized to the more abstract setting of order theory. In calculus and analysis: a function $f$ defined on a subset of the real numbers with real values is called ''monotonic'' if it is either entirely non-decreasing or entirely non-increasing. That is, as per Fig. 1, a function that increases monotonically does not have to increase strictly; it simply must not decrease. A function is termed ''monotonically increasing'' (also ''increasing'' or ''non-decreasing'') if for all $x$ and $y$ such that $x \leq y$ one has $f(x) \leq f(y)$, so $f$ preserves the order (see Figure 1). Likewise, a function is called ''monotonically decreasing'' (also ''decreasing'' or ''non-increasing'') if, whenever $x \leq y$, then $f(x) \geq f(y)$, so ...
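The two order conditions above can be sketched for sampled values as follows. This is a minimal illustration, assuming the function has been evaluated at increasing arguments; the helper names are hypothetical.

```python
def is_non_decreasing(values):
    """True if each value is <= the next one (order is preserved)."""
    return all(a <= b for a, b in zip(values, values[1:]))


def is_non_increasing(values):
    """True if each value is >= the next one (order is reversed)."""
    return all(a >= b for a, b in zip(values, values[1:]))


def is_monotonic(values):
    """Monotonic means entirely non-decreasing or entirely non-increasing."""
    return is_non_decreasing(values) or is_non_increasing(values)


xs = [-2, -1, 0, 1, 2]
cubes = [x ** 3 for x in xs]      # increasing in x
squares = [x ** 2 for x in xs]    # decreases, then increases
flat_then_up = [1, 1, 2, 2, 3]    # never decreases, so still non-decreasing
```

Note that `flat_then_up` counts as monotonically increasing under this definition even though it has flat stretches, whereas `squares` is not monotonic on the sampled range.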

Lagrange Duality

In mathematical optimization theory, duality or the duality principle is the principle that optimization problems may be viewed from either of two perspectives, the primal problem or the dual problem. If the primal is a minimization problem then the dual is a maximization problem (and vice versa). The objective value of any feasible solution to the primal (minimization) problem is at least as large as the objective value of any feasible solution to the dual (maximization) problem. Therefore, the optimal value of the primal is an upper bound on the optimal value of the dual, and the optimal value of the dual is a lower bound on the optimal value of the primal. This fact is called weak duality. In general, the optimal values of the primal and dual problems need not be equal. Their difference is called the duality gap. For convex optimization problems, the duality gap is zero under a constraint qualification condition. This fact is called strong duality. Dual problem: Usually the term "dual problem" refers to the ''Lagrangian dual problem'' but other ...
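Weak duality and a zero duality gap can be illustrated on a small problem chosen here for illustration (it is not from the source): minimize $x^2$ subject to $x \geq 1$. With the Lagrangian $L(x, \lambda) = x^2 + \lambda(1 - x)$ and $\lambda \geq 0$, minimizing over $x$ gives the dual function $g(\lambda) = \lambda - \lambda^2/4$. The sketch below compares sampled primal and dual values; all names are assumptions.

```python
def primal_objective(x):
    """Objective of the illustrative primal problem: minimize x^2."""
    return x * x


def dual_function(lam):
    """g(lam) = min over x of x^2 + lam * (1 - x), attained at x = lam / 2."""
    return lam - lam * lam / 4.0


# Feasible primal points satisfy x >= 1; dual points satisfy lam >= 0.
feasible_xs = [1.0 + 0.1 * k for k in range(21)]
multipliers = [0.25 * k for k in range(17)]

# Weak duality: every dual value is a lower bound on every primal value.
weak_duality_holds = all(
    dual_function(lam) <= primal_objective(x)
    for lam in multipliers
    for x in feasible_xs
)

best_primal = min(primal_objective(x) for x in feasible_xs)
best_dual = max(dual_function(lam) for lam in multipliers)
duality_gap = best_primal - best_dual
```

In this convex example the best sampled primal value (at $x = 1$) and the best sampled dual value (at $\lambda = 2$) coincide, so the computed gap is zero, which is consistent with strong duality.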

Mathematics Of Operations Research

''Mathematics of Operations Research'' is a quarterly peer-reviewed scientific journal established in February 1976. It focuses on areas of mathematics relevant to the field of operations research such as continuous optimization, discrete optimization, game theory, machine learning, simulation methodology, and stochastic models. The journal is published by INFORMS (Institute for Operations Research and the Management Sciences). The journal has a 2017 impact factor of 1.078. History: The journal was established in 1976. The founding editor-in-chief was Arthur F. Veinott Jr. (Stanford University). He served until 1980, when the position was taken over by Stephen M. Robinson, who held the position until 1986. Erhan Cinlar served from 1987 to 1992, and was followed by Jan Karel Lenstra (1993-1998). Next were Gérard Cornuéjols (1999-2003) and Nimrod Megiddo (2004-2009). Finally came Uri Rothblum (2009-2012), Jim Dai (2012-2018), and the current editor-in-chief Katya Scheinberg (20 ...

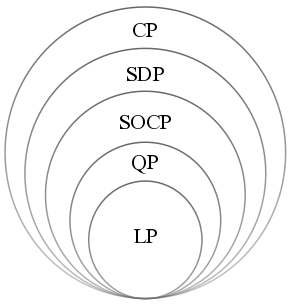

Convex Programming

Convex optimization is a subfield of mathematical optimization that studies the problem of minimizing convex functions over convex sets (or, equivalently, maximizing concave functions over convex sets). Many classes of convex optimization problems admit polynomial-time algorithms, whereas mathematical optimization is in general NP-hard. Definition (abstract form): A convex optimization problem is defined by two ingredients:

* The ''objective function'', which is a real-valued convex function of ''n'' variables, $f : \mathcal{D} \subseteq \mathbb{R}^n \to \mathbb{R}$;
* The ''feasible set'', which is a convex subset $C \subseteq \mathbb{R}^n$.

The goal of the problem is to find some $\mathbf{x}^\ast \in C$ attaining $\inf \{ f(\mathbf{x}) : \mathbf{x} \in C \}$. In general, there are three options regarding the existence of a solution:

* If such a point ''x''* exists, it is referred to as an ''optimal point'' or ''solution''; the set of all optimal points is called the ''optimal set''; and the problem is called ''solvable''.
* If f is unbo ...
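As a concrete instance of the abstract form, the sketch below minimizes a convex quadratic over a box (a convex feasible set) using projected gradient descent. The method, the objective $(x-3)^2 + (y+1)^2$, the box $[0, 2] \times [0, 2]$, and the step size are all assumptions chosen for illustration; the source does not prescribe an algorithm.

```python
def gradient(point):
    """Gradient of the illustrative objective (x - 3)^2 + (y + 1)^2."""
    x, y = point
    return (2.0 * (x - 3.0), 2.0 * (y + 1.0))


def project_onto_box(point, lower=0.0, upper=2.0):
    """Euclidean projection onto the box [lower, upper]^2 (componentwise clip)."""
    return tuple(min(max(coord, lower), upper) for coord in point)


def projected_gradient_descent(start, step=0.25, iterations=50):
    """Alternate a gradient step with a projection back onto the feasible set."""
    point = project_onto_box(start)
    for _ in range(iterations):
        grad = gradient(point)
        moved = tuple(c - step * g for c, g in zip(point, grad))
        point = project_onto_box(moved)
    return point


solution = projected_gradient_descent((0.0, 0.0))
```

The unconstrained minimizer $(3, -1)$ lies outside the box, so the iterates settle at the closest feasible corner, $(2, 0)$.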

Iterative Method

In computational mathematics, an iterative method is a mathematical procedure that uses an initial value to generate a sequence of improving approximate solutions for a class of problems, in which the ''i''-th approximation (called an "iterate") is derived from the previous ones. A specific implementation with termination criteria for a given iterative method like gradient descent, hill climbing, Newton's method, or quasi-Newton methods like BFGS, is an algorithm of an iterative method or a method of successive approximation. An iterative method is called ''convergent'' if the corresponding sequence converges for given initial approximations. A mathematically rigorous convergence analysis of an iterative method is usually performed; however, heuristic-based iterative methods are also common. In contrast, direct methods attempt to solve the problem by a finit ...
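The idea of an initial value, a sequence of iterates, and a termination criterion can be sketched with Newton's method for root finding. The example problem ($x^2 - 2 = 0$), the tolerance, and the iteration cap are illustrative assumptions.

```python
def newton(f, f_prime, x0, tol=1e-12, max_iter=50):
    """Newton's method: each iterate is derived from the previous one.

    Terminates when the update is smaller than `tol` or after `max_iter`
    iterations. Returns the final iterate and the number of updates applied.
    """
    x = x0
    for iteration in range(1, max_iter + 1):
        step = f(x) / f_prime(x)
        x = x - step
        if abs(step) < tol:  # termination criterion on the update size
            return x, iteration
    return x, max_iter


# Illustrative problem: the positive root of x^2 - 2, starting from x0 = 1.
root, iterations_used = newton(lambda x: x * x - 2.0, lambda x: 2.0 * x, 1.0)
```

Starting from `x0 = 1.0`, the iterates approach the square root of 2 and the loop stops well before the iteration cap.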

Nonlinear Programming

In mathematics, nonlinear programming (NLP) is the process of solving an optimization problem where some of the constraints are not linear equalities or the objective function is not a linear function. An optimization problem is one of calculation of the extrema (maxima, minima or stationary points) of an objective function over a set of unknown real variables and conditional to the satisfaction of a system of equalities and inequalities, collectively termed constraints. It is the sub-field of mathematical optimization that deals with problems that are not linear. Definition and discussion: Let ''n'', ''m'', and ''p'' be positive integers. Let ''X'' be a subset of $\mathbb{R}^n$ (usually a box-constrained one), and let $f$, $g_i$, and $h_j$ be real-valued functions on ''X'' for each $i$ in $\{1, \dots, m\}$ and each $j$ in $\{1, \dots, p\}$, with at least one of $f$, $g_i$, and $h_j$ being nonlinear. A nonlinear programming problem is an optimization problem of the form : \begin \text & f(x) \\ \text ...

Economics

Economics is a behavioral science that studies the production, distribution, and consumption of goods and services. Economics focuses on the behaviour and interactions of economic agents and how economies work. Microeconomics analyses what is viewed as basic elements within economies, including individual agents and markets, their interactions, and the outcomes of interactions. Individual agents may include, for example, households, firms, buyers, and sellers. Macroeconomics analyses economies as systems where production, distribution, consumption, savings, and investment expenditure interact; and the factors of production affecting them, such as: labour, capital, land, and enterprise, inflation, economic growth, and public policies that impact gloss ...

Game Theory

Game theory is the study of mathematical models of strategic interactions. It has applications in many fields of social science, and is used extensively in economics, logic, systems science and computer science. Initially, game theory addressed two-person zero-sum games, in which a participant's gains or losses are exactly balanced by the losses and gains of the other participant. In the 1950s, it was extended to the study of non zero-sum games, and was eventually applied to a wide range of behavioral relations. It is now an umbrella term for the science of rational decision making in humans, animals, and computers. Modern game theory began with the idea of mixed-strategy equilibria in two-person zero-sum games and its proof by John von Neumann. Von Neumann's original proof used the Brouwer fixed-point theorem on continuous mappings into compact convex sets, which became a standard method in game theory and mathematical economics. His paper was f ...

Mathematical Optimization

Mathematical optimization (alternatively spelled ''optimisation'') or mathematical programming is the selection of a best element, with regard to some criteria, from some set of available alternatives. It is generally divided into two subfields: discrete optimization and continuous optimization. Optimization problems arise in all quantitative disciplines from computer science and engineering to operations research and economics, and the development of solution methods has been of interest in mathematics for centuries. In the more general approach, an optimization problem consists of maximizing or minimizing a real function by systematically choosing input values from within an allowed set and computing the value of the function. The generalization of optimization theory and techniques to other formulations constitutes a large area of applied mathematics. Optimization problems Opti ...

Mathematical Analysis

Analysis is the branch of mathematics dealing with continuous functions, limits, and related theories, such as differentiation, integration, measure, infinite sequences, series, and analytic functions. These theories are usually studied in the context of real and complex numbers and functions. Analysis evolved from calculus, which involves the elementary concepts and techniques of analysis. Analysis may be distinguished from geometry; however, it can be applied to any space of mathematical objects that has a definition of nearness (a topological space) or specific distances between objects (a metric space). History (ancient): Mathematical analysis formally developed in the 17th century during the Scientific Revolution, but many of its ideas can be traced back to earlier mathematicians. Early results in analysis were ...