
Primary Server

A server farm or server cluster is a collection of computer servers, usually maintained by an organization to supply server functionality far beyond the capability of a single machine. Server farms often consist of thousands of computers, which require a large amount of power to run and to keep cool. At the optimum performance level, a server farm has enormous financial and environmental costs. Server farms often include backup servers that can take over the functions of primary servers that may fail. Server farms are typically collocated with the network switches and/or routers that enable communication between different parts of the cluster and the cluster's users. Server "farmers" typically mount computers, routers, power supplies and related electronics on 19-inch racks in a server room or data center. Applications: Server farms are commonly used for cluster computing. Many modern supercomputers comprise giant server farms of high-speed processors connected by either Ethernet or custom interc ...

3D Rendering

3D rendering is the 3D computer graphics process of converting 3D models into 2D images on a computer. 3D renders may include photorealistic effects or non-photorealistic styles. Rendering methods: Rendering is the final process of creating the actual 2D image or animation from the prepared scene. This can be compared to taking a photo or filming the scene after the setup is finished in real life. Several different, and often specialized, rendering methods have been developed. These range from the distinctly non-realistic wireframe rendering through polygon-based rendering, to more advanced techniques such as scanline rendering, ray tracing, or radiosity. Rendering may take from fractions of a second to days for a single image/frame. In general, different methods are better suited for either photorealistic rendering or real-time rendering. Real-time: Rendering for interactive media, such as games and simulations, is calculated and displayed in real time, at rates ...

Transaction Processing Performance Council

The Transaction Processing Performance Council (TPC) is a non-profit organization founded in 1988 to define benchmarks for transaction processing and databases, and to publish objective, verifiable TPC performance data to the industry. TPC benchmarks are used in evaluating the performance of computer systems, and TPC publishes the results. Conference Series: In 2009 the TPC initiated an International Technology Conference Series on Performance Evaluation and Benchmarking (TPCTC), a forum for industry experts and researchers to discuss and develop techniques for evaluation, measurement and characterization of modern application systems. The conference series was founded in 2009 by Raghunath Nambiar of Cisco and Meikel Poess.
* TPCTC 2009, in conjunction with VLDB 2009 on August 24, 2009 in Lyon, France.
* TPCTC 2010, in conjunction with VLDB 2010 on September 17, 2010 in Singapore.
* TPCTC 2011, in conjunction with VLDB 2011 on August 29, 2011 in Seattle, Washington ...

SPECpower

SPECpower_ssj2008 is the first industry-standard benchmark that evaluates the power and performance characteristics of volume server class computers. It is available from the Standard Performance Evaluation Corporation (SPEC). SPECpower_ssj2008 is SPEC's first attempt at defining server power measurement standards. It was introduced in December 2007. Several SPEC member companies contributed to the development of the new power-performance measurement standard, including AMD, Dell, Fujitsu Siemens Computers, HP, Intel, IBM, and Sun Microsystems. See also: Average CPU power, EEMBC EnergyBench, IT energy management, Performance per watt.

EEMBC

EEMBC, the Embedded Microprocessor Benchmark Consortium, is a non-profit, member-funded organization formed in 1997, focused on the creation of standard benchmarks for the hardware and software used in embedded systems. The goal of its members is to make EEMBC benchmarks an industry standard for evaluating the capabilities of embedded processors, compilers, and the associated embedded system implementations, according to objective, clearly defined, application-based criteria. EEMBC members may contribute to the development of benchmarks, vote at various stages before public distribution, and accelerate testing of their platforms through early access to benchmarks and associated specifications. Most popular benchmark working groups, in chronological order of development:
* AutoBench 1.1 - single-threaded code for automotive, industrial, and general-purpose applications
* Networking - single-threaded code associated with moving packets in networking applications.
* MultiBench - multi ...

Platform Virtualization

In computing, virtualization (abbreviated v12n) is a series of technologies that allows physical computing resources to be divided into a series of virtual machines, operating systems, processes or containers. Virtualization began in the 1960s with IBM CP/CMS. The control program CP provided each user with a simulated stand-alone System/360 computer. In hardware virtualization, the host machine is the machine that is used by the virtualization and the guest machine is the virtual machine. The words host and guest are used to distinguish the software that runs on the physical machine from the software that runs on the virtual machine. The software or firmware that creates a virtual machine on the host hardware is called a hypervisor or virtual machine monitor. Hardware virtualization is not the same as hardware emulation. Hardware-assisted virtualization facilitates building a virtual machine monitor and allows guest OSes to ...

Wake-on-LAN

Wake-on-LAN (WoL) is an Ethernet or Token Ring computer networking standard that allows a computer to be turned on or awakened from sleep mode by a network message. The message is usually sent to the target computer by a program executed on a device connected to the same local area network (LAN). It is also possible to initiate the message from another network by using subnet directed broadcasts or a WoL gateway service. It is based upon AMD's Magic Packet Technology, which was co-developed by AMD and Hewlett-Packard, following its proposal as a standard in 1995. The standard saw quick adoption thereafter through IBM, Intel and others. If the computer being awakened is communicating via Wi-Fi, a supplementary standard called Wake on Wireless LAN (WoWLAN) must be employed. The WoL and WoWLAN standards are often supplemented by vendors to provide protocol-transparent on-demand services, for example in the Apple Bonjour wake-on-demand (Sleep Proxy) feature. History: The basi ...
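The excerpt above does not spell out the layout of the network message, so the following is only a minimal sketch under stated assumptions: it uses the commonly documented magic packet layout (six 0xFF bytes followed by sixteen repetitions of the target's 6-byte MAC address) and sends it as a UDP broadcast. The function names, the broadcast address, and port 9 are illustrative choices, not part of the source text.

```python
import socket


def build_magic_packet(mac: str) -> bytes:
    """Build a magic packet for the given MAC address.

    Assumed layout: 6 bytes of 0xFF, then the MAC address repeated 16 times.
    """
    cleaned = mac.replace(":", "").replace("-", "")
    if len(cleaned) != 12:
        raise ValueError(f"expected a 6-byte MAC address, got {mac!r}")
    mac_bytes = bytes.fromhex(cleaned)  # raises ValueError on non-hex input
    return b"\xff" * 6 + mac_bytes * 16


def send_magic_packet(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Broadcast the packet on the local network (address and port are assumptions)."""
    packet = build_magic_packet(mac)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(packet, (broadcast, port))
```

Calling `send_magic_packet("00:11:22:33:44:55")` with a placeholder MAC address would broadcast one packet; whether the target actually wakes depends on its firmware and network adapter settings.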

Clock Rate

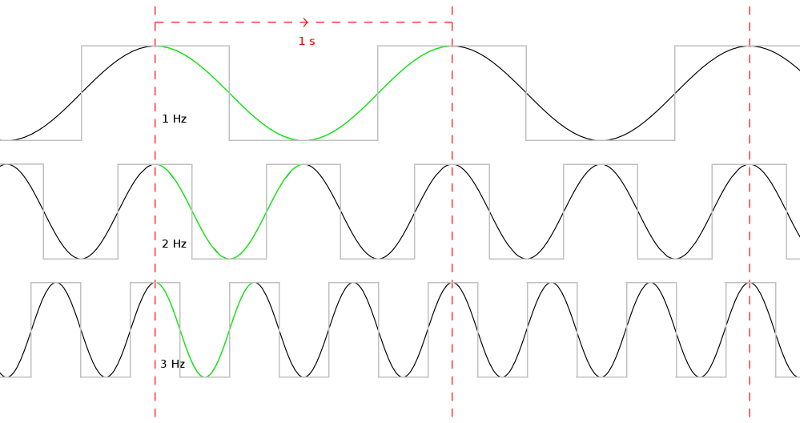

Clock rate or clock speed in computing typically refers to the frequency at which the clock generator of a processor can generate pulses used to synchronize the operations of its components. It is used as an indicator of the processor's speed. Clock rate is measured in the SI unit of frequency, the hertz (Hz). The clock rate of the first generation of computers was measured in hertz or kilohertz (kHz), while the first personal computers from the 1970s through the 1980s had clock rates measured in megahertz (MHz). In the 21st century the speed of modern CPUs is commonly advertised in gigahertz (GHz). This metric is most useful when comparing processors within the same family, holding constant other features that may affect performance. Determining factors (Binning): Manufacturers of modern processors typically charge higher prices for processors that operate at higher clock rates, a practice called binning. For a given CPU, the clock rates are determined at the end of the manufact ...

High Availability

High availability (HA) is a characteristic of a system that aims to ensure an agreed level of operational performance, usually uptime, for a higher than normal period. There is now more dependence on these systems as a result of modernization. For example, to carry out their regular daily tasks, hospitals and data centers need their systems to be highly available. Availability refers to the ability of the user to access a service or system, whether to submit new work, update or modify existing work, or retrieve the results of previous work. If a user cannot access the system, it is considered unavailable from the user's perspective. The term downtime is generally used to describe periods when a system is unavailable. Resilience: High availability is a property of network resilience, the ability to "provide and maintain an acceptable level of service in the face of faults and challenges to normal operation." Threats and challenges for services can range from ...
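As an illustration of how an agreed uptime level relates to downtime, the sketch below computes availability as the fraction of time a system was up and converts a target availability into a downtime budget. The function names and the 8,760-hour year (leap years ignored) are assumptions made for this example, not definitions taken from the excerpt.

```python
HOURS_PER_YEAR = 365 * 24  # 8760 hours; leap years ignored (assumption)


def availability(uptime_hours: float, downtime_hours: float) -> float:
    """Fraction of the observed period during which the system was available."""
    total = uptime_hours + downtime_hours
    if total <= 0:
        raise ValueError("observed period must be positive")
    return uptime_hours / total


def allowed_downtime_hours(target: float, period_hours: float = HOURS_PER_YEAR) -> float:
    """Downtime budget for a target availability given as a fraction (e.g. 0.999)."""
    if not 0.0 <= target <= 1.0:
        raise ValueError("target must be between 0 and 1")
    return (1.0 - target) * period_hours
```

Under these assumptions, a target of 0.999 leaves a budget of roughly 8.76 hours of downtime per year.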

Performance Per Watt

In computing, performance per watt is a measure of the energy efficiency of a particular computer architecture or computer hardware. Literally, it measures the rate of computation that can be delivered by a computer for every watt of power consumed. This rate is typically measured by performance on the LINPACK benchmark when trying to compare between computing systems: an example using this is the Green500 list of supercomputers. Performance per watt has been suggested to be a more sustainable measure of computing than Moore's law. System designers building parallel computers often pick CPUs based on their performance per watt of power, because the cost of powering the CPU outweighs the cost of the CPU itself. Spaceflight computers have hard limits on the maximum power available and also have hard requirements on minimum real-time performance. A ratio of processing speed to required electrical power is more useful than raw processing speed. D. J. Shirley; and M. K. McLellan ...
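The ratio described above can be sketched in a few lines. The function names and the two example systems are hypothetical, and the numbers are made up purely to show the arithmetic; they are not benchmark results.

```python
def performance_per_watt(flops: float, watts: float) -> float:
    """Rate of computation delivered per watt of power consumed."""
    if watts <= 0:
        raise ValueError("power draw must be positive")
    return flops / watts


def rank_by_efficiency(systems: dict[str, tuple[float, float]]) -> list[str]:
    """Return system names ordered from most to least efficient.

    `systems` maps a name to a (flops, watts) pair.
    """
    return sorted(
        systems,
        key=lambda name: performance_per_watt(*systems[name]),
        reverse=True,
    )


# Hypothetical systems: (sustained FLOPS, power draw in watts).
example_systems = {
    "system_a": (2.0e15, 1.0e6),
    "system_b": (1.5e15, 0.5e6),
}
```

Here `system_a` is faster in absolute terms, but `rank_by_efficiency(example_systems)` places `system_b` first because it delivers more computation per watt.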

Computer Cooling

Computer cooling is required to remove the waste heat produced by computer components, to keep components within permissible operating temperature limits. Components that are susceptible to temporary malfunction or permanent failure if overheated include integrated circuits such as central processing units (CPUs), chipsets, graphics cards, hard disk drives, and solid state drives (SSDs). Components are often designed to generate as little heat as possible, and computers and operating systems may be designed to reduce power consumption and consequent heating according to workload, but more heat may still be produced than can be removed without attention to cooling. Use of heatsinks cooled by airflow reduces the temperature rise produced by a given amount of heat. Attention to patterns of airflow can prevent the development of hotspots. Computer fans are widely used along with heatsink fans to reduce temperature by actively exhausting hot air. There are also other cooling te ...

Failover

Failover is switching to a redundant or standby computer server, system, hardware component or network upon the failure or abnormal termination of the previously active application, server, system, hardware component, or network in a computer network. Failover and switchover are essentially the same operation, except that failover is automatic and usually operates without warning, while switchover requires human intervention. History: The term "failover", although probably in use by engineers much earlier, can be found in a 1962 declassified NASA report. The term "switchover" can be found in the 1950s when describing '"Hot" and "Cold" Standby Systems', with the current meaning of immediate switchover to a running system (hot) and delayed switchover to a system that needs starting (cold). A conference proceedings from 1957 describes computer systems with both Emergency Switchover (i.e. failover) and Scheduled Failover (for maintenance). Failover: Systems designers usually pro ...
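The distinction between automatic failover and operator-initiated switchover can be illustrated with a toy model. Everything here (the `Server` and `Cluster` classes, the `healthy` flag standing in for a real health check) is a hypothetical simplification for illustration, not a description of any particular product.

```python
from dataclasses import dataclass


@dataclass
class Server:
    name: str
    healthy: bool = True  # stand-in for a real health check (assumption)


class Cluster:
    """Toy active/standby pair."""

    def __init__(self, primary: Server, standby: Server) -> None:
        self.active = primary
        self.standby = standby

    def _swap(self) -> None:
        self.active, self.standby = self.standby, self.active

    def check_and_failover(self) -> bool:
        """Automatic path: swap roles only if the active server has failed.

        Returns True when a failover took place.
        """
        if not self.active.healthy and self.standby.healthy:
            self._swap()
            return True
        return False

    def switchover(self) -> None:
        """Manual path: an operator swaps roles, e.g. for maintenance."""
        if not self.standby.healthy:
            raise RuntimeError("standby is not healthy; refusing switchover")
        self._swap()
```

In this model a monitoring loop would call `check_and_failover()` periodically, while `switchover()` would be invoked only by a human operator.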