
Pattern Theory

Pattern theory, formulated by Ulf Grenander, is a mathematical formalism for describing knowledge of the world as patterns. It differs from other approaches to artificial intelligence in that it does not begin by prescribing algorithms and machinery to recognize and classify patterns; rather, it prescribes a vocabulary for articulating and recasting pattern concepts in precise language. Broad in its mathematical coverage, pattern theory spans algebra and statistics, as well as local topological and global entropic properties. In addition to the new algebraic vocabulary, its statistical approach is novel in its aims to:

* Identify the hidden variables of a data set using real-world data rather than artificial stimuli, which was previously commonplace.
* Formulate prior distributions for hidden variables and models for the observed variables that form the vertices of a Gibbs-like graph.
* Study the randomness and variability of these graphs.
* Create the basic classes of stochastic ...

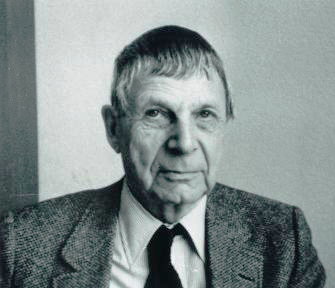

Ulf Grenander

Ulf Grenander (23 July 1923 – 12 May 2016) was a Swedish statistician and professor of applied mathematics at Brown University. His early research was in probability theory, stochastic processes, time series analysis, and statistical theory (particularly the order-constrained estimation of cumulative distribution functions using his sieve estimator). In later decades, Grenander contributed to computational statistics, image processing, pattern recognition, and artificial intelligence. He coined the term pattern theory to distinguish it from pattern recognition.

Honors. In 1966 Grenander was elected to the Royal Academy of Sciences of Sweden, and in 1996 to the US National Academy of Sciences. In 1998 he was an Invited Speaker at the International Congress of Mathematicians in Berlin. He received honorary doctorates from the University of Chicago in 1994 and from the Royal Institute of Technology in Stockholm, Sweden, in 2005.

Education. Grenander earned his undergraduate degree ...

Fields Medal

The Fields Medal is a prize awarded to two, three, or four mathematicians under 40 years of age at the International Congress of Mathematicians, held by the International Mathematical Union (IMU) every four years. The name of the award honours the Canadian mathematician John Charles Fields. The Fields Medal is regarded as one of the highest honours a mathematician can receive and has been described as the Nobel Prize of Mathematics, although there are several major differences, including frequency of award, number of awards, age limits, monetary value, and award criteria. According to the annual Academic Excellence Survey by the Academic Ranking of World Universities (ARWU), the Fields Medal is consistently regarded as the top award in the field of mathematics worldwide, and in another reputation survey conducted by IREG Observatory on Academic Ranking and Excellence, IR ...

Spatial Statistics ...

Spatial statistics is a field of applied statistics dealing with spatial data. It involves stochastic processes (random fields, point processes), sampling, smoothing and interpolation, regional (areal unit) and lattice (gridded) data, point patterns, as well as image analysis and stereology.

See also:
* Geostatistics
* Modifiable areal unit problem
* Spatial analysis
* Spatial econometrics
* Statistical geography
* Spatial epidemiology
* Spatial network
* Statistical shape analysis

Lattice Theory

A lattice is an abstract structure studied in the mathematical subdisciplines of order theory and abstract algebra. It consists of a partially ordered set in which every pair of elements has a unique supremum (also called a least upper bound or join) and a unique infimum (also called a greatest lower bound or meet). An example is given by the power set of a set, partially ordered by inclusion, for which the supremum is the union and the infimum is the intersection. Another example is given by the natural numbers, partially ordered by divisibility, for which the supremum is the least common multiple and the infimum is the greatest common divisor. Lattices can also be characterized as algebraic structures satisfying certain axiomatic identities. Since the two definitions are equivalent, lattice theory draws on both order theory and universal algebra. Semilattices include lattices, which in turn include Heyting and Boolean algebras. These lattice-like structur ...
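Both examples can be checked mechanically on a finite piece of each order. The Python sketch below tests, by brute force, that the supplied join is the least upper bound and the supplied meet the greatest lower bound of every pair. The helper names (`is_lattice`, `power_set`, `lcm`) are illustrative, the natural numbers are restricted to the divisors of 60 so the check is finite, and the check assumes the given set is closed under join and meet.

```python
from itertools import combinations
from math import gcd
from typing import Callable, FrozenSet, Iterable, List, TypeVar

T = TypeVar("T")


def lcm(a: int, b: int) -> int:
    """Least common multiple of two positive integers."""
    return a * b // gcd(a, b)


def is_lattice(elements: List[T], leq: Callable[[T, T], bool],
               join: Callable[[T, T], T], meet: Callable[[T, T], T]) -> bool:
    """Brute-force check that join/meet give the least upper / greatest lower bound
    of every pair. Assumes `elements` is closed under join and meet."""
    for a in elements:
        for b in elements:
            j, m = join(a, b), meet(a, b)
            # j must be an upper bound and m a lower bound of {a, b}
            if not (leq(a, j) and leq(b, j) and leq(m, a) and leq(m, b)):
                return False
            for c in elements:
                # j must lie below every other upper bound c ...
                if leq(a, c) and leq(b, c) and not leq(j, c):
                    return False
                # ... and m must lie above every other lower bound c
                if leq(c, a) and leq(c, b) and not leq(c, m):
                    return False
    return True


def power_set(items: Iterable[int]) -> List[FrozenSet[int]]:
    """All subsets of items, as frozensets."""
    pool = list(items)
    return [frozenset(c) for r in range(len(pool) + 1) for c in combinations(pool, r)]


# Power set of {1, 2, 3} ordered by inclusion: join = union, meet = intersection.
subsets = power_set({1, 2, 3})
print(is_lattice(subsets, lambda a, b: a <= b, lambda a, b: a | b, lambda a, b: a & b))  # prints True

# Divisors of 60 ordered by divisibility (a <= b iff a divides b): join = lcm, meet = gcd.
divisors = [d for d in range(1, 61) if 60 % d == 0]
print(is_lattice(divisors, lambda a, b: b % a == 0, lcm, gcd))  # prints True
```

Swapping `lcm` for `max` in the second call makes the check fail: for 4 and 6, the larger number 6 is not a multiple of 4, so it is not an upper bound in the divisibility order.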

Mathematical Induction

Mathematical induction is a method of mathematical proof for showing that a statement P(n) is true for every natural number n, that is, that the infinitely many cases P(0), P(1), P(2), P(3), ... all hold. This is done by first proving a simple case, then showing that if the claim is assumed true for a given case, the next case is also true. Informal metaphors such as falling dominoes or climbing a ladder help to explain this technique. A proof by induction consists of two cases. The first, the base case, proves the statement for n = 0 without assuming any knowledge of other cases. The second, the induction step, proves that if the statement holds for any given case n = k, then it must also hold for the next case n = k + 1. Together, these two steps establish that the statement holds for every natural number n. The base case does not necessarily begin with n = 0; it often begins with n = 1, and possibly with any fixed natural number n = N, establishing the trut ...
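Stated symbolically, the base case and the induction step combine into the induction schema below. The worked instance that follows is an illustration chosen here, not taken from the source text: it uses the schema to prove the familiar formula for the sum 0 + 1 + ... + n, with the induction hypothesis P(k) substituted in the first equality of the step.

```latex
\[
\Big( P(0) \,\land\, \forall k \in \mathbb{N}\,\big(P(k) \Rightarrow P(k+1)\big) \Big)
\;\Longrightarrow\; \forall n \in \mathbb{N}\; P(n)
\]
% Worked instance with P(n): \sum_{i=0}^{n} i = n(n+1)/2
\begin{align*}
\text{Base case } (n = 0):\quad & \sum_{i=0}^{0} i = 0 = \frac{0 \cdot 1}{2} \\
\text{Induction step:}\quad & \sum_{i=0}^{k+1} i = \frac{k(k+1)}{2} + (k+1) = \frac{(k+1)(k+2)}{2}
\end{align*}
```

Starting the base case at a fixed N instead of 0 only changes P(0) to P(N) and restricts the conclusion to n ≥ N.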

Image Analysis

Image analysis or imagery analysis is the extraction of meaningful information from images, mainly from digital images by means of digital image processing techniques. Image analysis tasks can be as simple as reading bar-coded tags or as sophisticated as identifying a person from their face. Computers are indispensable for the analysis of large amounts of data, for tasks that require complex computation, and for the extraction of quantitative information. On the other hand, the human visual cortex is an excellent image analysis apparatus, especially for extracting higher-level information, and for many applications (including medicine, security, and remote sensing) human analysts still cannot be replaced by computers. For this reason, many important image analysis tools, such as edge detectors and neural networks, are inspired by models of human visual perception.

Digital. Digital image analy ...
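Edge detection is one of the tools named above. As a rough illustration, assuming a grayscale image stored as a list of rows of numbers, the Sobel operator (one classic edge detector among many, chosen here for illustration rather than taken from the source) estimates horizontal and vertical intensity gradients and marks pixels whose gradient magnitude exceeds a threshold. The function name `sobel_edges` and its `threshold` parameter are hypothetical.

```python
from typing import List

Image = List[List[float]]

# 3x3 Sobel kernels for the horizontal (x) and vertical (y) derivative.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_edges(img: Image, threshold: float) -> List[List[int]]:
    """Binary edge map: 1 where the Sobel gradient magnitude exceeds `threshold`.

    Border pixels stay 0 because the 3x3 window does not fit there. The kernels are
    applied without flipping (correlation); this only flips the sign of gx and gy,
    which does not change the magnitude.
    """
    h, w = len(img), len(img[0])
    edges = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = gy = 0.0
            for dy in range(3):
                for dx in range(3):
                    pixel = img[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * pixel
                    gy += SOBEL_Y[dy][dx] * pixel
            if (gx * gx + gy * gy) ** 0.5 > threshold:
                edges[y][x] = 1
    return edges


# A vertical step from dark (0) to bright (10) between columns 2 and 3.
step = [[0, 0, 0, 10, 10, 10] for _ in range(5)]
for row in sobel_edges(step, threshold=1.0):
    print(row)
```

On this 5 x 6 step image the three interior rows come out as [0, 0, 1, 1, 0, 0]: the two columns straddling the step are marked, while flat regions and the border stay 0. Practical systems typically add smoothing and non-maximum suppression on top of this raw gradient test.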

Grammar Induction

Grammar induction (or grammatical inference) is the process in machine learning of learning a formal grammar (usually as a collection of rewrite rules or productions, or alternatively as a finite-state machine or automaton of some kind) from a set of observations, thus constructing a model that accounts for the characteristics of the observed objects. More generally, grammatical inference is the branch of machine learning where the instance space consists of discrete combinatorial objects such as strings, trees and graphs.

Grammar classes. Grammatical inference has often focused on the problem of learning finite-state machines of various types (see the article Induction of regular languages for details on these approaches), since efficient algorithms for this problem have existed since the 1980s. Since the beginning of the 21st century, these approaches have been extended to the problem of inference of context-free grammars and richer formalisms, such as mult ...
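Many algorithms for learning finite-state machines start from the observed strings themselves. A minimal sketch, assuming only positive examples: build a prefix tree acceptor, a tree-shaped deterministic automaton that accepts exactly the sample strings. State-merging methods such as RPNI then generalize by merging states of this tree (guided by negative examples); that merging step is omitted here, and the function names are illustrative.

```python
from typing import Dict, Iterable, Set, Tuple

Transitions = Dict[Tuple[int, str], int]  # (state, symbol) -> next state


def prefix_tree_acceptor(samples: Iterable[str]) -> Tuple[Transitions, Set[int]]:
    """Build a tree-shaped DFA accepting exactly the sample strings.

    State 0 is the start state (the empty prefix); every other state stands for one
    distinct prefix of some sample, numbered in order of first appearance.
    """
    transitions: Transitions = {}
    accepting: Set[int] = set()
    next_state = 1
    for word in samples:
        state = 0
        for symbol in word:
            key = (state, symbol)
            if key not in transitions:
                transitions[key] = next_state
                next_state += 1
            state = transitions[key]
        accepting.add(state)  # the full sample string is accepted
    return transitions, accepting


def accepts(transitions: Transitions, accepting: Set[int], word: str) -> bool:
    """Run the DFA; a missing transition means rejection."""
    state = 0
    for symbol in word:
        if (state, symbol) not in transitions:
            return False
        state = transitions[(state, symbol)]
    return state in accepting


delta, final = prefix_tree_acceptor(["ab", "abb", "b"])
print(accepts(delta, final, "abb"), accepts(delta, final, "a"))  # prints True False
```

The tree only memorizes the samples; all generalization comes from the later merging of states, which is where the various state-merging algorithms differ.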

Formal Concept Analysis

In information science, formal concept analysis (FCA) is a principled way of deriving a concept hierarchy or formal ontology from a collection of objects and their properties. Each concept in the hierarchy represents the objects sharing some set of properties, and each sub-concept in the hierarchy represents a subset of the objects (as well as a superset of the properties) in the concepts above it. The term was introduced by Rudolf Wille in 1981 and builds on the mathematical theory of lattices and ordered sets developed by Garrett Birkhoff and others in the 1930s. Formal concept analysis finds practical application in fields including data mining, text mining, machine learning, knowledge management, the semantic web, software development, chemistry and biology.

Overview and history. The original motivation of formal concept analysis was the search for real-world meaning of mathematical order theory. One such possibility of a very general nature is that data tables ...
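The derivation of concepts from a table of objects and properties can be sketched by brute force on a tiny context; the animals and attributes below are made up for illustration. For each set of attributes B, the objects having all of B form an extent, and the attributes shared by all of those objects form the matching intent; each such (extent, intent) pair is a formal concept. This enumeration is exponential in the number of attributes, so it only suits toy examples; dedicated algorithms such as Ganter's NextClosure are used in practice.

```python
from itertools import combinations
from typing import Dict, FrozenSet, Set, Tuple

# Hypothetical toy context: each object maps to the set of attributes it has.
Context = Dict[str, Set[str]]
Concept = Tuple[FrozenSet[str], FrozenSet[str]]  # (extent, intent)


def extent_of(ctx: Context, attrs: FrozenSet[str]) -> FrozenSet[str]:
    """Objects that have every attribute in attrs."""
    return frozenset(obj for obj, has in ctx.items() if attrs <= has)


def intent_of(ctx: Context, objs: FrozenSet[str], all_attrs: FrozenSet[str]) -> FrozenSet[str]:
    """Attributes shared by every object in objs (all attributes if objs is empty)."""
    shared = set(all_attrs)
    for obj in objs:
        shared &= ctx[obj]
    return frozenset(shared)


def formal_concepts(ctx: Context) -> Set[Concept]:
    """Brute-force enumeration: close every attribute subset B to (B', B'')."""
    all_attrs = frozenset().union(*ctx.values())
    concepts: Set[Concept] = set()
    ordered = sorted(all_attrs)
    for r in range(len(ordered) + 1):
        for combo in combinations(ordered, r):
            ext = extent_of(ctx, frozenset(combo))
            concepts.add((ext, intent_of(ctx, ext, all_attrs)))
    return concepts


context: Context = {
    "frog": {"aquatic", "legs"},
    "fish": {"aquatic"},
    "dog": {"legs", "fur"},
}
for ext, itt in sorted(formal_concepts(context), key=lambda c: -len(c[0])):
    print(sorted(ext), sorted(itt))
```

For this three-animal context the sketch finds six concepts, from ({dog, fish, frog}, {}) at the top of the hierarchy to ({}, {aquatic, fur, legs}) at the bottom; moving down the hierarchy, extents shrink while intents grow, as described above.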

Computational Anatomy

Computational anatomy is an interdisciplinary field of biology focused on the quantitative investigation and modelling of the variability of anatomical shapes. It involves the development and application of mathematical, statistical and data-analytical methods for the modelling and simulation of biological structures. The field is broadly defined and includes foundations in anatomy, applied and pure mathematics, machine learning, computational mechanics, computational science, biological imaging, neuroscience, physics, probability, and statistics; it also has strong connections with fluid mechanics and geometric mechanics. Additionally, it complements newer interdisciplinary fields such as bioinformatics and neuroinformatics in the sense that its interpretation uses metadata derived from the original sensor imaging modalities (magnetic resonance imaging being one example). It focuses on the anatomical structures being imaged rather than on the medical imaging devices. It is similar i ...

Algebraic Statistics

Algebraic statistics is the use of algebra to advance statistics. Algebra has been useful for experimental design, parameter estimation, and hypothesis testing. Traditionally, algebraic statistics has been associated with the design of experiments and multivariate analysis (especially time series). In recent years, the term "algebraic statistics" has sometimes been restricted to the use of algebraic geometry and commutative algebra in statistics.

The tradition of algebraic statistics. In the past, statisticians have used algebra to advance research in statistics. Some work in algebraic statistics led to the development of new topics in algebra and combinatorics, such as association schemes.

Design of experiments. For example, Ronald A. Fisher, Henry B. Mann, and Rosemary A. Bailey applied Abelian groups to the design of experiments. Experimental designs were also studied with affine geometry over finite fields and then with the introduction of association sche ...
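For one concrete flavour of how group structure can enter experimental design: the addition table of the cyclic (Abelian) group Z_n always forms a Latin square, in which each of n symbols (for example, n treatments) appears exactly once in every row and every column. The sketch below builds that table and checks the Latin property; it is an illustrative example with hypothetical function names, not a reconstruction of the specific constructions by Fisher, Mann, or Bailey.

```python
from typing import List


def cyclic_latin_square(n: int) -> List[List[int]]:
    """Addition table of the cyclic group Z_n: entry (i, j) is (i + j) mod n."""
    return [[(i + j) % n for j in range(n)] for i in range(n)]


def is_latin_square(square: List[List[int]]) -> bool:
    """True if each symbol 0..n-1 appears exactly once in every row and column."""
    n = len(square)
    symbols = set(range(n))
    # Each row must have n entries covering all n symbols (hence each exactly once).
    if not all(len(row) == n and set(row) == symbols for row in square):
        return False
    # Each column must likewise cover all n symbols.
    return all({square[i][j] for i in range(n)} == symbols for j in range(n))


for row in cyclic_latin_square(4):
    print(row)
print(is_latin_square(cyclic_latin_square(4)))  # prints True
```

For n = 3 the table is [0, 1, 2], [1, 2, 0], [2, 0, 1]. The Latin property follows from the group axioms: in any finite group, each row and column of the operation table is a permutation of the elements.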

Abductive Reasoning

Abductive reasoning (also called abduction, abductive inference, or retroduction) is a form of logical inference that seeks the simplest and most likely conclusion from a set of observations. It was formulated and advanced by the American philosopher and logician Charles Sanders Peirce beginning in the latter half of the 19th century. Unlike deductive reasoning, abductive reasoning yields a plausible conclusion but does not definitively verify it. Abductive conclusions do not eliminate uncertainty or doubt, which is expressed in terms such as "best available" or "most likely". While inductive reasoning draws general conclusions that apply to many situations, abductive conclusions are confined to the particular observations in question. In the 1990s, as computing power grew, the fields of law, computer science, and artificial intelligence research ...

David Mumford

David Bryant Mumford (born 11 June 1937) is an American mathematician known for his work in algebraic geometry and then for research into vision and pattern theory. He won the Fields Medal and was a MacArthur Fellow. In 2010 he was awarded the National Medal of Science. He is currently a University Professor Emeritus in the Division of Applied Mathematics at Brown University.

Early life and education. Mumford was born in Worth, West Sussex, in England, to an English father and an American mother. His father William started an experimental school in Tanzania and worked for the then newly created United Nations. Mumford attended Phillips Exeter Academy, where he received a Westinghouse Science Talent Search prize for his relay-based computer project. He then went to Harvard University, where he became a student of Oscar Zariski. At Harvard, he became a Putnam Fellow in 1955 and 1956. He completed his PhD in 1961, with a thesis entitled Existence of the moduli scheme for curve ...