
Owen's T Function

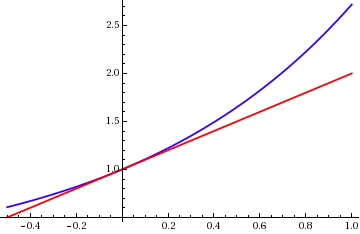

In mathematics, Owen's T function ''T''(''h'', ''a''), named after statistician Donald Bruce Owen, is defined by

: T(h,a)=\frac{1}{2\pi}\int_{0}^{a} \frac{e^{-\frac{1}{2} h^2 (1+x^2)}}{1+x^2}\, dx \quad \left(-\infty < h, a < +\infty\right).

The function was first introduced by Owen in 1956.

Applications

The function ''T''(''h'', ''a'') gives the probability of the event (''X'' > ''h'' and 0 < ''Y'' < ''aX''), where ''X'' and ''Y'' are independent standard normal random variables. This function can be used to calculate bivariate normal distribution probabilities.
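The defining integral can be evaluated numerically. The following is a minimal sketch, not a production algorithm: it applies composite Simpson's rule to the integrand over [0, ''a'']. The names `owens_t` and `std_normal_cdf` and the default subinterval count are illustrative assumptions, not part of any library.

```python
import math


def owens_t(h: float, a: float, n: int = 2000) -> float:
    """Approximate Owen's T(h, a) with composite Simpson's rule on [0, a].

    Illustrative sketch only; n is an assumed subinterval count.
    """
    if n % 2:
        n += 1  # Simpson's rule needs an even number of subintervals

    def integrand(x: float) -> float:
        return math.exp(-0.5 * h * h * (1.0 + x * x)) / (1.0 + x * x)

    step = a / n  # negative when a < 0, which yields T(h, -a) = -T(h, a)
    total = integrand(0.0) + integrand(a)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * integrand(i * step)
    return total * step / 3.0 / (2.0 * math.pi)


def std_normal_cdf(x: float) -> float:
    """Standard normal cumulative distribution function via erf."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


if __name__ == "__main__":
    # At h = 0 the integrand reduces to 1 / (1 + x^2), so T(0, a) = arctan(a) / (2*pi).
    print(owens_t(0.0, 1.0), math.atan(1.0) / (2.0 * math.pi))
    # At a = 1 the value can be compared with Phi(h) * (1 - Phi(h)) / 2.
    print(owens_t(0.7, 1.0), 0.5 * std_normal_cdf(0.7) * (1.0 - std_normal_cdf(0.7)))
```

Simpson's rule is chosen here only for brevity; for large |''a''| or tight accuracy requirements a dedicated algorithm would be more appropriate.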

Statistician

A statistician is a person who works with theoretical or applied statistics. The profession exists in both the private and public sectors. It is common to combine statistical knowledge with expertise in other subjects, and statisticians may work as employees or as statistical consultants.

Nature of the work

According to the United States Bureau of Labor Statistics, as of 2014, 26,970 jobs were classified as ''statistician'' in the United States. Of these people, approximately 30 percent worked for governments (federal, state, or local). As of October 2021, the median pay for statisticians in the United States was $92,270. Additionally, a substantial number of people use statistics and data analysis in their work but have job titles other than ''statistician'', such as actuaries, applied mathematicians, economists, data scientists, data analysts (predictive analytics), financial analysts, psychometricians, sociologists, epidemiologists, and quantitative psychologists.

Statistically Independent

Independence is a fundamental notion in probability theory, as in statistics and the theory of stochastic processes. Two events are independent, statistically independent, or stochastically independent if, informally speaking, the occurrence of one does not affect the probability of occurrence of the other or, equivalently, does not affect the odds. Similarly, two random variables are independent if the realization of one does not affect the probability distribution of the other.

When dealing with collections of more than two events, two notions of independence need to be distinguished. The events are called pairwise independent if any two events in the collection are independent of each other, while mutual independence (or collective independence) of events means, informally speaking, that each event is independent of any combination of other events in the collection. A similar notion exists for collections of random variables. Mutual independence implies pairwise independence.
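The difference between pairwise and mutual independence can be checked on a small finite sample space. The sketch below uses a standard textbook construction (two fair coin flips and the event that they differ); the event names `A`, `B`, `C` and the helper `p` are illustrative assumptions.

```python
from fractions import Fraction
from itertools import product

# Sample space: two fair coin flips, each of the 4 outcomes has probability 1/4.
outcomes = list(product([0, 1], repeat=2))
prob = Fraction(1, len(outcomes))


def p(event):
    """Probability of an event given as a predicate on outcomes."""
    return sum(prob for w in outcomes if event(w))


def A(w):
    return w[0] == 1  # first flip is heads


def B(w):
    return w[1] == 1  # second flip is heads


def C(w):
    return w[0] != w[1]  # the two flips differ


# Pairwise independence: P(E and F) = P(E) * P(F) for every pair of events.
pairwise = all(
    p(lambda w: e(w) and f(w)) == p(e) * p(f)
    for e, f in [(A, B), (A, C), (B, C)]
)

# Mutual independence additionally requires the product rule for all three events.
mutual = pairwise and p(lambda w: A(w) and B(w) and C(w)) == p(A) * p(B) * p(C)

print(pairwise, mutual)
```

Exact rational arithmetic with `Fraction` avoids floating-point comparisons when testing the product rule.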

Standard Normal Distribution

In statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is

: f(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}

The parameter \mu is the mean or expectation of the distribution (and also its median and mode), while the parameter \sigma is its standard deviation. The variance of the distribution is \sigma^2. A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate.

Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable, whose distribution converges to a normal distribution as the number of samples increases.

Random Variable

A random variable (also called random quantity, aleatory variable, or stochastic variable) is a mathematical formalization of a quantity or object which depends on random events. It is a mapping or a function from possible outcomes (e.g., the possible upper sides of a flipped coin such as heads H and tails T) in a sample space (e.g., the set \{H, T\}) to a measurable space, often the real numbers (e.g., \{-1, 1\}, in which 1 corresponds to H and -1 corresponds to T).

Informally, randomness typically represents some fundamental element of chance, such as in the roll of a die; it may also represent uncertainty, such as measurement error. However, the interpretation of probability is philosophically complicated, and even in specific cases is not always straightforward. The purely mathematical analysis of random variables is independent of such interpretational difficulties, and can be based upon a rigorous axiomatic setup. In the formal mathematical language of measure theory, a random variable is defined as a measurable function from a probability space to a measurable space.

Bivariate Normal Distribution

In probability theory and statistics, the multivariate normal distribution, multivariate Gaussian distribution, or joint normal distribution is a generalization of the one-dimensional (univariate) normal distribution to higher dimensions. One definition is that a random vector is said to be ''k''-variate normally distributed if every linear combination of its ''k'' components has a univariate normal distribution. Its importance derives mainly from the multivariate central limit theorem. The multivariate normal distribution is often used to describe, at least approximately, any set of (possibly) correlated real-valued random variables each of which clusters around a mean value.

Definitions

Notation and parameterization

The multivariate normal distribution of a ''k''-dimensional random vector \mathbf{X} = (X_1,\ldots,X_k)^{\mathrm{T}} can be written in the following notation:

: \mathbf{X}\ \sim\ \mathcal{N}(\boldsymbol\mu,\, \boldsymbol\Sigma),

or, to make it explicitly known that ''X'' is ''k''-dimensional,

: \mathbf{X}\ \sim\ \mathcal{N}_k(\boldsymbol\mu,\, \boldsymbol\Sigma).

Multivariate Normal Distribution

In probability theory and statistics, the multivariate normal distribution, multivariate Gaussian distribution, or joint normal distribution is a generalization of the one-dimensional (univariate) normal distribution to higher dimensions. One definition is that a random vector is said to be ''k''-variate normally distributed if every linear combination of its ''k'' components has a univariate normal distribution. Its importance derives mainly from the multivariate central limit theorem. The multivariate normal distribution is often used to describe, at least approximately, any set of (possibly) correlated real-valued random variables each of which clusters around a mean value.

Definitions

Notation and parameterization

The multivariate normal distribution of a ''k''-dimensional random vector \mathbf{X} = (X_1,\ldots,X_k)^{\mathrm{T}} can be written in the following notation:

: \mathbf{X}\ \sim\ \mathcal{N}(\boldsymbol\mu,\, \boldsymbol\Sigma),

or, to make it explicitly known that ''X'' is ''k''-dimensional,

: \mathbf{X}\ \sim\ \mathcal{N}_k(\boldsymbol\mu,\, \boldsymbol\Sigma).

Error Bound

The approximation error in a data value is the discrepancy between an exact value and some ''approximation'' to it. This error can be expressed as an absolute error (the numerical amount of the discrepancy) or as a relative error (the absolute error divided by the data value). An approximation error can occur because of computing machine precision or measurement error (e.g. the length of a piece of paper is 4.53 cm but the ruler only allows you to estimate it to the nearest 0.1 cm, so you measure it as 4.5 cm). In the mathematical field of numerical analysis, the numerical stability of an algorithm indicates how the error is propagated by the algorithm.

Formal definition

One commonly distinguishes between the relative error and the absolute error. Given some value ''v'' and its approximation ''v''<sub>approx</sub>, the absolute error is

: \epsilon = |v - v_\text{approx}|,

where the vertical bars denote the absolute value. If v \ne 0, the relative error is

: \eta = \frac{\epsilon}{|v|} = \left| \frac{v - v_\text{approx}}{v} \right|.
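The two error measures can be illustrated with the ruler example above (exact length 4.53 cm, measured as 4.5 cm). This is a small sketch; the function names `absolute_error` and `relative_error` are illustrative assumptions.

```python
def absolute_error(v: float, v_approx: float) -> float:
    """Absolute error |v - v_approx|."""
    return abs(v - v_approx)


def relative_error(v: float, v_approx: float) -> float:
    """Relative error |v - v_approx| / |v|, defined only for v != 0."""
    if v == 0:
        raise ValueError("relative error is undefined for v == 0")
    return abs(v - v_approx) / abs(v)


if __name__ == "__main__":
    exact, measured = 4.53, 4.5  # lengths in cm, from the ruler example
    print(absolute_error(exact, measured))  # about 0.03 cm
    print(relative_error(exact, measured))  # about 0.0066, i.e. roughly 0.66 %
```

The results are themselves subject to floating-point rounding, so the printed values agree with 0.03 and 0.03/4.53 only up to machine precision.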

List Of Integrals Of Gaussian Functions

In the expressions in this article,

: \phi(x) = \frac{1}{\sqrt{2\pi}} e^{-\frac{1}{2} x^2}

is the standard normal probability density function,

: \Phi(x) = \int_{-\infty}^x \phi(t) \, dt = \frac{1}{2}\left(1 + \operatorname{erf}\left(\frac{x}{\sqrt{2}}\right)\right)

is the corresponding cumulative distribution function (where erf is the error function), and

: T(h,a) = \phi(h)\int_0^a \frac{\phi(hx)}{1+x^2} \, dx

is Owen's T function. Owen has an extensive list of Gaussian-type integrals; only a subset is given below.

Indefinite integrals

: \int \phi(x) \, dx = \Phi(x) + C
: \int x \phi(x) \, dx = -\phi(x) + C
: \int x^2 \phi(x) \, dx = \Phi(x) - x\phi(x) + C
: \int x^{2k+1} \phi(x) \, dx = -\phi(x) \sum_{j=0}^k \frac{(2k)!!}{(2j)!!} x^{2j} + C
: \int x^{2k+2} \phi(x) \, dx = -\phi(x)\sum_{j=0}^k\frac{(2k+1)!!}{(2j+1)!!} x^{2j+1} + (2k+1)!!\,\Phi(x) + C

(One source lists the integral of x^{2k+1} \phi(x) without the minus sign, which is an error; see the calculation by WolframAlpha.)

In these integrals, ''n''!! is the double factorial: the product of all the integers from 1 up to ''n'' that have the same parity as ''n''.
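A closed-form antiderivative of this kind can be sanity-checked numerically. The sketch below compares a composite Simpson's rule estimate of the integral of x^2 \phi(x) over an arbitrarily chosen interval with the difference of the closed form \Phi(x) - x\phi(x) at the endpoints. The helper names and the interval [-1, 2] are illustrative assumptions.

```python
import math


def phi(x: float) -> float:
    """Standard normal probability density function."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def Phi(x: float) -> float:
    """Standard normal cumulative distribution function via erf."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def simpson(f, lo: float, hi: float, n: int = 1000) -> float:
    """Composite Simpson's rule with n (even) subintervals."""
    if n % 2:
        n += 1
    step = (hi - lo) / n
    total = f(lo) + f(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(lo + i * step)
    return total * step / 3.0


def antiderivative_x2_phi(x: float) -> float:
    """Closed form for the integral of x^2 * phi(x): Phi(x) - x * phi(x)."""
    return Phi(x) - x * phi(x)


lo, hi = -1.0, 2.0  # arbitrary test interval
numeric = simpson(lambda x: x * x * phi(x), lo, hi)
closed = antiderivative_x2_phi(hi) - antiderivative_x2_phi(lo)
print(numeric, closed)
```

Agreement on one interval does not prove the identity, but a sign error such as a missing minus sign would show up immediately in a check like this.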

Gaussian Quadrature

In numerical analysis, a quadrature rule is an approximation of the definite integral of a function, usually stated as a weighted sum of function values at specified points within the domain of integration. (See numerical integration for more on quadrature rules.) An ''n''-point Gaussian quadrature rule, named after Carl Friedrich Gauss, is a quadrature rule constructed to yield an exact result for polynomials of degree 2''n'' − 1 or less by a suitable choice of the nodes ''x''<sub>''i''</sub> and weights ''w''<sub>''i''</sub> for ''i'' = 1, ..., ''n''. The modern formulation using orthogonal polynomials was developed by Carl Gustav Jacobi in 1826. The most common domain of integration for such a rule is taken as [−1, 1], so the rule is stated as

: \int_{-1}^1 f(x)\,dx \approx \sum_{i=1}^n w_i f(x_i),

which is exact for polynomials of degree 2''n'' − 1 or less. This exact rule is known as the Gauss-Legendre quadrature rule. The quadrature rule will only be an accurate approximation to the integral above if ''f''(''x'') is well-approximated by a polynomial of degree 2''n'' − 1 or less on [−1, 1].
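As a concrete instance, the 3-point Gauss-Legendre rule on [−1, 1] has nodes 0 and ±√(3/5) with weights 8/9 and 5/9, and is exact for polynomials up to degree 5. The sketch below hard-codes these values; the function names are illustrative assumptions, and the affine change of variables for a general interval is a standard step not spelled out in the text above.

```python
import math

# Nodes and weights of the 3-point Gauss-Legendre rule on [-1, 1].
NODES = (-math.sqrt(3.0 / 5.0), 0.0, math.sqrt(3.0 / 5.0))
WEIGHTS = (5.0 / 9.0, 8.0 / 9.0, 5.0 / 9.0)


def gauss_legendre_3(f) -> float:
    """Approximate the integral of f over [-1, 1] as sum of w_i * f(x_i)."""
    return sum(w * f(x) for w, x in zip(WEIGHTS, NODES))


def gauss_legendre_3_on(f, lo: float, hi: float) -> float:
    """Same rule on [lo, hi] via the affine map x = mid + half * t."""
    half = 0.5 * (hi - lo)
    mid = 0.5 * (hi + lo)
    return half * gauss_legendre_3(lambda t: f(mid + half * t))


if __name__ == "__main__":
    # Degree 4 <= 2n - 1 = 5: the rule reproduces the exact value 2/5.
    print(gauss_legendre_3(lambda x: x ** 4), 2.0 / 5.0)
    # Degree 6 > 5: the rule is no longer exact (exact value is 2/7).
    print(gauss_legendre_3(lambda x: x ** 6), 2.0 / 7.0)
    # A smooth non-polynomial integrand on [0, 1].
    print(gauss_legendre_3_on(math.exp, 0.0, 1.0), math.e - 1.0)
```

For more nodes, the nodes and weights are normally obtained from a numerical library rather than typed in by hand.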

Normal Distribution

In statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is

: f(x) = \frac{1}{\sigma\sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x-\mu}{\sigma}\right)^2}

The parameter \mu is the mean or expectation of the distribution (and also its median and mode), while the parameter \sigma is its standard deviation. The variance of the distribution is \sigma^2. A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate.

Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable, whose distribution converges to a normal distribution as the number of samples increases.

LGPL

The GNU Lesser General Public License (LGPL) is a free-software license published by the Free Software Foundation (FSF). The license allows developers and companies to use and integrate a software component released under the LGPL into their own (even proprietary) software without being required by the terms of a strong copyleft license to release the source code of their own components. However, any developer who modifies an LGPL-covered component is required to make their modified version available under the same LGPL license. For proprietary software, code under the LGPL is usually used in the form of a shared library, so that there is a clear separation between the proprietary and LGPL components. The LGPL is primarily used for software libraries, although it is also used by some stand-alone applications. The LGPL was developed as a compromise between the strong copyleft of the GNU General Public License (GPL) and more permissive licenses such as the BSD licenses and the MIT License.

Mathematica

Wolfram Mathematica is a software system with built-in libraries for several areas of technical computing that allow machine learning, statistics, symbolic computation, data manipulation, network analysis, time series analysis, NLP, optimization, plotting functions and various types of data, implementation of algorithms, creation of user interfaces, and interfacing with programs written in other programming languages. It was conceived by Stephen Wolfram, and is developed by Wolfram Research of Champaign, Illinois. The Wolfram Language is the programming language used in ''Mathematica''. Mathematica 1.0 was released on June 23, 1988, in Champaign, Illinois and Santa Clara, California.

Notebook interface

Wolfram Mathematica (called ''Mathematica'' by some of its users) is split into two parts: the kernel and the front end. The kernel interprets expressions (Wolfram Language code) and returns result expressions, which can then be displayed by the front end.