
Multiplicity (Statistical Mechanics)

In statistical mechanics, multiplicity (also called statistical weight) refers to the number of microstates corresponding to a particular macrostate of a thermodynamic system. Commonly denoted $\Omega$, it is related to the configuration entropy of an isolated system via Boltzmann's entropy formula $S = k_\mathrm{B} \ln \Omega$, where $S$ is the entropy and $k_\mathrm{B}$ is the Boltzmann constant.

Example: the two-state paramagnet. A simplified model of the two-state paramagnet provides an example of the process of calculating the multiplicity of a particular macrostate. This model consists of a system of microscopic dipoles, each of which may be either aligned or anti-aligned with an externally applied magnetic field $B$. Let $N_\uparrow$ represent the number of dipoles aligned with the external field and $N_\downarrow$ the number of anti-aligned dipoles. The energy of a single aligned dipole is $U_\uparrow = -\mu B$, while the energy of an anti-aligned dipole is $U_\downarrow = \mu B$; ...
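The snippet above breaks off before stating the multiplicity itself. For this model the standard result is the binomial coefficient $\Omega(N, N_\uparrow) = \frac{N!}{N_\uparrow!\,N_\downarrow!}$ with $N = N_\uparrow + N_\downarrow$, and summing the single-dipole energies gives a total energy $U = \mu B (N_\downarrow - N_\uparrow)$. The Python sketch below computes both, plus $S = k_\mathrm{B} \ln \Omega$; the function names are hypothetical, and $\mu$ and $B$ are treated as plain numbers in whatever consistent units the caller chooses.

```python
from math import comb, log

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact in the 2019 SI)


def multiplicity(n_total: int, n_up: int) -> int:
    """Omega(N, N_up) = N! / (N_up! * N_down!): ways to choose which dipoles are aligned."""
    if not 0 <= n_up <= n_total:
        return 0  # an impossible macrostate has no microstates
    return comb(n_total, n_up)


def total_energy(n_total: int, n_up: int, mu: float, b: float) -> float:
    """U = N_up * (-mu*B) + N_down * (+mu*B) = mu*B*(N_down - N_up)."""
    n_down = n_total - n_up
    return mu * b * (n_down - n_up)


def entropy(n_total: int, n_up: int) -> float:
    """S = k_B ln Omega in J/K (only meaningful when Omega >= 1)."""
    return K_B * log(multiplicity(n_total, n_up))


n = 4
for n_up in range(n + 1):
    print(n_up, multiplicity(n, n_up), total_energy(n, n_up, mu=1.0, b=1.0))
```

For $N = 4$ the macrostates $N_\uparrow = 0, \dots, 4$ have multiplicities 1, 4, 6, 4, 1, which together account for all $2^4 = 16$ microstates.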

Statistical Mechanics

In physics, statistical mechanics is a mathematical framework that applies statistical methods and probability theory to large assemblies of microscopic entities. Sometimes called statistical physics or statistical thermodynamics, it has applications to many problems in a wide variety of fields such as biology, neuroscience, computer science, information theory and sociology. Its main purpose is to clarify the properties of matter in aggregate in terms of the physical laws governing atomic motion. Statistical mechanics arose out of the development of classical thermodynamics, a field in which it successfully explained macroscopic physical properties, such as temperature, pressure, and heat capacity, in terms of microscopic ...

Microstate (Statistical Mechanics)

In statistical mechanics, a microstate is a specific configuration of a system that describes the precise positions and momenta of all the individual particles or components that make up the system. Each microstate has a certain probability of occurring during the course of the system's thermal fluctuations. In contrast, the macrostate of a system refers to its macroscopic properties, such as its temperature, pressure, volume and density. Treatments of statistical mechanics define a macrostate as follows: a particular set of values of the energy, the number of particles, and the volume of an isolated thermodynamic system specifies a particular macrostate of it. In this description, microstates appear as the different possible ways the system can achieve a particular macrostate. A macrostate is characterized by a probability distribution of possible states across a certain statistical ensemble of all microstates. This distribution describes the probability of finding the ...
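To make the microstate/macrostate distinction concrete, the Python sketch below enumerates every microstate of a toy system of $n$ distinguishable two-state components (an illustrative assumption, matching the paramagnet example) and groups them by macrostate, here labelled by the number of components in the "up" state. Assuming every microstate is equally likely, as for an isolated system in equilibrium, the probability of a macrostate is its share of all microstates. The function name is hypothetical.

```python
from collections import Counter
from itertools import product


def macrostate_counts(n: int) -> Counter:
    """Enumerate all 2**n microstates of n distinguishable two-state components
    and count how many belong to each macrostate (number of 'up' components)."""
    counts = Counter()
    for microstate in product(("up", "down"), repeat=n):
        counts[microstate.count("up")] += 1
    return counts


counts = macrostate_counts(3)
total = sum(counts.values())
for n_up in sorted(counts):
    # The probability below assumes all microstates are equally likely.
    print(f"N_up={n_up}: {counts[n_up]} microstates, probability {counts[n_up] / total:.3f}")
```

The counts grow as binomial coefficients, so for large $n$ the middle macrostates contain overwhelmingly more microstates than the extremes.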

Thermodynamic System

A thermodynamic system is a body of matter and/or radiation, separate from its surroundings, that can be studied using the laws of thermodynamics. According to their internal processes, thermodynamic systems are classed as passive, in which available energy is redistributed, or active, in which one type of energy is converted into another. Depending on its interaction with the environment, a thermodynamic system may be an isolated system, a closed system, or an open system. An isolated system does not exchange matter or energy with its surroundings. A closed system may exchange heat, experience forces, and exert forces, but does not exchange matter. An open system can interact with its surroundings by exchanging both matter and energy. The physical condition of a thermodynamic system at a given time is described by its ...
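The isolated/closed/open distinction depends only on what crosses the system boundary. A minimal Python sketch of that decision follows; the names are hypothetical, and it treats matter exchange without energy exchange as invalid, on the assumption that transferred matter always carries energy with it.

```python
from enum import Enum


class SystemKind(Enum):
    ISOLATED = "isolated"  # exchanges neither matter nor energy
    CLOSED = "closed"      # exchanges energy (heat, work) but not matter
    OPEN = "open"          # exchanges both matter and energy


def classify(exchanges_energy: bool, exchanges_matter: bool) -> SystemKind:
    """Classify a system by what crosses its boundary."""
    if exchanges_matter:
        if not exchanges_energy:
            # Assumption of this sketch: transferred matter always carries energy.
            raise ValueError("matter exchange implies energy exchange")
        return SystemKind.OPEN
    return SystemKind.CLOSED if exchanges_energy else SystemKind.ISOLATED


print(classify(exchanges_energy=True, exchanges_matter=False))
```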

Configuration Entropy

In statistical mechanics, configuration entropy is the portion of a system's entropy that is related to discrete representative positions of its constituent particles. For example, it may refer to the number of ways that atoms or molecules pack together in a mixture, alloy or glass, the number of conformations of a molecule, or the number of spin configurations in a magnet. The name might suggest that it relates to all possible configurations or particle positions of a system, excluding the entropy of their velocity or momentum, but that usage rarely occurs.

Calculation. If the configurations all have the same weighting, or energy, the configurational entropy is given by Boltzmann's entropy formula

$$S = k_\mathrm{B} \ln W,$$

where $k_\mathrm{B}$ is the Boltzmann constant and $W$ is the number of possible configurations. In a more general formulation, if a system can be in states $n$ with probabilities $P_n$, the configurational entropy of the system is given by

$$S = -k_\mathrm{B} \sum_{n=1}^{W} P_n \ln P_n$$

...
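The general formula reduces to Boltzmann's when all $W$ configurations are equally likely: with $P_n = 1/W$, $-k_\mathrm{B} \sum_n \frac{1}{W} \ln \frac{1}{W} = k_\mathrm{B} \ln W$. The Python sketch below checks this numerically and shows that a non-uniform distribution over the same $W$ states gives a smaller entropy. The function names and the example distributions are illustrative.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def boltzmann_entropy(w: int) -> float:
    """S = k_B ln W for W equally weighted configurations."""
    return K_B * math.log(w)


def gibbs_entropy(probs) -> float:
    """S = -k_B * sum_n P_n ln P_n; states with P_n = 0 contribute nothing."""
    if not math.isclose(sum(probs), 1.0):
        raise ValueError("probabilities must sum to 1")
    return -K_B * sum(p * math.log(p) for p in probs if p > 0)


W = 6
uniform = [1 / W] * W
skewed = [0.5, 0.1, 0.1, 0.1, 0.1, 0.1]
print(gibbs_entropy(uniform), boltzmann_entropy(W))  # equal up to rounding
print(gibbs_entropy(skewed))                          # smaller than k_B ln 6
```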

Boltzmann's Entropy Formula

In statistical mechanics, Boltzmann's entropy formula (also known as the Boltzmann–Planck equation, not to be confused with the more general Boltzmann equation, which is a partial differential equation) is a probability equation relating the entropy $S$, also written as $S_\mathrm{B}$, of an ideal gas to the multiplicity (commonly denoted as $\Omega$ or $W$), the number of real microstates corresponding to the gas's macrostate:

$$S = k_\mathrm{B} \ln \Omega,$$

where $k_\mathrm{B}$ is the Boltzmann constant (also written as simply $k$) and equal to $1.380649 \times 10^{-23}$ J/K, and $\ln$ is the natural logarithm function (log base $e$). In short, the Boltzmann formula shows the relationship between entropy and the number of ways the atoms or molecules of a certain kind of thermodynamic system can be arranged.

History. The equation was originally formulated by Ludwig Boltzmann between 1872 and 1875, but later put into its current form by Max Planck in about 1900. To quote Planck, "the logarithmic c ...
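Two consequences of the logarithm are easy to check numerically: multiplicities of independent subsystems multiply, so their entropies add; and $\Omega$ itself quickly exceeds floating-point range while $\ln \Omega$ does not. The Python sketch below illustrates both, computing $\ln \Omega$ for a hypothetical two-state system (as in the paramagnet example) through `math.lgamma` rather than forming the factorials directly. The subsystem multiplicities and the system size are made-up illustrative values.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def ln_omega_two_state(n: int, n_up: int) -> float:
    """ln[n! / (n_up! (n - n_up)!)] via log-gamma, so large n cannot overflow."""
    return math.lgamma(n + 1) - math.lgamma(n_up + 1) - math.lgamma(n - n_up + 1)


# Independent subsystems: Omega_total = Omega_a * Omega_b, so S_total = S_a + S_b.
omega_a, omega_b = 10, 35
s_joint = K_B * math.log(omega_a * omega_b)
s_sum = K_B * math.log(omega_a) + K_B * math.log(omega_b)
print(s_joint, s_sum)

# About a mole of two-state dipoles, half aligned: Omega has on the order of
# 10**23 decimal digits, but ln(Omega) is an ordinary float.
n = 6 * 10**23
s_half = K_B * ln_omega_two_state(n, n // 2)
print(f"S = {s_half:.3f} J/K")
```

In the second case $\ln \Omega \approx N \ln 2$, so the entropy comes out at roughly 5.74 J/K.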

Boltzmann Constant

The Boltzmann constant ($k_\mathrm{B}$ or $k$) is the proportionality factor that relates the average relative thermal energy of particles in a gas with the thermodynamic temperature of the gas. It occurs in the definitions of the kelvin (K) and the molar gas constant, in Planck's law of black-body radiation and Boltzmann's entropy formula, and is used in calculating thermal noise in resistors (Johnson–Nyquist noise). The Boltzmann constant has dimensions of energy divided by temperature, the same as entropy and heat capacity. It is named after the Austrian scientist Ludwig Boltzmann.

As part of the 2019 revision of the SI, the Boltzmann constant is one of the seven "defining constants" that have been given exact finite decimal values in SI units. They are used in various combinations to define the seven SI base units. The Boltzmann constant is defined to be exactly $1.380649 \times 10^{-23}$ joules per kelvin, with the effect of defining the SI unit kelvin ...
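Because both constants are exact in the 2019 SI, the molar gas constant follows as $R = N_\mathrm{A} k_\mathrm{B}$, where $N_\mathrm{A} = 6.02214076 \times 10^{23}\ \mathrm{mol^{-1}}$ is the Avogadro constant (not quoted in the entry above). The short Python sketch below computes $R$ and the characteristic thermal energy $k_\mathrm{B} T$, together with the mean translational kinetic energy $\tfrac{3}{2} k_\mathrm{B} T$ of a particle in an ideal monatomic gas. The function names are illustrative.

```python
K_B = 1.380649e-23   # Boltzmann constant, J/K (exact in the 2019 SI)
N_A = 6.02214076e23  # Avogadro constant, 1/mol (also exact in the 2019 SI)

R = N_A * K_B  # molar gas constant, J/(mol K)


def thermal_energy(temperature_k: float) -> float:
    """Characteristic thermal energy k_B * T, in joules."""
    return K_B * temperature_k


def mean_translational_ke(temperature_k: float) -> float:
    """Mean translational kinetic energy per particle of an ideal monatomic gas: (3/2) k_B T."""
    return 1.5 * K_B * temperature_k


print(f"R = {R:.9f} J/(mol K)")
print(f"k_B T at 300 K = {thermal_energy(300.0):.4e} J")
print(f"(3/2) k_B T at 300 K = {mean_translational_ke(300.0):.4e} J")
```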

Paramagnetism

Paramagnetism is a form of magnetism whereby some materials are weakly attracted by an externally applied magnetic field and form internal, induced magnetic fields in the direction of the applied magnetic field. In contrast with this behavior, diamagnetic materials are repelled by magnetic fields and form induced magnetic fields in the direction opposite to that of the applied magnetic field. Paramagnetic materials include most chemical elements and some compounds; they have a relative magnetic permeability slightly greater than 1 (i.e., a small positive magnetic susceptibility) and hence are attracted to magnetic fields. The magnetic moment induced by the applied field is linear in the field strength and rather weak. Detecting the effect typically requires a sensitive analytical balance, and modern measurements on paramagnetic materials are often conducted with a SQUID magnetometer. Paramagnetism is due to the presence of unpaired electrons in the material, so most atom ...
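The statement that the induced moment is linear in the field can be illustrated with the two-state paramagnet from the multiplicity entry. As an assumption not stated in this entry, take each dipole in thermal equilibrium at temperature $T$, with Boltzmann weights $e^{\pm x}$ where $x = \mu B / (k_\mathrm{B} T)$; the mean alignment per dipole is then $\tanh x$. The Python sketch computes it directly from those weights: for $x \ll 1$ the ratio to $x$ is close to 1 (the linear regime), while for large $x$ the alignment saturates near 1, a strong-field regime this entry does not discuss. The function name is hypothetical.

```python
import math


def mean_alignment(x: float) -> float:
    """Mean of +1 (aligned) and -1 (anti-aligned) weighted by Boltzmann factors
    exp(+x) and exp(-x), where x = mu*B / (k_B*T). Algebraically this is tanh(x)."""
    w_up, w_down = math.exp(x), math.exp(-x)
    return (w_up - w_down) / (w_up + w_down)


for x in (1e-3, 1e-2, 1e-1, 1.0, 5.0):
    # A ratio near 1 means the response is linear in B (weak-field regime).
    print(f"x={x:g}  alignment={mean_alignment(x):.6f}  ratio={mean_alignment(x) / x:.6f}")
```

Writing the weights out shows where the result comes from; for very large $x$, `math.tanh(x)` is the safer choice because `math.exp(x)` eventually overflows.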

Combinatorics

Combinatorics is an area of mathematics primarily concerned with counting, both as a means and as an end to obtaining results, and certain properties of finite structures. It is closely related to many other areas of mathematics and has many applications ranging from logic to statistical physics and from evolutionary biology to computer science. Combinatorics is well known for the breadth of the problems it tackles. Combinatorial problems arise in many areas of pure mathematics, notably in algebra, probability theory, topology, and geometry, as well as in its many application areas. Many combinatorial questions have historically been considered in isolation, giving an ad hoc solution to a problem arising in some mathematical context. In the later twentieth century, however, powerful and general theoretical methods were developed, making combinatorics into an independent branch of mathematics in its own right. One of the oldest and most accessible parts of combinatorics ...
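A basic example of counting used both as a means and as an end is the multinomial coefficient $N!/(n_1!\,n_2!\cdots)$, the number of distinct arrangements of $N$ items of which $n_1, n_2, \ldots$ are indistinguishable copies of each kind; it is the kind of count behind the mixture and alloy configurations mentioned under configuration entropy. The Python sketch below, with hypothetical function names, checks the closed form against brute-force enumeration for small cases.

```python
from itertools import permutations
from math import factorial, prod


def multinomial(counts) -> int:
    """N! / (n1! n2! ...), with N = sum(counts): distinct arrangements when
    items of the same kind are indistinguishable."""
    return factorial(sum(counts)) // prod(factorial(c) for c in counts)


def brute_force(counts) -> int:
    """Count distinct arrangements by listing every permutation (small cases only)."""
    items = [kind for kind, c in enumerate(counts) for _ in range(c)]
    return len(set(permutations(items)))


for counts in ([2, 1, 1], [3, 3], [2, 2, 2]):
    print(counts, multinomial(counts), brute_force(counts))
```

The brute-force version grows factorially, which is exactly why the closed form matters for realistic particle numbers.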

Internal Energy

The internal energy of a thermodynamic system is the energy of the system as a state function, measured as the quantity of energy necessary to bring the system from its standard internal state to its present internal state of interest, accounting for the gains and losses of energy due to changes in its internal state, including such quantities as magnetization. It excludes the kinetic energy of motion of the system as a whole and the potential energy of position of the system as a whole, with respect to its surroundings and external force fields. It includes the thermal energy, i.e., the constituent particles' kinetic energies of motion relative to the motion of the system as a whole. Without a thermodynamic process, the internal energy of an isolated system cannot change, as expressed in the law of conservation of energy, a foundation of the first law of thermodynamics. The notion was introduced to describe systems characterized by temperature variations, te ...
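The split between motion of the system as a whole and motion of the constituent particles relative to it can be computed directly. The Python sketch below assumes point particles moving in one dimension and ignores interparticle potential energy (which internal energy would also include); it separates the total kinetic energy into a center-of-mass part and a relative part, and only the relative part would count toward internal energy. The function name is hypothetical.

```python
def kinetic_energy_split(masses, velocities):
    """Return (total, bulk, relative) kinetic energy for point particles in 1-D.

    bulk     = energy of the system moving as a whole (center-of-mass motion)
    relative = energy of motion relative to the center of mass
    """
    m_total = sum(masses)
    v_cm = sum(m * v for m, v in zip(masses, velocities)) / m_total
    ke_total = sum(0.5 * m * v**2 for m, v in zip(masses, velocities))
    ke_bulk = 0.5 * m_total * v_cm**2
    ke_relative = sum(0.5 * m * (v - v_cm) ** 2 for m, v in zip(masses, velocities))
    return ke_total, ke_bulk, ke_relative


total, bulk, relative = kinetic_energy_split([1.0, 1.0], [3.0, 1.0])
print(total, bulk, relative)  # 5.0 4.0 1.0, and total = bulk + relative
```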

Heat Capacity

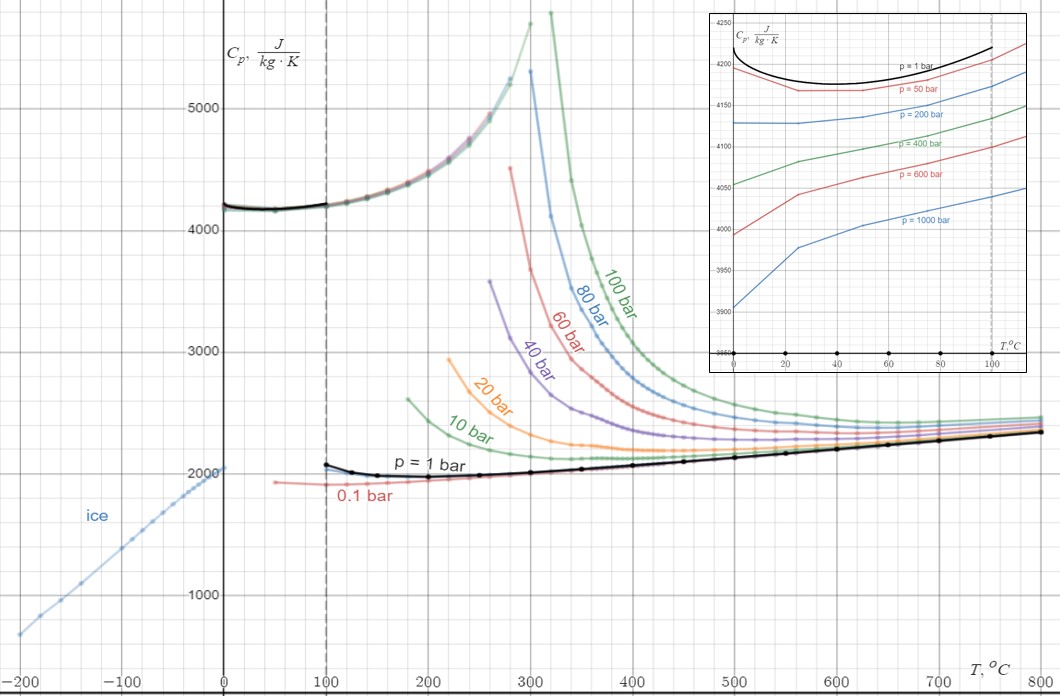

Heat capacity or thermal capacity is a physical property of matter, defined as the amount of heat to be supplied to an object to produce a unit change in its temperature. The SI unit of heat capacity is joule per kelvin (J/K). Heat capacity is an extensive property. The corresponding intensive property is the specific heat capacity, found by dividing the heat capacity of an object by its mass. Dividing the heat capacity by the amount of substance in moles yields its molar heat capacity. The volumetric heat capacity measures the heat capacity per volume. In architecture and civil engineering, the heat capacity of a building is often referred to as its thermal mass.

Definition: basic definition. The heat capacity of an object, denoted by $C$, is the limit

$$C = \lim_{\Delta T \to 0} \frac{\Delta Q}{\Delta T},$$

where $\Delta Q$ is the amount of heat that must be added to the object (of mass $M$) in order to raise its temperature by $\Delta T$. The value of this parameter usually varies considerably depending o ...
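The limit in the definition can be approximated by shrinking $\Delta T$. The Python sketch below uses a hypothetical heat-input curve $Q(T) = a(T - 300) + b(T - 300)^2$ with made-up coefficients (not measured data), for which the exact heat capacity is $a + 2b(T - 300)$; the forward difference $\Delta Q / \Delta T$ overshoots by $b\,\Delta T$ and converges as $\Delta T \to 0$. Dividing by a hypothetical mass and amount of substance then gives the specific and molar heat capacities.

```python
def heat_capacity_forward(q_of_t, t: float, dt: float) -> float:
    """Approximate C = lim_{dT -> 0} dQ/dT at temperature t with a finite step dt."""
    return (q_of_t(t + dt) - q_of_t(t)) / dt


# Hypothetical heat-input curve (illustrative numbers only):
# Q(T) = a*(T - 300) + b*(T - 300)**2 joules, so the exact C(T) = a + 2*b*(T - 300).
COEFF_A = 8000.0  # J/K
COEFF_B = 2.0     # J/K^2


def q_model(t: float) -> float:
    return COEFF_A * (t - 300.0) + COEFF_B * (t - 300.0) ** 2


T = 310.0  # K; the exact C here is 8000 + 2*2*10 = 8040 J/K
for dt in (10.0, 1.0, 0.1, 0.001):
    print(dt, heat_capacity_forward(q_model, T, dt))  # approaches 8040 as dt shrinks

C = heat_capacity_forward(q_model, T, 1e-4)
mass_kg, amount_mol = 2.0, 111.0  # hypothetical sample size
print("specific heat capacity:", C / mass_kg, "J/(kg K)")
print("molar heat capacity:", C / amount_mol, "J/(mol K)")
```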