|

Multimodal Learning

Multimodal learning is a type of deep learning that integrates and processes multiple types of data, referred to as modalities, such as text, audio, images, or video. This integration allows for a more holistic understanding of complex data, improving model performance in tasks like visual question answering, cross-modal retrieval, text-to-image generation, aesthetic ranking, and image captioning. Large multimodal models, such as Google Gemini and GPT-4o, have become increasingly popular since 2023, enabling increased versatility and a broader understanding of real-world phenomena. Motivation Data usually comes with different modalities which carry different information. For example, it is very common to caption an image to convey the information not presented in the image itself. Similarly, sometimes it is more straightforward to use an image to describe information which may not be obvious from text. As a result, if different words appear in similar images, then these words l ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Deep Learning

Deep learning is a subset of machine learning that focuses on utilizing multilayered neural networks to perform tasks such as classification, regression, and representation learning. The field takes inspiration from biological neuroscience and is centered around stacking artificial neurons into layers and "training" them to process data. The adjective "deep" refers to the use of multiple layers (ranging from three to several hundred or thousands) in the network. Methods used can be either supervised, semi-supervised or unsupervised. Some common deep learning network architectures include fully connected networks, deep belief networks, recurrent neural networks, convolutional neural networks, generative adversarial networks, transformers, and neural radiance fields. These architectures have been applied to fields including computer vision, speech recognition, natural language processing, machine translation, bioinformatics, drug design, medical image analysis, c ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Contrastive Language-Image Pre-training

Contrastive Language-Image Pre-training (CLIP) is a technique for training a pair of neural network models, one for image understanding and one for text understanding, using a contrastive objective. This method has enabled broad applications across multiple domains, including cross-modal retrieval, text-to-image generation, and aesthetic ranking. Algorithm The CLIP method trains a pair of models contrastively. One model takes in a piece of text as input and outputs a single vector representing its semantic content. The other model takes in an image and similarly outputs a single vector representing its visual content. The models are trained so that the vectors corresponding to semantically similar text-image pairs are close together in the shared vector space, while those corresponding to dissimilar pairs are far apart. To train a pair of CLIP models, one would start by preparing a large dataset of image-caption pairs. During training, the models are presented with batches ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Markov Chain Monte Carlo

In statistics, Markov chain Monte Carlo (MCMC) is a class of algorithms used to draw samples from a probability distribution. Given a probability distribution, one can construct a Markov chain whose elements' distribution approximates it – that is, the Markov chain's Discrete-time Markov chain#Stationary distributions, equilibrium distribution matches the target distribution. The more steps that are included, the more closely the distribution of the sample matches the actual desired distribution. Markov chain Monte Carlo methods are used to study probability distributions that are too complex or too highly N-dimensional space, dimensional to study with analytic techniques alone. Various algorithms exist for constructing such Markov chains, including the Metropolis–Hastings algorithm. General explanation Markov chain Monte Carlo methods create samples from a continuous random variable, with probability density proportional to a known function. These samples can be used to e ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Markov Random Field

In the domain of physics and probability, a Markov random field (MRF), Markov network or undirected graphical model is a set of random variables having a Markov property described by an undirected graph In discrete mathematics, particularly in graph theory, a graph is a structure consisting of a set of objects where some pairs of the objects are in some sense "related". The objects are represented by abstractions called '' vertices'' (also call .... In other words, a random field is said to be a Andrey Markov, Markov random field if it satisfies Markov properties. The concept originates from the Spin glass#Sherrington–Kirkpatrick model, Sherrington–Kirkpatrick model. A Markov network or MRF is similar to a Bayesian network in its representation of dependencies; the differences being that Bayesian networks are directed acyclic graph, directed and acyclic, whereas Markov networks are undirected and may be cyclic. Thus, a Markov network can represent certain dependencies th ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Hopfield Network

A Hopfield network (or associative memory) is a form of recurrent neural network, or a spin glass system, that can serve as a content-addressable memory. The Hopfield network, named for John Hopfield, consists of a single layer of neurons, where each neuron is connected to every other neuron except itself. These connections are bidirectional and symmetric, meaning the weight of the connection from neuron ''i'' to neuron ''j'' is the same as the weight from neuron ''j'' to neuron ''i''. Patterns are associatively recalled by fixing certain inputs, and dynamically evolve the network to minimize an energy function, towards local energy minimum states that correspond to stored patterns. Patterns are associatively learned (or "stored") by a Hebbian learning algorithm. One of the key features of Hopfield networks is their ability to recover complete patterns from partial or noisy inputs, making them robust in the face of incomplete or corrupted data. Their connection to statistical mech ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Emotion Recognition

Emotion recognition is the process of identifying human emotion. People vary widely in their accuracy at recognizing the emotions of others. Use of technology to help people with emotion recognition is a relatively nascent research area. Generally, the technology works best if it uses multiple modalities in context. To date, the most work has been conducted on automating the recognition of facial expressions from video, spoken expressions from audio, written expressions from text, and physiology as measured by wearables. Human Humans show a great deal of variability in their abilities to recognize emotion. A key point to keep in mind when learning about automated emotion recognition is that there are several sources of "ground truth", or truth about what the real emotion is. Suppose we are trying to recognize the emotions of Alex. One source is "what would most people say that Alex is feeling?" In this case, the 'truth' may not correspond to what Alex feels, but may corr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Sentiment Analysis

Sentiment analysis (also known as opinion mining or emotion AI) is the use of natural language processing, text analysis, computational linguistics, and biometrics to systematically identify, extract, quantify, and study affective states and subjective information. Sentiment analysis is widely applied to voice of the customer materials such as reviews and survey responses, online and social media, and healthcare materials for applications that range from marketing to customer service to clinical medicine. With the rise of deep language models, such as RoBERTa, also more difficult data domains can be analyzed, e.g., news texts where authors typically express their opinion/sentiment less explicitly.Hamborg, Felix; Donnay, Karsten (2021)"NewsMTSC: A Dataset for (Multi-)Target-dependent Sentiment Classification in Political News Articles" "Proceedings of the 16th Conference of the European Chapter of the Association for Computational Linguistics: Main Volume" Simple cases * "Coron ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Human–computer Interaction

Human–computer interaction (HCI) is the process through which people operate and engage with computer systems. Research in HCI covers the design and the use of computer technology, which focuses on the interfaces between people (users) and computers. HCI researchers observe the ways humans interact with computers and design technologies that allow humans to interact with computers in novel ways. These include visual, auditory, and tactile (haptic) feedback systems, which serve as channels for interaction in both traditional interfaces and mobile computing contexts. A device that allows interaction between human being and a computer is known as a "human–computer interface". As a field of research, human–computer interaction is situated at the intersection of computer science, behavioral sciences, design, media studies, and several other fields of study. The term was popularized by Stuart K. Card, Allen Newell, and Thomas P. Moran in their 1983 book, ''The Psychology of Hum ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

DALL·E

DALL-E, DALL-E 2, and DALL-E 3 (stylised DALL·E) are text-to-image models developed by OpenAI using deep learning methodologies to generate digital images from natural language descriptions known as ''prompts''. The first version of DALL-E was announced in January 2021. In the following year, its successor DALL-E 2 was released. DALL-E 3 was released natively into ChatGPT for ChatGPT Plus and ChatGPT Enterprise customers in October 2023, with availability via OpenAI's API and "Labs" platform provided in early November. Microsoft implemented the model in Bing's Image Creator tool and plans to implement it into their Designer app. With Bing's Image Creator tool, Microsoft Copilot runs on DALL-E 3. In March 2025, DALL-E-3 was replaced in ChatGPT by GPT Image 1's native image-generation capabilities. History and background DALL-E was revealed by OpenAI in a blog post on 5 January 2021, and uses a version of GPT-3 modified to generate images. On 6 April 2022, OpenAI announced ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Brigham And Women's Hospital

Brigham and Women's Hospital (BWH or The Brigham) is a teaching hospital of Harvard Medical School and the largest hospital in the Longwood Medical Area in Boston, Massachusetts. Along with Massachusetts General Hospital, it is one of the two founding members of Mass General Brigham, the largest healthcare provider in Massachusetts. Giles Boland, MD, serves as the hospital's current president. Brigham and Women's Hospital conducts the second largest hospital-based research program in the world, with an annual research budget of more than $630 million. History File:Bwh-longwood.jpg, 221 Longwood Avenue, formerly the Boston Lying-In Hospital building, part of Brigham and Women's Hospital but separate from the main building at 15–75 Francis Street; view from Longwood Avenue File:Free Hospital for Women.jpg, Former site of the Free Hospital for Women across the street from Olmsted Park. This institution was absorbed into Brigham and Women's Hospital. File:Brigham Circle at d ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

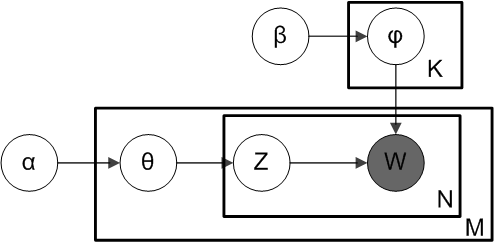

Latent Dirichlet Allocation

In natural language processing, latent Dirichlet allocation (LDA) is a Bayesian network (and, therefore, a generative statistical model) for modeling automatically extracted topics in textual corpora. The LDA is an example of a Bayesian topic model. In this, observations (e.g., words) are collected into documents, and each word's presence is attributable to one of the document's topics. Each document will contain a small number of topics. History In the context of population genetics, LDA was proposed by J. K. Pritchard, M. Stephens and P. Donnelly in 2000. LDA was applied in machine learning by David Blei, Andrew Ng and Michael I. Jordan in 2003. Overview Population genetics In population genetics, the model is used to detect the presence of structured genetic variation in a group of individuals. The model assumes that alleles carried by individuals under study have origin in various extant or past populations. The model and various inference algorithms allow sci ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Support Vector Machine

In machine learning, support vector machines (SVMs, also support vector networks) are supervised max-margin models with associated learning algorithms that analyze data for classification and regression analysis. Developed at AT&T Bell Laboratories, SVMs are one of the most studied models, being based on statistical learning frameworks of VC theory proposed by Vapnik (1982, 1995) and Chervonenkis (1974). In addition to performing linear classification, SVMs can efficiently perform non-linear classification using the ''kernel trick'', representing the data only through a set of pairwise similarity comparisons between the original data points using a kernel function, which transforms them into coordinates in a higher-dimensional feature space. Thus, SVMs use the kernel trick to implicitly map their inputs into high-dimensional feature spaces, where linear classification can be performed. Being max-margin models, SVMs are resilient to noisy data (e.g., misclassified examples). ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |