
Minimum Message Length

Minimum message length (MML) is a Bayesian information-theoretic method for statistical model comparison and selection. It provides a formal information-theoretic restatement of Occam's razor: even when models are equal in their measure of fit-accuracy to the observed data, the one generating the most concise *explanation* of data is more likely to be correct (where the *explanation* consists of the statement of the model, followed by the lossless encoding of the data using the stated model). MML was invented by Chris Wallace, first appearing in the seminal paper "An information measure for classification". MML is intended not just as a theoretical construct, but as a technique that may be deployed in practice. It differs from the related concept of Kolmogorov complexity in that it does not require use of a Turing-complete language to model data. Definition: Shannon's *A Mathematical Theory of Communication* (1948) states that in an optimal code, the message length (in binary) o ...
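
The two-part "explanation" described above (a statement of the model followed by the data encoded under that model) can be illustrated with a small sketch. This is a simplified illustration, not Wallace's full MML estimator: the coin-flip data, the 15-value parameter grid, and the function names are all assumptions made for the example. It uses the standard result that an optimal code spends -log2(p) bits on an event of probability p.

```python
import math

def data_bits(heads: int, tails: int, p: float) -> float:
    """Bits needed to encode the flips with an optimal code for Bernoulli(p)."""
    return -(heads * math.log2(p) + tails * math.log2(1.0 - p))

# Hypothetical data: 100 coin flips, 50 heads and 50 tails.
heads, tails = 50, 50

# Model A: "the coin is fair". Nothing needs to be stated, so the
# model part of the message is assumed to cost 0 bits.
fair_bits = 0.0 + data_bits(heads, tails, 0.5)

# Model B: "the coin has bias p", with p stated as one of 15 equally
# likely grid values k/16 (an assumed coding scheme for the parameter).
candidates = [k / 16 for k in range(1, 16)]
model_bits = math.log2(len(candidates))
best_p = min(candidates, key=lambda p: data_bits(heads, tails, p))
biased_bits = model_bits + data_bits(heads, tails, best_p)

print(f"fair coin:   {fair_bits:.2f} bits")
print(f"biased coin: {biased_bits:.2f} bits (p = {best_p})")
```

Both models fit these data equally well (the best grid value is p = 0.5), yet the biased-coin message is longer because it must also pay for stating the parameter, so the shorter fair-coin explanation is preferred.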

Information Theory

Information theory is the mathematical study of the quantification, storage, and communication of information. The field was established and formalized by Claude Shannon in the 1940s, though early contributions were made in the 1920s through the works of Harry Nyquist and Ralph Hartley. It is at the intersection of electronic engineering, mathematics, statistics, computer science, neurobiology, physics, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (which has two equally likely outcomes) provides less information (lower entropy, less uncertainty) than identifying the outcome from a roll of a die (which has six equally likely outcomes). Some other important measu ...
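
The coin-versus-die comparison above can be checked numerically. The sketch below uses the standard Shannon entropy formula, H = -Σ p·log2(p), measured in bits; the function name `entropy_bits` is chosen here for illustration.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits of a discrete distribution given as probabilities."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

coin = entropy_bits([0.5, 0.5])     # two equally likely outcomes
die = entropy_bits([1 / 6] * 6)     # six equally likely outcomes

print(f"fair coin: {coin:.3f} bits")
print(f"fair die:  {die:.3f} bits")
```

For n equally likely outcomes the entropy reduces to log2(n), which is why the die (log2 6, about 2.585 bits) carries more information per outcome than the coin (1 bit).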

Statistical Consistency

Statistics (from German: *Statistik*, "description of a state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of surveys and experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample to the population as a whole. An experimental study involves taking measurements of the sy ...

Akaike Information Criterion

The Akaike information criterion (AIC) is an estimator of prediction error and thereby relative quality of statistical models for a given set of data. Given a collection of models for the data, AIC estimates the quality of each model, relative to each of the other models. Thus, AIC provides a means for model selection. AIC is founded on information theory. When a statistical model is used to represent the process that generated the data, the representation will almost never be exact; so some information will be lost by using the model to represent the process. AIC estimates the relative amount of information lost by a given model: the less information a model loses, the higher the quality of that model. In estimating the amount of information lost by a model, AIC deals with the trade-off between the goodness of fit of the model and the simplicity of the model. In other words, AIC deals with both the risk of overfitting and the risk of underfitting. The Akaike information crite ...
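
The trade-off between goodness of fit and simplicity can be made concrete with the standard definition AIC = 2k - 2 ln(L̂), where k is the number of estimated parameters and L̂ is the maximized likelihood. That formula is not stated in the excerpt above, and the log-likelihood values below are invented purely for illustration.

```python
def aic(num_params: int, log_likelihood: float) -> float:
    """Akaike information criterion: 2k - 2 ln(L-hat). Lower is better."""
    return 2 * num_params - 2 * log_likelihood

# Hypothetical fitted models: (number of parameters, maximized log-likelihood).
models = {
    "simple": (2, -120.0),
    "complex": (5, -118.5),
}

scores = {name: aic(k, ll) for name, (k, ll) in models.items()}
best = min(scores, key=scores.get)

for name, score in scores.items():
    print(f"{name}: AIC = {score:.1f}")
print("preferred:", best)
```

Here the complex model fits slightly better, but its three extra parameters cost more than the improvement in likelihood is worth, so the simple model has the lower AIC.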

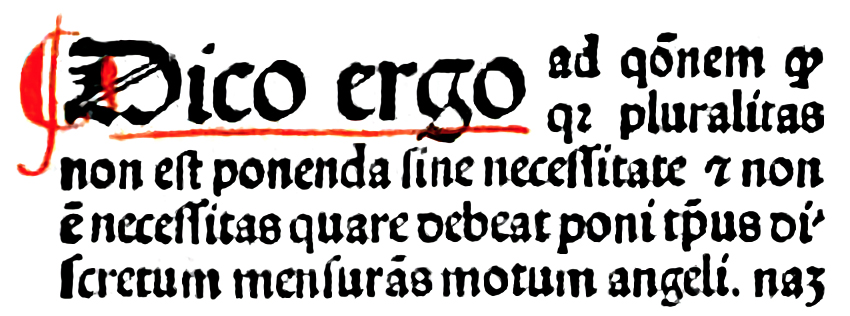

Occam's Razor

In philosophy, Occam's razor (also spelled Ockham's razor or Ocham's razor) is the problem-solving principle that recommends searching for explanations constructed with the smallest possible set of elements. It is also known as the principle of parsimony or the law of parsimony. Attributed to William of Ockham, a 14th-century English philosopher and theologian, it is frequently cited as "Entia non sunt multiplicanda praeter necessitatem", which translates as "Entities must not be multiplied beyond necessity", although Occam never used these exact words. Popularly, the principle is sometimes paraphrased as "of two competing theories, the simpler explanation of an entity is to be preferred." This philosophical razor advocates that when presented with competing hypotheses about the same prediction and both hypotheses have equal explanatory power, one should prefer the hypothesis that requires the fewest assumptions; it is not meant to be a way of choosing between hypotheses that make different predictions. Similarl ...

Minimum Description Length

Minimum Description Length (MDL) is a model selection principle where the shortest description of the data is the best model. MDL methods learn through a data compression perspective and are sometimes described as mathematical applications of Occam's razor. The MDL principle can be extended to other forms of inductive inference and learning, for example to estimation and sequential prediction, without explicitly identifying a single model of the data. MDL has its origins mostly in information theory and has been further developed within the general fields of statistics, theoretical computer science and machine learning, and more narrowly computational learning theory. Historically, there are different, yet interrelated, usages of the definite noun phrase "*the* minimum description length *principle*" that vary in what is meant by *description*:
* Within Jorma Rissanen's theory of learning, a central concept of information theory, models are statistical hypotheses and descri ...

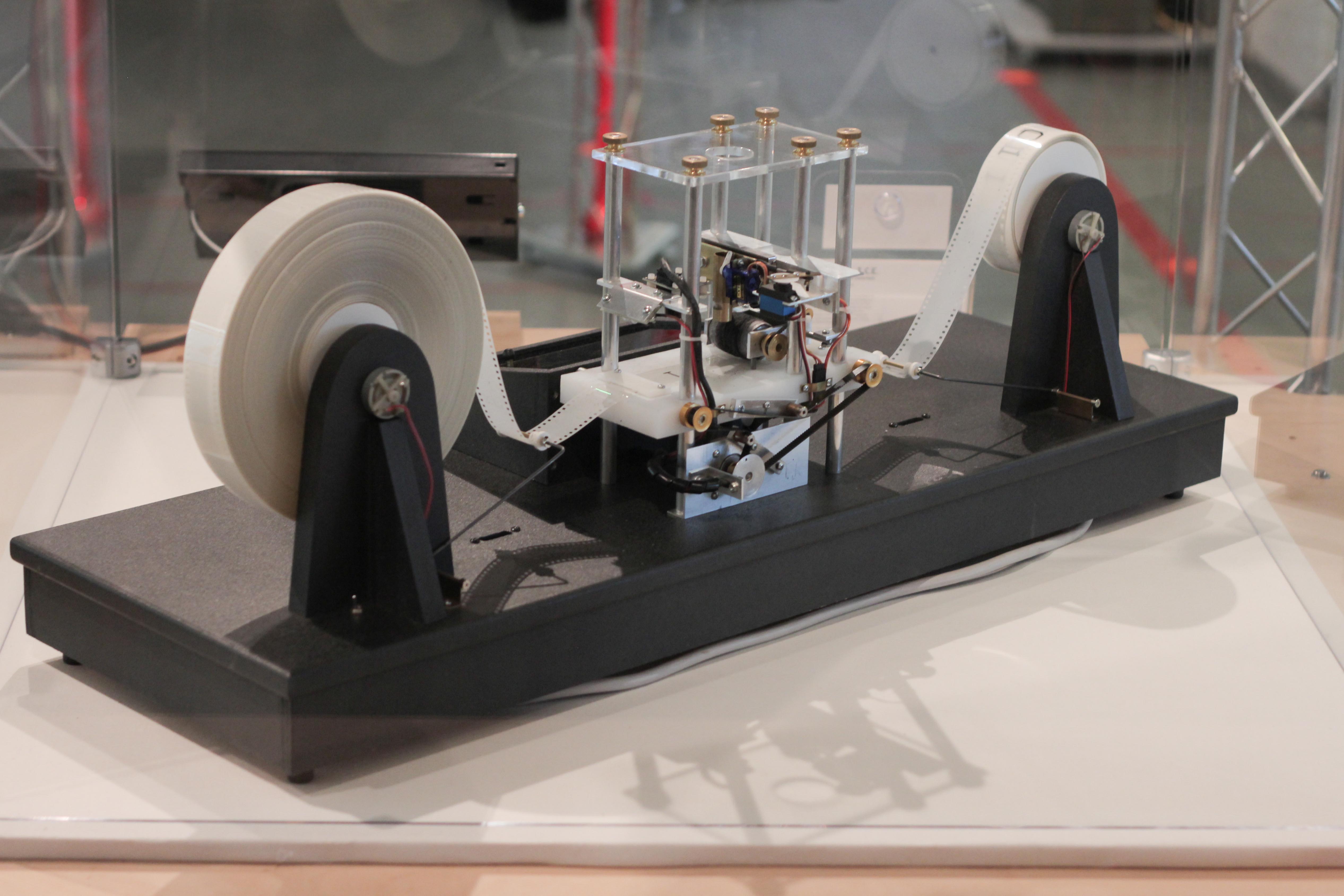

Turing Machine

A Turing machine is a mathematical model of computation describing an abstract machine that manipulates symbols on a strip of tape according to a table of rules. Despite the model's simplicity, it is capable of implementing any computer algorithm. The machine operates on an infinite memory tape divided into discrete cells, each of which can hold a single symbol drawn from a finite set of symbols called the alphabet of the machine. It has a "head" that, at any point in the machine's operation, is positioned over one of these cells, and a "state" selected from a finite set of states. At each step of its operation, the head reads the symbol in its cell. Then, based on the symbol and the machine's own present state, the machine writes a symbol into the same cell, and moves the head one step to the left or the right, or halts the computation. The choice of which replacement symbol to write, which direction to move the head, and whet ...
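
The read, write, move cycle described above can be sketched as a tiny simulator. This is a minimal illustration under stated assumptions: the rule-table layout, the function name `run_turing_machine`, the step limit, and the bit-inverting example machine are all invented here, and the unbounded tape is modeled as a dictionary that returns a blank symbol for unvisited cells.

```python
from collections import defaultdict

def run_turing_machine(rules, tape_input, start_state, halt_state,
                       blank="_", max_steps=10_000):
    """Run a single-tape machine; rules map (state, symbol) -> (write, move, next_state)."""
    tape = defaultdict(lambda: blank, enumerate(tape_input))
    head, state, steps = 0, start_state, 0
    while state != halt_state:
        steps += 1
        if steps > max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = tape[head]                         # read the cell under the head
        write, move, state = rules[(state, symbol)]
        tape[head] = write                          # write into the same cell
        head += 1 if move == "R" else -1            # move one step right or left
    if not tape:
        return ""
    cells = [tape[i] for i in range(min(tape), max(tape) + 1)]
    return "".join(cells).strip(blank)

# Hypothetical example machine: invert every bit, halt at the first blank.
invert_rules = {
    ("invert", "0"): ("1", "R", "invert"),
    ("invert", "1"): ("0", "R", "invert"),
    ("invert", "_"): ("_", "R", "halt"),
}

result = run_turing_machine(invert_rules, "1011", "invert", "halt")
print(result)  # prints 0100
```

The step limit is a practical safeguard for the sketch only; a real Turing machine has no such bound, and whether a given machine halts is in general undecidable.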

Inductive Probability

Inductive probability attempts to give the probability of future events based on past events. It is the basis for inductive reasoning, and gives the mathematical basis for learning and the perception of patterns. It is a source of knowledge about the world. There are three sources of knowledge: inference, communication, and deduction. Communication relays information found using other methods. Deduction establishes new facts based on existing facts. Inference establishes new facts from data. Its basis is Bayes' theorem. Information describing the world is written in a language. For example, a simple mathematical language of propositions may be chosen. Sentences may be written down in this language as strings of characters. But in ...

Inductive Inference

Inductive reasoning refers to a variety of methods of reasoning in which the conclusion of an argument is supported not with deductive certainty, but with some degree of probability. Unlike *deductive* reasoning (such as mathematical induction), where the conclusion is *certain*, given the premises are correct, inductive reasoning produces conclusions that are at best *probable*, given the evidence provided. Types: The types of inductive reasoning include generalization, prediction, statistical syllogism, argument from analogy, and causal inference. There are also differences in how their results are regarded. Inductive generalization: A generalization (more accurately, an *inductive generalization*) proceeds from premises about a sample to a conclusion about the population. The observation obtained from this sample is projected onto the broader population. The proportion Q of the sample has attribute A. Therefore, the proportion Q of the population has attrib ...

Grammar Induction

Grammar induction (or grammatical inference) is the process in machine learning of learning a formal grammar (usually as a collection of *re-write rules* or *productions* or alternatively as a finite-state machine or automaton of some kind) from a set of observations, thus constructing a model which accounts for the characteristics of the observed objects. More generally, grammatical inference is that branch of machine learning where the instance space consists of discrete combinatorial objects such as strings, trees and graphs. Grammar classes: Grammatical inference has often been very focused on the problem of learning finite-state machines of various types (see the article Induction of regular languages for details on these approaches), since there have been efficient algorithms for this problem since the 1980s. Since the beginning of the century, these approaches have been extended to the problem of inference of context-free grammars and richer formalisms, such as mult ...

Algorithmic Probability

In algorithmic information theory, algorithmic probability, also known as Solomonoff probability, is a mathematical method of assigning a prior probability to a given observation. It was invented by Ray Solomonoff in the 1960s. It is used in inductive inference theory and analyses of algorithms. In his general theory of inductive inference, Solomonoff uses the method together with Bayes' rule to obtain probabilities of prediction for an algorithm's future outputs. In the mathematical formalism used, the observations have the form of finite binary strings viewed as outputs of Turing machines, and the universal prior is a probability distribution over the set of finite binary strings calculated from a probability distribution over programs (that is, inputs to a universal Turing machine). The prior is universal in the Turing-computability sense, i.e. no string has zero probability. It is not computable, but it can be approximated. Formally, the probability P is not a probabil ...

Bayesian Network

A Bayesian network (also known as a Bayes network, Bayes net, belief network, or decision network) is a probabilistic graphical model that represents a set of variables and their conditional dependencies via a directed acyclic graph (DAG). While it is one of several forms of causal notation, causal networks are special cases of Bayesian networks. Bayesian networks are ideal for taking an event that occurred and predicting the likelihood that any one of several possible known causes was the contributing factor. For example, a Bayesian network could represent the probabilistic relationships between diseases and symptoms. Given symptoms, the network can be used to compute the probabilities of the presence of various diseases. Efficient algorithms can perform inference and learning in Bayesian networks. Bayesian networks that model sequences of variables (e.g. speech signals or protein sequences) are called dynamic Bayesian networks. Generalizations of Bayesian networks tha ...
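
The disease-and-symptom example above can be sketched with the smallest possible network: one edge, Disease → Symptom. The probabilities below are invented for illustration, and the inference is plain enumeration with Bayes' theorem rather than any of the efficient algorithms the text mentions.

```python
# Hypothetical two-node network: Disease -> Symptom.
p_disease = 0.01                      # prior P(Disease = true), assumed
p_symptom_given = {                   # conditional table P(Symptom = true | Disease), assumed
    True: 0.90,
    False: 0.05,
}

def posterior_disease_given_symptom() -> float:
    """P(Disease = true | Symptom = true), computed by enumerating both disease states."""
    joint_true = p_disease * p_symptom_given[True]
    joint_false = (1.0 - p_disease) * p_symptom_given[False]
    return joint_true / (joint_true + joint_false)

posterior = posterior_disease_given_symptom()
print(f"P(disease | symptom) = {posterior:.3f}")
```

With these assumed numbers the posterior is about 0.154: observing the symptom raises the probability of disease well above the 1% prior, but the disease remains unlikely because it is rare to begin with.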