|

Minimum Description Length

Minimum Description Length (MDL) is a model selection principle where the shortest description of the data is the best model. MDL methods learn through a data compression perspective and are sometimes described as mathematical applications of Occam's razor. The MDL principle can be extended to other forms of inductive inference and learning, for example to estimation and sequential prediction, without explicitly identifying a single model of the data. MDL has its origins mostly in information theory and has been further developed within the general fields of statistics, theoretical computer science and machine learning, and more narrowly computational learning theory. Historically, there are different, yet interrelated, usages of the definite noun phrase "''the'' minimum description length ''principle''" that vary in what is meant by ''description'': * Within Jorma Rissanen's theory of learning, a central concept of information theory, models are statistical hypotheses and descri ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Model Selection

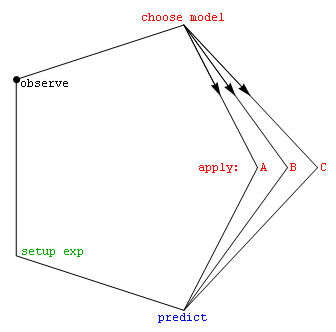

Model selection is the task of selecting a model from among various candidates on the basis of performance criterion to choose the best one. In the context of machine learning and more generally statistical analysis, this may be the selection of a statistical model from a set of candidate models, given data. In the simplest cases, a pre-existing set of data is considered. However, the task can also involve the design of experiments such that the data collected is well-suited to the problem of model selection. Given candidate models of similar predictive or explanatory power, the simplest model is most likely to be the best choice (Occam's razor). state, "The majority of the problems in statistical inference can be considered to be problems related to statistical modeling". Relatedly, has said, "How hetranslation from subject-matter problem to statistical model is done is often the most critical part of an analysis". Model selection may also refer to the problem of selecting ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Data Compression

In information theory, data compression, source coding, or bit-rate reduction is the process of encoding information using fewer bits than the original representation. Any particular compression is either lossy or lossless. Lossless compression reduces bits by identifying and eliminating statistical redundancy. No information is lost in lossless compression. Lossy compression reduces bits by removing unnecessary or less important information. Typically, a device that performs data compression is referred to as an encoder, and one that performs the reversal of the process (decompression) as a decoder. The process of reducing the size of a data file is often referred to as data compression. In the context of data transmission, it is called source coding: encoding is done at the source of the data before it is stored or transmitted. Source coding should not be confused with channel coding, for error detection and correction or line coding, the means for mapping data onto a sig ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Bayesian Inference

Bayesian inference ( or ) is a method of statistical inference in which Bayes' theorem is used to calculate a probability of a hypothesis, given prior evidence, and update it as more information becomes available. Fundamentally, Bayesian inference uses a prior distribution to estimate posterior probabilities. Bayesian inference is an important technique in statistics, and especially in mathematical statistics. Bayesian updating is particularly important in the dynamic analysis of a sequence of data. Bayesian inference has found application in a wide range of activities, including science, engineering, philosophy, medicine, sport, and law. In the philosophy of decision theory, Bayesian inference is closely related to subjective probability, often called "Bayesian probability". Introduction to Bayes' rule Formal explanation Bayesian inference derives the posterior probability as a consequence of two antecedents: a prior probability and a "likelihood function" derive ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Statistics

Statistics (from German language, German: ', "description of a State (polity), state, a country") is the discipline that concerns the collection, organization, analysis, interpretation, and presentation of data. In applying statistics to a scientific, industrial, or social problem, it is conventional to begin with a statistical population or a statistical model to be studied. Populations can be diverse groups of people or objects such as "all people living in a country" or "every atom composing a crystal". Statistics deals with every aspect of data, including the planning of data collection in terms of the design of statistical survey, surveys and experimental design, experiments. When census data (comprising every member of the target population) cannot be collected, statisticians collect data by developing specific experiment designs and survey sample (statistics), samples. Representative sampling assures that inferences and conclusions can reasonably extend from the sample ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Probability Theory

Probability theory or probability calculus is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms of probability, axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure (mathematics), measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of the sample space is called an event (probability theory), event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of determinism, non-deterministic or uncertain processes or measured Quantity, quantities that may either be single occurrences or evolve over time in a random fashion). Although it is no ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Probability Distribution

In probability theory and statistics, a probability distribution is a Function (mathematics), function that gives the probabilities of occurrence of possible events for an Experiment (probability theory), experiment. It is a mathematical description of a Randomness, random phenomenon in terms of its sample space and the Probability, probabilities of Event (probability theory), events (subsets of the sample space). For instance, if is used to denote the outcome of a coin toss ("the experiment"), then the probability distribution of would take the value 0.5 (1 in 2 or 1/2) for , and 0.5 for (assuming that fair coin, the coin is fair). More commonly, probability distributions are used to compare the relative occurrence of many different random values. Probability distributions can be defined in different ways and for discrete or for continuous variables. Distributions with special properties or for especially important applications are given specific names. Introduction A prob ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Function (mathematics)

In mathematics, a function from a set (mathematics), set to a set assigns to each element of exactly one element of .; the words ''map'', ''mapping'', ''transformation'', ''correspondence'', and ''operator'' are sometimes used synonymously. The set is called the Domain of a function, domain of the function and the set is called the codomain of the function. Functions were originally the idealization of how a varying quantity depends on another quantity. For example, the position of a planet is a ''function'' of time. History of the function concept, Historically, the concept was elaborated with the infinitesimal calculus at the end of the 17th century, and, until the 19th century, the functions that were considered were differentiable function, differentiable (that is, they had a high degree of regularity). The concept of a function was formalized at the end of the 19th century in terms of set theory, and this greatly increased the possible applications of the concept. A f ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

One-to-one Correspondence

In mathematics, a bijection, bijective function, or one-to-one correspondence is a function between two sets such that each element of the second set (the codomain) is the image of exactly one element of the first set (the domain). Equivalently, a bijection is a relation between two sets such that each element of either set is paired with exactly one element of the other set. A function is bijective if it is invertible; that is, a function f:X\to Y is bijective if and only if there is a function g:Y\to X, the ''inverse'' of , such that each of the two ways for composing the two functions produces an identity function: g(f(x)) = x for each x in X and f(g(y)) = y for each y in Y. For example, the ''multiplication by two'' defines a bijection from the integers to the even numbers, which has the ''division by two'' as its inverse function. A function is bijective if and only if it is both injective (or ''one-to-one'')—meaning that each element in the codomain is mapped f ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Universal Code (data Compression)

In data compression, a universal code for integers is a prefix code that maps the positive integers onto binary codewords, with the additional property that whatever the true probability distribution on integers, as long as the distribution is monotonic (i.e., ''p''(''i'') ≥ ''p''(''i'' + 1) for all positive ''i''), the expected lengths of the codewords are within a constant factor of the expected lengths that the optimal code for that probability distribution would have assigned. A universal code is ''asymptotically optimal'' if the ratio between actual and optimal expected lengths is bounded by a function of the information entropy of the code that, in addition to being bounded, approaches 1 as entropy approaches infinity. In general, most prefix codes for integers assign longer codewords to larger integers. Such a code can be used to efficiently communicate a message drawn from a set of possible messages, by simply ordering the set of messages ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Lossless Compression

Lossless compression is a class of data compression that allows the original data to be perfectly reconstructed from the compressed data with no loss of information. Lossless compression is possible because most real-world data exhibits statistical redundancy. By contrast, lossy compression permits reconstruction only of an approximation of the original data, though usually with greatly improved compression rates (and therefore reduced media sizes). By operation of the pigeonhole principle, no lossless compression algorithm can shrink the size of all possible data: Some data will get longer by at least one symbol or bit. Compression algorithms are usually effective for human- and machine-readable documents and cannot shrink the size of random data that contain no redundancy. Different algorithms exist that are designed either with a specific type of input data in mind or with specific assumptions about what kinds of redundancy the uncompressed data are likely to contain. Lo ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Ridge Regression

Ridge regression (also known as Tikhonov regularization, named for Andrey Tikhonov) is a method of estimating the coefficients of multiple- regression models in scenarios where the independent variables are highly correlated. It has been used in many fields including econometrics, chemistry, and engineering. It is a method of regularization of ill-posed problems. It is particularly useful to mitigate the problem of multicollinearity in linear regression, which commonly occurs in models with large numbers of parameters. In general, the method provides improved efficiency in parameter estimation problems in exchange for a tolerable amount of bias (see bias–variance tradeoff). The theory was first introduced by Hoerl and Kennard in 1970 in their ''Technometrics'' papers "Ridge regressions: biased estimation of nonorthogonal problems" and "Ridge regressions: applications in nonorthogonal problems". Ridge regression was developed as a possible solution to the imprecision of least ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |