|

Memory Pool

Memory pools, also called fixed-size blocks allocation, is the use of pools for memory management that allows dynamic memory allocation. Dynamic memory allocation can, and has been achieved through the use of techniques such as malloc and C++'s operator new; although established and reliable implementations, these suffer from fragmentation because of variable block sizes, it is not recommendable to use them in a real time system due to performance. A more efficient solution is preallocating a number of memory blocks with the same size called the memory pool. The application can allocate, access, and free blocks represented by handles at run time. Many real-time operating systems use memory pools, such as the Transaction Processing Facility. Some systems, like the web server Nginx, use the term ''memory pool'' to refer to a group of variable-size allocations which can be later deallocated all at once. This is also known as a ''region''; see region-based memory manage ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

RTOS

A real-time operating system (RTOS) is an operating system (OS) for real-time computing applications that processes data and events that have critically defined time constraints. A RTOS is distinct from a time-sharing operating system, such as Unix, which manages the sharing of system resources with a scheduler, data buffers, or fixed task prioritization in multitasking or multiprogramming environments. All operations must verifiably complete within given time and resource constraints or else fail safe. Real-time operating systems are event-driven and preemptive, meaning the OS can monitor the relevant priority of competing tasks, and make changes to the task priority. Characteristics A key characteristic of an RTOS is the level of its consistency concerning the amount of time it takes to accept and complete an application's task; the variability is "jitter". A "hard" real-time operating system (hard RTOS) has less jitter than a "soft" real-time operating system (soft RTOS); a l ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Object Pool

The object pool pattern is a software creational design pattern that uses a set of initialized objects kept ready to use – a "pool" – rather than allocating and destroying them on demand. A client of the pool will request an object from the pool and perform operations on the returned object. When the client has finished, it returns the object to the pool rather than destroying it; this can be done manually or automatically. Object pools are primarily used for performance: in some circumstances, object pools significantly improve performance. Object pools complicate object lifetime, as objects obtained from and returned to a pool are not actually created or destroyed at this time, and thus require care in implementation. Description When it is necessary to work with numerous objects that are particularly expensive to instantiate and each object is only needed for a short period of time, the performance of an entire application may be adversely affected. An object pool desi ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Free List

A free list (or freelist) is a data structure used in a scheme for dynamic memory allocation. It operates by connecting unallocated regions of memory together in a linked list, using the first word of each unallocated region as a pointer to the next. It is most suitable for allocating from a memory pool, where all objects have the same size. Free lists make the allocation and deallocation operations very simple. To free a region, one would just link it to the free list. To allocate a region, one would simply remove a single region from the end of the free list and use it. If the regions are variable-sized, one may have to search for a region of large enough size, which can be expensive. Free lists have the disadvantage, inherited from linked lists, of poor locality of reference and so poor data cache utilization, and they do not automatically consolidate adjacent regions to fulfill allocation requests for large regions, unlike the buddy allocation system. Nevertheless, they ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Recursion (computer Science)

In computer science, recursion is a method of solving a computational problem where the solution depends on solutions to smaller instances of the same problem. Recursion solves such recursion, recursive problems by using function (computer science), functions that call themselves from within their own code. The approach can be applied to many types of problems, and recursion is one of the central ideas of computer science. Most computer programming languages support recursion by allowing a function to call itself from within its own code. Some functional programming languages (for instance, Clojure) do not define any looping constructs but rely solely on recursion to repeatedly call code. It is proved in computability theory that these recursive-only languages are Turing complete; this means that they are as powerful (they can be used to solve the same problems) as imperative languages based on control structures such as and . Repeatedly calling a function from within itse ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Control Flow

In computer science, control flow (or flow of control) is the order in which individual statements, instructions or function calls of an imperative program are executed or evaluated. The emphasis on explicit control flow distinguishes an ''imperative programming'' language from a ''declarative programming'' language. Within an imperative programming language, a ''control flow statement'' is a statement that results in a choice being made as to which of two or more paths to follow. For non-strict functional languages, functions and language constructs exist to achieve the same result, but they are usually not termed control flow statements. A set of statements is in turn generally structured as a block, which in addition to grouping, also defines a lexical scope. Interrupts and signals are low-level mechanisms that can alter the flow of control in a way similar to a subroutine, but usually occur as a response to some external stimulus or event (that can occur asynchr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Compile Time

In computer science, compile time (or compile-time) describes the time window during which a language's statements are converted into binary instructions for the processor to execute. The term is used as an adjective to describe concepts related to the context of program compilation, as opposed to concepts related to the context of program execution ( run time). For example, ''compile-time requirements'' are programming language requirements that must be met by source code before compilation and ''compile-time properties'' are properties of the program that can be reasoned about during compilation. The actual length of time it takes to compile a program is usually referred to as ''compilation time''. Overview Most compilers have at least the following compiler phases (which therefore occur at compile-time): syntax analysis, semantic analysis, and code generation. During optimization phases, constant expressions in the source code can also be evaluated at compile-time usin ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Region-based Memory Management

In computer science, region-based memory management is a type of memory management in which each allocated object is assigned to a region. A region, also called a partition, subpool, zone, arena, area, or memory context, is a collection of allocated objects that can be efficiently reallocated or deallocated all at once. Memory allocators using region-based managements are often called area allocators, and when they work by only "bumping" a single pointer, as bump allocators. Like stack allocation, regions facilitate allocation and deallocation of memory with low overhead; but they are more flexible, allowing objects to live longer than the stack frame in which they were allocated. In typical implementations, all objects in a region are allocated in a single contiguous range of memory addresses, similarly to how stack frames are typically allocated. In OS/360 and successors, the concept applies at two levels; each job runs within a contiguous partition or region. Storage allocati ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Nginx

(pronounced "engine x" , stylized as NGINX or nginx) is a web server that can also be used as a reverse proxy, load balancer, mail proxy and HTTP cache. The software was created by Russian developer Igor Sysoev and publicly released in 2004. Nginx is free and open-source software, released under the terms of the 2-clause BSD license. A large fraction of web servers use Nginx, often as a load balancer. A company of the same name was founded in 2011 to provide support and ''NGINX Plus'' paid software. In March 2019, the company was acquired by F5 for $670 million. Popularity , W3Tech's web server count of all web sites ranked Nginx first with 33.8%. Apache was second at 26.4% and Cloudflare Server third at 23.4%. , Netcraft estimated that Nginx served 20.11% of the million busiest websites with Cloudflare a little ahead at 22.99%. Apache at 17.83% and Microsoft Internet Information Services at 4.16% rounded out the top four servers for the busiest websites. Some o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Transaction Processing Facility

Transaction Processing Facility (TPF) is an IBM real-time operating system for mainframe computers descended from the IBM System/360 family, including zSeries and System z9. TPF delivers fast, high-volume, high-throughput transaction processing, handling large, continuous loads of essentially simple transactions across large, geographically dispersed networks. While there are other industrial-strength transaction processing systems, notably IBM's own CICS and IMS, TPF's specialty is extreme volume, large numbers of concurrent users, and very fast response times. For example, it handles VISA credit card transaction processing during the peak holiday shopping season. The TPF passenger reservation application PARS, or its international version IPARS, is used by many airlines. ''PARS'' is an ''application program''; TPF is an operating system. One of TPF's major optional components is a high performance, specialized database facility called ''TPF Database Facility'' (TPFDF). A ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Run Time (program Lifecycle Phase)

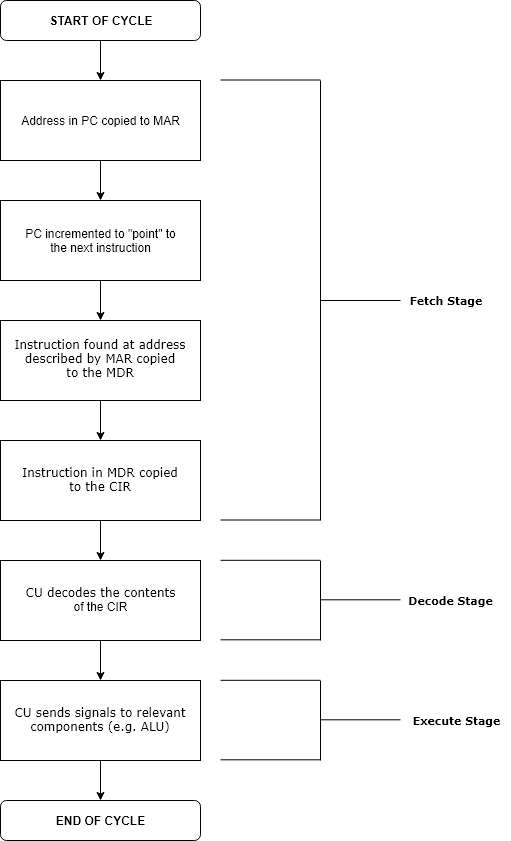

Execution in computer and software engineering is the process by which a computer or virtual machine interprets and acts on the instructions of a computer program. Each instruction of a program is a description of a particular action which must be carried out, in order for a specific problem to be solved. Execution involves repeatedly following a " fetch–decode–execute" cycle for each instruction done by the control unit. As the executing machine follows the instructions, specific effects are produced in accordance with the semantics of those instructions. Programs for a computer may be executed in a batch process without human interaction or a user may type commands in an interactive session of an interpreter. In this case, the "commands" are simply program instructions, whose execution is chained together. The term run is used almost synonymously. A related meaning of both "to run" and "to execute" refers to the specific action of a user starting (or ''launching'' o ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

Fixed-size Blocks Allocation

Memory management (also dynamic memory management, dynamic storage allocation, or dynamic memory allocation) is a form of resource management applied to computer memory. The essential requirement of memory management is to provide ways to dynamically allocate portions of memory to programs at their request, and free it for reuse when no longer needed. This is critical to any advanced computer system where more than a single process might be underway at any time. Several methods have been devised that increase the effectiveness of memory management. Virtual memory systems separate the memory addresses used by a process from actual physical addresses, allowing separation of processes and increasing the size of the virtual address space beyond the available amount of RAM using paging or swapping to secondary storage. The quality of the virtual memory manager can have an extensive effect on overall system performance. The system allows a computer to appear as if it may have more m ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |