
Markov Logic Network

A Markov logic network (MLN) is a probabilistic logic which applies the ideas of a Markov network to first-order logic, defining probability distributions on possible worlds on any given domain. History: In 2002, Ben Taskar, Pieter Abbeel and Daphne Koller introduced relational Markov networks as templates to specify Markov networks abstractly and without reference to a specific domain. Work on Markov logic networks was begun in 2003 by Pedro Domingos and Matt Richardson. Markov logic networks are a popular formalism for statistical relational learning. Syntax: A Markov logic network consists of a collection of formulas from first-order logic, each of which is assigned a real number, the weight. The underlying idea is that an interpretation is more likely if it satisfies formulas with positive weights and less likely if it satisfies formulas with negative weights. For instance, the following Markov logic network codifies how smokers are more likely to be friends with other sm ...
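The effect of a weight on the likelihood of an interpretation can be sketched numerically. The example below assumes the usual log-linear form, in which the unnormalized probability of a world is exp(w · n) with n the number of true groundings of a formula; the excerpt above does not spell this out. The formula, the two-person domain, and the weight 1.5 are hypothetical choices made only for illustration.

```python
import math
from itertools import product

PEOPLE = ["anna", "bob"]  # hypothetical two-person domain


def count_true_groundings(smokes, friends):
    """Count pairs (x, y) that satisfy Smokes(x) AND Friends(x, y) -> Smokes(y)."""
    count = 0
    for x, y in product(PEOPLE, repeat=2):
        premise = smokes[x] and friends[(x, y)]
        if (not premise) or smokes[y]:
            count += 1
    return count


def unnormalized_weight(smokes, friends, w):
    """exp(w * n): the world's weight before dividing by the partition function."""
    return math.exp(w * count_true_groundings(smokes, friends))


# Assumed evidence: the two people are friends with each other, not with themselves.
friends = {(x, y): x != y for x, y in product(PEOPLE, repeat=2)}
both_smoke = {"anna": True, "bob": True}
only_anna = {"anna": True, "bob": False}

W = 1.5  # hypothetical positive weight
ratio = unnormalized_weight(both_smoke, friends, W) / unnormalized_weight(only_anna, friends, W)
print(ratio)  # how much more likely the world with one extra satisfied grounding is
```

With a positive weight, the world that satisfies one more grounding is more likely by a factor of exp(w); a negative weight would reverse the preference.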

Probabilistic Logic

Probabilistic logic (also probability logic and probabilistic reasoning) involves the use of probability and logic to deal with uncertain situations. Probabilistic logic extends traditional logic truth tables with probabilistic expressions. A difficulty of probabilistic logics is their tendency to multiply the computational complexities of their probabilistic and logical components. Other difficulties include the possibility of counter-intuitive results, such as in the case of belief fusion in Dempster–Shafer theory. Trust in sources and epistemic uncertainty about the probabilities they provide, as defined in subjective logic, are additional elements to consider. The need to deal with a broad variety of contexts and issues has led to many different proposals. Logical background: There are numerous proposals for probabilistic logics. Very roughly, they can be categorized into two different classes: those logics that attempt to make a probabilistic extension to logical entailment, s ...

Predicate Symbol

In mathematical logic, a predicate variable is a predicate letter which functions as a "placeholder" for a relation (between terms), but which has not been specifically assigned any particular relation (or meaning). Common symbols for denoting predicate variables include capital roman letters such as P, Q and R, or lower case roman letters, e.g., x. In first-order logic, they can be more properly called metalinguistic variables. In higher-order logic, predicate variables correspond to propositional variables which can stand for well-formed formulas of the same logic, and such variables can be quantified by means of (at least) second-order quantifiers. Notation: Predicate variables should be distinguished from predicate constants, which could be represented either with a different (exclusive) set of predicate letters, or by their own symbols which really do have their own specific meaning in their domain of discourse: e.g. $=$, $\in$, $\le$, $<$, $\subset$, and so on. If letters ...

Probabilistic Logic Network

A probabilistic logic network (PLN) is a conceptual, mathematical and computational approach to uncertain inference. It was inspired by logic programming and it uses probabilities in place of crisp (true/false) truth values, and fractional uncertainty in place of crisp known/unknown values. In order to carry out effective reasoning in real-world circumstances, artificial intelligence software handles uncertainty. Previous approaches to uncertain inference do not have the breadth of scope required to provide an integrated treatment of the disparate forms of cognitively critical uncertainty as they manifest themselves within the various forms of pragmatic inference. Going beyond prior probabilistic approaches to uncertain inference, PLN encompasses uncertain logic with such ideas as induction, abduction, analogy, fuzziness and speculation, and reasoning about time and causality. PLN was developed by Ben Goertzel, Matt Ikle, Izabela Lyon Freire Goertzel, and Ari Heljakka for use ...

Statistical Relational Learning

Statistical relational learning (SRL) is a subdiscipline of artificial intelligence and machine learning that is concerned with domain models that exhibit both uncertainty (which can be dealt with using statistical methods) and complex, relational structure. Typically, the knowledge representation formalisms developed in SRL use (a subset of) first-order logic to describe relational properties of a domain in a general manner (universal quantification) and draw upon probabilistic graphical models (such as Bayesian networks or Markov networks) to model the uncertainty; some also build upon the methods of inductive logic programming. Significant contributions to the field have been made since the late 1990s. As is evident from the characterization above, the field is not strictly limited to learning aspects; it is equally concerned with reasoning (specifically probabilistic inference) and knowledge representation. Therefore, alternative terms that reflect the main foci of the field ...

Markov Random Field

In the domain of physics and probability, a Markov random field (MRF), Markov network or undirected graphical model is a set of random variables having a Markov property described by an undirected graph. In other words, a random field is said to be a Markov random field if it satisfies Markov properties. The concept originates from the Sherrington–Kirkpatrick model. A Markov network or MRF is similar to a Bayesian network in its representation of dependencies; the differences being that Bayesian networks are directed and acyclic, whereas Markov networks are undirected and may be cyclic. Thus, a Markov network can represent certain dependencies th ...

Weighted Model Counting

A weight function is a mathematical device used when performing a sum, integral, or average to give some elements more "weight" or influence on the result than other elements in the same set. The result of this application of a weight function is a weighted sum or weighted average. Weight functions occur frequently in statistics and analysis, and are closely related to the concept of a measure. Weight functions can be employed in both discrete and continuous settings. They can be used to construct systems of calculus called "weighted calculus" and "meta-calculus" (Jane Grossman, Meta-Calculus: Differential and Integral, 1981). Discrete weights, general definition: In the discrete setting, a weight function $w \colon A \to \mathbb{R}^+$ is a positive function defined on a discrete set $A$, which is typically finite or countable. The weight function $w(a) := 1$ corresponds to the unweighted situation in which all elements have equal weight. One can then apply this weight to various conce ...
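The discrete definition above can be illustrated with a short sketch of a weighted sum and a weighted average over a finite set. The function names are hypothetical, and the check that weights are strictly positive follows the excerpt's requirement that w maps into the positive reals.

```python
def weighted_sum(values, weights):
    """Sum of w(a) * f(a) over a finite set, given as parallel sequences."""
    if len(values) != len(weights):
        raise ValueError("values and weights must have the same length")
    if any(w <= 0 for w in weights):
        raise ValueError("weights must be strictly positive")
    return sum(w * v for v, w in zip(values, weights))


def weighted_average(values, weights):
    """Weighted sum divided by the total weight."""
    return weighted_sum(values, weights) / sum(weights)


# With w(a) = 1 for every element, the weighted average is the ordinary mean.
print(weighted_average([1, 2, 3], [1, 1, 1]))
# Giving the first element three times the weight pulls the average toward it.
print(weighted_average([1, 3], [3, 1]))
```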

Pseudolikelihood

In statistical theory, a pseudolikelihood is an approximation to the joint probability distribution of a collection of random variables. The practical use of this is that it can provide an approximation to the likelihood function of a set of observed data which may either provide a computationally simpler problem for estimation, or may provide a way of obtaining explicit estimates of model parameters. The pseudolikelihood approach was introduced by Julian Besag in the context of analysing data having spatial dependence. Definition: Given a set of random variables $X = X_1, X_2, \ldots, X_n$, the pseudolikelihood of $X = x = (x_1, x_2, \ldots, x_n)$ is

$$L(\theta) := \prod_i \mathrm{Pr}_\theta(X_i = x_i \mid X_j = x_j \text{ for } j \neq i) = \prod_i \mathrm{Pr}_\theta(X_i = x_i \mid X_{-i} = x_{-i})$$

in the discrete case and

$$L(\theta) := \prod_i p_\theta(x_i \mid x_j \text{ for } j \neq i) = \prod_i p_\theta(x_i \mid x_{-i}) = \prod_i p_\theta(x_i \mid x_1, \ldots, \hat{x}_i, \ldots, x_n)$$

in the continuous one. Here X is a vect ...
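The discrete-case product of conditionals can be computed directly for a small model. The sketch below assumes a hypothetical chain of ±1 spins whose unnormalized probability is exp(θ · Σ x_i x_{i+1}); this model and the function names are illustrative and not taken from the excerpt.

```python
import math


def unnormalized(x, theta):
    """Hypothetical chain model: exp(theta * sum of products of neighbouring spins)."""
    return math.exp(theta * sum(a * b for a, b in zip(x, x[1:])))


def pseudolikelihood(x, theta, states=(-1, 1)):
    """Product over i of Pr(X_i = x_i | all other coordinates fixed at their values in x)."""
    x = tuple(x)
    result = 1.0
    for i in range(len(x)):
        numerator = unnormalized(x, theta)
        # Sum over the alternatives for coordinate i only; the global
        # normalizing constant cancels in this ratio.
        denominator = sum(
            unnormalized(x[:i] + (s,) + x[i + 1:], theta) for s in states
        )
        result *= numerator / denominator
    return result


print(pseudolikelihood((1, 1, -1), theta=0.7))
```

Each factor needs a sum over the states of a single variable only, which is why the pseudolikelihood avoids the sum over all joint configurations that the full likelihood requires.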

Belief Propagation

Belief propagation, also known as sum–product message passing, is a message-passing algorithm for performing inference on graphical models, such as Bayesian networks and Markov random fields. It calculates the marginal distribution for each unobserved node (or variable), conditional on any observed nodes (or variables). Belief propagation is commonly used in artificial intelligence and information theory, and has demonstrated empirical success in numerous applications, including low-density parity-check codes, turbo codes, free energy approximation, and satisfiability. The algorithm was first proposed by Judea Pearl in 1982, who formulated it as an exact inference algorithm on trees, later extended to polytrees. While the algorithm is not exact on general graphs, it has been shown to be a useful approximate algorithm. Motivation: Given a finite set of discrete random variables X_1, \ldots, X_n with joint probability mass function p, a common task is to compute ...
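Because the algorithm is exact on trees, its simplest instance is a chain. The following sketch passes sum-product messages along a chain-structured pairwise Markov random field and checks the resulting marginals against brute-force enumeration. The potential tables and function names are hypothetical and chosen only for illustration.

```python
from itertools import product


def chain_marginals(unary, pairwise):
    """Sum-product on a chain. unary[i][s] is the potential of X_i = s;
    pairwise[i][a][b] couples X_i = a with X_{i+1} = b."""
    n, k = len(unary), len(unary[0])
    fwd = [[1.0] * k for _ in range(n)]  # message reaching node i from the left
    for i in range(1, n):
        for b in range(k):
            fwd[i][b] = sum(
                fwd[i - 1][a] * unary[i - 1][a] * pairwise[i - 1][a][b]
                for a in range(k)
            )
    bwd = [[1.0] * k for _ in range(n)]  # message reaching node i from the right
    for i in range(n - 2, -1, -1):
        for a in range(k):
            bwd[i][a] = sum(
                pairwise[i][a][b] * unary[i + 1][b] * bwd[i + 1][b]
                for b in range(k)
            )
    marginals = []
    for i in range(n):
        belief = [fwd[i][s] * unary[i][s] * bwd[i][s] for s in range(k)]
        z = sum(belief)
        marginals.append([v / z for v in belief])
    return marginals


def brute_force_marginals(unary, pairwise):
    """Reference answer: enumerate every joint assignment."""
    n, k = len(unary), len(unary[0])
    totals = [[0.0] * k for _ in range(n)]
    for x in product(range(k), repeat=n):
        p = 1.0
        for i in range(n):
            p *= unary[i][x[i]]
        for i in range(n - 1):
            p *= pairwise[i][x[i]][x[i + 1]]
        for i in range(n):
            totals[i][x[i]] += p
    z = sum(totals[0])
    return [[v / z for v in row] for row in totals]


# Hypothetical potentials for three binary variables.
unary = [[1.0, 2.0], [1.0, 1.0], [3.0, 1.0]]
pairwise = [[[2.0, 1.0], [1.0, 2.0]], [[1.0, 3.0], [3.0, 1.0]]]
print(chain_marginals(unary, pairwise))
```

Message passing costs a number of operations linear in the chain length, whereas the enumeration used as a reference grows exponentially with the number of variables.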

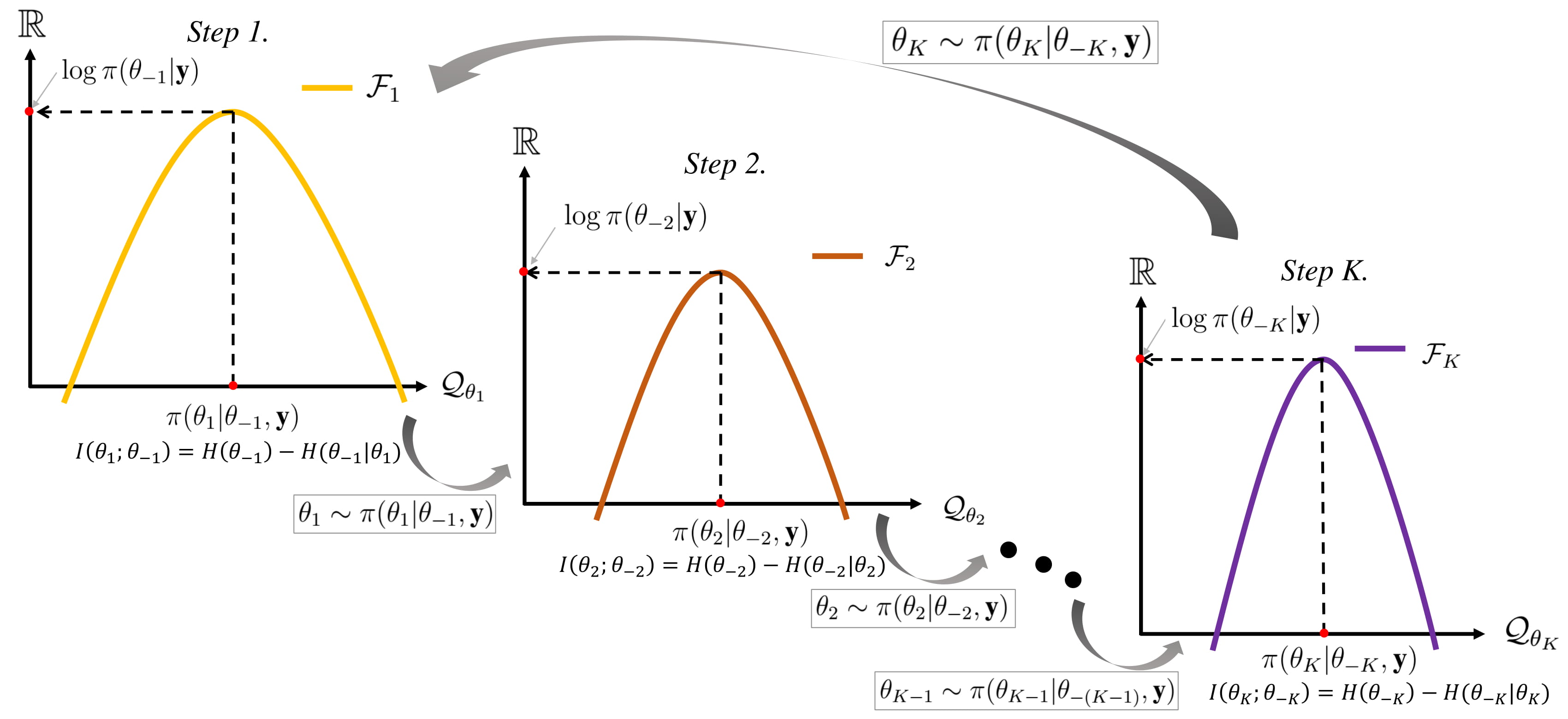

Gibbs Sampling

In statistics, Gibbs sampling or a Gibbs sampler is a Markov chain Monte Carlo (MCMC) algorithm for sampling from a specified multivariate probability distribution when direct sampling from the joint distribution is difficult, but sampling from the conditional distribution is more practical. This sequence can be used to approximate the joint distribution (e.g., to generate a histogram of the distribution); to approximate the marginal distribution of one of the variables, or some subset of the variables (for example, the unknown parameters or latent variables); or to compute an integral (such as the expected value of one of the variables). Typically, some of the variables correspond to observations whose values are known, and hence do not need to be sampled. Gibbs sampling is commonly used as a means of statistical inference, especially Bayesian inference. It is a randomized algorithm (i.e. an algorithm that makes ...
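A minimal sketch of the idea: sampling a standard bivariate normal with correlation ρ by alternately drawing each coordinate from its conditional distribution, which for this target is N(ρ · other, 1 − ρ²). The target distribution, the function names, and the burn-in length are assumptions chosen for illustration.

```python
import math
import random


def gibbs_bivariate_normal(rho, n_samples, burn_in=500, seed=0):
    """Alternate draws from x | y and y | x for a standard bivariate normal."""
    rng = random.Random(seed)
    cond_sd = math.sqrt(1.0 - rho * rho)  # standard deviation of each conditional
    x, y = 0.0, 0.0
    samples = []
    for step in range(burn_in + n_samples):
        x = rng.gauss(rho * y, cond_sd)  # sample x given the current y
        y = rng.gauss(rho * x, cond_sd)  # sample y given the new x
        if step >= burn_in:
            samples.append((x, y))
    return samples


def sample_correlation(samples):
    """Empirical Pearson correlation of the sampled pairs."""
    n = len(samples)
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in samples) / n
    var_x = sum((x - mean_x) ** 2 for x, _ in samples) / n
    var_y = sum((y - mean_y) ** 2 for _, y in samples) / n
    return cov / math.sqrt(var_x * var_y)


samples = gibbs_bivariate_normal(rho=0.8, n_samples=20000, seed=1)
print(sample_correlation(samples))  # should land near the target correlation
```

Successive samples are correlated with each other, so the chain needs more draws than independent sampling would to reach the same accuracy.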

♯P

In computational complexity theory, the complexity class #P (pronounced "sharp P" or, sometimes "number P" or "hash P") is the set of the counting problems associated with the decision problems in the set NP. More formally, #P is the class of function problems of the form "compute f(x)", where f is the number of accepting paths of a nondeterministic Turing machine running in polynomial time. Unlike most well-known complexity classes, it is not a class of decision problems but a class of function problems. The most difficult, representative problems of this class are #P-complete. Relation to decision problems: An NP decision problem is often of the form "Are there any solutions that satisfy certain constraints?" For example:

* Are there any subsets of a list of integers that add up to zero? (subset sum problem)
* Are there any Hamiltonian cycles in a given graph with cost less than 100? (traveling salesman problem)
* Are there any variable assignments that satisfy a g ...
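A counting problem asks how many solutions exist rather than whether one exists. As a sketch, the brute-force counter below returns the number of satisfying assignments of a Boolean formula in conjunctive normal form. The integer clause encoding (k for variable k, -k for its negation) and the function name are assumptions made for this example.

```python
from itertools import product


def count_models(clauses, n_vars):
    """Count assignments to variables 1..n_vars that satisfy every clause.
    A literal k means variable k is true; -k means variable k is false."""
    count = 0
    for assignment in product([False, True], repeat=n_vars):
        satisfied = all(
            any(assignment[abs(lit) - 1] == (lit > 0) for lit in clause)
            for clause in clauses
        )
        if satisfied:
            count += 1
    return count


# (x1 OR x2) AND (NOT x1 OR x3)
print(count_models([[1, 2], [-1, 3]], n_vars=3))  # → 4
```

The decision version only needs the loop to stop at the first satisfying assignment; the counting version must account for all 2^n assignments, and this enumeration takes exponential time.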

Directly Proportional

In mathematics, two sequences of numbers, often experimental data, are proportional or directly proportional if their corresponding elements have a constant ratio. The ratio is called the coefficient of proportionality (or proportionality constant) and its reciprocal is known as the constant of normalization (or normalizing constant). Two sequences are inversely proportional if corresponding elements have a constant product. Two functions $f(x)$ and $g(x)$ are proportional if their ratio $\frac{f(x)}{g(x)}$ is a constant function. If several pairs of variables share the same direct proportionality constant, the equation expressing the equality of these ratios is called a proportion (for details see Ratio). Proportionality is closely related to linearity. Direct proportionality: Given an independent variable x and a dependent variable y, y is directly proportional to x if there is a positive constant k such that $y = kx$. The relation is often ...

Variable Assignment

First-order logic, also called predicate logic, predicate calculus, or quantificational logic, is a collection of formal systems used in mathematics, philosophy, linguistics, and computer science. First-order logic uses quantified variables over non-logical objects, and allows the use of sentences that contain variables. Rather than propositions such as "all humans are mortal", in first-order logic one can have expressions in the form "for all x, if x is a human, then x is mortal", where "for all x" is a quantifier, x is a variable, and "... is a human" and "... is mortal" are predicates. This distinguishes it from propositional logic, which does not use quantifiers or relations; in this sense, propositional logic is the foundation of first-order logic. A theory about a topic, such as set theory, a theory for groups (A. Tarski, Undecidable Theories, 1953, p. 77, Studies in Logic and the Foundation of Mathematics, North-Holland), or a formal theory of ...