
Log-structured File System

A log-structured file system is a file system in which data and metadata are written sequentially to a circular buffer, called a log. The design was first proposed in 1988 by John K. Ousterhout and Fred Douglis and first implemented in 1992 by Ousterhout and Mendel Rosenblum for the Unix-like Sprite distributed operating system.

Rationale

Conventional file systems lay out files with great care for spatial locality and make in-place changes to their data structures in order to perform well on optical and magnetic disks, which tend to seek relatively slowly. The design of log-structured file systems is based on the hypothesis that this will no longer be effective: ever-increasing memory sizes on modern computers would make I/O write-heavy, since reads would almost always be satisfied from the memory cache. A log-structured file system therefore treats its storage as a circular log and writes sequentially to the head of the log. This has several important side effe ...
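To make "writes sequentially to the head of the log" concrete, here is a minimal in-memory sketch in Python. It is a toy and not how Sprite LFS or any other real implementation is structured: the class name `ToyLogFS`, the `block_map` dictionary (standing in loosely for an inode map), and the choice to simply refuse a write when live data sits at the head (where a real log-structured file system would run a cleaner) are all assumptions made for illustration.

```python
from typing import Dict, List, Optional, Tuple


class ToyLogFS:
    """Toy log-structured store: every write appends to the head of a circular log."""

    def __init__(self, capacity: int) -> None:
        # Each slot holds (file name, block number, data) or None if never written.
        self.log: List[Optional[Tuple[str, int, bytes]]] = [None] * capacity
        self.head = 0
        # Hypothetical block map: (file name, block number) -> slot of the newest copy.
        self.block_map: Dict[Tuple[str, int], int] = {}

    def _is_live(self, slot: int) -> bool:
        # A slot is live only if the block map still points at it.
        entry = self.log[slot]
        if entry is None:
            return False
        name, blkno, _ = entry
        return self.block_map.get((name, blkno)) == slot

    def write(self, name: str, blkno: int, data: bytes) -> int:
        if self._is_live(self.head):
            # A real LFS would clean (compact) segments here; the toy gives up.
            raise RuntimeError("log full: live data at head, cleaning required")
        slot = self.head
        self.log[slot] = (name, blkno, data)
        self.block_map[(name, blkno)] = slot  # any older copy becomes dead
        self.head = (self.head + 1) % len(self.log)  # wrap around: circular log
        return slot

    def read(self, name: str, blkno: int) -> bytes:
        slot = self.block_map[(name, blkno)]
        entry = self.log[slot]
        assert entry is not None
        return entry[2]

    def live_blocks(self) -> int:
        return sum(self._is_live(i) for i in range(len(self.log)))
```

Overwriting a block never modifies the old slot in place; it appends a new copy and redirects the map, so the older copy becomes dead space that a cleaner would later reclaim.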

Versioning File System

A versioning file system is any computer file system that allows a computer file to exist in several versions at the same time. Thus it is a form of revision control. Most common versioning file systems keep a number of old copies of the file. Some limit the number of changes per minute or per hour to avoid storing large numbers of trivial changes. Others instead take periodic snapshots whose contents can be accessed using methods similar to those used for normal file access.

Similar technologies

Backup

A versioning file system is similar to a periodic backup, with several key differences:

* Backups are normally triggered on a timed basis, while versioning occurs when the file changes.
* Backups are usually system-wide or partition-wide, while versioning occurs independently on a file-by-file basis.
* Backups are normally written to separate media, while versioning file systems write to the same hard drive (and normally the same folder, directory, or local partition).

In comp ...
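The rate-limiting idea described above can be sketched in a few lines of Python. This is a hypothetical in-memory model of a single file, not the behavior of any particular versioning file system: the `VersionedFile` class, the `max_versions` retention limit, and the `min_interval` window (at most one new version per window, with later edits inside the window folded into the latest version) are assumptions, and timestamps are passed in explicitly to keep the example deterministic.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class VersionedFile:
    max_versions: int = 5        # hypothetical retention limit
    min_interval: float = 60.0   # hypothetical: at most one new version per minute
    versions: List[Tuple[float, bytes]] = field(default_factory=list)

    def write(self, data: bytes, now: float) -> None:
        if self.versions and now - self.versions[-1][0] < self.min_interval:
            # Too soon after the last saved version: replace its contents but keep
            # its timestamp, so trivial edits do not pile up as separate versions.
            self.versions[-1] = (self.versions[-1][0], data)
        else:
            self.versions.append((now, data))
            if len(self.versions) > self.max_versions:
                self.versions.pop(0)  # discard the oldest copy

    def read(self, version: int = -1) -> bytes:
        # -1 is the current contents; lower indexes are older versions.
        return self.versions[version][1]
```

For example, with `min_interval=60.0`, edits at t=0 and t=30 produce one stored version, while an edit at t=61 starts a new one.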

Computer File Systems

A computer is a machine that can be programmed to automatically carry out sequences of arithmetic or logical operations (computation). Modern digital electronic computers can perform generic sets of operations known as programs, which enable computers to perform a wide range of tasks. The term computer system may refer to a nominally complete computer that includes the hardware, operating system, software, and peripheral equipment needed and used for full operation; or to a group of computers that are linked and function together, such as a computer network or computer cluster. A broad range of industrial and consumer products use computers as control systems, including simple special-purpose devices like microwave ovens and remote controls, and factory devices like industrial robots. Computers are at the core of general-purpose devices such as personal computers and mobile devices such as smartphones. Computers power the Internet, which links billions of computer ...

List of Log-structured File Systems

This is an incomplete list of log-structured file system implementations.

* James T. Brady, while at IBM's Poughkeepsie Lab, conceived a log-structured paging file system in 1979, which was implemented in MVS SP2 in 1980. (1981 IBM Corporate Technical Recognition Event Book, Outstanding Innovation Award, "Virtual Storage Disk Paging")
* John K. Ousterhout and Mendel Rosenblum implemented the first log-structured file system for the Sprite operating system in 1992. (Rosenblum, Mendel and Ousterhout, John K. (June 1990). "The LFS Storage Manager". Proceedings of the 1990 Summer Usenix, pp. 315-324. Rosenblum, Mendel and Ousterhout, John K. (February 1992). ACM Transactions on Computer Systems, Vol. 10, Issue 1, pp. 26-52.)
* BSD-LFS, an implementation by Margo Seltzer, was added to 4.4BSD and was later ported to 386BSD. It lacked support for snapshots. It was removed from FreeBSD and OpenBSD, but still lives on in NetBSD.
* Plan 9's Fossil file system is also log-structured and supp ...

Comparison of File Systems

The following tables compare general and technical information for a number of file systems.

Metadata

All widely used file systems record a last-modified time stamp (also known as "mtime"); it is not included in the table. Individual file systems may record additional special types of date and time stamps. For example, the ISO 9660 specification includes a "File Expiration Date and Time" and a "File Effective Date and Time".

Block capabilities

Note that in addition to the table below, block capabilities can be implemented below the file system layer in Linux (LVM, cryptsetup) or Windows (Volume Shadow Copy Service, SECURITY), etc.

Resize capabilities

"Online" and "offline" are synonymous with "mounted" and "not mounted".

Limits

While storage devices usually have their size ex ...

Fragmentation (Computer)

In computer storage, fragmentation is a phenomenon in which storage space, such as computer memory or a hard drive, is used inefficiently because data is split into smaller pieces, reducing capacity or performance and often both. The exact consequences of fragmentation depend on the specific system of storage allocation in use and the particular form of fragmentation. In many cases, fragmentation leads to storage space being "wasted", and programs will tend to run inefficiently due to the shortage of memory.

Basic principle

In main memory fragmentation, when a computer program requests blocks of memory from the computer system, the blocks are allocated in chunks. When the program is finished with a chunk, it can free it back to the system, making it available to be allocated again later to another or the same program. The size of a chunk and the amount of time it is held by a program vary. During its lifespan, a computer program can requ ...
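A toy first-fit allocator in Python shows how freeing chunks can leave enough total free space but no single contiguous hole large enough for a new request (external fragmentation). The `FirstFitArena` class and its arena measured in abstract units are hypothetical illustrations; real memory allocators and file systems use more elaborate placement policies.

```python
from typing import Dict, List, Optional, Tuple


class FirstFitArena:
    """Toy first-fit allocator over `size` units, used to show external fragmentation."""

    def __init__(self, size: int) -> None:
        self.size = size
        self.allocated: Dict[int, int] = {}  # start offset -> chunk length

    def free_holes(self) -> List[Tuple[int, int]]:
        # Walk allocated chunks in address order and collect the gaps between them.
        holes: List[Tuple[int, int]] = []
        cursor = 0
        for start in sorted(self.allocated):
            if start > cursor:
                holes.append((cursor, start - cursor))
            cursor = start + self.allocated[start]
        if cursor < self.size:
            holes.append((cursor, self.size - cursor))
        return holes

    def alloc(self, length: int) -> Optional[int]:
        # First fit: take the lowest-addressed hole that is big enough.
        for start, hole_len in self.free_holes():
            if hole_len >= length:
                self.allocated[start] = length
                return start
        return None  # no single hole is large enough

    def free(self, start: int) -> None:
        del self.allocated[start]
```

Allocating four 2-unit chunks in an 8-unit arena and then freeing the first and third leaves 4 free units in total, yet a 4-unit request fails because the free space is split into two separate 2-unit holes.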

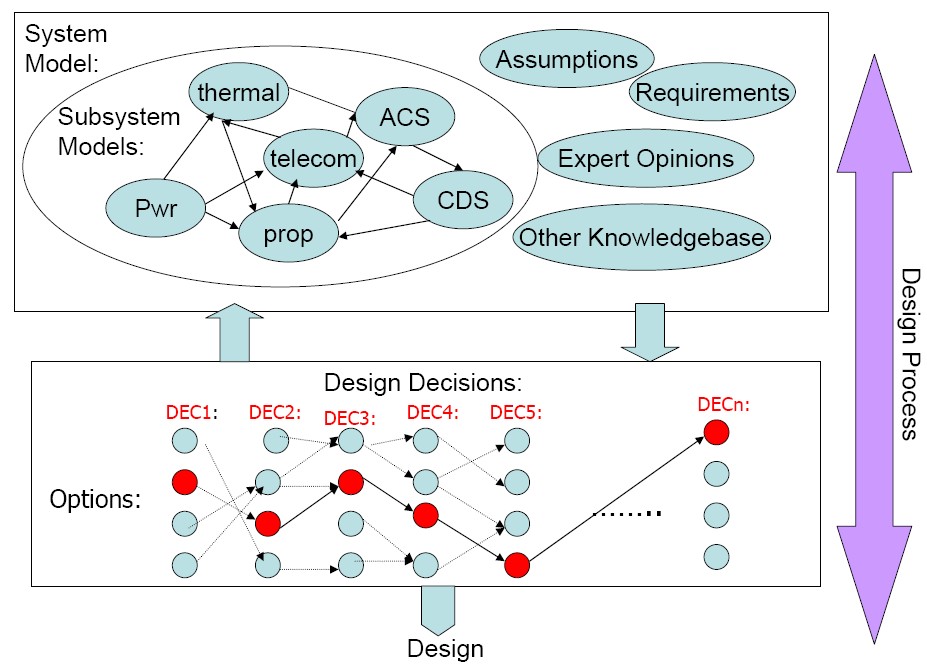

Design Rationale

A design rationale is an explicit documentation of the reasons behind decisions made when designing a system or artifact. As initially developed by W.R. Kunz and Horst Rittel, design rationale seeks to provide argumentation-based structure to the political, collaborative process of addressing wicked problems.

Overview

A design rationale is the explicit listing of decisions made during a design process, and the reasons why those decisions were made. (Jarczyk, Alex P.; Löffler, Peter; Shipman III, Frank M. (1992). "Design Rationale for Software Engineering: A Survey". 25th Hawaii International Conference on System Sciences, 2, pp. 577-586.) Its primary goal is to support designers by providing a means to record and communicate the argumentation and reasoning behind the design process. (Horner, J.; Atwood, M.E. (2006). "Effective Design Rationale: Understanding the Barriers". In Dutoit, A.H.; McCall, R.; Mistrík, I. et al., Rationale Management in Software Engineering, Spr ...)

Write Amplification

Write amplification (WA) is an undesirable phenomenon associated with flash memory and solid-state drives (SSDs) in which the actual amount of information physically written to the storage media is a multiple of the logical amount intended to be written. Because flash memory must be erased before it can be rewritten, and the erase operation has much coarser granularity than the write operation, performing these operations results in moving (or rewriting) user data and metadata more than once. Thus, rewriting some data requires an already-used portion of flash to be read, updated, and written to a new location, together with initially erasing the new location if it was previously used. Due to the way flash works, much larger portions of flash must be erased and rewritten than are actually required by the amount of new data. This multiplying effect increases the number of writes required over the life of the SSD, which shortens the time it can operate reliably. ...
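Write amplification is usually expressed as the ratio of data written to the flash memory to data written by the host. The Python sketch below computes that ratio and pairs it with a deliberately naive, hypothetical worst-case model in which every small update rewrites whole erase blocks. Real SSD controllers remap pages and usually do far better, so the numbers illustrate the mechanism, not a typical device; the 256 KiB erase-block size is likewise an assumption.

```python
def write_amplification(flash_bytes_written: int, host_bytes_written: int) -> float:
    """WA = bytes physically written to flash / bytes the host asked to write."""
    if host_bytes_written <= 0:
        raise ValueError("host_bytes_written must be positive")
    return flash_bytes_written / host_bytes_written


def naive_rmw_flash_bytes(update_bytes: int, erase_block_bytes: int) -> int:
    """Hypothetical worst case: each erase block touched by an update (assumed
    aligned to erase-block boundaries) is read, modified, and programmed in full
    to a fresh location."""
    blocks_touched = -(-update_bytes // erase_block_bytes)  # ceiling division
    return blocks_touched * erase_block_bytes


# A 4 KiB host write under the naive model with an assumed 256 KiB erase block.
flash = naive_rmw_flash_bytes(4 * 1024, 256 * 1024)
print(flash, write_amplification(flash, 4 * 1024))
```

Here the 4 KiB update causes 262144 bytes (256 KiB) of flash writes, a write amplification of 64.0.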

Garbage Collection (Computer Science)

In computer science, garbage collection (GC) is a form of automatic memory management. The garbage collector attempts to reclaim memory that was allocated by the program but is no longer referenced; such memory is called garbage. Garbage collection was invented by American computer scientist John McCarthy around 1959 to simplify manual memory management in Lisp. Garbage collection relieves the programmer from doing manual memory management, where the programmer specifies which objects to deallocate and return to the memory system and when to do so. Other, similar techniques include stack allocation, region inference, and memory ownership, and combinations thereof. Garbage collection may take a significant proportion of a program's total processing time, and affect performance as a result. Resources other than memory, such a ...
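One classic way to find garbage is tracing mark-and-sweep: mark every object reachable from a set of roots, then reclaim everything left unmarked. The Python sketch below models the heap as a dictionary from hypothetical object ids to the ids they reference. It is only one GC strategy among several (reference counting, copying, and generational collectors are others) and ignores what a real collector must handle, such as locating roots on the stack.

```python
from typing import Dict, List, Set


def mark_and_sweep(heap: Dict[str, List[str]], roots: List[str]) -> Set[str]:
    """Remove unreachable objects from `heap` and return their ids.

    `heap` maps each object id to the ids of the objects it references.
    """
    marked: Set[str] = set()
    stack = list(roots)
    # Mark phase: iterative depth-first traversal from the roots.
    while stack:
        obj = stack.pop()
        if obj in marked or obj not in heap:
            continue
        marked.add(obj)
        stack.extend(heap[obj])
    # Sweep phase: everything not marked is garbage.
    garbage = set(heap) - marked
    for obj in garbage:
        del heap[obj]
    return garbage
```

Because reachability is traced from the roots, the cycle `c -> d -> c` in a heap like `{"a": ["b"], "b": [], "c": ["d"], "d": ["c"]}` is collected when only `"a"` is a root, even though each object in the cycle is still referenced by the other.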

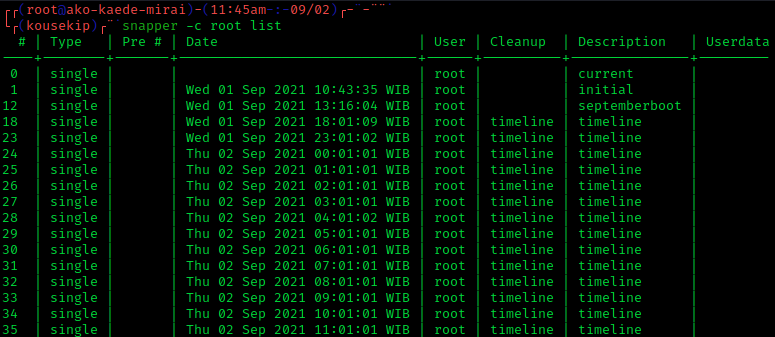

Snapshot (Computer Storage)

In computer systems, a snapshot is the state of a system at a particular point in time. The term was coined as an analogy to that in photography.

Rationale

A full backup of a large data set may take a long time to complete. On multi-tasking or multi-user systems, there may be writes to that data while it is being backed up. This prevents the backup from being atomic and introduces a version skew that may result in data corruption. For example, if a user moves a file into a directory that has already been backed up, then that file would be completely missing on the backup media, since the backup operation had already taken place before the addition of the file. Version skew may also cause corruption with files that change their size or contents underfoot while being read. One approach to safely backin ...
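One way a snapshot can avoid version skew is to keep old versions of changed data, so that a backup reads a frozen point-in-time view while writes continue. The Python sketch below models this with a hypothetical `VersionedVolume` in which a snapshot is just an epoch number; it illustrates the idea only and is not the mechanism of any specific file system or volume manager.

```python
from typing import Dict, Iterator, List, Optional, Tuple


class VersionedVolume:
    """Toy multi-version store: a snapshot is an epoch number, and reading through
    it returns each key's newest value written at or before that epoch."""

    def __init__(self) -> None:
        self.epoch = 0
        self.history: Dict[str, List[Tuple[int, Optional[bytes]]]] = {}

    def write(self, key: str, value: Optional[bytes]) -> None:
        # value=None records a deletion (tombstone); older versions are kept.
        self.history.setdefault(key, []).append((self.epoch, value))

    def snapshot(self) -> int:
        snap = self.epoch
        self.epoch += 1  # later writes get a newer epoch than this snapshot
        return snap

    def read_at(self, snap: int, key: str) -> Optional[bytes]:
        # Scan newest-first for the latest version visible to the snapshot.
        for epoch, value in reversed(self.history.get(key, [])):
            if epoch <= snap:
                return value
        return None

    def items_at(self, snap: int) -> Iterator[Tuple[str, bytes]]:
        # A backup would iterate this frozen view instead of the live data.
        for key in sorted(self.history):
            value = self.read_at(snap, key)
            if value is not None:
                yield key, value
```

Replaying the move example from the text: if `dir2/b` is moved into the already-backed-up `dir1` after the snapshot is taken, a backup that reads `items_at(snap)` still sees the file exactly once, at its old path, instead of missing it.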

Circular Buffer

In computer science, a circular buffer, circular queue, cyclic buffer or ring buffer is a data structure that uses a single, fixed-size buffer as if it were connected end-to-end. This structure lends itself easily to buffering data streams. There were early circular buffer implementations in hardware.

Overview

A circular buffer first starts out empty and has a set length. Consider, for example, a 7-element buffer. Assume that 1 is written in the center of the buffer (the exact starting location is not important in a circular buffer). Then assume that two more elements, 2 and 3, are added to the buffer; they are placed after 1. If two elements are removed, the two oldest values inside the circular buffer are removed. Circular buffers use FIFO (first in, first out) logic. In the example, 1 and 2 were the first to enter the circular buffer, so they are the first to be removed, leaving 3 inside the buffer. If the buffer has 7 e ...
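The 7-element example can be reproduced with a minimal array-backed ring buffer in Python. The `RingBuffer` class and its `push`/`pop` method names are illustrative choices; this version tracks a head index plus an element count and raises an error when full, whereas other designs overwrite the oldest element instead.

```python
from typing import Generic, List, Optional, TypeVar

T = TypeVar("T")


class RingBuffer(Generic[T]):
    """Fixed-size FIFO queue stored in one array whose end wraps to its start."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._buf: List[Optional[T]] = [None] * capacity
        self._head = 0   # index of the oldest element
        self._count = 0  # number of stored elements

    def __len__(self) -> int:
        return self._count

    def push(self, item: T) -> None:
        if self._count == len(self._buf):
            raise OverflowError("buffer full")
        # The next free slot follows the newest element, wrapping past the end.
        tail = (self._head + self._count) % len(self._buf)
        self._buf[tail] = item
        self._count += 1

    def pop(self) -> T:
        if self._count == 0:
            raise IndexError("pop from empty buffer")
        item = self._buf[self._head]
        self._buf[self._head] = None  # drop the reference
        self._head = (self._head + 1) % len(self._buf)
        self._count -= 1
        return item  # type: ignore[return-value]
```

Pushing 1, 2 and 3 into `RingBuffer(7)` and popping twice returns 1 and then 2, leaving 3 in the buffer, as in the example. Keeping a count rather than comparing head and tail indexes avoids the usual ambiguity between a full and an empty buffer.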