Latent Dirichlet Allocation

In natural language processing, latent Dirichlet allocation (LDA) is a Bayesian network (and, therefore, a generative statistical model) for modeling automatically extracted topics in textual corpora. The LDA is an example of a Bayesian topic model. In this, observations (e.g., words) are collected into documents, and each word's presence is attributable to one of the document's topics. Each document will contain a small number of topics. History In the context of population genetics, LDA was proposed by J. K. Pritchard, M. Stephens and P. Donnelly in 2000. LDA was applied in machine learning by David Blei, Andrew Ng and Michael I. Jordan in 2003. Overview Population genetics In population genetics, the model is used to detect the presence of structured genetic variation in a group of individuals. The model assumes that alleles carried by individuals under study have origin in various extant or past populations. The model and various inference algorithms allow sci ...
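
As a rough illustration of the generative view described above, the sketch below draws one document: a per-document topic mixture is sampled first, and each word is then attributed to a single topic drawn from that mixture. The function names, the symmetric Dirichlet parameter `alpha`, and the toy `topic_word` table are assumptions made for this example, not part of the source.

```python
import random


def sample_dirichlet(alpha, k, rng):
    """Draw from a symmetric Dirichlet(alpha) over k outcomes using normalized Gamma draws."""
    draws = [rng.gammavariate(alpha, 1.0) for _ in range(k)]
    total = sum(draws)
    return [d / total for d in draws]


def generate_document(topic_word, alpha, length, rng):
    """Generate one document from fixed per-topic word distributions (hypothetical helper).

    topic_word[z][w] is the probability of word index w under topic z.
    Returns the topic mixture, the topic chosen for each word, and the word indices.
    """
    num_topics = len(topic_word)
    vocab_size = len(topic_word[0])
    theta = sample_dirichlet(alpha, num_topics, rng)  # per-document topic mixture
    topics, words = [], []
    for _ in range(length):
        z = rng.choices(range(num_topics), weights=theta)[0]          # topic for this word
        w = rng.choices(range(vocab_size), weights=topic_word[z])[0]  # word given the topic
        topics.append(z)
        words.append(w)
    return theta, topics, words
```

With a small `alpha`, most of the mass in `theta` tends to fall on a few topics, which matches the statement that each document contains a small number of topics.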

Natural Language Processing

Natural language processing (NLP) is a subfield of computer science and especially artificial intelligence. It is primarily concerned with providing computers with the ability to process data encoded in natural language and is thus closely related to information retrieval, knowledge representation and computational linguistics, a subfield of linguistics. Major tasks in natural language processing are speech recognition, text classification, natural-language understanding, and natural language generation. History Natural language processing has its roots in the 1950s. Already in 1950, Alan Turing published an article titled "Computing Machinery and Intelligence" which proposed what is now called the Turing test as a criterion of intelligence, though at the time that was not articulated as a problem separate from artificial intelligence. The proposed test includes a task that involves the automated interpretation and generation of natural language ...

Cognitive Therapy And Research

Cognition is the "mental action or process of acquiring knowledge and understanding through thought, experience, and the senses". It encompasses all aspects of intellectual functions and processes such as: perception, attention, thought, imagination, intelligence, the formation of knowledge, memory and working memory, judgment and evaluation, reasoning and computation, problem-solving and decision-making, comprehension and production of language. Cognitive processes use existing knowledge to discover new knowledge. Cognitive processes are analyzed from very different perspectives within different contexts, notably in the fields of linguistics, musicology, anesthesia, neuroscience, psychiatry, psychology, education, philosophy, anthropology, biology, systemics, logic, and computer science. These and other approaches to the analysis of cognition (such as embodied cognition) are synthesized in the developing field of cognitive science, a progressively autonomous academic discipli ...

Observable Variable

In physics, an observable is a physical property or physical quantity that can be measured. In classical mechanics, an observable is a real-valued "function" on the set of all possible system states, e.g., position and momentum. In quantum mechanics, an observable is an operator, or gauge, where the property of the quantum state can be determined by some sequence of operations. For example, these operations might involve submitting the system to various electromagnetic fields and eventually reading a value. Physically meaningful observables must also satisfy transformation laws that relate observations performed by different observers in different frames of reference. These transformation laws are automorphisms of the state space, that is bijective transformations that preserve certain mathematical properties of the space in question. Quantum mechanics In quantum mechanics, observables manifest as self-adjoint operators on a separable complex Hilbert space repr ...

Smoothed LDA

In statistics and image processing, to smooth a data set is to create an approximating function that attempts to capture important patterns in the data, while leaving out noise or other fine-scale structures/rapid phenomena. In smoothing, the data points of a signal are modified so that individual points higher than the adjacent points (presumably because of noise) are reduced, and points that are lower than the adjacent points are increased, leading to a smoother signal. Smoothing may be used in two important ways that can aid in data analysis: (1) by being able to extract more information from the data as long as the assumption of smoothing is reasonable, and (2) by being able to provide analyses that are both flexible and robust. Many different algorithms are used in smoothing. Compared to curve fitting Smoothing may be distinguished from the related and partially overlapping concept of curve fitting in the following ways: * curve fitting often involves the use of an explicit function ...

Probabilistic Graphical Model

A graphical model or probabilistic graphical model (PGM) or structured probabilistic model is a probabilistic model for which a graph expresses the conditional dependence structure between random variables. Graphical models are commonly used in probability theory, statistics (particularly Bayesian statistics), and machine learning. Types of graphical models Generally, probabilistic graphical models use a graph-based representation as the foundation for encoding a distribution over a multi-dimensional space and a graph that is a compact or factorized representation of a set of independences that hold in the specific distribution. Two branches of graphical representations of distributions are commonly used, namely, Bayesian networks and Markov random fields. Both families encompass the properties of factorization and independences, but they differ in the set of independences they can encode and the factorization of the distribution that ...

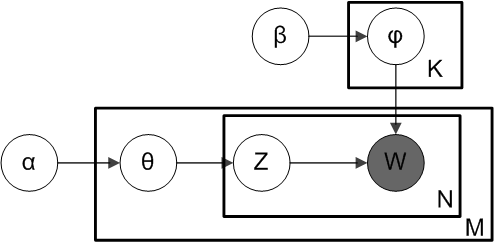

Plate Notation

In Bayesian inference, plate notation is a method of representing variables that repeat in a graphical model. Instead of drawing each repeated variable individually, a plate or rectangle is used to group variables into a subgraph that repeat together, and a number is drawn on the plate to represent the number of repetitions of the subgraph in the plate. The assumptions are that the subgraph is duplicated that many times, the variables in the subgraph are indexed by the repetition number, and any links that cross a plate boundary are replicated once for each subgraph repetition. Example In this example, we consider latent Dirichlet allocation, a Bayesian network that models how documents in a corpus are topically related. There are two variables not in any plate; ''α'' is the parameter of the uniform Dirichlet prior on the per-document topic distributions, and ''β'' is the parameter of the uniform Dirichlet prior on the per-topic word distribution. The ...

MapReduce

MapReduce is a programming model and an associated implementation for processing and generating big data sets with a parallel and distributed algorithm on a cluster. A MapReduce program is composed of a ''map'' procedure, which performs filtering and sorting (such as sorting students by first name into queues, one queue for each name), and a ''reduce'' method, which performs a summary operation (such as counting the number of students in each queue, yielding name frequencies). The "MapReduce System" (also called "infrastructure" or "framework") orchestrates the processing by marshalling the distributed servers, running the various tasks in parallel, managing all communications and data transfers between the various parts of the system, and providing for redundancy and fault tolerance. The model is a specialization of the ''split-apply-combine'' strategy for data analysis. It is inspired by the map and reduce functions commonly used in functional programming, "Our abstracti ...
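
The student-name example above can be sketched in a single process as follows. This is only a toy illustration of the map, group, and reduce steps; it does not model distribution, parallelism, or fault tolerance, and the function names (`map_phase`, `shuffle`, `reduce_phase`, `name_frequencies`) are made up for this example rather than taken from any MapReduce framework.

```python
from collections import defaultdict


def map_phase(first_names):
    """Map step: emit a (key, 1) pair for every student's first name."""
    for name in first_names:
        yield name, 1


def shuffle(pairs):
    """Group emitted values by key: one queue per first name."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce step: summarize each queue by counting its entries."""
    return {key: sum(values) for key, values in groups.items()}


def name_frequencies(first_names):
    """Run the three steps in sequence and return name -> frequency."""
    return reduce_phase(shuffle(map_phase(first_names)))
```

Calling `name_frequencies(["Ana", "Bo", "Ana"])` returns `{"Ana": 2, "Bo": 1}`.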

K-means Clustering

''k''-means clustering is a method of vector quantization, originally from signal processing, that aims to partition ''n'' observations into ''k'' clusters in which each observation belongs to the cluster with the nearest mean (cluster centers or cluster centroid), serving as a prototype of the cluster. This results in a partitioning of the data space into Voronoi cells. ''k''-means clustering minimizes within-cluster variances (squared Euclidean distances), but not regular Euclidean distances, which would be the more difficult Weber problem: the mean optimizes squared errors, whereas only the geometric median minimizes Euclidean distances. For instance, better Euclidean solutions can be found using ''k''-medians and ''k''-medoids. The problem is computationally difficult (NP-hard); however, efficient heuristic algorithms converge quickly to a local optimum. These are usually similar to the ex ...
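
One common heuristic of the kind mentioned above is Lloyd's algorithm, which alternates between assigning each observation to the nearest mean and recomputing the means. The sketch below is a minimal pure-Python version under stated assumptions: points are tuples of numbers, initial centroids are picked at random from the data, and the names `kmeans`, `squared_distance`, and `mean` are chosen for this example. It converges to a local optimum, not necessarily the global one.

```python
import random


def squared_distance(a, b):
    """Squared Euclidean distance between two points given as tuples."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def mean(points):
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def kmeans(points, k, iterations=100, seed=0):
    """Lloyd's algorithm: returns (centroids, assignment) at a local optimum."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # assumption: initialize from k random data points
    assignment = None
    for _ in range(iterations):
        # Assignment step: each point goes to the cluster with the nearest mean.
        new_assignment = [
            min(range(k), key=lambda j: squared_distance(p, centroids[j]))
            for p in points
        ]
        if new_assignment == assignment:
            break  # no point changed cluster, so the means would not move either
        assignment = new_assignment
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, a in zip(points, assignment) if a == j]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[j] = mean(members)
    return centroids, assignment
```

Because the update step uses the mean, the procedure reduces the within-cluster sum of squared distances, consistent with the objective described in the text.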

Probabilistic Latent Semantic Analysis

Probabilistic latent semantic analysis (PLSA), also known as probabilistic latent semantic indexing (PLSI, especially in information retrieval circles) is a statistical technique for the analysis of two-mode and co-occurrence data. In effect, one can derive a low-dimensional representation of the observed variables in terms of their affinity to certain hidden variables, just as in latent semantic analysis, from which PLSA evolved. Compared to standard latent semantic analysis which stems from linear algebra and downsizes the occurrence tables (usually via a singular value decomposition), probabilistic latent semantic analysis is based on a mixture decomposition derived from a latent class model. Model Considering observations in the form of co-occurrences (w,d) of words and documents, PLSA models the probability of each co-occurrence as a mixture of conditionally independent multinomial distributions: P(w,d) = \sum_c P(c) P(d|c) P(w|c) = P(d) \sum_c P(c|d) P(w|c) with ...

Expectation Maximization

Expectation, or expectations, as well as expectancy or expectancies, may refer to: Science * Expectancy effect, including observer-expectancy effects and subject-expectancy effects such as the placebo effect * Expectancy theory of motivation * Expectation (philosophy) * Expected value, in mathematical probability theory * Expectation value (quantum mechanics) * Expectation–maximization algorithm, in statistics Music * ''Expectation'' (album), a 2013 album by Girl's Day * ''Expectation'', a 2006 album by Matt Harding * ''Expectations'' (Keith Jarrett album), 1971 * ''Expectations'' (Dance Exponents album), 1985 * ''Expectations'' (Hayley Kiyoko album), 2018, or "Expectations/Overture", a song from the album * ''Expectations'' (Bebe Rexha album), 2018 * ''Expectations'' (Katie Pruitt album), 2020, or the title song * "Expectation" (waltz), a 1980 waltz composed by Ilya Herold Lavrentievich Kittler * "Expectation" (song), a 2010 song by Tame Impala * "Expectations" (song ...

Bayesian Estimator

In estimation theory and decision theory, a Bayes estimator or a Bayes action is an estimator or decision rule that minimizes the posterior expected value of a loss function (i.e., the posterior expected loss). Equivalently, it maximizes the posterior expectation of a utility function. An alternative way of formulating an estimator within Bayesian statistics is maximum a posteriori estimation. Definition Suppose an unknown parameter \theta is known to have a prior distribution \pi. Let \widehat{\theta} = \widehat{\theta}(x) be an estimator of \theta (based on some measurements ''x''), and let L(\theta,\widehat{\theta}) be a loss function, such as squared error. The Bayes risk of \widehat{\theta} is defined as E_\pi(L(\theta, \widehat{\theta})), where the expectation is taken over the probability distribution of \theta: this defines the risk function as a function of \widehat{\theta}. An estimator \widehat{\theta} is said to be a ''Bayes estimator'' if it minimizes the Bayes risk among all estimators. Equivalently, the estimator w ...
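
For the squared-error loss mentioned above, the estimate that minimizes the posterior expected loss is the posterior mean. The sketch below checks this numerically for a small discrete posterior; the posterior values and probabilities are invented for illustration, and the function names are assumptions of this example.

```python
def posterior_expected_loss(estimate, values, probs):
    """Posterior expected squared-error loss of a candidate estimate.

    values are the possible parameter values theta; probs are their
    posterior probabilities (assumed to sum to 1).
    """
    return sum(p * (theta - estimate) ** 2 for theta, p in zip(values, probs))


def bayes_estimate_squared_error(values, probs):
    """Bayes estimate under squared-error loss: the posterior mean."""
    return sum(p * theta for theta, p in zip(values, probs))


# Hypothetical discrete posterior over theta in {0, 1, 2}.
values = [0.0, 1.0, 2.0]
probs = [0.2, 0.5, 0.3]
estimate = bayes_estimate_squared_error(values, probs)
```

Evaluating `posterior_expected_loss` at `estimate` and at any other candidate shows the posterior mean giving the smaller value. Other loss functions generally lead to different Bayes estimates.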