Journal Of Big Data

''Journal of Big Data'' is a scientific journal that publishes open-access original research on big data. Published by SpringerOpen since 2014, it examines data capture and storage; search, sharing, and analytics; big data technologies; data visualization; architectures for massively parallel processing; data mining tools and techniques; machine learning algorithms for big data; cloud computing platforms; distributed file systems and databases; and scalable storage systems. All articles are included in:
* ESCI
* Scopus
* DBLP
* DOAJ
* ProQuest
* EBSCO Discover Service

Big Data

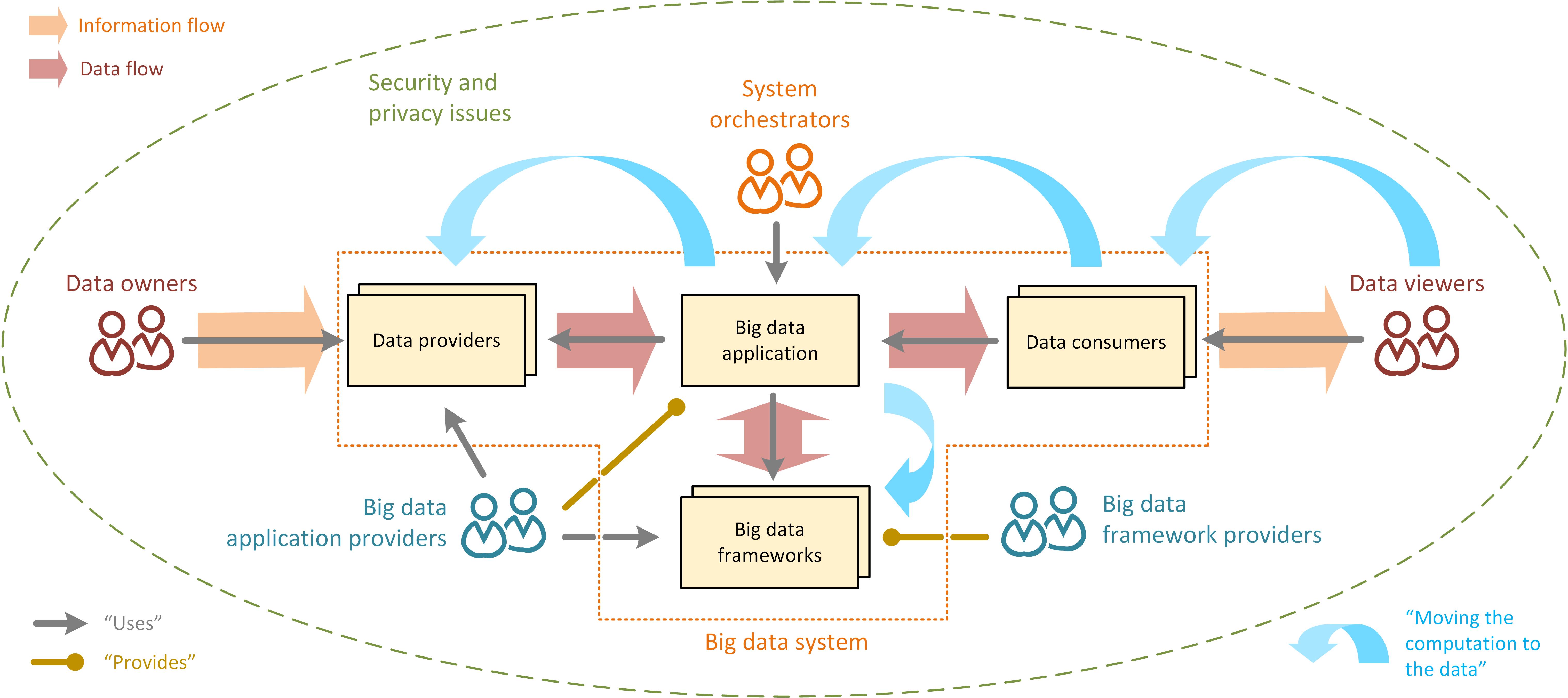

Big data primarily refers to data sets that are too large or complex to be dealt with by traditional data-processing application software. Data with many entries (rows) offer greater statistical power, while data with higher complexity (more attributes or columns) may lead to a higher false discovery rate. Big data analysis challenges include capturing data, data storage, data analysis, search, sharing, transfer, visualization, querying, updating, information privacy, and data source. Big data was originally associated with three key concepts: ''volume'', ''variety'', and ''velocity''. The analysis of big data presents challenges in sampling, which previously allowed for only observations and sampling. Thus a fourth concept, ''veracity'', refers to the quality or insightfulness of the data. Without sufficient investm ...
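The link between more attributes and more false discoveries can be illustrated with the arithmetic of multiple comparisons. The following is a minimal sketch, not a definition of the false discovery rate itself: it assumes every attribute is tested independently at the same significance level and that none has a real effect. The function names and the default level of 0.05 are illustrative assumptions.

```python
def expected_false_positives(num_attributes: int, alpha: float = 0.05) -> float:
    """Expected number of attributes flagged purely by chance.

    Assumes independent tests and no true effects (hypothetical setup).
    """
    return num_attributes * alpha


def prob_at_least_one_false_positive(num_attributes: int, alpha: float = 0.05) -> float:
    """Chance that at least one of the independent tests is a false positive."""
    return 1.0 - (1.0 - alpha) ** num_attributes


if __name__ == "__main__":
    # The chance of a spurious finding grows quickly with the column count.
    for columns in (1, 10, 100, 1000):
        print(columns,
              expected_false_positives(columns),
              round(prob_at_least_one_false_positive(columns), 4))
```

Under these assumptions, adding columns without adding rows raises the number of chance findings, which is why wide data sets usually call for a correction for multiple testing.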

Scientific Journal

In academic publishing, a scientific journal is a periodical publication designed to further the progress of science by disseminating new research findings to the scientific community. These journals serve as a platform for researchers, scholars, and scientists to share their latest discoveries, insights, and methodologies across a multitude of scientific disciplines. Unlike professional or trade magazines, the articles are mostly written by scientists rather than staff writers employed by the journal. Scientific journals are characterized by their rigorous peer review process, which aims to ensure the validity, reliability, and quality of the published content. In peer review, submitted articles are reviewed by active scientists (peers) to ensure scientific rigor. With origins dating back to the 17th century, the publication of scientific journals has evolved significantly, advancing scientific knowledge, fostering academic discourse, and facilitating collaboration within ...

Data Capture

Automatic identification and data capture (AIDC) refers to the methods of automatically identifying objects, collecting data about them, and entering them directly into computer systems, without human involvement. Technologies typically considered part of AIDC include QR codes, bar codes, radio frequency identification (RFID), biometrics (such as iris and facial recognition systems), magnetic stripes, optical character recognition (OCR), smart cards, and voice recognition. AIDC is also commonly referred to as "Automatic Identification", "Auto-ID" and "Automatic Data Capture". AIDC is the process or means of obtaining external data, particularly through the analysis of images, sounds, or videos. To capture data, a transducer is employed, which converts the actual image or sound into a digital file. The file is then stored, and at a later time it can be analyzed by a computer or compared with other files in a database to verify identity or to provide authorization to enter a ...

Parallel Computing

Parallel computing is a type of computation in which many calculations or processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: bit-level, instruction-level, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling. (S.V. Adve ''et al.'', November 2008, "Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF), Parallel@Illinois, University of Illinois at Urbana-Champaign: "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance inc ...)
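The idea of dividing a large problem into smaller ones that are solved at the same time can be sketched as a simple data-parallel computation. This is an illustrative example only: the helper names `chunked` and `parallel_sum_of_squares` are assumptions, and a thread pool is used for portability. In CPython, threads share one interpreter lock, so a CPU-bound workload would typically use `ProcessPoolExecutor` instead to gain real speedup.

```python
from concurrent.futures import ThreadPoolExecutor


def chunked(values, num_chunks):
    """Split a list into at most num_chunks contiguous pieces."""
    size = max(1, -(-len(values) // num_chunks))  # ceiling division
    return [values[i:i + size] for i in range(0, len(values), size)]


def sum_of_squares(chunk):
    """The small sub-problem solved independently on each chunk."""
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(values, num_workers=4):
    """Data parallelism: same operation on each chunk, then combine."""
    chunks = chunked(values, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partial_sums = list(pool.map(sum_of_squares, chunks))
    return sum(partial_sums)


if __name__ == "__main__":
    print(parallel_sum_of_squares(list(range(10))))
```

The final `sum` over partial results is the combine step; the decomposition works because the sub-problems do not depend on one another.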

Data Mining

Data mining is the process of extracting and finding patterns in massive data sets involving methods at the intersection of machine learning, statistics, and database systems. Data mining is an interdisciplinary subfield of computer science and statistics with an overall goal of extracting information (with intelligent methods) from a data set and transforming the information into a comprehensible structure for further use. Data mining is the analysis step of the "knowledge discovery in databases" process, or KDD. Aside from the raw analysis step, it also involves database and data management aspects, data pre-processing, model and inference considerations, interestingness metrics, complexity considerations, post-processing of discovered structures, visualization, and online updating. The term "data mining" is a misnomer because the goal is the extraction of patterns and knowledge from large amounts of data, not the extraction (''mining'') of data itself. It also is a buzzwo ...

Machine Learning Algorithms

The following outline is provided as an overview of, and topical guide to, machine learning: Machine learning (ML) is a subfield of artificial intelligence within computer science that evolved from the study of pattern recognition and computational learning theory (http://www.britannica.com/EBchecked/topic/1116194/machine-learning). In 1959, Arthur Samuel defined machine learning as a "field of study that gives computers the ability to learn without being explicitly programmed". ML involves the study and construction of algorithms that can learn from and make predictions on data. These algorithms operate by building a model from a training set of example observations to make data-driven predictions or decisions expressed as outputs, rather than following strictly static program instructions.

How can machine learning be categorized?
* An academic discipline
* A branch of science
** An applied science
*** A subfield of computer science
**** A branch of artificial intelligenc ...
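Building a model from a training set and then using it to make predictions can be shown with one of the simplest learning algorithms, ordinary least-squares fitting of a straight line. This is a minimal sketch chosen for illustration; the function names `fit_line` and `predict` and the sample data are assumptions, not part of any particular library.

```python
def fit_line(xs, ys):
    """Learn a (slope, intercept) model from training observations."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Least-squares estimates derived from the training data alone.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Apply the learned model to an input it has not seen before."""
    slope, intercept = model
    return slope * x + intercept


if __name__ == "__main__":
    # Hypothetical training set that follows y = 2x + 1.
    model = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
    print(model, predict(model, 5))
```

The rule relating input to output is never written into the program; it is recovered from the example observations, which is the sense in which the algorithm learns rather than follows static instructions.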

Cloud Computing

Cloud computing is "a paradigm for enabling network access to a scalable and elastic pool of shareable physical or virtual resources with self-service provisioning and administration on-demand," according to the International Organization for Standardization (ISO).

Essential characteristics

In 2011, the National Institute of Standards and Technology (NIST) identified five "essential characteristics" for cloud systems. Below are the exact definitions according to NIST:
* On-demand self-service: "A consumer can unilaterally provision computing capabilities, such as server time and network storage, as needed automatically without requiring human interaction with each service provider."
* Broad network access: "Capabilities are available over the network and accessed through standard mechanisms that promote use by heterogeneous thin or thick client platforms (e.g., mobile phones, tablets, laptops, and workstations)."
* Resource pooling: "The provider' ...

Distributed File Systems

A clustered file system (CFS) is a file system that is shared by being simultaneously mounted on multiple servers. There are several approaches to clustering, most of which do not employ a clustered file system (only direct attached storage for each node). Clustered file systems can provide features like location-independent addressing and redundancy, which improve reliability or reduce the complexity of the other parts of the cluster. Parallel file systems are a type of clustered file system that spread data across multiple storage nodes, usually for redundancy or performance.

Shared-disk file system

A shared-disk file system uses a storage area network (SAN) to allow multiple computers to gain direct disk access at the block level. Access control and translation from file-level operations that applications use to block-level operations used by the SAN must take place on the client node. The most common type of clustered file system, the shared-disk file system by addi ...
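Spreading data across multiple storage nodes can be pictured as round-robin striping of fixed-size blocks. The sketch below is an in-memory illustration under stated assumptions: the functions `stripe` and `reassemble`, the block size, and the use of plain lists as "nodes" are all hypothetical, and real parallel file systems add metadata, redundancy, and network transport that are omitted here.

```python
def stripe(data: bytes, num_nodes: int, block_size: int = 4):
    """Distribute consecutive blocks of data across nodes in round-robin order."""
    nodes = [[] for _ in range(num_nodes)]
    for index, start in enumerate(range(0, len(data), block_size)):
        nodes[index % num_nodes].append(data[start:start + block_size])
    return nodes


def reassemble(nodes):
    """Read blocks back in the same round-robin order to rebuild the data."""
    blocks = []
    longest = max((len(node) for node in nodes), default=0)
    for row in range(longest):
        for node in nodes:
            if row < len(node):
                blocks.append(node[row])
    return b"".join(blocks)


if __name__ == "__main__":
    payload = b"abcdefghijklmnopqrstuvwxyz"
    placed = stripe(payload, num_nodes=3)
    print(placed)
    print(reassemble(placed) == payload)
```

Because neighbouring blocks live on different nodes, a large sequential read can draw on several nodes at once, which is the performance motivation mentioned above; redundancy would require storing extra copies or parity, which this sketch does not attempt.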

Academic Journals Established In 2014

An academy (Attic Greek: Ἀκαδήμεια; Koine Greek Ἀκαδημία) is an institution of tertiary education. The name traces back to Plato's school of philosophy, founded approximately 386 BC at Akademia, a sanctuary of Athena, the goddess of wisdom and skill, north of Athens, Greece. The Royal Spanish Academy defines academy as a scientific, literary or artistic society established with public authority and as a teaching establishment, public or private, of a professional, artistic, technical or simply practical nature.

Etymology

The word comes from the ''Academy'' in ancient Greece, which derives from the Athenian hero, ''Akademos''. Outside the city walls of Athens, the gymnasium was made famous by Plato as a center of learning. The sacred space, dedicated to the goddess of wisdom, Athena, had formerly been an olive grove, hence the expression "the groves of Academe". In these gardens, the philos ...