
Factorial Moment

In probability theory, the factorial moment is a mathematical quantity defined as the expectation or average of the falling factorial of a random variable. Factorial moments are useful for studying non-negative integer-valued random variables (D. J. Daley and D. Vere-Jones, ''An Introduction to the Theory of Point Processes, Vol. I'', Probability and its Applications, Springer, New York, second edition, 2003), and arise in the use of probability-generating functions to derive the moments of discrete random variables. Factorial moments serve as analytic tools in the mathematical field of combinatorics, which is the study of discrete mathematical structures.

Definition

For a natural number ''r'', the ''r''-th factorial moment of a probability distribution on the real or complex numbers, or, in other words, of a random variable ''X'' with that probability distribution, is

:\operatorname{E}\bigl[(X)_r\bigr] = \operatorname{E}\bigl[X(X-1)(X-2)\cdots(X-r+1)\bigr],

where \operatorname{E} is the expectation (operato ...
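The definition above can be illustrated with a minimal Python sketch. The function names `falling_factorial` and `factorial_moment`, and the choice of a Binomial(4, 0.5) example, are assumptions made for illustration and are not taken from the source.

```python
from math import comb


def falling_factorial(x, r):
    """Return (x)_r = x (x-1) ... (x-r+1); the empty product (r = 0) is 1."""
    result = 1
    for i in range(r):
        result *= x - i
    return result


def factorial_moment(pmf, r):
    """r-th factorial moment E[(X)_r] of a finite pmf given as {value: probability}."""
    return sum(falling_factorial(x, r) * p for x, p in pmf.items())


# Illustrative distribution (an assumption for this sketch): Binomial(n=4, p=0.5).
n, p = 4, 0.5
binomial_pmf = {k: comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)}

first = factorial_moment(binomial_pmf, 1)   # equals the mean n * p
second = factorial_moment(binomial_pmf, 2)  # equals E[X (X - 1)]
```

For a binomial distribution the ''r''-th factorial moment has the closed form ''n''(''n'' − 1)⋯(''n'' − ''r'' + 1) ''p''<sup>''r''</sup>, so `second` should agree with 4 · 3 · 0.25 = 3 up to floating-point error.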

Probability Theory

Probability theory or probability calculus is the branch of mathematics concerned with probability. Although there are several different probability interpretations, probability theory treats the concept in a rigorous mathematical manner by expressing it through a set of axioms. Typically these axioms formalise probability in terms of a probability space, which assigns a measure taking values between 0 and 1, termed the probability measure, to a set of outcomes called the sample space. Any specified subset of the sample space is called an event. Central subjects in probability theory include discrete and continuous random variables, probability distributions, and stochastic processes (which provide mathematical abstractions of non-deterministic or uncertain processes or measured quantities that may either be single occurrences or evolve over time in a random fashion). Although it is no ...

Combinatorics

Combinatorics is an area of mathematics primarily concerned with counting, both as a means and as an end to obtaining results, and certain properties of finite structures. It is closely related to many other areas of mathematics and has many applications ranging from logic to statistical physics and from evolutionary biology to computer science. Combinatorics is well known for the breadth of the problems it tackles. Combinatorial problems arise in many areas of pure mathematics, notably in algebra, probability theory, topology, and geometry, as well as in its many application areas. Many combinatorial questions have historically been considered in isolation, giving an ''ad hoc'' solution to a problem arising in some mathematical context. In the later twentieth century, however, powerful and general theoretical methods were developed, making combinatorics into an independent branch of mathematics in its own right. One of the oldest and most accessible parts of combinatorics ...

Factorial Moment Generating Function

In probability theory and statistics, the factorial moment generating function (FMGF) of the probability distribution of a real-valued random variable ''X'' is defined as

:M_X(t) = \operatorname{E}\bigl[t^{X}\bigr]

for all complex numbers ''t'' for which this expected value exists. This is the case at least for all ''t'' on the unit circle |''t''| = 1, see characteristic function. If ''X'' is a discrete random variable taking values only in the set of non-negative integers, then M_X is also called the probability-generating function (PGF) of ''X'', and M_X(t) is well-defined at least for all ''t'' on the closed unit disk |''t''| ≤ 1.

The factorial moment generating function generates the factorial moments of the probability distribution. Provided M_X exists in a neighbourhood of ''t'' = 1, the ''n''th factorial moment is given by

:\operatorname{E}\bigl[(X)_n\bigr] = M_X^{(n)}(1) = \left.\frac{\mathrm{d}^n}{\mathrm{d}t^n}\right|_{t=1} M_X(t),

where the Pochhammer symbol (x)_n is the falling factorial

:(x)_n = x(x-1)(x-2)\cdots(x-n+1). ...
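As a small check of the derivative formula, the following Python sketch treats the PGF of a finitely supported distribution as a polynomial in ''t'' and differentiates its coefficients. The function name `pgf_derivative_at_one` and the uniform example distribution are assumptions chosen for illustration.

```python
def pgf_derivative_at_one(probs, n):
    """n-th derivative at t = 1 of M(t) = sum_k probs[k] * t**k.

    probs[k] is P(X = k) for k = 0, 1, ..., len(probs) - 1.
    """
    coeffs = list(probs)
    for _ in range(n):
        # d/dt of sum_k c_k t^k is sum_k k c_k t^(k-1): scale, then drop the constant term.
        coeffs = [k * c for k, c in enumerate(coeffs)][1:]
    # Evaluating a polynomial at t = 1 is the sum of its coefficients.
    return sum(coeffs)


# Illustrative distribution (an assumption): X uniform on {0, 1, 2, 3}.
uniform_probs = [0.25, 0.25, 0.25, 0.25]

mean = pgf_derivative_at_one(uniform_probs, 1)           # E[X]
second_factorial = pgf_derivative_at_one(uniform_probs, 2)  # E[X (X - 1)]
```

Computing E[''X''(''X'' − 1)] directly for this distribution gives 0.25 · (0 + 0 + 2 + 6) = 2, which the second derivative at ''t'' = 1 should reproduce.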

Cumulant

In probability theory and statistics, the cumulants of a probability distribution are a set of quantities that provide an alternative to the ''moments'' of the distribution. Any two probability distributions whose moments are identical will have identical cumulants as well, and vice versa. The first cumulant is the mean, the second cumulant is the variance, and the third cumulant is the same as the third central moment. But fourth and higher-order cumulants are not equal to central moments. In some cases theoretical treatments of problems in terms of cumulants are simpler than those using moments. In particular, when two or more random variables are statistically independent, the ''n''th-order cumulant of their sum is equal to the sum of their ''n''th-order cumulants. As well, the third and higher-order cumulants of a normal distribution are zero, and it is the only distribution with this property. Just as for moments, where ''joint moments'' are used for collections of random variables ...

Moment (mathematics)

In mathematics, the moments of a function are certain quantitative measures related to the shape of the function's graph. If the function represents mass density, then the zeroth moment is the total mass, the first moment (normalized by total mass) is the center of mass, and the second moment is the moment of inertia. If the function is a probability distribution, then the first moment is the expected value, the second central moment is the variance, the third standardized moment is the skewness, and the fourth standardized moment is the kurtosis. For a distribution of mass or probability on a bounded interval, the collection of all the moments (of all orders, from 0 to ∞) uniquely determines the distribution (Hausdorff moment problem). The same is not true on unbounded intervals (Hamburger moment problem). In the mid-nineteenth century, Pafnuty Chebyshev became the first person to think systematically in terms of the moments of random variables.

Significance of th ...

Factorial Moment Measure

In probability and statistics, a factorial moment measure is a mathematical quantity, function or, more precisely, measure that is defined in relation to mathematical objects known as point processes, which are types of stochastic processes often used as mathematical models of physical phenomena representable as randomly positioned points in time, space or both. Moment measures generalize the idea of factorial moments, which are useful for studying non-negative integer-valued random variables (D. J. Daley and D. Vere-Jones, ''An Introduction to the Theory of Point Processes, Vol. I'', Probability and its Applications, Springer, New York, second edition, 2003). The first factorial moment measure of a point process coincides with its first moment measure or ''intensity measure'', which gives the expected or average number of points of the point process located in some region of space. In general, if the number of points in some region is considered as a random variab ...

Beta-binomial Distribution

In probability theory and statistics, the beta-binomial distribution is a family of discrete probability distributions on a finite support of non-negative integers arising when the probability of success in each of a fixed or known number of Bernoulli trials is either unknown or random. The beta-binomial distribution is the binomial distribution in which the probability of success at each of ''n'' trials is not fixed but randomly drawn from a beta distribution. It is frequently used in Bayesian statistics, empirical Bayes methods and classical statistics to capture overdispersion in binomial type distributed data. The beta-binomial is a one-dimensional version of the Dirichlet-multinomial distribution as the binomial and beta distributions are univariate versions of the multinomial and Dirichlet distributions respectively. The special case where ''α'' and ''β'' are integers is also known as the negative hypergeometric distribution.

Motivation and derivation

As a compound ...

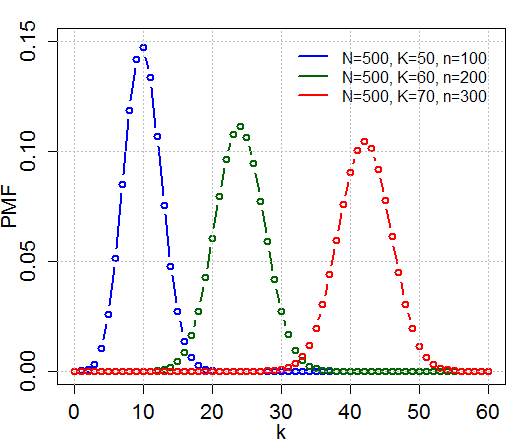

Hypergeometric Distribution

In probability theory and statistics, the hypergeometric distribution is a discrete probability distribution that describes the probability of ''k'' successes (random draws for which the object drawn has a specified feature) in ''n'' draws, ''without'' replacement, from a finite population of size ''N'' that contains exactly ''K'' objects with that feature, wherein each draw is either a success or a failure. In contrast, the binomial distribution describes the probability of ''k'' successes in ''n'' draws ''with'' replacement.

Definitions

Probability mass function

The following conditions characterize the hypergeometric distribution:
* The result of each draw (the elements of the population being sampled) can be classified into one of two mutually exclusive categories (e.g. Pass/Fail or Employed/Unemployed).
* The probability of a success changes on each draw, as each draw decreases the population ...

Binomial Distribution

In probability theory and statistics, the binomial distribution with parameters ''n'' and ''p'' is the discrete probability distribution of the number of successes in a sequence of ''n'' independent experiments, each asking a yes–no question, and each with its own Boolean-valued outcome: ''success'' (with probability ''p'') or ''failure'' (with probability ''q'' = 1 − ''p''). A single success/failure experiment is also called a Bernoulli trial or Bernoulli experiment, and a sequence of outcomes is called a Bernoulli process; for a single trial, i.e., ''n'' = 1, the binomial distribution is a Bernoulli distribution. The binomial distribution is the basis for the binomial test of statistical significance. The binomial distribution is frequently used to model the number of successes in a sample of size ''n'' drawn with replacement from a population of size ''N''. If the sampling is carried out without replacement, the draws ar ...

Stirling Numbers Of The Second Kind

In mathematics, particularly in combinatorics, a Stirling number of the second kind (or Stirling partition number) is the number of ways to partition a set of ''n'' objects into ''k'' non-empty subsets and is denoted by S(n,k) or \textstyle \left\{{n \atop k}\right\}. Stirling numbers of the second kind occur in the field of mathematics called combinatorics and the study of partitions. They are named after James Stirling. The Stirling numbers of the first and second kind can be understood as inverses of one another when viewed as triangular matrices. This article is devoted to specifics of Stirling numbers of the second kind. Identities linking the two kinds appear in the article on Stirling numbers.

Definition

The Stirling numbers of the second kind, written S(n,k) or \textstyle \left\{{n \atop k}\right\} or with other notations, count the number of ways to partition a set of ''n'' labelled objects into ''k'' nonempty unlabelled subsets. Equivalently, they count the number of different equivalence relations wit ...
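A minimal Python sketch of how these numbers can be computed follows. It assumes the standard recurrence S(''n'', ''k'') = ''k'' · S(''n'' − 1, ''k'') + S(''n'' − 1, ''k'' − 1), which is not stated in the excerpt above, and the function names are chosen for illustration. The last lines check the well-known identity ''x''<sup>''n''</sup> = Σ<sub>''k''</sub> S(''n'', ''k'') (''x'')<sub>''k''</sub>, which is what lets ordinary moments be written in terms of factorial moments.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def stirling2(n, k):
    """Stirling number of the second kind S(n, k), via the standard recurrence."""
    if n == k:
        return 1  # includes S(0, 0) = 1
    if k == 0 or k > n:
        return 0
    # Element n either joins one of the k existing blocks or forms a new block.
    return k * stirling2(n - 1, k) + stirling2(n - 1, k - 1)


def falling_factorial(x, r):
    """Return (x)_r = x (x-1) ... (x-r+1); the empty product (r = 0) is 1."""
    result = 1
    for i in range(r):
        result *= x - i
    return result


# Check x**n == sum_k S(n, k) * (x)_k for one illustrative pair (x, n).
x, n = 5, 3
power_from_stirling = sum(stirling2(n, k) * falling_factorial(x, k) for k in range(n + 1))
```

Taking expectations on both sides of the identity gives E[''X''<sup>''n''</sup>] as a Stirling-weighted sum of the factorial moments E[(''X'')<sub>''k''</sub>].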

Poisson Distribution

In probability theory and statistics, the Poisson distribution is a discrete probability distribution that expresses the probability of a given number of events occurring in a fixed interval of time if these events occur with a known constant mean rate and independently of the time since the last event. It can also be used for the number of events in other types of intervals than time, and in dimension greater than 1 (e.g., number of events in a given area or volume). The Poisson distribution is named after French mathematician Siméon Denis Poisson. It plays an important role for discrete-stable distributions. Under a Poisson distribution with the expectation of ''λ'' events in a given interval, the probability of ''k'' events in the same interval is:

:\frac{\lambda^k e^{-\lambda}}{k!}.

For instance, consider a call center which receives an average of ''λ'' = 3 calls per minute at all times of day. If the calls are independent, receiving one does not change the probability of when the next on ...
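The call-center example can be sketched in Python, assuming the standard Poisson mass function λ<sup>''k''</sup> e<sup>−λ</sup> / ''k''!. The function name `poisson_pmf` and the truncation of the infinite sums at ''k'' = 60 are assumptions made for this illustration.

```python
from math import exp, factorial


def poisson_pmf(k, lam):
    """Probability of exactly k events when the expected number of events is lam."""
    return lam**k * exp(-lam) / factorial(k)


lam = 3.0  # average calls per minute, as in the example above

# Probability of receiving no calls, and of receiving exactly three, in one minute.
p_zero = poisson_pmf(0, lam)
p_three = poisson_pmf(3, lam)

# Truncated sums; for lam = 3 the tail beyond k = 60 is negligible.
total = sum(poisson_pmf(k, lam) for k in range(60))
second_factorial_moment = sum(k * (k - 1) * poisson_pmf(k, lam) for k in range(60))
```

The ''r''-th factorial moment of a Poisson distribution is λ<sup>''r''</sup>, so `second_factorial_moment` should be close to 9 here.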

Special Function

Special functions are particular mathematical functions that have more or less established names and notations due to their importance in mathematical analysis, functional analysis, geometry, physics, or other applications. The term is defined by consensus, and thus lacks a general formal definition, but the list of mathematical functions contains functions that are commonly accepted as special.

Tables of special functions

Many special functions appear as solutions of differential equations or integrals of elementary functions. Therefore, tables of integrals usually include descriptions of special functions, and tables of special functions include most important integrals; at least, the integral representation of special functions. Because symmetries of differential equations are essential to both physics and mathematics, the theory of special functions is closely related to the theory of Lie groups and Lie algebras, as well as certain topics in mathematical physics.

Symbolic ...