
Data Lakehouse

A data lake is a system or repository of data stored in its natural/raw format, usually object blobs or files. A data lake is usually a single store of data including raw copies of source system data, sensor data, social data, etc., and transformed data used for tasks such as reporting, visualization, advanced analytics, and machine learning. A data lake can include structured data from relational databases (rows and columns), semi-structured data (CSV, logs, XML, JSON), unstructured data (emails, documents, PDFs), and binary data (images, audio, video). A data lake can be established on premises (within an organization's data centers) or in the cloud (using cloud services).

Background: James Dixon, then chief technology officer at Pentaho, coined the term by 2011 to contrast it with the data mart, which is a smaller repository of interesting attributes derived from raw data. In promoting data lakes, he argued that data marts have several inherent problems, such as inf ...

Pentaho

Pentaho is the brand name for several data management software products that make up the Pentaho+ Data Platform. These include Pentaho Data Integration, Pentaho Business Analytics, Pentaho Data Catalog, and Pentaho Data Optimiser.

Overview: Pentaho is owned by Hitachi Vantara and operates as a separate business unit. Pentaho started out as business intelligence (BI) software developed by the Pentaho Corporation in 2004. It comprises Pentaho Data Integration (PDI) and Pentaho Business Analytics (PBA). These provide data integration, OLAP services, reporting, information dashboards, data mining, and extract, transform, load (ETL) capabilities. Pentaho was acquired by Hitachi Data Systems in 2015 and in 2017 became part of Hitachi Vantara. In November 2023, Hitachi Vantara launched the Pentaho+ Platform, comprising the original Pentaho Data Integration and Pentaho Business Analytics software and the new Pentaho Data Catalog and Pentaho Data Optimiser software products. Hitachi Vantara i ...

Apache Pig

Apache Pig is a high-level platform for creating programs that run on Apache Hadoop. The language for this platform is called Pig Latin. Pig can execute its Hadoop jobs in MapReduce, Apache Tez, or Apache Spark. Pig Latin abstracts the programming from the Java MapReduce idiom into a notation which makes MapReduce programming high level, similar to that of SQL for relational database management systems. Pig Latin can be extended using user-defined functions (UDFs), which the user can write in Java, Python, JavaScript, Ruby, or Groovy and then call directly from the language.

History: Apache Pig was originally developed at Yahoo Research around 2006 for researchers to have an ad hoc way of creating and executing MapReduce jobs on very large data sets. In 2007, it was moved into the Apache Software Foundation. Naming ...

MapReduce

MapReduce is a programming model and an associated implementation for processing and generating big data sets with a parallel and distributed algorithm on a cluster. A MapReduce program is composed of a map procedure, which performs filtering and sorting (such as sorting students by first name into queues, one queue for each name), and a reduce method, which performs a summary operation (such as counting the number of students in each queue, yielding name frequencies). The "MapReduce System" (also called "infrastructure" or "framework") orchestrates the processing by marshalling the distributed servers, running the various tasks in parallel, managing all communications and data transfers between the various parts of the system, and providing for redundancy and Fault-tolerant compute ...
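The student example in the MapReduce entry can be sketched in a few lines of plain Python. This is a single-process illustration of the map, group, and reduce steps only, not a distributed implementation; the function names (`map_phase`, `shuffle`, `reduce_phase`) and the sample student names are assumptions made for the sketch.

```python
from collections import defaultdict


def map_phase(students):
    """Map: emit one (first_name, full_name) pair per student record."""
    return [(student.split()[0], student) for student in students]


def shuffle(pairs):
    """Group the emitted pairs by key, giving one queue per first name."""
    queues = defaultdict(list)
    for first_name, student in pairs:
        queues[first_name].append(student)
    return dict(queues)


def reduce_phase(queues):
    """Reduce: summarize each queue as the number of students in it."""
    return {first_name: len(queue) for first_name, queue in queues.items()}


# Hypothetical input data, used only to exercise the three steps.
students = ["Ada Lovelace", "Alan Turing", "Ada Yonath", "Grace Hopper"]
name_frequencies = reduce_phase(shuffle(map_phase(students)))
print(name_frequencies)
```

In a real framework the map and reduce calls would run on different machines and the grouping step would move data between them; here all three run in one process so the data flow is easy to follow.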

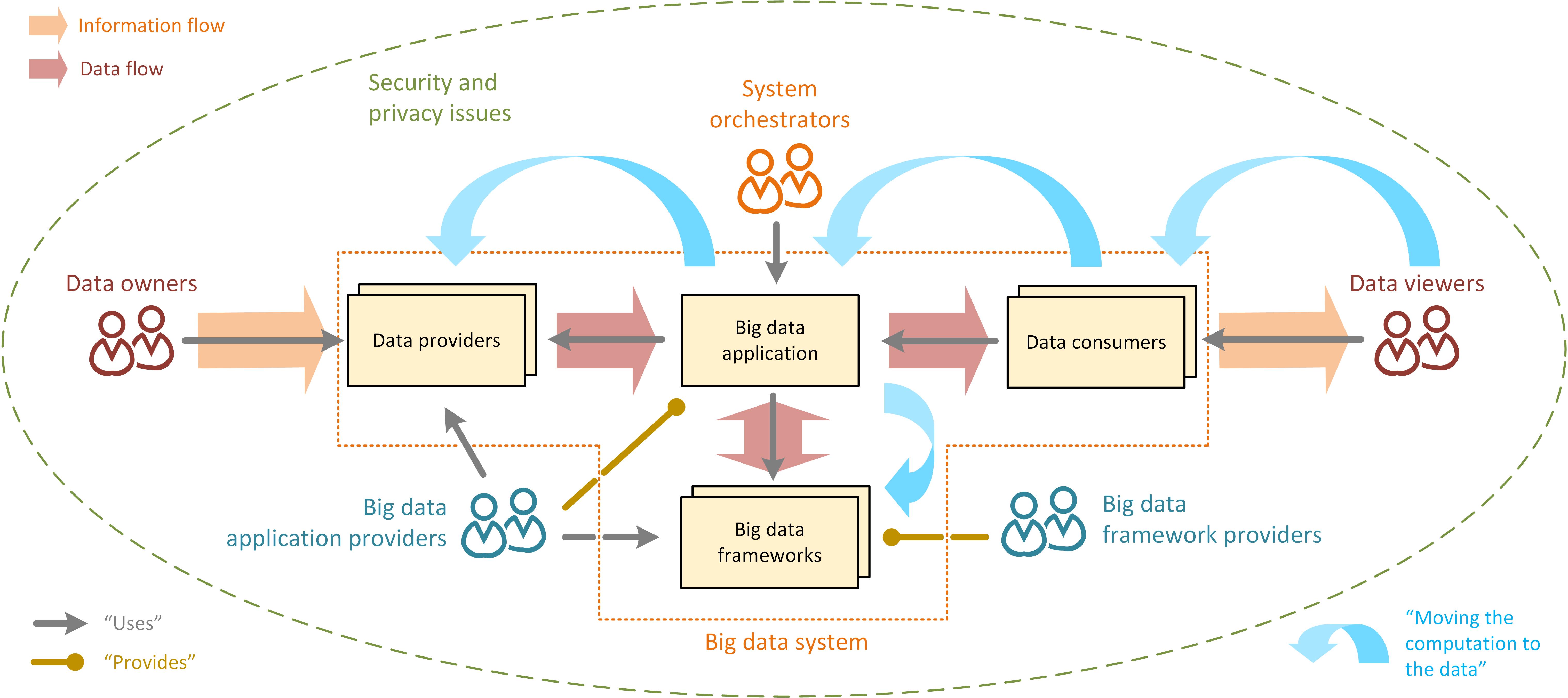

Big Data

Big data primarily refers to data sets that are too large or complex to be dealt with by traditional data-processing application software. Data with many entries (rows) offer greater statistical power, while data with higher complexity (more attributes or columns) may lead to a higher false discovery rate. Big data analysis challenges include capturing data, data storage, data analysis, search, sharing, transfer, visualization, querying, updating, information privacy, and data source. Big data was originally associated with three key concepts: volume, variety, and velocity. The analysis of big data presents challenges in sampling, having previously allowed for only observations and sampling. Thus a fourth concept, veracity, refers to the quality or insightfulness of the data. Without sufficient investm ...

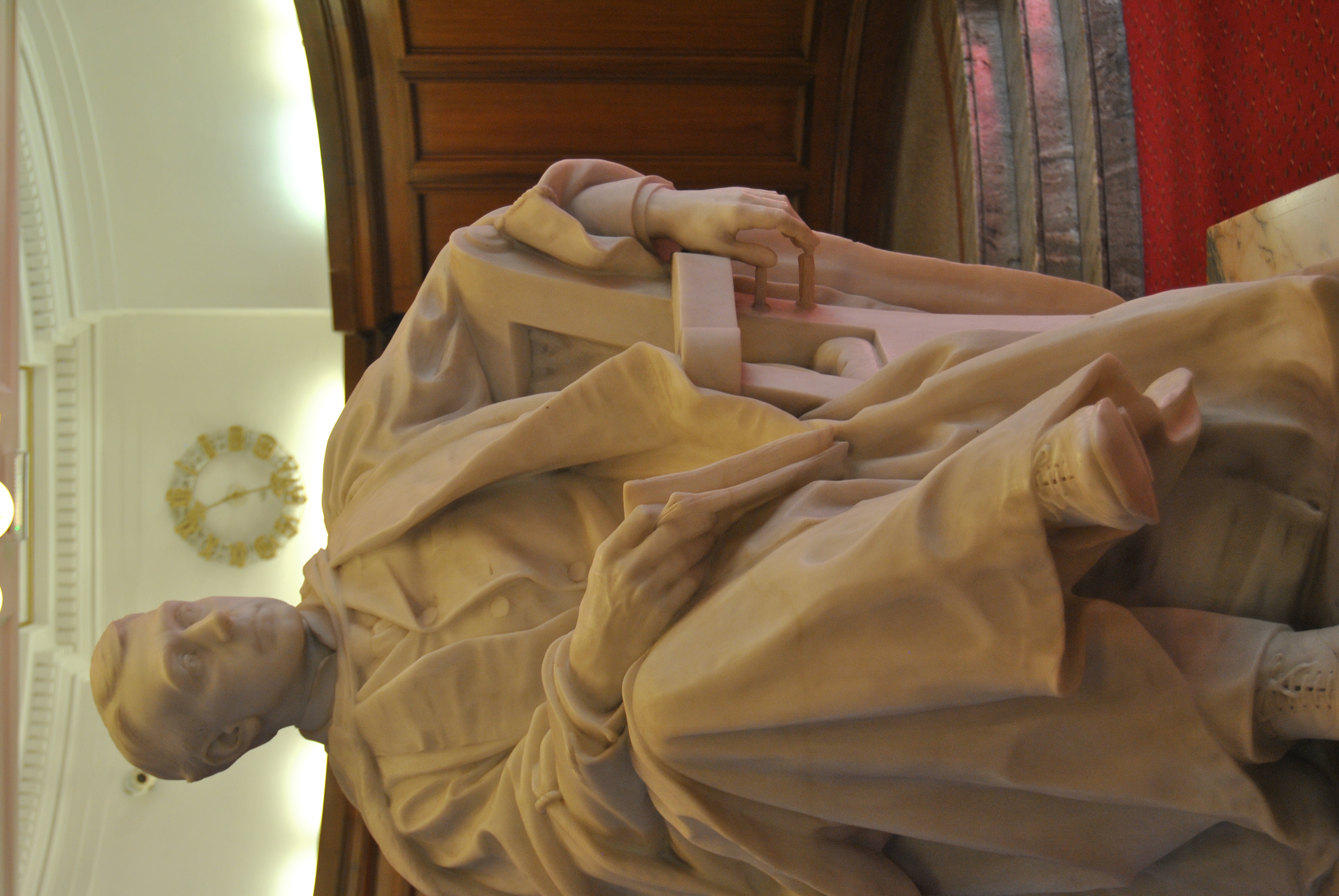

Cardiff University

Cardiff University is a public research university in Cardiff, Wales. It was established in 1883 as the University College of South Wales and Monmouthshire and became a founding college of the University of Wales in 1893. It was renamed University College, Cardiff in 1972 and merged with the University of Wales Institute of Science and Technology in 1988 to become University of Wales College, Cardiff and then University of Wales, Cardiff in 1996. In 1997 it received degree-awarding powers, but held them in abeyance. It adopted the operating name of Cardiff University in 1999; this became its legal name in 2005, when it became an independent university awarding its own degrees. Cardiff University is the only Welsh member of the Russell Group of research-intensive British universities. Academics and alumni of the university have included four heads of state or government and two Nobel laureates. the university's academ ...

Apache Hadoop

Apache Hadoop is a collection of open-source software utilities for reliable, scalable, distributed computing. It provides a software framework for distributed storage and processing of big data using the MapReduce programming model. Hadoop was originally designed for computer clusters built from commodity hardware, which is still the common use. It has since also found use on clusters of higher-end hardware. All the modules in Hadoop are designed with a fundamental assumption that hardware failures are common occurrences and should be automatically handled by the framework.

Overview: The core of Apache Hadoop consists of a storage part, known as Hadoop Distributed File System (HDFS), and a processing part which is a MapReduce programming model. Hadoop splits files into large blocks and distributes them across nodes in a cluster. It then transfers packaged code into nodes to process the data in parallel. This approach takes advantage of data locality, where nodes manipulate ...
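The idea of splitting a file into blocks, spreading the blocks across nodes, and running code where the data lives can be illustrated with a toy model. The sketch below is not Hadoop's API: the helper names, the round-robin placement, the tiny block size, and the node names are all assumptions chosen for readability, and real HDFS placement and replication are more involved.

```python
def split_into_blocks(data: bytes, block_size: int) -> list[bytes]:
    """Cut the file contents into fixed-size blocks (the last may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def assign_blocks(blocks: list[bytes], nodes: list[str]) -> dict[str, list[int]]:
    """Assign block indexes to nodes round-robin (an assumed, simplified policy)."""
    placement: dict[str, list[int]] = {node: [] for node in nodes}
    for index in range(len(blocks)):
        placement[nodes[index % len(nodes)]].append(index)
    return placement


def process_locally(blocks, placement, task):
    """Run the task on each node against only the blocks that node holds."""
    return {
        node: [task(blocks[i]) for i in indexes]
        for node, indexes in placement.items()
    }


# Hypothetical 10-byte "file", 4-byte blocks, and two made-up node names.
data = b"x" * 10
blocks = split_into_blocks(data, 4)
placement = assign_blocks(blocks, ["node-a", "node-b"])
results = process_locally(blocks, placement, len)
print(placement)
print(results)
```

Passing `len` as the task stands in for the packaged code that would be shipped to each node; any function of a single block could be used instead.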

Amazon S3

Amazon Simple Storage Service (S3) is a service offered by Amazon Web Services (AWS) that provides object storage through a web service interface. Amazon S3 uses the same scalable storage infrastructure that Amazon.com uses to run its e-commerce network. Amazon S3 can store any type of object, which allows uses like storage for Internet applications, backups, disaster recovery, data archives, data lakes for analytics, and hybrid cloud storage. AWS launched Amazon S3 in the United States on March 14, 2006, then in Europe in November 2007.

Technical details, design: Amazon S3 manages data with an object storage architecture which aims to provide scalability, high availability, and low latency with high durability. The basic storage units of Amazon S3 are objects which are organized into buckets. Each object is identified by a unique, user-assigned key. Buckets can be managed using the console provided by Amazon S3, programmatically with the AWS SDK, or the REST application ...
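The bucket, object, and key model described for Amazon S3 can be pictured with a small in-memory stand-in. This is only a conceptual sketch: `ToyObjectStore` and its methods are hypothetical names invented here, it does not use or imitate the AWS SDK, and the bucket and key names are made up.

```python
class ToyObjectStore:
    """In-memory illustration of buckets that hold objects addressed by key."""

    def __init__(self):
        # Maps bucket name -> {object key -> object bytes}.
        self._buckets: dict[str, dict[str, bytes]] = {}

    def create_bucket(self, name: str) -> None:
        """Create the bucket if it does not exist yet."""
        self._buckets.setdefault(name, {})

    def put_object(self, bucket: str, key: str, body: bytes) -> None:
        """Store an object under a user-assigned key, replacing any old value."""
        self._buckets[bucket][key] = body

    def get_object(self, bucket: str, key: str) -> bytes:
        """Return the object stored under the given key."""
        return self._buckets[bucket][key]

    def list_keys(self, bucket: str, prefix: str = "") -> list[str]:
        """List the keys in a bucket, optionally filtered by a key prefix."""
        return sorted(k for k in self._buckets[bucket] if k.startswith(prefix))


store = ToyObjectStore()
store.create_bucket("example-bucket")
store.put_object("example-bucket", "logs/2024/app.log", b"started")
store.put_object("example-bucket", "images/cat.png", b"\x89PNG")
log_keys = store.list_keys("example-bucket", prefix="logs/")
print(log_keys)
```

Keys are flat strings; the slashes in them only look like folders, which is why listing works by prefix match rather than by walking a directory tree.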

Google Cloud Storage

Google Cloud Storage is an online file storage web service for storing and accessing data on Google Cloud Platform infrastructure. The service combines the performance and scalability of Google's cloud with advanced security and sharing capabilities. It is an Infrastructure as a Service (IaaS), comparable to Amazon S3. Contrary to Google Drive and according to different service specifications, Google Cloud Storage appears to be more suitable for enterprises.

Feasibility: User activation is resourced through the API Developer Console. Google Account holders must first access the service by logging in and then agreeing to the Terms of Service, followed by enabling a billing structure.

Design: Google Cloud Storage stores objects (originally limited to 100 GiB, currently up to 5 TiB) in projects which are organized into buckets. All requests are authorized using Identity and Access Management policies or access control lists associated with a user or service account. Bucket ...

Cloud Storage Service

A file-hosting service, also known as cloud-storage service, online file-storage provider, or cyberlocker, is an internet hosting service specifically designed to host user files. These services allow users to upload files that can be accessed over the internet after providing a username and password or other authentication. Typically, file hosting services allow HTTP access, and in some cases, FTP access. Other related services include content-displaying hosting services (i.e. video and image), virtual storage, and remote backup solutions.

Uses, personal file storage: Personal file storage services are designed for private individuals to store and access their files online. Users can upload their files and share them publicly or keep them password-protected. Document-sharing services allow users to share and collaborate on document files. These services originally targeted files such as PDFs, word processor documents, and spreadsheets. However, many remote file storage servi ...

Hadoop

Apache Hadoop is a collection of open-source software utilities for reliable, scalable, distributed computing. It provides a software framework for distributed storage and processing of big data using the MapReduce programming model. Hadoop was originally designed for computer clusters built from commodity hardware, which is still the common use. It has since also found use on clusters of higher-end hardware. All the modules in Hadoop are designed with a fundamental assumption that hardware failures are common occurrences and should be automatically handled by the framework.

Overview: The core of Apache Hadoop consists of a storage part, known as Hadoop Distributed File System (HDFS), and a processing part which is a MapReduce programming model. Hadoop splits files into large blocks and distributes them across nodes in a cluster. It then transfers packaged code into nodes to process the data in parallel. This appro ...

PricewaterhouseCoopers

PricewaterhouseCoopers, also known as PwC, is a multinational professional services network based in London, United Kingdom. It is the second-largest professional services network in the world and is one of the Big Four accounting firms, along with Deloitte, EY, and KPMG. The PwC network is overseen by PricewaterhouseCoopers International Limited, an English private company limited by guarantee. PwC firms are in 140 countries, with 370,000 people. 26% of the workforce was based in the Americas, 26% in Asia, 32% in Western Europe, and 5% in Middle East and Africa. The company's global revenues were US$50.3 billion in FY 2022, of which $18.0 billion was generated by its Assurance practice, $11.6 billion by its Tax and Legal practice, and $20.7 billion by its Advisory practice. The firm in its current form was created in 1998 by a merger between two accounting firms: Coopers & Lybrand and Price Waterhouse. Both firms had histories dating back to the 19th century. The ...