
R (Programming Language)

R is a programming language for statistical computing and data visualization. It has been widely adopted in the fields of data mining, bioinformatics, data analysis, and data science. The core R language is extended by a large number of software packages, which contain reusable code, documentation, and sample data. Some of the most popular R packages are in the tidyverse collection, which enhances functionality for visualizing, transforming, and modelling data, and improves the ease of programming (according to its authors and users). R is free and open-source software distributed under the GNU General Public License. The language is implemented primarily in C, Fortran, and R itself. Precompiled executables are available for the major operating systems (including Linux, macOS, and Microsoft Windows). Its core is an interpreted language with a na ...


Ross Ihaka

George Ross Ihaka (born 1954) is a Māori New Zealander statistician who was an associate professor of statistics at the University of Auckland until his retirement in 2017. Alongside Robert Gentleman, he is one of the creators of the R programming language. In 2008, Ihaka received the Pickering Medal, awarded by the Royal Society of New Zealand, for his work on R.

Education: Ihaka completed his undergraduate education at the University of Auckland and obtained his PhD in 1985 from the University of California, Berkeley, supervised by David R. Brillinger. His thesis was on statistical modelling for seismic interferometry and was titled Rūaumoko, after the god of earthquakes, volcanoes and seasons in Māori mythology.

Career and research: As of 2010, he was working on a new statistical programming language based on Lisp. The Department of Statistics at the University of Auckland ...


R Package

R packages are extensions to the R statistical programming language. R packages contain code, data, and documentation in a standardised collection format that can be installed by users of R, typically via a centralised software repository such as CRAN (the Comprehensive R Archive Network). The large number of packages available for R, and the ease of installing and using them, have been cited as a major factor driving the widespread adoption of the language in data science. Compared to libraries in other programming languages, R packages must conform to a relatively strict specification. The Writing R Extensions manual specifies a standard directory structure for R source code, data, documentation, and package metadata, which enables packages to be installed and loaded using R's built-in package management tools. Packages distributed on CRAN must meet additional standards. According to John Chambers, whilst these requirements "impose considerable demands" on package developers, ...


C (Programming Language)

C (pronounced like the letter c) is a general-purpose programming language. It was created in the 1970s by Dennis Ritchie and remains very widely used and influential. By design, C's features cleanly reflect the capabilities of the targeted CPUs. It has found lasting use in operating systems code (especially in kernels), device drivers, and protocol stacks, but its use in application software has been decreasing. C is commonly used on computer architectures that range from the largest supercomputers to the smallest microcontrollers and embedded systems. A successor to the programming language B, C was originally developed at Bell Labs by Ritchie between 1972 and 1973 to construct utilities running on Unix. It was applied to re-implementing the kernel of the Unix operating system. During the 1980s, C gradually gained popularity. It has become one of the most widely used programming langu ...


GNU General Public License

The GNU General Public Licenses (GNU GPL or simply GPL) are a series of widely used free software licenses, or copyleft licenses, that guarantee end users the freedom to run, study, share, or modify the software. The GPL was the first copyleft license available for general use. It was originally written by Richard Stallman, the founder of the Free Software Foundation (FSF), for the GNU Project. The license grants the recipients of a computer program the rights of the Free Software Definition. The licenses in the GPL series are all copyleft licenses, which means that any derivative work must be distributed under the same or equivalent license terms. The GPL is more restrictive than the GNU Lesser General Public License, and even more distinct from the more widely used permissive software licenses such as BSD, MIT, and Apache. Historically, the GPL license family has been one of the most popular software licenses in the free and open-source software (FOSS) domai ...


Free and Open-Source Software

Free and open-source software (FOSS) is software available under a license that grants users the right to use, modify, and distribute the software, modified or not, to everyone free of charge. FOSS is an inclusive umbrella term encompassing free software and open-source software. The rights guaranteed by FOSS originate from the "Four Essential Freedoms" of The Free Software Definition and the criteria of The Open Source Definition. All FOSS can have publicly available source code, but not all source-available software is FOSS. FOSS is the opposite of proprietary software, which is licensed restrictively or has undisclosed source code. The historical precursor to FOSS was the hobbyist and academic public domain software ecosystem of the 1960s to 1980s. Free and open-source operating systems such as Linux distributions and descendants of BSD are widely used, powering millions of servers, desktops, smartphones, and other devices. Free-software licenses and open-so ...


Tidyverse

The tidyverse is a collection of open source packages for the R programming language introduced by Hadley Wickham and his team that "share an underlying design philosophy, grammar, and data structures" of tidy data. Characteristic features of tidyverse packages include extensive use of non-standard evaluation and encouragement of piping. As of November 2018, the tidyverse package and some of its individual packages comprised 5 of the top 10 most downloaded R packages. The tidyverse is the subject of multiple books and papers. In 2019, the ecosystem was published in the Journal of Open Source Software. Its syntax has been referred to as "supremely readable", and some have argued that tidyverse is an effective way to introduce complete beginners to programming, as pedagogically it allows students to quickly begin doing data processing tasks. Moreover, some practitioners have pointed out that data processing tasks are intuitively easier to chain together with tidyverse ...


Reusability

In computer programming, reusability describes the quality of a software asset that affects its ability to be used in a software system for which it was not specifically designed. An asset that is easy to reuse and provides utility is considered to have high reusability. A related concept, leverage, involves modifying an existing asset to meet system requirements. The ability to reuse can be viewed as the ability to build larger things from smaller parts, and to identify commonality among the parts. Reusability is often a required characteristic of platform software. Reusability brings several aspects to software development that do not need to be considered when reusability is not required. Reusability may be impacted by various DevOps aspects including build, packaging, distribution, installation, configuration, deployment, maintenance and upgrade. If these aspects are not considered, software may seem to be reusable based on its design, but may not be reusable in p ...


Data Science

Data science is an interdisciplinary academic field that uses statistics, scientific computing, scientific methods, processing, scientific visualization, algorithms and systems to extract or extrapolate knowledge from potentially noisy, structured, or unstructured data. Data science also integrates domain knowledge from the underlying application domain (e.g., natural sciences, information technology, and medicine). Data science is multifaceted and can be described as a science, a research paradigm, a research method, a discipline, a workflow, and a profession. Data science is "a concept to unify statistics, data analysis, informatics, and their related methods" to "understand and analyze actual phenomena" with data. It uses techniques and theories drawn from many fields within the context of mathematics, statistics, computer science, information science, and domain knowledge. However, data science is different from computer science and information science. Turing Awar ...


Data Analysis

Data analysis is the process of inspecting, cleansing, transforming, and modeling data with the goal of discovering useful information, informing conclusions, and supporting decision-making. Data analysis has multiple facets and approaches, encompassing diverse techniques under a variety of names, and is used in different business, science, and social science domains. In today's business world, data analysis plays a role in making decisions more scientific and helping businesses operate more effectively. Data mining is a particular data analysis technique that focuses on statistical modeling and knowledge discovery for predictive rather than purely descriptive purposes, while business intelligence covers data analysis that relies heavily on aggregation, focusing mainly on business information. In statistical applications, data analysis can be divided into descriptive statistics, exploratory data analysis (EDA), and Statistical h ...


Bioinformatics

Bioinformatics is an interdisciplinary field of science that develops methods and software tools for understanding biological data, especially when the data sets are large and complex. Bioinformatics uses biology, chemistry, physics, computer science, data science, computer programming, information engineering, mathematics and statistics to analyze and interpret biological data. The process of analyzing and interpreting data can sometimes be referred to as computational biology; however, this distinction between the two terms is often disputed. To some, the term computational biology refers to building and using models of biological systems. Computational, statistical, and computer programming techniques have been used for computer simulation analyses of biological queries. They include reused specific analysis "pipelines", particularly in the field of genomics, such as by the identification of genes and single nucleotide polymorphis ...


Data Mining

Data mining is the process of extracting and finding patterns in massive data sets involving methods at the intersection of machine learning, statistics, and database systems. Data mining is an interdisciplinary subfield of computer science and statistics with an overall goal of extracting information (with intelligent methods) from a data set and transforming the information into a comprehensible structure for further use. Data mining is the analysis step of the "knowledge discovery in databases" process, or KDD. Aside from the raw analysis step, it also involves database and data management aspects, data pre-processing, model and inference considerations, interestingness metrics, complexity considerations, post-processing of discovered structures, visualization, and online updating. The term "data mining" is a misnomer because the goal is the extraction of patterns and knowledge from large amounts of data, not the extraction (mining) of data itself. It also is a buzzwo ...


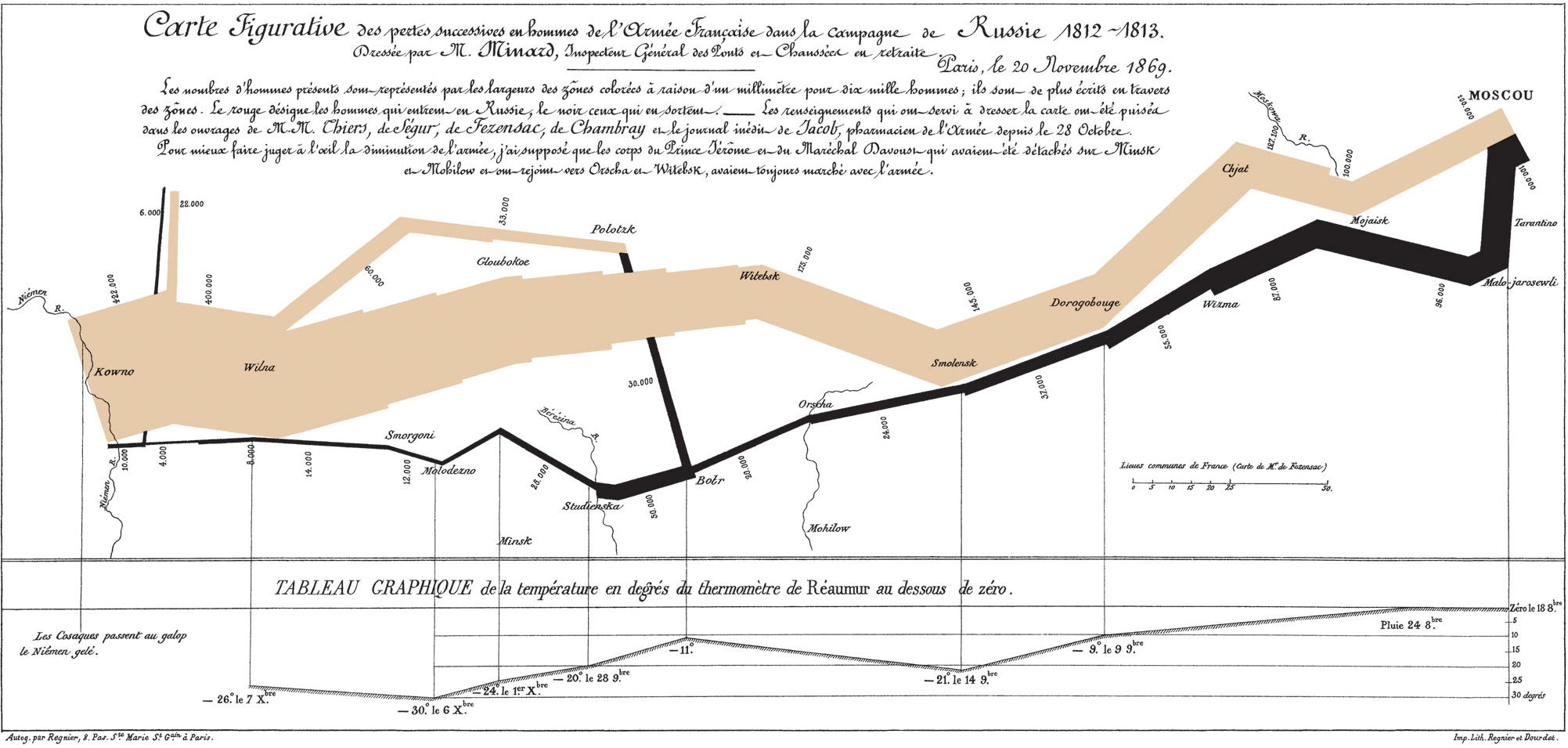

Data and Information Visualization

Data and information visualization (data viz/vis or info viz/vis) is the practice of designing and creating graphic or visual representations of a large amount of complex quantitative and qualitative data and information with the help of static, dynamic or interactive visual items. Typically based on data and information collected from a certain domain of expertise, these visualizations are intended for a broader audience to help them visually explore and discover, quickly understand, interpret and gain important insights into otherwise difficult-to-identify structures, relationships, correlations, local and global patterns, trends, variations, constancy, clusters, outliers and unusual groupings within data (exploratory visualization). When intended for the general public (mass communication) to convey a concise version of known, specific information in a clear and engaging manner (presentational or explanatory visualization), it is typically called information gra ...