|

Low-level Programming Languages

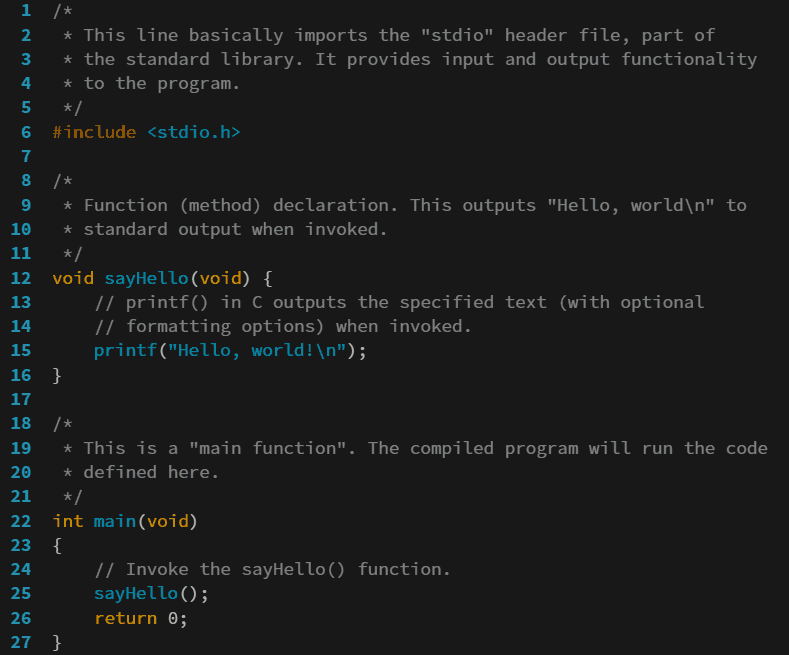

A low-level programming language is a programming language that provides little or no abstraction from a computer's instruction set architecture, memory, or underlying physical hardware; commands or functions in the language are structurally similar to a processor's instructions. These languages give the programmer full control over program memory and the underlying machine code instructions. Because of the low level of abstraction (hence the term "low-level") between the language and machine language, low-level languages are sometimes described as being "close to the hardware". Programs written in low-level languages tend to be relatively non-portable because they are optimized for a particular system architecture. Depending on the language, low-level code is converted to machine code with or without a compiler or interpreter; second-generation programming languages (assembly languages), for example, are translated by an assembler. A program written in a low-level language can be made to run very quickly, with ... |

|

Programming Language

A programming language is a system of notation for writing computer programs. Programming languages are described in terms of their syntax (form) and semantics (meaning), usually defined by a formal language. Languages usually provide features such as a type system, variables, and mechanisms for error handling. An implementation of a programming language is required in order to execute programs, namely an interpreter or a compiler. An interpreter directly executes the source code, while a compiler produces an executable program. Computer architecture has strongly influenced the design of programming languages, with the most common type (imperative languages, which implement operations in a specified order) developed to perform well on the popular von Neumann architecture. ... |

|

Semantics (Computer Science)

In programming language theory, semantics is the rigorous mathematical study of the meaning of programming languages. Semantics assigns computational meaning to valid strings in a programming language syntax. It is closely related to, and often crosses over with, the semantics of mathematical proofs. Semantics describes the processes a computer follows when executing a program in that specific language. This can be done by describing the relationship between the input and output of a program, or by explaining how the program will be executed on a certain platform, thereby creating a model of computation. History: In 1967, Robert W. Floyd published the paper *Assigning Meanings to Programs*; his chief aim was "a rigorous standard for proofs about computer programs, including proofs of correctness, equivalence, and termination". Floyd further wrote: A semantic definition of a programming language, in our approach, is founded on a syntactic definition. It mu ... |
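As a rough illustration of assigning meaning to valid strings of a language, the sketch below defines a toy expression language (integer literals, addition, multiplication) and an evaluator that maps each syntax tree to the integer it denotes. The language and the names `Expr`, `ExprKind`, and `eval` are hypothetical, chosen only for this example; they do not come from Floyd's paper or from any particular formalism.

```c
#include <stddef.h>

/* Syntax of a toy expression language (hypothetical, for illustration). */
typedef enum { EXPR_NUM, EXPR_ADD, EXPR_MUL } ExprKind;

typedef struct Expr {
    ExprKind kind;
    int value;                /* used only when kind == EXPR_NUM */
    const struct Expr *lhs;   /* used only for EXPR_ADD and EXPR_MUL */
    const struct Expr *rhs;
} Expr;

/* Semantics: maps each well-formed syntax tree to the integer it denotes.
   The meaning of a compound expression is built from the meanings of
   its parts. */
int eval(const Expr *e)
{
    switch (e->kind) {
    case EXPR_NUM:
        return e->value;
    case EXPR_ADD:
        return eval(e->lhs) + eval(e->rhs);
    case EXPR_MUL:
        return eval(e->lhs) * eval(e->rhs);
    }
    return 0; /* not reached for well-formed trees */
}
```

Building the tree for `2 + 3 * 4` and passing it to `eval` yields 14. This describes the program purely by its input/output relationship; an account of how it executes on a specific platform would instead spell out the individual machine steps.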

|

|

Stack-based Memory Allocation

Stacks in computing architectures are regions of memory where data is added or removed in a last-in-first-out (LIFO) manner. In most modern computer systems, each thread has a reserved region of memory referred to as its stack. When a function executes, it may add some of its local state data to the top of the stack; when the function exits, it is responsible for removing that data from the stack. At a minimum, a thread's stack is used to store the return address provided by the caller, so that return statements return to the correct location. The stack is often used to store fixed-length variables local to the currently active functions. Programmers may further choose to explicitly use the stack to store local data of variable length. If a region of memory lies on the thread's stack, that memory is said to have been allocated on the stack, i.e. stack-based memory allocation (SBMA). This is contrasted with a heap-based memory allocation (HBMA) ... |
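The contrast between stack-based and heap-based allocation can be sketched in C. In the example below, the function names `sum_on_stack` and `sum_on_heap` and the 16-element limit are assumptions made for illustration. The first function keeps its working array in a fixed-length local variable, which typical implementations place in the function's stack frame and reclaim automatically on return. The second requests the same storage from the heap and must release it explicitly.

```c
#include <stdlib.h>

/* Sums 1..n using a fixed-length local array. On typical implementations
   the array lives in this call's stack frame and is released automatically
   when the function returns. The limit of 16 is an arbitrary choice. */
int sum_on_stack(int n)
{
    int values[16];                 /* stack-allocated, fixed length */
    int total = 0;

    if (n < 0 || n > 16)
        return -1;                  /* does not fit in the local array */

    for (int i = 0; i < n; i++) {
        values[i] = i + 1;
        total += values[i];
    }
    return total;
}

/* Same computation, but the array is requested from the heap and must be
   released explicitly with free(). */
int sum_on_heap(int n)
{
    int total = 0;
    int *values;

    if (n < 0)
        return -1;
    if (n == 0)
        return 0;

    values = malloc((size_t)n * sizeof *values);
    if (values == NULL)
        return -1;                  /* allocation failed */

    for (int i = 0; i < n; i++) {
        values[i] = i + 1;
        total += values[i];
    }
    free(values);                   /* heap memory is not freed on return */
    return total;
}
```

Both `sum_on_stack(4)` and `sum_on_heap(4)` return 10. The difference lies in lifetime management: the stack version needs no cleanup but is limited to a size fixed at compile time, while the heap version handles any `n` that fits in memory at the cost of an explicit `free`.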

|

|

x86 Calling Conventions

This article describes the calling conventions used when programming x86 architecture microprocessors. Calling conventions describe the interface of called code:

* The order in which atomic (scalar) parameters, or individual parts of a complex parameter, are allocated
* How parameters are passed (pushed on the stack, placed in registers, or a mix of both)
* Which registers the called function must preserve for the caller (also known as callee-saved registers or non-volatile registers)
* How the task of preparing the stack for, and restoring it after, a function call is divided between the caller and the callee

This is intimately related to the assignment of sizes and formats to programming-language types. Another closely related topic is name mangling, which determines how symbol names in the code are mapped to symbol names used by the linker. Calling conventions, type representations, and name mangling are all part of what is known as an application binary interface (ABI). There a ... |