Circuit Complexity

In theoretical computer science, circuit complexity is a branch of computational complexity theory in which Boolean functions are classified according to the size or depth of the Boolean circuits that compute them. A related notion is the circuit complexity of a recursive language that is decided by a uniform family of circuits C_1, C_2, \ldots (see below). Proving lower bounds on the size of Boolean circuits computing explicit Boolean functions is a popular approach to separating complexity classes. For example, a prominent circuit class P/poly consists of Boolean functions computable by circuits of polynomial size. Proving that \mathsf{NP} \not\subseteq \mathsf{P/poly} would separate P and NP (see below). Complexity classes defined in terms of Boolean circuits include AC0, AC, TC0, NC1, NC, and P/poly.

Size and depth

A Boolean circuit with n input bits is a directed acyclic graph in which every node (usually called a ''gate'' in this context) is either an input node of in-degree 0 l ...
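The graph view of a circuit described above can be sketched in a few lines of Python. This is only an illustrative sketch: the dictionary encoding, the gate names, and the conventions that size counts non-input gates and depth counts the longest input-to-output path are assumptions made here, since the entry is truncated before it states its own definitions.

```python
# Hypothetical encoding (not from the source): each node name maps to
# (operation, list of predecessor node names). "IN" marks an input node
# of in-degree 0.
CIRCUIT = {
    "x1": ("IN", []),
    "x2": ("IN", []),
    "x3": ("IN", []),
    "g1": ("AND", ["x1", "x2"]),
    "g2": ("NOT", ["x3"]),
    "out": ("OR", ["g1", "g2"]),
}


def evaluate(circuit, inputs, node):
    """Evaluate `node` by recursively evaluating its predecessors."""
    op, preds = circuit[node]
    if op == "IN":
        return bool(inputs[node])
    values = [evaluate(circuit, inputs, p) for p in preds]
    if op == "AND":
        return all(values)
    if op == "OR":
        return any(values)
    if op == "NOT":
        return not values[0]
    raise ValueError(f"unknown gate type: {op}")


def size(circuit):
    """Assumed convention: size is the number of non-input gates."""
    return sum(1 for op, _ in circuit.values() if op != "IN")


def depth(circuit, node):
    """Assumed convention: depth is the longest path from an input to `node`."""
    op, preds = circuit[node]
    if op == "IN":
        return 0
    return 1 + max(depth(circuit, p) for p in preds)
```

With this encoding, `size(CIRCUIT)` counts the three gates `g1`, `g2`, and `out`, and `depth(CIRCUIT, "out")` follows the two-gate path from an input to the output.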

Three Input Boolean Circuit

3 (three) is a number, numeral and digit. It is the natural number following 2 and preceding 4, and is the smallest odd prime number and the only prime preceding a square number. It has religious and cultural significance in many societies.

Evolution of the Arabic digit

The use of three lines to denote the number 3 occurred in many writing systems, including some (like Roman and Chinese numerals) that are still in use. That was also the original representation of 3 in the Brahmic (Indian) numerical notation, its earliest forms aligned vertically. However, during the Gupta Empire the sign was modified by the addition of a curve on each line. The Nāgarī script rotated the lines clockwise, so they appeared horizontally, and ended each line with a short downward stroke on the right. In cursive script, the three strokes were eventually connected to form a glyph resembling a 3 with an additional stroke at the bottom: ३. The Indian digits spread to the Caliphate in the 9th ...

OR Gate

The OR gate is a digital logic gate that implements logical disjunction. The OR gate outputs "true" if any of its inputs is "true"; otherwise it outputs "false". The input and output states are normally represented by different voltage levels.

Description

Any OR gate can be constructed with two or more inputs. It outputs a 1 if any of these inputs is 1, and outputs a 0 only if all inputs are 0. The inputs and outputs are binary digits ("bits") which have two possible logical states. In addition to 1 and 0, these states may be called true and false, high and low, active and inactive, or other such pairs of symbols. Thus it performs a logical disjunction (∨) from mathematical logic. The gate can be represented with the plus sign (+) because it can be used for logical addition. Equivalently, an OR gate finds the ''maximum'' between two binary digits, just as the AND gate finds the ''minimum''. Together with the AND gate and the NOT gate, ...
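The maximum/minimum reading of OR and AND mentioned above can be checked directly. The following is a minimal sketch; the function names `or_gate` and `and_gate` are hypothetical and bits are assumed to be the integers 0 and 1.

```python
from itertools import product


def or_gate(*bits):
    """OR as the maximum of its input bits (at least one input is assumed)."""
    return max(bits)


def and_gate(*bits):
    """AND as the minimum of its input bits (at least one input is assumed)."""
    return min(bits)


# Compare against Python's bitwise operators over the full two-input truth table.
for a, b in product((0, 1), repeat=2):
    assert or_gate(a, b) == (a | b)
    assert and_gate(a, b) == (a & b)
```

Because `max` and `min` accept any number of arguments, the same two functions also model gates with more than two inputs.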

Johan Håstad

Johan Torkel Håstad (born 19 November 1960) is a Swedish theoretical computer scientist best known for his work on computational complexity theory. He was the recipient of the Gödel Prize in 1994 and 2011 and the ACM Doctoral Dissertation Award in 1986, among other prizes. He has been a professor in theoretical computer science at KTH Royal Institute of Technology in Stockholm, Sweden since 1988, becoming a full professor in 1992. He has been a member of the Royal Swedish Academy of Sciences since 2001. He received his B.S. in Mathematics at Stockholm University in 1981, his M.S. in Mathematics at Uppsala University in 1984 and his Ph.D. in Mathematics from MIT in 1986. Håstad's thesis and 1994 Gödel Prize concerned his work on lower bounds on the size of constant-depth Boolean circuits for the parity function. After Andrew Yao proved that such circuits require exponential size, Håstad proved nearly optimal lower bounds on the necessary size through his switching lemma ...

Parity Function

In Boolean algebra, a parity function is a Boolean function whose value is one if and only if the input vector has an odd number of ones. The parity function of two inputs is also known as the XOR function. The parity function is notable for its role in theoretical investigation of circuit complexity of Boolean functions. The output of the parity function is the parity bit.

Definition

The ''n''-variable parity function is the Boolean function f:\{0,1\}^n\to\{0,1\} with the property that f(x)=1 if and only if the number of ones in the vector x\in\{0,1\}^n is odd. In other words, f is defined as follows:

f(x)=x_1\oplus x_2 \oplus \dots \oplus x_n

where \oplus denotes exclusive or.

Properties

Parity only depends on the number of ones and is therefore a symmetric Boolean function. The ''n''-variable parity function and its negation are the only Boolean functions for which all disjunctive normal forms have the maximal number of 2^{n-1} monomials of length ''n'' and al ...
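The definition of parity as an iterated exclusive or can be written as a short Python sketch. The function name `parity` and the convention that the empty input has parity 0 are assumptions made for illustration.

```python
from functools import reduce
from itertools import product
from operator import xor


def parity(bits):
    """Return x_1 XOR x_2 XOR ... XOR x_n for a sequence of 0/1 values.

    The empty sequence is assigned parity 0 (an assumption of this sketch).
    """
    return reduce(xor, bits, 0)


# The XOR form agrees with "the number of ones is odd" on every 4-bit input.
for x in product((0, 1), repeat=4):
    assert parity(x) == sum(x) % 2
```

Since the result depends only on how many ones appear, reordering the input never changes the output, which is the symmetry property noted above.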

Claude Shannon

Claude Elwood Shannon (April 30, 1916 – February 24, 2001) was an American mathematician, electrical engineer, computer scientist, cryptographer and inventor known as the "father of information theory" and the man who laid the foundations of the Information Age. Shannon was the first to describe the use of Boolean algebra, which is essential to all digital electronic circuits, and helped found artificial intelligence (AI). Roboticist Rodney Brooks declared Shannon the 20th century engineer who contributed the most to 21st century technologies, and mathematician Solomon W. Golomb described his intellectual achievement as "one of the greatest of the twentieth century". At the University of Michigan, Shannon earned a dual degree, graduating with a Bachelor of Science in electrical engineering and another in mathematics, both in 1936. As a 21-year-old master's degree student in electrical engineering at MIT, he wrote the thesis "A Symbolic Analysis of Relay and Switching Circuits", which demonstrated that electric ...

Log-space Transducer

In computational complexity theory, a log-space reduction is a reduction computable by a deterministic Turing machine using logarithmic space. Conceptually, this means it can keep a constant number of pointers into the input, along with a logarithmic number of fixed-size integers. It is possible that such a machine may not have space to write down its own output, so the only requirement is that any given bit of the output be computable in log-space. Formally, this reduction is executed via a log-space transducer. Such a machine has polynomially-many configurations, so log-space reductions are also polynomial-time reductions. However, log-space reductions are probably weaker than polynomial-time reductions; while any non-empty, non-full language in P is polynomial-time reducible to any other non-empty, non-full language in P, a log-space reduction from an NL-complete language to a language in L, both of which would be languages in P, would imply the unlikely L = NL. It is an ...

Log-space Reduction

In computational complexity theory, a log-space reduction is a reduction computable by a deterministic Turing machine using logarithmic space. Conceptually, this means it can keep a constant number of pointers into the input, along with a logarithmic number of fixed-size integers. It is possible that such a machine may not have space to write down its own output, so the only requirement is that any given bit of the output be computable in log-space. Formally, this reduction is executed via a log-space transducer. Such a machine has polynomially-many configurations, so log-space reductions are also polynomial-time reductions. However, log-space reductions are probably weaker than polynomial-time reductions; while any non-empty, non-full language in P is polynomial-time reducible to any other non-empty, non-full language in P, a log-space reduction from an NL-complete language to a language in L ...

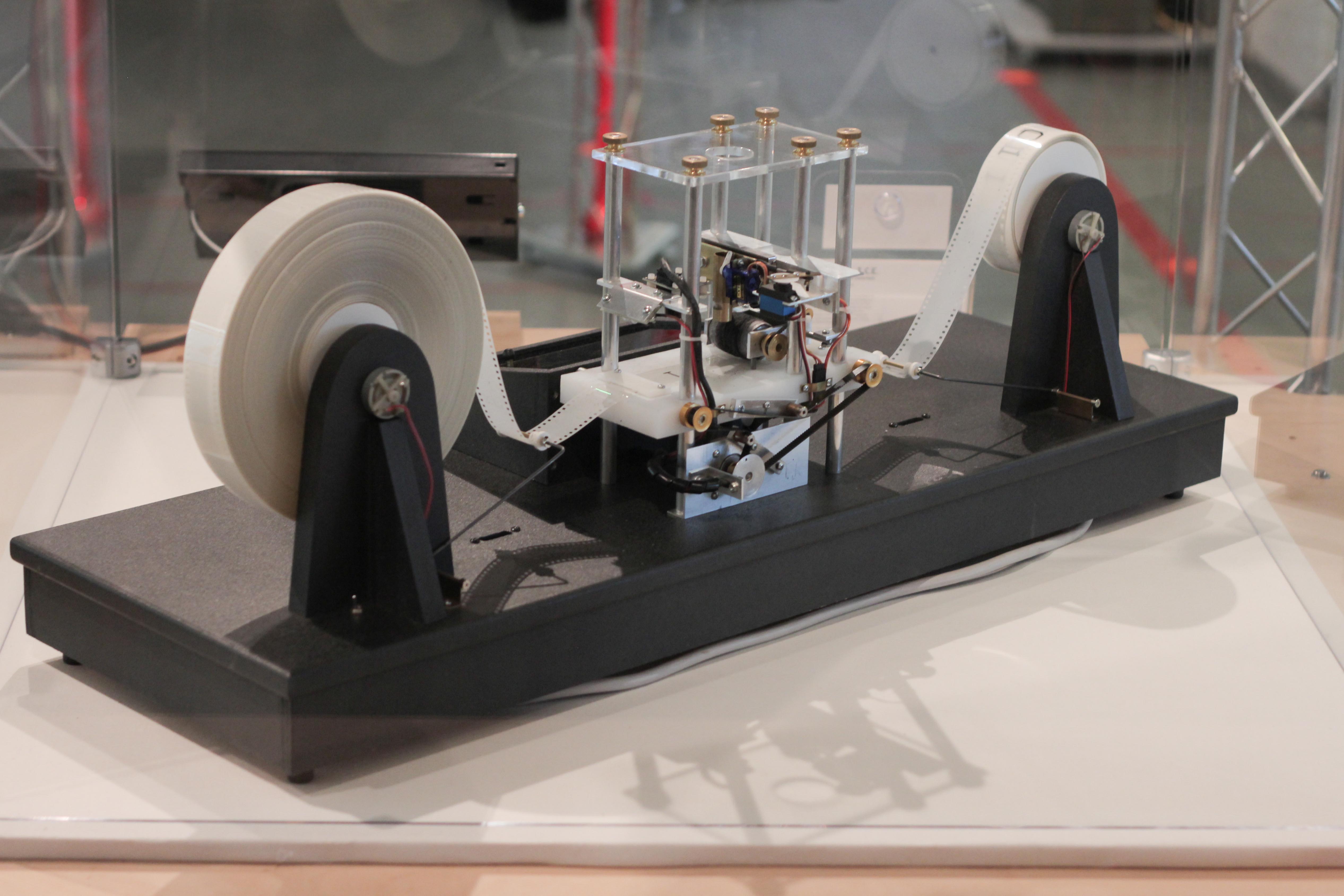

Deterministic Turing Machine

A Turing machine is a mathematical model of computation describing an abstract machine that manipulates symbols on a strip of tape according to a table of rules. Despite the model's simplicity, it is capable of implementing any computer algorithm. The machine operates on an infinite memory tape divided into discrete cells, each of which can hold a single symbol drawn from a finite set of symbols called the alphabet of the machine. It has a "head" that, at any point in the machine's operation, is positioned over one of these cells, and a "state" selected from a finite set of states. At each step of its operation, the head reads the symbol in its cell. Then, based on the symbol and the machine's own present state, the machine writes a symbol into the same cell, and moves the head one step to the left or the right, or halts the computation. The choice of which replacement symbol to write, which direction to move the head, and whether to halt is based on a finite table that specif ...
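The read, write, move, and change-state cycle described above can be sketched as a small simulator. Everything specific here is an assumption for illustration: the table format `(state, symbol) -> (write, move, next_state)`, the blank symbol `_`, the state names, and the example machine `INVERT`, which flips every bit of its input and halts at the first blank.

```python
from collections import defaultdict

BLANK = "_"  # assumed blank symbol for unwritten tape cells


def run(table, tape_input, start="q0", halt="halt", max_steps=10_000):
    """Simulate a single-tape deterministic machine and return the final tape.

    `table` maps (state, symbol) to (symbol_to_write, "L" or "R", next_state).
    """
    # A defaultdict stands in for the unbounded tape: unseen cells read as blank.
    tape = defaultdict(lambda: BLANK, enumerate(tape_input))
    head, state, steps = 0, start, 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("step budget exhausted before halting")
        write, move, state = table[(state, tape[head])]
        tape[head] = write
        head += 1 if move == "R" else -1
        steps += 1
    if not tape:
        return ""
    cells = [tape[i] for i in range(min(tape), max(tape) + 1)]
    return "".join(cells).strip(BLANK)


# Hypothetical example machine: invert each bit, halt on the first blank.
INVERT = {
    ("q0", "0"): ("1", "R", "q0"),
    ("q0", "1"): ("0", "R", "q0"),
    ("q0", BLANK): (BLANK, "R", "halt"),
}
```

Calling `run(INVERT, "1011")` walks the head rightward across the four input cells and stops after reading the blank that follows them. The `max_steps` guard is a practical choice for the sketch, since a table may describe a machine that never halts.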

DLOGTIME

In computational complexity theory, DLOGTIME is the complexity class of all computational problems solvable in a logarithmic amount of computation time on a deterministic Turing machine. It must be defined on a random-access Turing machine, since otherwise the input tape is longer than the range of cells that can be accessed by the machine. It is a very weak model of time complexity: no random-access Turing machine with a smaller deterministic time bound can access the whole input. DLOGTIME machines are rarely used ''as-is''. Instead, they are usually used as part of a theoretical construct for studying reducibility. They cannot be used to produce output that is longer than logarithmic length. However, they can be used to ''indirectly'' produce such an output, in the following sense: Given any log-length binary address, it produces the corresponding bit of the output. Since a polynomial-sized integer has logarithmic-length binary representation, this is possible in DLOGTIME. De ...

Computational Resource

In computational complexity theory, a computational resource is a resource used by some computational models in the solution of computational problems. The simplest computational resources are computation time, the number of steps necessary to solve a problem, and memory space, the amount of storage needed while solving the problem, but many more complicated resources have been defined. A computational problem is generally defined in terms of its action on any valid input. Examples of problems might be "given an integer ''n'', determine whether ''n'' is prime", or "given two numbers ''x'' and ''y'', calculate the product ''x''*''y''". As the inputs get bigger, the amount of computational resources needed to solve a problem will increase. Thus, the resources needed to solve a problem are described in terms of asymptotic analysis, by identifying the resources as a function of the length or size of the input. Resource usage is often partially quantified using Big ''O'' notation ...

Computational Problem

In theoretical computer science, a computational problem is one that asks for a solution in terms of an algorithm. For example, the problem of factoring ("Given a positive integer ''n'', find a nontrivial prime factor of ''n''.") is a computational problem that has a solution, as there are many known integer factorization algorithms. A computational problem can be viewed as a set of ''instances'' or ''cases'' together with a (possibly empty) set of ''solutions'' for every instance/case. The question then is whether there exists an algorithm that maps instances to solutions. For example, in the factoring problem, the instances are the integers ''n'', and solutions are prime numbers ''p'' that are the nontrivial prime factors of ''n''. An example of a computational problem without a solution is the Halting problem. Computational problems are one of the main objects of study in theoretical computer science. One is often interested not only in mere existence of an algorithm, b ...
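The factoring example above, viewed as a map from instances to solutions, can be illustrated with trial division. This is only one simple method and not one the entry singles out; the function name and the choice to return `None` for instances with an empty solution set are assumptions of the sketch.

```python
def nontrivial_prime_factor(n):
    """Return the smallest prime factor p of n with 1 < p < n, or None.

    None signals an instance whose solution set is empty, for example
    when n is prime or n < 4.
    """
    d = 2
    while d * d <= n:
        if n % d == 0:
            # The smallest divisor greater than 1 is always prime.
            return d
        d += 1
    return None
```

For the instance 15 the function returns 3, while for a prime instance such as 13 it returns `None`, matching the idea that an instance may have no solution at all.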

Turing Machine

A Turing machine is a mathematical model of computation describing an abstract machine that manipulates symbols on a strip of tape according to a table of rules. Despite the model's simplicity, it is capable of implementing any computer algorithm. The machine operates on an infinite memory tape divided into discrete cells, each of which can hold a single symbol drawn from a finite set of symbols called the alphabet of the machine. It has a "head" that, at any point in the machine's operation, is positioned over one of these cells, and a "state" selected from a finite set of states. At each step of its operation, the head reads the symbol in its cell. Then, based on the symbol and the machine's own present state, the machine writes a symbol into the same cell, and moves the head one step to the left or the right, or halts the computation. The choice of which replacement symbol to write, which direction to move the head, and whet ...