
Babenko–Beckner Inequality

In mathematics, the Babenko–Beckner inequality (after K. Ivan Babenko and William E. Beckner) is a sharpened form of the Hausdorff–Young inequality having applications to uncertainty principles in the Fourier analysis of $L^p$ spaces. The (''q'', ''p'')-norm of the ''n''-dimensional Fourier transform is defined to be (Iwo Bialynicki-Birula, ''Formulation of the uncertainty relations in terms of the Renyi entropies'', arXiv:quant-ph/0608116v2)

$$\|\mathcal F\|_{q,p} = \sup_{f \in L^p(\mathbb R^n)} \frac{\|\mathcal F f\|_q}{\|f\|_p}, \quad \text{where } 1 < p \le 2 \text{ and } \frac 1 p + \frac 1 q = 1.$$

In 1961, Babenko (K.I. Babenko, ''An inequality in the theory of Fourier integrals'', Izv. Akad. Nauk SSSR, Ser. Mat. 25 (1961) pp. 531–542; English transl., Amer. Math. Soc. Transl. (2) 44, pp. 115–128) found this norm for ''even'' integer values of ''q''. Finally, in 1975, using as ...

William E

William is a masculine given name of Norman French origin (Hanks, Hardcastle and Hodges, ''Oxford Dictionary of First Names'', Oxford University Press, 2nd edition, p. 276). It became very popular in the English language after the Norman conquest of England in 1066 (All Things William, "Meaning & Origin of the Name"), and remained so throughout the Middle Ages and into the modern era. It is sometimes abbreviated "Wm." Shortened familiar versions in English include Will, Wills, Willy, Willie, Liam, Bill, and Billy. A common Irish form is Liam. Scottish diminutives include Wull, Willie or Wullie (as in Oor Wullie or the play ''Douglas''). Female forms are Willa, Willemina, Wilma and Wilhelmina.

Etymology: William is related to the German given name ''Wilhelm''. Both ultimately descend from Proto-Germanic ''*Wiljahelmaz'', with a direct cognate also in the Old Norse name ''Vilhjalmr'' and a West Germanic borrowing into Medieval Latin ''Willelmus''. The Proto-Germa ...

Hausdorff–Young Inequality

The Hausdorff–Young inequality is a foundational result in the mathematical field of Fourier analysis. As a statement about Fourier series, it was discovered by William Henry Young and extended by Felix Hausdorff. It is now typically understood as a rather direct corollary of the Plancherel theorem, found in 1910, in combination with the Riesz–Thorin theorem, originally discovered by Marcel Riesz in 1927. With this machinery, it readily admits several generalizations, including to multidimensional Fourier series and to the Fourier transform on the real line, on Euclidean spaces, and on more general spaces. With these extensions, it is one of the best-known results of Fourier analysis, appearing in nearly every introductory graduate-level textbook on the subject. The nature of the Hausdorff–Young inequality can be understood with only Riemann integration and infinite series as prerequisites. Given a continuous function $f$ on the interval $(0,1)$, define its "Fourier coefficients" by $c_n = \int_0^1 e^{-2\pi i n x} f(x)\,dx$ for each integer $n$. The Hausdo ...
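The Fourier coefficients described above can be approximated numerically. The following is a minimal sketch, assuming the common sign convention $c_n = \int_0^1 e^{-2\pi i n x} f(x)\,dx$; the helper name `fourier_coefficient` and the midpoint-rule discretization are illustrative choices, not part of the source.

```python
import cmath


def fourier_coefficient(f, n, samples=2000):
    """Approximate c_n = integral over [0, 1] of exp(-2*pi*i*n*x) * f(x) dx.

    Uses the midpoint rule with `samples` equal subintervals (an assumed,
    illustrative discretization).
    """
    h = 1.0 / samples
    total = 0j
    for k in range(samples):
        x = (k + 0.5) * h  # midpoint of the k-th subinterval
        total += cmath.exp(-2j * cmath.pi * n * x) * f(x)
    return total * h


# Example: f(x) = x, whose coefficients are known in closed form:
# c_0 = 1/2 and c_n = i / (2*pi*n) for n != 0.
c0 = fourier_coefficient(lambda x: x, 0)
c1 = fourier_coefficient(lambda x: x, 1)
```

For the test function $f(x) = x$, integration by parts gives $c_0 = 1/2$ and $c_n = i/(2\pi n)$ for $n \ne 0$, which the numerical values should match closely.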

Uncertainty Principle

In quantum mechanics, the uncertainty principle (also known as Heisenberg's uncertainty principle) is any of a variety of mathematical inequalities asserting a fundamental limit to the accuracy with which the values for certain pairs of physical quantities of a particle, such as position, ''x'', and momentum, ''p'', can be predicted from initial conditions. Such variable pairs are known as complementary variables or canonically conjugate variables; and, depending on interpretation, the uncertainty principle limits to what extent such conjugate properties maintain their approximate meaning, as the mathematical framework of quantum physics does not support the notion of simultaneously well-defined conjugate properties expressed by a single value. The uncertainty principle implies that it is in general not possible to predict the value of a quantity with arbitrary certainty, even if all initial conditions are specified. Introduced first in 1927 by the German physicist Wern ...

Fourier Analysis

In mathematics, Fourier analysis is the study of the way general functions may be represented or approximated by sums of simpler trigonometric functions. Fourier analysis grew from the study of Fourier series, and is named after Joseph Fourier, who showed that representing a function as a sum of trigonometric functions greatly simplifies the study of heat transfer. The subject of Fourier analysis encompasses a vast spectrum of mathematics. In the sciences and engineering, the process of decomposing a function into oscillatory components is often called Fourier analysis, while the operation of rebuilding the function from these pieces is known as Fourier synthesis. For example, determining what component frequencies are present in a musical note would involve computing the Fourier transform of a sampled musical note. One could then re-synthesize the same sound by including the frequency components as revealed in the Fourier analysis. In mathematics, the term ''Fourier a ...

Lp Space

In mathematics, the $L^p$ spaces are function spaces defined using a natural generalization of the $p$-norm for finite-dimensional vector spaces. They are sometimes called Lebesgue spaces, named after Henri Lebesgue, although according to the Bourbaki group they were first introduced by Frigyes Riesz. $L^p$ spaces form an important class of Banach spaces in functional analysis, and of topological vector spaces. Because of their key role in the mathematical analysis of measure and probability spaces, Lebesgue spaces are used also in the theoretical discussion of problems in physics, statistics, economics, finance, engineering, and other disciplines.

Applications (Statistics): In statistics, measures of central tendency and statistical dispersion, such as the mean, median, and standard deviation, are defined in terms of $L^p$ metrics, and measures of central tendency can be characterized as solutions to variational problems. In penalized regression, "L1 penalty" and "L2 penalty" refer ...

Fourier Transform

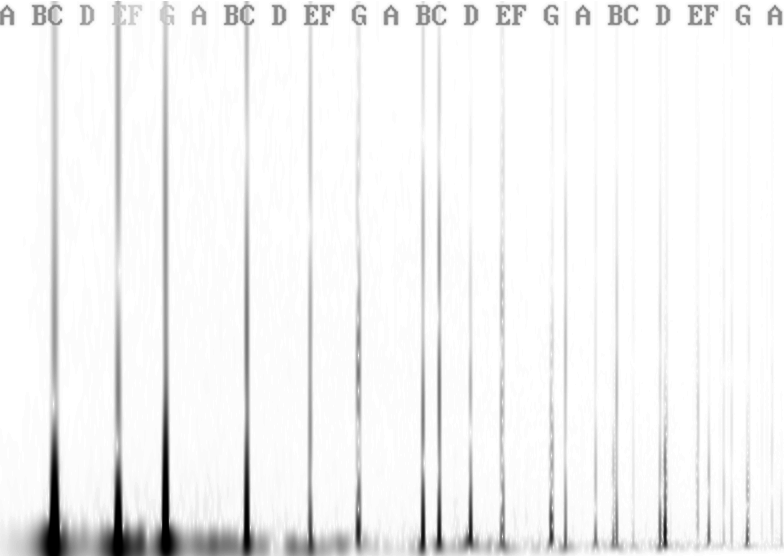

A Fourier transform (FT) is a mathematical transform that decomposes functions into frequency components, which are represented by the output of the transform as a function of frequency. Most commonly functions of time or space are transformed, which will output a function depending on temporal frequency or spatial frequency respectively. That process is also called ''analysis''. An example application would be decomposing the waveform of a musical chord into terms of the intensity of its constituent pitches. The term ''Fourier transform'' refers to both the frequency domain representation and the mathematical operation that associates the frequency domain representation to a function of space or time. The Fourier transform of a function is a complex-valued function representing the complex sinusoids that comprise the original function. For each frequency, the magnitude (absolute value) of the complex value represents the amplitude of a constituent complex sinusoid wi ...

Hermite Functions

In mathematics, the Hermite polynomials are a classical orthogonal polynomial sequence. The polynomials arise in:
* signal processing, as Hermitian wavelets for wavelet transform analysis;
* probability, such as the Edgeworth series, as well as in connection with Brownian motion;
* combinatorics, as an example of an Appell sequence, obeying the umbral calculus;
* numerical analysis, as Gaussian quadrature;
* physics, where they give rise to the eigenstates of the quantum harmonic oscillator; and they also occur in some cases of the heat equation (when the term $x u_x$ is present);
* systems theory, in connection with nonlinear operations on Gaussian noise;
* random matrix theory, in Gaussian ensembles.

Hermite polynomials were defined by Pierre-Simon Laplace in 1810, though in scarcely recognizable form, and studied in detail by Pafnuty Chebyshev in 1859. Chebyshev's work was overlooked, and they were named later after Charles Hermite, who wrote on the polynom ...

Eigenfunction

In mathematics, an eigenfunction of a linear operator ''D'' defined on some function space is any non-zero function ''f'' in that space that, when acted upon by ''D'', is only multiplied by some scaling factor called an eigenvalue. As an equation, this condition can be written as $Df = \lambda f$ for some scalar eigenvalue $\lambda$. The solutions to this equation may also be subject to boundary conditions that limit the allowable eigenvalues and eigenfunctions. An eigenfunction is a type of eigenvector.

Eigenfunctions: In general, an eigenvector of a linear operator ''D'' defined on some vector space is a nonzero vector in the domain of ''D'' that, when ''D'' acts upon it, is simply scaled by some scalar value called an eigenvalue. In the special case where ''D'' is defined on a function space, the eigenvectors are referred to as eigenfunctions. That is, a function ''f'' is an eigenfunction of ''D'' if it satisfies the equation $Df = \lambda f$, where $\lambda$ is a scalar. The solutions to Equation may a ...
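The eigenfunction condition can be checked numerically for a concrete operator. The sketch below assumes ''D'' is the derivative operator d/dx (an illustrative choice, not taken from the source) and tests the function f(x) = exp(3x), for which the ratio (Df)(x) / f(x) should equal the eigenvalue 3 at every point. The helper names `derivative` and `eigenvalue_estimate` are hypothetical.

```python
import math


def derivative(f, x, h=1e-5):
    """Central-difference approximation of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)


def eigenvalue_estimate(f, x):
    """Return (Df)(x) / f(x) for D = d/dx; constant in x if f is an eigenfunction."""
    return derivative(f, x) / f(x)


def f(x):
    # Candidate eigenfunction of d/dx with eigenvalue 3.
    return math.exp(3.0 * x)


# The ratio is evaluated at several points; all values should be close to 3.
estimates = [eigenvalue_estimate(f, x) for x in (-1.0, 0.0, 0.5)]
```

A function that is not an eigenfunction of d/dx, such as x squared, would give a ratio that changes with x.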

Bernoulli Trial

In the theory of probability and statistics, a Bernoulli trial (or binomial trial) is a random experiment with exactly two possible outcomes, "success" and "failure", in which the probability of success is the same every time the experiment is conducted. It is named after Jacob Bernoulli, a 17th-century Swiss mathematician, who analyzed them in his ''Ars Conjectandi'' (1713). The mathematical formalisation of the Bernoulli trial is known as the Bernoulli process. This article offers an elementary introduction to the concept, whereas the article on the Bernoulli process offers a more advanced treatment. Since a Bernoulli trial has only two possible outcomes, it can be framed as some "yes or no" question. For example:
* Is the top card of a shuffled deck an ace?
* Was the newborn child a girl? (See human sex ratio.)

Therefore, success and failure are merely labels for the two outcomes, and should not be construed literally. The term "success" in this sense consists in the res ...
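A Bernoulli trial is straightforward to simulate. The following is a minimal sketch using the shuffled-deck question above, assuming a standard 52-card deck with 4 aces so that the success probability is 4/52; the function names and the fixed seed are illustrative assumptions.

```python
import random


def bernoulli_trial(p, rng):
    """Run one trial: return True ("success") with probability p, else False."""
    return rng.random() < p


def success_frequency(p, trials, seed=0):
    """Repeat independent trials with the same p and return the fraction of successes."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    successes = sum(bernoulli_trial(p, rng) for _ in range(trials))
    return successes / trials


# "Is the top card of a shuffled deck an ace?" (assumed: 4 aces in 52 cards)
p_ace = 4 / 52
frequency = success_frequency(p_ace, trials=100_000)
```

Over many repetitions the observed frequency of successes should settle near the fixed success probability, here about 0.077.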

Elementary Symmetric Polynomials

In mathematics, specifically in commutative algebra, the elementary symmetric polynomials are one type of basic building block for symmetric polynomials, in the sense that any symmetric polynomial can be expressed as a polynomial in elementary symmetric polynomials. That is, any symmetric polynomial is given by an expression involving only additions and multiplication of constants and elementary symmetric polynomials. There is one elementary symmetric polynomial of degree $d$ in $n$ variables for each positive integer $d \le n$, and it is formed by adding together all distinct products of $d$ distinct variables.

Definition: The elementary symmetric polynomials in $n$ variables $X_1, \dots, X_n$, written $e_k(X_1, \dots, X_n)$ for $k = 1, \dots, n$, are defined by

$$\begin{aligned} e_1(X_1, X_2, \dots, X_n) &= \sum_{1 \le j \le n} X_j,\\ e_2(X_1, X_2, \dots, X_n) &= \sum_{1 \le j < k \le n} X_j X_k,\\ e_3(X_1, X_2, \dots, X_n) &= \sum_{1 \le j < k < l \le n} X_j X_k X_l, \end{aligned}$$

and so forth, ending with

$$e_n(X_1, X_2, \dots, X_n) = X_1 X_2 \cdots X_n.$$

In general, for $k \ge 0$ we define : e_k (X_1 , \ldots , X_n )=\sum_ ...
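The description "adding together all distinct products of distinct variables" translates directly into code. The sketch below evaluates an elementary symmetric polynomial at given numeric values by summing the products over all size-k subsets; the function name `elementary_symmetric` is an illustrative choice, and `math.prod` assumes Python 3.8 or later.

```python
from itertools import combinations
from math import prod


def elementary_symmetric(k, values):
    """Evaluate e_k at `values`: the sum of products over all k-element subsets.

    combinations() yields each subset of positions once, so repeated values
    are handled correctly. For k = 0 the only subset is empty and the result is 1.
    """
    return sum(prod(subset) for subset in combinations(values, k))


# Example with the values 1, 2, 3.
xs = [1, 2, 3]
e1 = elementary_symmetric(1, xs)  # 1 + 2 + 3
e2 = elementary_symmetric(2, xs)  # 1*2 + 1*3 + 2*3
e3 = elementary_symmetric(3, xs)  # 1*2*3
```

As a sanity check, these values are the coefficients (up to sign) of the polynomial with roots 1, 2, 3: (x - 1)(x - 2)(x - 3) = x^3 - 6x^2 + 11x - 6. This brute-force approach enumerates every subset, so it is only practical for small numbers of variables.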

Normal Probability Distribution

In statistics, a normal distribution or Gaussian distribution is a type of continuous probability distribution for a real-valued random variable. The general form of its probability density function is

$$f(x) = \frac{1}{\sigma \sqrt{2\pi}} e^{-\frac{1}{2}\left(\frac{x - \mu}{\sigma}\right)^2}.$$

The parameter $\mu$ is the mean or expectation of the distribution (and also its median and mode), while the parameter $\sigma$ is its standard deviation. The variance of the distribution is $\sigma^2$. A random variable with a Gaussian distribution is said to be normally distributed, and is called a normal deviate. Normal distributions are important in statistics and are often used in the natural and social sciences to represent real-valued random variables whose distributions are not known. Their importance is partly due to the central limit theorem. It states that, under some conditions, the average of many samples (observations) of a random variable with finite mean and variance is itself a random variable, whose distribution converges to a normal distrib ...
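The density can be evaluated directly from its parameters. The following is a minimal sketch of the standard normal density formula, with mean `mu` and standard deviation `sigma`; the function name `normal_pdf` and the crude midpoint-rule integration check are illustrative assumptions.

```python
import math


def normal_pdf(x, mu=0.0, sigma=1.0):
    """Density of the normal distribution with mean mu and standard deviation sigma."""
    z = (x - mu) / sigma  # standardized distance from the mean
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def integrate_pdf(mu, sigma, half_width=8.0, steps=16_000):
    """Midpoint-rule integral of the density over mu +/- half_width * sigma."""
    a = mu - half_width * sigma
    h = 2.0 * half_width * sigma / steps
    return sum(normal_pdf(a + (i + 0.5) * h, mu, sigma) for i in range(steps)) * h


peak = normal_pdf(0.0)          # maximum of the standard normal density, at the mean
area = integrate_pdf(2.0, 0.5)  # total probability, should be close to 1
```

The density is symmetric about the mean, peaks there with value 1 / (sigma * sqrt(2 * pi)), and integrates to 1 for any valid choice of parameters.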

Hermite Polynomials

In mathematics, the Hermite polynomials are a classical orthogonal polynomial sequence. The polynomials arise in:
* signal processing, as Hermitian wavelets for wavelet transform analysis;
* probability, such as the Edgeworth series, as well as in connection with Brownian motion;
* combinatorics, as an example of an Appell sequence, obeying the umbral calculus;
* numerical analysis, as Gaussian quadrature;
* physics, where they give rise to the eigenstates of the quantum harmonic oscillator; and they also occur in some cases of the heat equation (when the term $x u_x$ is present);
* systems theory, in connection with nonlinear operations on Gaussian noise;
* random matrix theory, in Gaussian ensembles.

Hermite polynomials were defined by Pierre-Simon Laplace in 1810, though in scarcely recognizable form, and studied in detail by Pafnuty Chebyshev in 1859. Chebyshev's work was overlooked, and they were named later after Charles Hermite, who wrote on the polynomials in ...
