Definition

Notions of temporal envelope and temporal fine structure may have different meanings in many studies. An important distinction to make is between the physical (i.e., acoustical) and the biological (or perceptual) description of these ENV and TFS cues.

Any sound whose frequency components cover a narrow range (called a narrowband signal) can be considered as an envelope (ENVp, where p denotes the physical signal) superimposed on a more rapidly oscillating carrier, the temporal fine structure (TFSp).
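
As an illustration of this decomposition, the following minimal sketch builds a sinusoidally amplitude-modulated tone as the product of a slow envelope and a fast carrier. The function name `am_tone` and all parameter values (1 kHz carrier, 8 Hz modulation, 16 kHz sampling rate) are assumptions chosen for the example, not values taken from a particular study.

```python
import math

def am_tone(fc_hz, fm_hz, depth, fs_hz, duration_s):
    """Return (envelope, carrier, signal) sample lists for a sinusoidally AM tone.

    envelope: slow fluctuation (plays the role of ENVp)
    carrier:  rapid oscillation (plays the role of TFSp)
    signal:   their sample-by-sample product
    """
    n = int(round(fs_hz * duration_s))
    envelope, carrier, signal = [], [], []
    for i in range(n):
        t = i / fs_hz
        env = 1.0 + depth * math.sin(2.0 * math.pi * fm_hz * t)
        tfs = math.sin(2.0 * math.pi * fc_hz * t)
        envelope.append(env)
        carrier.append(tfs)
        signal.append(env * tfs)
    return envelope, carrier, signal

# Hypothetical example values: 1 kHz carrier, 8 Hz modulation, 50% depth.
envelope, carrier, signal = am_tone(
    fc_hz=1000.0, fm_hz=8.0, depth=0.5, fs_hz=16000.0, duration_s=0.5
)
```

Because the carrier never exceeds 1 in magnitude, the waveform always stays within the bounds set by the envelope, which is the sense in which the envelope is "superimposed" on the carrier.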

Many sounds in everyday life, including speech and music, are broadband; the frequency components spread over a wide range and there is no well-defined way to represent the signal in terms of ENVp and TFSp. However, in a normally functioning

Temporal envelope (ENV) processing

Neurophysiological aspects

The neural representation of stimulus envelope, ENVn, has typically been studied using well-controlled ENVp modulations, that is sinusoidally amplitude-modulated (AM) sounds. Cochlear filtering limits the range of AM rates encoded in individual auditory-nerve fibers. In the auditory nerve, the strength of the neural representation of AM decreases with increasing modulation rate. At the level of the

Psychoacoustical aspects

The perception of ENVp depends on which AM rates are contained in the signal. Low rates of AM, in the 1–8 Hz range, are perceived as changes in intensity, that is, loudness fluctuations (a percept that can also be evoked by frequency modulation, FM); at higher rates, AM is perceived as roughness, with the greatest roughness sensation occurring at around 70 Hz; at even higher rates, AM can evoke a weak pitch percept corresponding to the modulation rate. Rainstorms, crackling fire, chirping crickets or galloping horses produce "sound textures", the collective result of many similar acoustic events, whose perception is mediated by ENVn statistics.

The auditory detection threshold for AM as a function of AM rate, referred to as the temporal modulation transfer function (TMTF), is best for AM rates in the range from 4 to 150 Hz and worsens outside that range. The cutoff frequency of the TMTF gives an estimate of temporal acuity (temporal resolution) for the auditory system. This cutoff frequency corresponds to a time constant of about 1–3 ms for the auditory system of normal-hearing humans.

Correlated envelope fluctuations across frequency in a masker can aid detection of a pure tone signal, an effect known as comodulation masking release. AM applied to a given carrier can perceptually interfere with the detection of a target AM imposed on the same carrier, an effect termed modulation masking. Modulation-masking patterns are tuned (greater masking occurs for masking and target AMs close in modulation rate), suggesting that the human auditory system is equipped with frequency-selective channels for AM. Moreover, AM applied to spectrally remote carriers can perceptually interfere with the detection of AM on a target sound, an effect termed modulation detection interference. The notion of modulation channels is also supported by the demonstration of selective adaptation effects in the modulation domain. These studies show that AM detection thresholds are selectively elevated above pre-exposure thresholds when the carrier frequency and the AM rate of the adaptor are similar to those of the test tone.

Human listeners are sensitive to relatively slow "second-order" AM cues, which correspond to fluctuations in the strength of AM. These cues arise from the interaction of different modulation rates, previously described as "beating" in the envelope-frequency domain. Perception of second-order AM has been interpreted as resulting from nonlinear mechanisms in the auditory pathway that produce an audible distortion component at the envelope beat frequency in the internal modulation spectrum of the sounds. Interaural time differences in the envelope provide binaural cues even at high frequencies where TFSn cannot be used.

Models of normal envelope processing

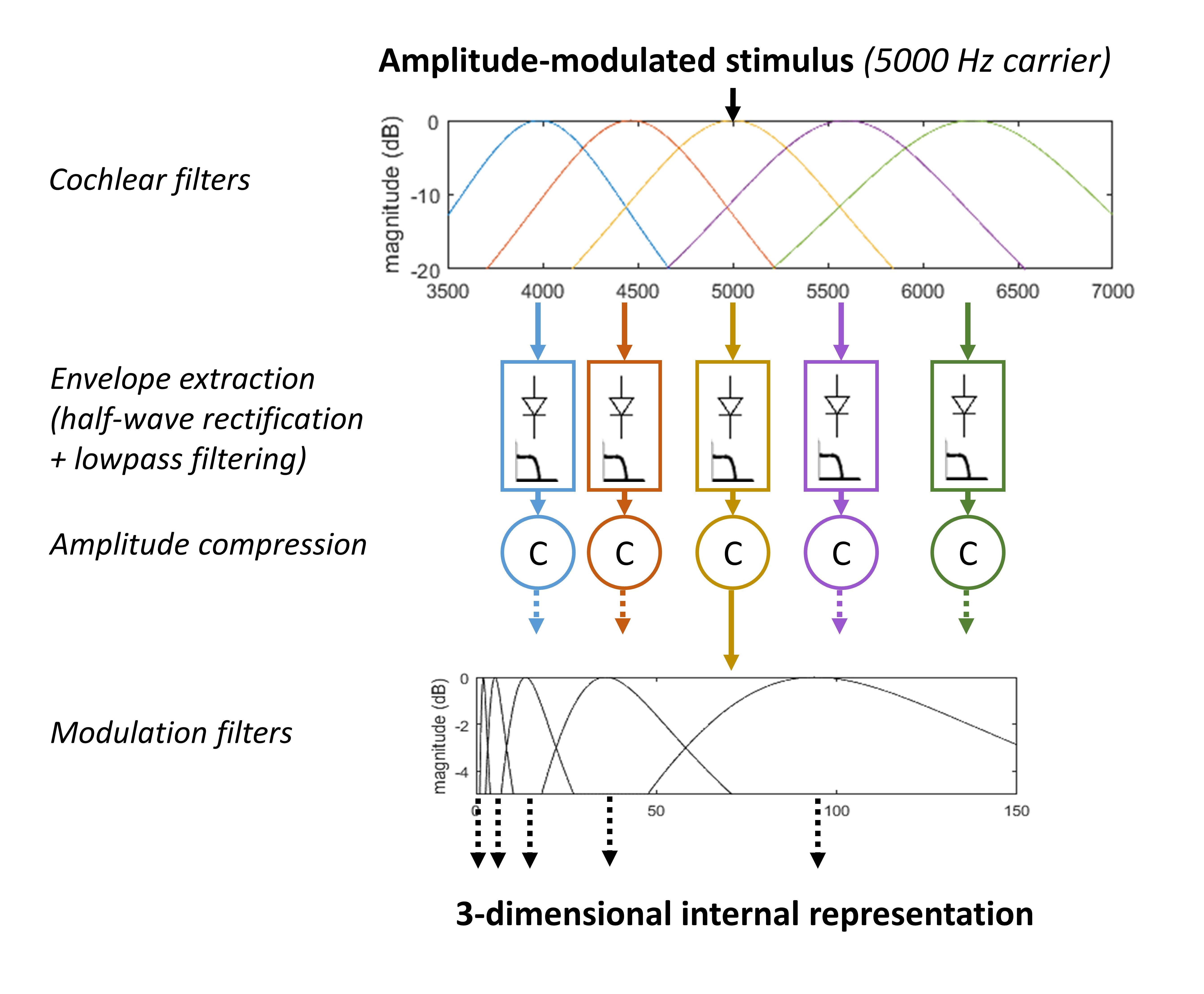

The most basic computer model of ENV processing is the leaky integrator model. This model extracts the temporal envelope of the sound (ENVp) via bandpass filtering, half-wave rectification (which may be followed by fast-acting amplitude compression), and lowpass filtering with a cutoff frequency between about 60 and 150 Hz. The leaky integrator is often used with a decision statistic based on either the resulting envelope power, the max/min ratio, or the crest factor. This model accounts for the loss of auditory sensitivity for AM rates higher than about 60–150 Hz for broadband noise carriers. Based on the concept of frequency selectivity for AM, the perception model of Torsten Dau incorporates broadly tuned bandpass modulation filters (with a Q value around 1) to account for data from a broad variety of psychoacoustic tasks, particularly AM detection for noise carriers with different bandwidths, taking into account their intrinsic envelope fluctuations. This model has been extended to account for comodulation masking release (see sections above). The shapes of the modulation filters have been estimated, and an “envelope power spectrum model” (EPSM) based on these filters can account for AM masking patterns and AM depth discrimination. The EPSM has been extended to the prediction of speech intelligibility and to account for data from a broad variety of psychoacoustic tasks. A physiologically based processing model simulating brainstem responses has also been developed to account for AM detection and AM masking patterns.
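
The leaky integrator stages described above can be sketched as follows. This is a simplified illustration under stated assumptions, not a published implementation: the bandpass stage is omitted (the input is assumed to be the output of a single auditory filter), the lowpass filter is assumed to be a first-order (one-pole) filter, and the function names and parameter values are hypothetical.

```python
import math

def time_constant_s(cutoff_hz):
    """Time constant of a first-order lowpass filter with the given cutoff."""
    return 1.0 / (2.0 * math.pi * cutoff_hz)

def leaky_integrator_envelope(signal, fs_hz, cutoff_hz=100.0):
    """Half-wave rectify, then smooth with a one-pole lowpass filter."""
    alpha = 1.0 - math.exp(-1.0 / (fs_hz * time_constant_s(cutoff_hz)))
    out, y = [], 0.0
    for x in signal:
        rectified = x if x > 0.0 else 0.0   # half-wave rectification
        y += alpha * (rectified - y)        # leaky integration
        out.append(y)
    return out

def max_min_ratio(env):
    """Decision statistic: ratio of envelope maximum to minimum."""
    return max(env) / min(env)

def crest_factor(env):
    """Decision statistic: envelope peak divided by its RMS value."""
    rms = math.sqrt(sum(v * v for v in env) / len(env))
    return max(env) / rms

def sam_tone(fc_hz, fm_hz, depth, fs_hz, duration_s):
    """Sinusoidally amplitude-modulated tone (hypothetical test stimulus)."""
    n = int(round(fs_hz * duration_s))
    return [
        (1.0 + depth * math.sin(2.0 * math.pi * fm_hz * i / fs_hz))
        * math.sin(2.0 * math.pi * fc_hz * i / fs_hz)
        for i in range(n)
    ]

fs = 16000.0
skip = int(0.1 * fs)  # discard the filter's onset transient
env_mod = leaky_integrator_envelope(sam_tone(2000.0, 8.0, 0.8, fs, 1.0), fs)[skip:]
env_steady = leaky_integrator_envelope(sam_tone(2000.0, 8.0, 0.0, fs, 1.0), fs)[skip:]
```

Under the first-order assumption, cutoff frequencies of 60 and 150 Hz correspond to time constants of roughly 2.7 ms and 1.1 ms. Both decision statistics are larger for the modulated tone than for the unmodulated one, which is what allows a detector built on them to signal the presence of AM.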

Temporal fine structure (TFS) processing

Neurophysiological aspects

The neural representation of temporal fine structure, TFSn, has been studied using stimuli with well-controlled TFSp: pure tones, harmonic complex tones, and

Psychoacoustical aspects

It is often assumed that many perceptual capacities rely on the ability of the monaural and binaural auditory system to encode and use TFSn cues evoked by components in sounds with frequencies below about 1–4 kHz. These capacities include discrimination of frequency, discrimination of the fundamental frequency of harmonic sounds, detection of FM at rates below 5 Hz, melody recognition for sequences of pure tones and complex tones, lateralization and localization of pure tones and complex tones, and segregation of concurrent harmonic sounds (such as speech sounds). It appears that TFSn cues require correct

Models of normal processing: limitations

The separation of a sound into ENVp and TFSp appears inspired partly by how sounds are synthesized and by the availability of a convenient way to separate an existing sound into ENV and TFS, namely the

Role in speech and music perception

Role of temporal envelope in speech and music perception

The ENVp plays a critical role in many aspects of auditory perception, including in the perception of speech and music. Speech recognition is possible using cues related to the ENVp, even in situations where the original spectral information and TFSp are highly degraded. Indeed, when the spectrally local TFSp from one sentence is combined with the ENVp from a second sentence, only the words of the second sentence are heard. The ENVp rates most important for speech are those below about 16 Hz, corresponding to fluctuations at the rate of syllables. On the other hand, the

Role of TFS in speech and music perception

The ability to accurately process TFSp information is thought to play a role in our perception of pitch (i.e., the perceived height of sounds), an important sensation for music perception, as well as our ability to understand speech, especially in the presence of background noise.

Role of TFS in pitch perception

Although pitch retrieval mechanisms in the auditory system are still a matter of debate, TFSn information may be used to retrieve the pitch of low-frequency pure tones and estimate the individual frequencies of the low-numbered (ca. 1st–8th) harmonics of a complex sound, frequencies from which the fundamental frequency of the sound can be retrieved according to, e.g., pattern-matching models of pitch perception. A role of TFSn information in pitch perception of complex sounds containing intermediate harmonics (ca. 7th–16th) has also been suggested and may be accounted for by temporal or spectrotemporal models of pitch perception. The degraded TFSn cues conveyed by cochlear implant devices may also be partly responsible for impaired music perception of cochlear implant recipients.

Role of TFS cues in speech perception

TFSp cues are thought to be important for the identification of speakers and for tone identification in

Role in environmental sound perception

Environmental sounds can be broadly defined as nonspeech and nonmusical sounds in the listener's environment that can convey meaningful information about surrounding objects and events. Environmental sounds are highly heterogeneous in terms of their acoustic characteristics and source types, and may include human and animal vocalizations, water- and weather-related events, and mechanical and electronic signaling sounds. Given the great variety of sound sources that give rise to environmental sounds, both ENVp and TFSp play an important role in their perception. However, the relative contributions of ENVp and TFSp can differ considerably for specific environmental sounds. This is reflected in the variety of acoustic measures that correlate with different perceptual characteristics of objects and events.

Early studies highlighted the importance of envelope-based temporal patterning in the perception of environmental events. For instance, Warren & Verbrugge demonstrated that constructed sounds of a glass bottle dropped on the floor were perceived as bouncing when high-energy regions in four different frequency bands were temporally aligned, producing amplitude peaks in the envelope. In contrast, when the same spectral energy was distributed randomly across bands, the sounds were heard as breaking. More recent studies using vocoder simulations of cochlear implant processing demonstrated that many temporally patterned sounds can be perceived with little original spectral information, based primarily on temporal cues. Sounds such as footsteps, a galloping horse, a flying helicopter, ping-pong playing, clapping and typing were identified with a high accuracy of 70% or more with a single channel of envelope-modulated broadband noise or with only two frequency channels. In these studies, envelope-based acoustic measures such as the number of bursts and peaks in the envelope were predictive of listeners’ abilities to identify sounds based primarily on ENVp cues.

On the other hand, identification of brief environmental sounds without strong temporal patterning in ENVp may require a much larger number of frequency channels. Sounds such as a car horn or a train whistle were poorly identified even with as many as 32 frequency channels. Listeners with cochlear implants, which transmit envelope information for specific frequency bands but do not transmit TFSp, have considerably reduced abilities in the identification of common environmental sounds. In addition, individual environmental sounds are typically heard within the context of larger auditory scenes where sounds from multiple sources may overlap in time and frequency. When heard within an auditory scene, accurate identification of individual environmental sounds is contingent on the ability to segregate them from other sound sources or auditory streams in the auditory scene, which involves further reliance on ENVp and TFSp cues (see Role in auditory scene analysis).

Role in auditory scene analysis

Effects of age and hearing loss on temporal envelope processing

Developmental aspects

In infancy, behavioral AM detection thresholds and forward or backward masking thresholds observed in 3-month-olds are similar to those observed in adults. Electrophysiological studies conducted in 1-month-old infants using 2000 Hz AM pure tones indicate some immaturity in the envelope following response (EFR). Although sleeping infants and sedated adults show the same effect of modulation rate on EFR, infants’ estimates were generally poorer than adults’. This is consistent with behavioral studies conducted with school-age children showing differences in AM detection thresholds compared to adults. Children systematically show worse AM detection thresholds than adults until 10–11 years. However, the shape of the TMTF (the cutoff) is already similar to that of adults in 5-year-old children. Sensory versus non-sensory factors for this long maturation are still debated, but the results generally appear to be more dependent on the task or on sound complexity for infants and children than for adults. Regarding the development of speech ENVp processing, vocoder studies suggest that infants as young as 3 months are able to discriminate a change in consonants when the faster ENVp information of the syllables is preserved (< 256 Hz) but less so when only the slowest ENVp is available (< 8 Hz). Older children of 5 years show abilities similar to those of adults in discriminating consonant changes based on ENVp cues (< 64 Hz).

Neurophysiological aspects

The effects of hearing loss and age on neural coding are generally believed to be smaller for slowly varying envelope responses (i.e., ENVn) than for rapidly varying temporal fine structure (i.e., TFSn). Enhanced ENVn coding following noise-induced hearing loss has been observed in peripheral auditory responses from single neurons and in central evoked responses from the auditory midbrain. The enhancement in ENVn coding of narrowband sounds occurs across the full range of modulation frequencies encoded by single neurons. For broadband sounds, the range of modulation frequencies encoded in impaired responses is broader than normal (extending to higher frequencies), as expected from the reduced frequency selectivity associated with outer-hair-cell dysfunction. The enhancement observed in neural envelope responses is consistent with enhanced auditory perception of modulations following cochlear damage, which is commonly believed to result from the loss of cochlear compression that occurs with outer-hair-cell dysfunction due to age or noise overexposure. However, the influence of inner-hair-cell dysfunction (e.g., shallower response growth for mild-moderate damage and steeper growth for severe damage) can confound the effects of outer-hair-cell dysfunction on overall response growth and thus ENVn coding. Thus, not surprisingly, modeling predicts that the relative effects of outer-hair-cell and inner-hair-cell dysfunction create individual differences in speech intelligibility, based on the strength of envelope coding of speech relative to noise.

Psychoacoustical aspects

For sinusoidal carriers, which have no intrinsic envelope (ENVp) fluctuations, the TMTF is roughly flat for AM rates from 10 to 120 Hz, but increases (i.e., threshold worsens) for higher AM rates, provided that spectral sidebands are not audible. The shape of the TMTF for sinusoidal carriers is similar for young and older people with normal audiometric thresholds, but older people tend to have higher detection thresholds overall, suggesting poorer “detection efficiency” for ENVn cues in older people. Provided that the carrier is fully audible, the ability to detect AM is usually not adversely affected by cochlear hearing loss and may sometimes be better than normal, for both noise carriers and sinusoidal carriers, perhaps because loudness recruitment (an abnormally rapid growth of loudness with increasing sound level) “magnifies” the perceived amount of AM (i.e., ENVn cues). Consistent with this, when the AM is clearly audible, a sound with a fixed AM depth appears to fluctuate more for an impaired ear than for a normal ear. However, the ability to detect changes in AM depth can be impaired by cochlear hearing loss. Speech processed with a noise vocoder, such that mainly envelope information is delivered in multiple spectral channels, has also been used to investigate envelope processing in hearing impairment. Here, hearing-impaired individuals could not make use of such envelope information as well as normal-hearing individuals, even after audibility factors were taken into account. Additional experiments suggest that age negatively affects the binaural processing of ENVp, at least at low audio frequencies.

Models of impaired temporal envelope processing

The perception model of ENV processing that incorporates selective (bandpass) AM filters accounts for many perceptual consequences of cochlear dysfunction, including enhanced sensitivity to AM for sinusoidal and noise carriers, abnormal forward masking (the rate of recovery from forward masking being generally slower than normal for impaired listeners), stronger interference effects between AM and FM, and enhanced temporal integration of AM. The model of Torsten Dau has been extended to account for the discrimination of complex AM patterns by hearing-impaired individuals and the effects of noise-reduction systems. The performance of the hearing-impaired individuals was best captured when the model combined the loss of peripheral amplitude compression resulting from the loss of the active mechanism in the cochlea with an increase in internal noise in the ENVn domain. Phenomenological models simulating the response of the peripheral auditory system showed that impaired AM sensitivity in individuals experiencing chronic tinnitus with clinically normal audiograms could be predicted by a substantial loss of auditory-nerve fibers with low spontaneous rates and some loss of auditory-nerve fibers with high spontaneous rates.

Effects of age and hearing loss on TFS processing

Developmental aspects

Very few studies have systematically assessed TFS processing in infants and children. The frequency-following response (FFR), thought to reflect phase-locked neural activity, appears to be adult-like in 1-month-old infants when using a pure tone (centered at 500, 1000 or 2000 Hz) modulated at 80 Hz with 100% modulation depth. As for behavioral data, six-month-old infants require larger frequency transitions than adults to detect an FM change in a 1-kHz tone. However, 4-month-old infants are able to discriminate two different FM sweeps, and they are more sensitive to FM cues swept from 150 Hz to 550 Hz than at lower frequencies. In school-age children, performance in detecting FM change improves between 6 and 10 years, and sensitivity to low modulation rate (2 Hz) is poor until 9 years. For speech sounds, only one vocoder study has explored the ability of school-age children to rely on TFSp cues to detect consonant changes, showing the same abilities in 5-year-olds as in adults.

Neurophysiological aspects

Psychophysical studies have suggested that degraded TFS processing due to age and hearing loss may underlie some suprathreshold deficits, such as speech perception; however, debate remains about the underlying neural correlates. The strength of phase locking to the temporal fine structure of signals (TFSn) in quiet listening conditions remains normal in peripheral single-neuron responses following cochlear hearing loss. Although these data suggest that the fundamental ability of auditory-nerve fibers to follow the rapid fluctuations of sound remains intact following cochlear hearing loss, deficits in phase locking strength do emerge in background noise. This finding, which is consistent with the common observation that listeners with cochlear hearing loss have more difficulty in noisy conditions, results from reduced cochlear frequency selectivity associated with outer-hair-cell dysfunction. Although only limited effects of age and hearing loss have been observed in terms of TFSn coding strength of narrowband sounds, more dramatic deficits have been observed in TFSn coding quality in response to broadband sounds, which are more relevant for everyday listening. A dramatic loss of tonotopicity can occur following noise-induced hearing loss, where auditory-nerve fibers that should be responding to mid frequencies (e.g., 2–4 kHz) have dominant TFS responses to lower frequencies (e.g., 700 Hz). Notably, the loss of tonotopicity generally occurs only for TFSn coding but not for ENVn coding, which is consistent with greater perceptual deficits in TFS processing. This tonotopic degradation is likely to have important implications for speech perception, and can account for degraded coding of vowels following noise-induced hearing loss in which most of the cochlea responds to only the first formant, eliminating the normal tonotopic representation of the second and third formants.

Psychoacoustical aspects

Several psychophysical studies have shown that older people with normal hearing and people with sensorineural hearing loss often show impaired performance for auditory tasks that are assumed to rely on the ability of the monaural and binaural auditory system to encode and use TFSn cues, such as: discrimination of sound frequency, discrimination of the fundamental frequency of harmonic sounds, detection of FM at rates below 5 Hz, melody recognition for sequences of pure tones and complex sounds, lateralization and localization of pure tones and complex tones, and segregation of concurrent harmonic sounds (such as speech sounds). However, it remains unclear to what extent deficits associated with hearing loss reflect poorer TFSn processing or reduced cochlear frequency selectivity.

Models of impaired processing

The quality of the representation of a sound in the auditory nerve is limited by refractoriness, adaptation, saturation, and reduced synchronization (phase locking) at high frequencies, as well as by the stochastic nature of action potentials. However, the auditory nerve contains thousands of fibers. Hence, despite these limiting factors, the properties of sounds are reasonably well represented in the population nerve response over a wide range of levels and audio frequencies (see

Transmission by hearing aids and cochlear implants

Temporal envelope transmission

Individuals with cochlear hearing loss usually have a smaller than normal dynamic range between the level of the weakest detectable sound and the level at which sounds become uncomfortably loud. To compress the large range of sound levels encountered in everyday life into the small

Temporal fine structure transmission

Hearing aids usually process sounds by filtering them into multiple frequency channels and applying AGC in each channel. Other signal processing in hearing aids, such as noise reduction, also involves filtering the input into multiple channels. The filtering into channels can affect the TFSp of sounds depending on characteristics such as the phase response and group delay of the filters. However, such effects are usually small. Cochlear implants also filter the input signal into frequency channels. Usually, the ENVp of the signal in each channel is transmitted to the implanted electrodes in the form of electrical pulses of fixed rate that are modulated in amplitude or duration. Information about TFSp is discarded. This is justified by the observation that people with cochlear implants have a very limited ability to process TFSp information, even if it is transmitted to the electrodes, perhaps because of a mismatch between the temporal information and the place in the cochlea to which it is delivered. Reducing this mismatch may improve the ability to use TFSp information and hence lead to better pitch perception. Some cochlear implant systems transmit information about TFSp in the channels of the cochlear implants that are tuned to low audio frequencies, and this may improve the pitch perception of low-frequency sounds.

Training effects and plasticity of temporal-envelope processing

Perceptual learning resulting from training has been reported for various auditory AM detection or discrimination tasks, suggesting that the responses of central auditory neurons to ENVp cues are plastic and that practice may modify the circuitry of ENVn processing. The plasticity of ENVn processing has been demonstrated in several ways. For instance, the ability of auditory-cortex neurons to discriminate voice-onset time cues for phonemes is degraded following moderate hearing loss (20–40 dB HL) induced by acoustic trauma. Interestingly, developmental hearing loss reduces cortical responses to slow, but not fast (100 Hz), AM stimuli, in parallel with behavioral performance. Indeed, a transient hearing loss (15 days) occurring during the "critical period" is sufficient to elevate AM thresholds in adult gerbils. Even non-traumatic noise exposure reduces the phase-locking ability of cortical neurons as well as the animals' behavioral capacity to discriminate between different AM sounds. Behavioral training or pairing protocols involving neuromodulators also alter the ability of cortical neurons to phase lock to AM sounds. In humans, hearing loss may result in an unbalanced representation of speech cues: ENVn cues are enhanced at the cost of TFSn cues (see: Effects of age and hearing loss on temporal envelope processing). Auditory training may reduce the representation of speech ENVn cues for elderly listeners with hearing loss, who may then reach levels comparable to those observed for normal-hearing elderly listeners. Lastly, intensive musical training is associated with both behavioral effects, such as higher sensitivity to pitch variations (for Mandarin linguistic pitch), and a better synchronization of brainstem responses to the f0-contour of lexical tones in musicians compared with non-musicians.

Clinical evaluation of TFS sensitivity

Fast, easy-to-administer psychophysical tests have been developed to assist clinicians in the screening of TFS-processing abilities and the diagnosis of suprathreshold temporal auditory processing deficits associated with cochlear damage and ageing. These tests may also be useful for audiologists and hearing-aid manufacturers to explain and/or predict the outcome of hearing-aid fitting in terms of perceived quality, speech intelligibility or spatial hearing. These tests may eventually be used to recommend the most appropriate compression speed in hearing aids or the use of directional microphones. The need for such tests is corroborated by strong correlations between slow-FM or spectro-temporal modulation detection thresholds and aided speech intelligibility in competing backgrounds for hearing-impaired persons. Clinical tests can be divided into two groups: those assessing monaural TFS processing capacities (TFS1 test) and those assessing binaural capacities (binaural pitch, TFS-LF, TFS-AF). TFS1: this test assesses the ability to discriminate between a harmonic complex tone and its frequency-transposed (and thus inharmonic) version. Binaural pitch: these tests evaluate the ability to detect and discriminate binaural pitch, and melody recognition using different types of binaural pitch. TFS-LF: this test assesses the ability to discriminate low-frequency pure tones that are identical at the two ears from the same tones differing in interaural phase. TFS-AF: this test assesses the highest audio frequency of a pure tone up to which a change in interaural phase can be discriminated.

Objective measures using envelope and TFS cues

Signal distortion, additive noise, reverberation, and audio processing strategies such as noise suppression and dynamic-range compression can all impact speech intelligibility and speech and music quality. These changes in the perception of the signal can often be predicted by measuring the associated changes in the signal envelope and/or temporal fine structure (TFS). Objective measures of the signal changes, when combined with procedures that associate the signal changes with differences in auditory perception, give rise to auditory performance metrics for predicting speech intelligibility and speech quality.

Changes in the TFS can be estimated by passing the signals through a filterbank and computing the coherence between the system input and output in each band. Intelligibility predicted from the coherence is accurate for some forms of additive noise and nonlinear distortion, but works poorly for ideal binary mask (IBM) noise suppression. Speech and music quality for signals subjected to noise and clipping distortion has also been modeled using the coherence or using the coherence averaged across short signal segments.

Changes in the signal envelope can be measured using several different procedures. The presence of noise or reverberation will reduce the modulation depth of a signal, and multiband measurement of the envelope modulation depth of the system output is used in the speech transmission index (STI) to estimate intelligibility. While accurate for noise and reverberation applications, the STI works poorly for nonlinear processing such as dynamic-range compression. An extension to the STI estimates the change in modulation by cross-correlating the envelopes of the speech input and output signals. A related procedure, also using envelope cross-correlations, is the short-time objective intelligibility (STOI) measure, which works well for its intended application in evaluating noise suppression, but which is less accurate for nonlinear distortion. Envelope-based intelligibility metrics have also been derived using modulation filterbanks and using envelope time-frequency modulation patterns. Envelope cross-correlation is also used for estimating speech and music quality.

Envelope and TFS measurements can also be combined to form intelligibility and quality metrics. A family of metrics for speech intelligibility, speech quality, and music quality has been derived using a shared model of the auditory periphery that can represent hearing loss. Using a model of the impaired periphery leads to more accurate predictions for hearing-impaired listeners than using a normal-hearing model, and the combined envelope/TFS metric is generally more accurate than a metric that uses envelope modulation alone.
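
Two of the envelope-based quantities mentioned above, modulation depth and envelope cross-correlation, can be illustrated with a toy example. The sketch below is not the STI or STOI procedure itself: it uses a single band, a synthetic 4 Hz intensity envelope, steady noise modeled as a constant added intensity, and peak clipping as a stand-in for nonlinear distortion. All function names and numerical values are assumptions made for the illustration.

```python
import math

def modulation_depth(env):
    """Modulation depth estimated as (max - min) / (max + min)."""
    return (max(env) - min(env)) / (max(env) + min(env))

def envelope_correlation(env_in, env_out):
    """Pearson correlation between an input and an output envelope."""
    n = len(env_in)
    mean_in = sum(env_in) / n
    mean_out = sum(env_out) / n
    cov = sum((a - mean_in) * (b - mean_out) for a, b in zip(env_in, env_out))
    var_in = sum((a - mean_in) ** 2 for a in env_in)
    var_out = sum((b - mean_out) ** 2 for b in env_out)
    return cov / math.sqrt(var_in * var_out)

# Clean intensity envelope: 4 Hz modulation, depth 0.8, sampled at 800 Hz for 1 s.
fs, fm, depth = 800, 4.0, 0.8
clean = [1.0 + depth * math.sin(2.0 * math.pi * fm * i / fs) for i in range(fs)]

# Steady noise at 0 dB SNR adds a constant intensity equal to the mean signal
# intensity, which reduces the modulation depth without changing its shape.
noise_intensity = 1.0
noisy = [v + noise_intensity for v in clean]

# Peak clipping changes the shape of the envelope, lowering the correlation.
clipped = [min(v, 1.2) for v in clean]

depth_clean = modulation_depth(clean)
depth_noisy = modulation_depth(noisy)
corr_clipped = envelope_correlation(clean, clipped)
```

In this example the steady noise halves the modulation depth, in line with the modulation reduction factor 1 / (1 + 10^(-SNR/10)) used for additive noise in the STI framework, while the clipped envelope remains highly but not perfectly correlated with the clean one.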
