Simulation-based optimization

Simulation-based optimization (also known as simply simulation optimization) integrates optimization techniques into simulation modeling and analysis (Gosavi, Simulation-Based Optimization: Parametric Optimization Techniques and Reinforcement Learning, Springer, 2nd Edition, 2015). Because of the complexity of the simulation, the objective function may become difficult and expensive to evaluate. Usually, the underlying simulation model is stochastic, so that the objective function must be estimated using statistical estimation techniques (called output analysis in simulation methodology).
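As a minimal sketch of what estimating a stochastic objective can look like, the snippet below averages independent replications of a simulation and reports an approximate 95% confidence half-width. The function `simulate_cost`, its quadratic mean, and the noise level are hypothetical stand-ins for a real simulation model, not part of any particular library.

```python
import math
import random
import statistics


def simulate_cost(x, rng):
    """Hypothetical stochastic simulation (assumed for illustration).

    The true expected cost is (x - 3)^2; each run adds Gaussian noise.
    """
    return (x - 3.0) ** 2 + rng.gauss(0.0, 1.0)


def estimate_objective(x, n_reps=1000, seed=0):
    """Estimate the expected cost at x from n_reps independent replications.

    Returns the sample mean and the half-width of an approximate 95%
    confidence interval based on the normal approximation.
    """
    rng = random.Random(seed)
    samples = [simulate_cost(x, rng) for _ in range(n_reps)]
    mean = statistics.fmean(samples)
    half_width = 1.96 * statistics.stdev(samples) / math.sqrt(n_reps)
    return mean, half_width
```

Under these assumptions, `estimate_objective(2.0)` should return an estimate close to 1.0, the assumed true mean at that point. The half-width shrinks only with the square root of the number of replications, which is one reason the objective is expensive to evaluate.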

Once a system is mathematically modeled, computer-based simulations provide information about its behavior. Parametric simulation methods can be used to improve the performance of a system. In this method, each input variable is varied in turn while the other parameters are held constant, and the effect on the design objective is observed. This is time-consuming and improves performance only partially. To obtain the optimal solution with minimal computation and time, the problem is solved iteratively, with each iteration moving the solution closer to the optimum. Such methods are known as "numerical optimization" or "simulation-based optimization", or as "simulation-based multi-objective optimization" when more than one objective is involved.
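The parametric approach described above can be sketched as a one-variable-at-a-time sweep. The model `design_objective`, the parameter names `speed` and `buffer`, and the grids are all hypothetical and chosen only for illustration.

```python
def one_at_a_time_sweep(model, baseline, grids):
    """Vary each input over its grid while holding the others at baseline."""
    results = {}
    for name, values in grids.items():
        results[name] = []
        for value in values:
            trial = dict(baseline, **{name: value})  # change one input only
            results[name].append((value, model(trial)))
    return results


def design_objective(p):
    """Hypothetical design objective (lower is better) with an interaction term."""
    return (p["speed"] - 4) ** 2 + (p["buffer"] - 10) ** 2 + p["speed"] * p["buffer"] / 10


baseline = {"speed": 2, "buffer": 6}
grids = {"speed": [1, 2, 3, 4, 5], "buffer": [4, 6, 8, 10, 12]}
sweep = one_at_a_time_sweep(design_objective, baseline, grids)

# Best value of each input, found with the other input fixed at its baseline.
best_speed = min(sweep["speed"], key=lambda pair: pair[1])[0]
best_buffer = min(sweep["buffer"], key=lambda pair: pair[1])[0]
```

Each input is tuned only against the baseline values of the others, so interactions between inputs are not explored. This is one way to read the remark that the method improves performance only partially.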

In a simulation experiment, the goal is to evaluate the effect of different values of input variables on a system. However, the interest is sometimes in finding the optimal values of the input variables in terms of the system outcomes. One way could be to run simulation experiments for all possible input values. However, this approach is not always practical: there might be too many possible values for the input variables, or the simulation model might be too complicated and expensive to run for a large set of input values. In these cases, the goal is to iteratively find optimal values for the input variables rather than trying all possible values. This process is called simulation optimization.

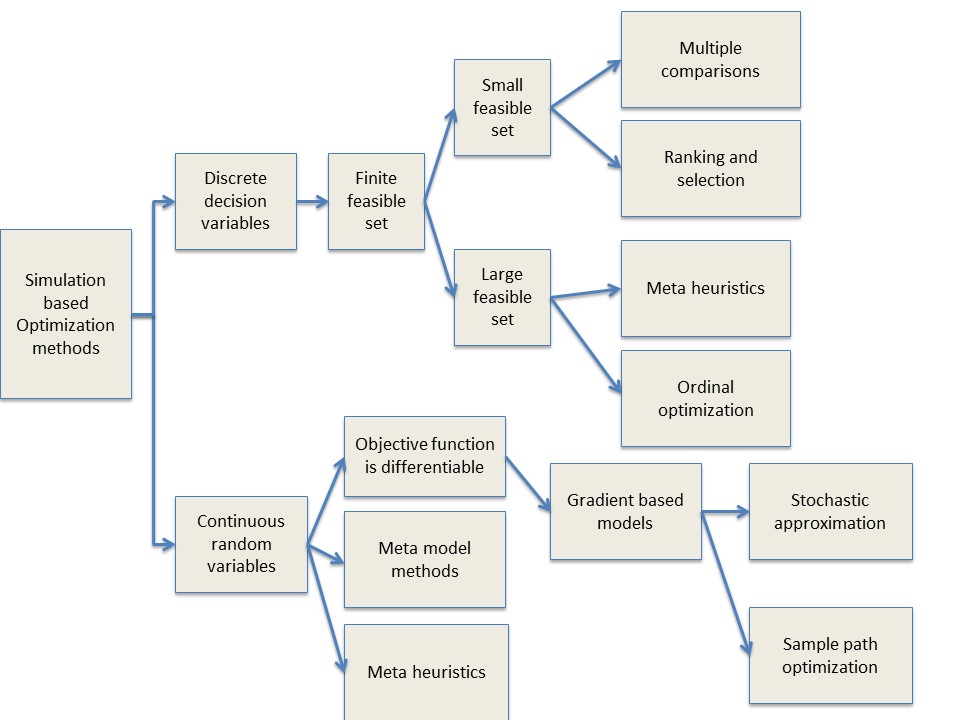

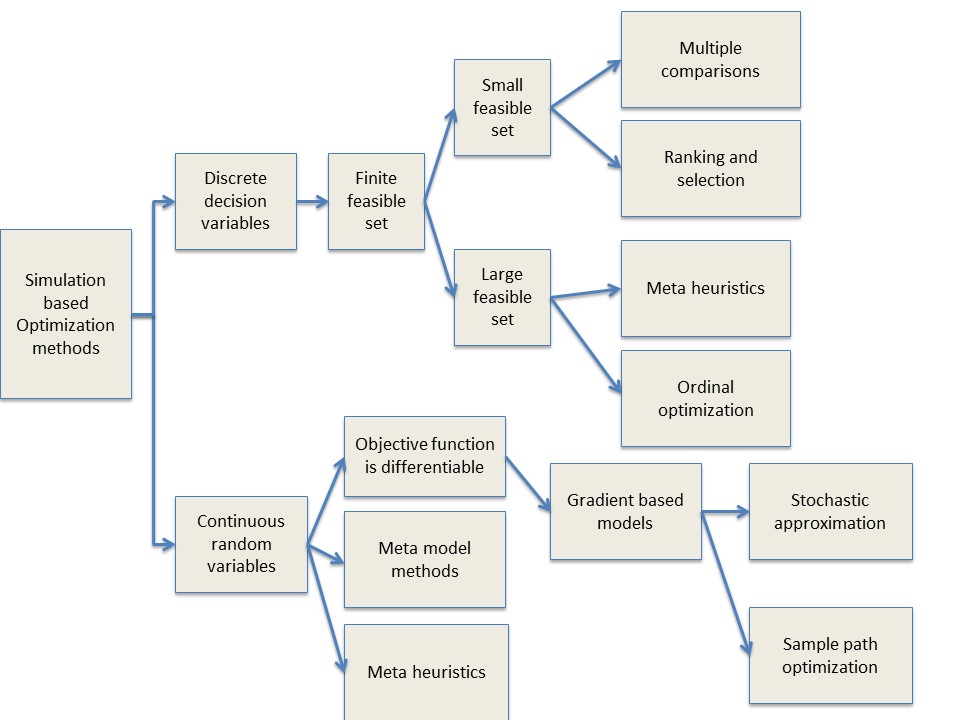

Specific simulation-based optimization methods can be chosen according to Figure 1, based on the types of the decision variables.

Optimization exists in two main branches of operations research:

Parametric optimization (static): The objective is to find the values of the parameters, which are "static" for all states, with the goal of maximizing or minimizing a function. In this case, one can use mathematical programming, such as linear programming. In this scenario, simulation helps when the parameters contain noise or when evaluating the problem would demand excessive computer time because of its complexity.

Control optimization (dynamic): This is used largely in computer science and electrical engineering. The optimal control is defined per state, and the results change in each of them. One can use mathematical programming, as well as dynamic programming. In this scenario, simulation can generate random samples and solve complex and large-scale problems (Abhijit Gosavi, Simulation-Based Optimization: Parametric Optimization Techniques and Reinforcement Learning, Springer, 2nd Edition, 2015).

Simulation-based optimization methods

Some important approaches in simulation optimization are discussed below.

Statistical ranking and selection methods (R/S)

Ranking and selection methods are designed for problems where the alternatives are fixed and known, and simulation is used to estimate the system performance. In the simulation optimization setting, applicable methods include indifference zone approaches, optimal computing budget allocation, and knowledge gradient algorithms.

Response surface methodology (RSM)

In response surface methodology, the objective is to find the relationship between the input variables and the response variables. The process starts by fitting a linear regression model. If the p-value of the lack-of-fit test turns out to be low, indicating that the linear model fits poorly, a higher-degree polynomial regression, usually quadratic, is fitted instead. This search for a good relationship between input and response variables is carried out for each simulation test. In simulation optimization, the response surface method can be used to find the input variables that produce the desired outcomes in terms of the response variables.

Heuristic methods

Heuristic methods trade accuracy for speed. Their goal is to find a good solution faster than traditional methods when those are too slow or fail to solve the problem. Usually they find a local optimum instead of the global optimum; however, the values found are considered close enough to the final solution. Examples of these kinds of methods include tabu search and genetic algorithms.
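To make the idea concrete, here is a minimal tabu search sketch over a single integer decision variable. The noisy cost function `simulated_cost`, its assumed optimum at 7, the neighborhood of up to three steps, and the tenure of 5 are all illustrative assumptions rather than recommended settings.

```python
import random
from collections import deque


def simulated_cost(x, rng, n_reps=50):
    """Hypothetical noisy simulation, averaged over n_reps replications.

    The true expected cost (x - 7)^2 is an assumption made for illustration.
    """
    return sum((x - 7) ** 2 + rng.gauss(0.0, 1.0) for _ in range(n_reps)) / n_reps


def tabu_search(lower=0, upper=20, start=0, n_iters=30, tenure=5, seed=1):
    """Minimal tabu search over one integer decision variable."""
    rng = random.Random(seed)
    current = start
    best_x, best_cost = current, simulated_cost(current, rng)
    tabu = deque([current], maxlen=tenure)  # recently visited solutions
    for _ in range(n_iters):
        # Neighborhood: integer moves of up to three steps, inside the bounds.
        neighbors = [current + d for d in (-3, -2, -1, 1, 2, 3)
                     if lower <= current + d <= upper]
        candidates = [x for x in neighbors if x not in tabu]
        if not candidates:
            break  # every neighbor is tabu; stop early
        # Move to the best non-tabu neighbor, even if it is worse than
        # the current point; this is what lets the search leave local optima.
        costs = {x: simulated_cost(x, rng) for x in candidates}
        current = min(costs, key=costs.get)
        tabu.append(current)
        if costs[current] < best_cost:
            best_x, best_cost = current, costs[current]
    return best_x, best_cost
```

Calling `tabu_search()` returns the best point visited and its estimated cost. Because each cost is a noisy estimate, the reported best is only as reliable as the number of replications allows.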

Metamodels enable researchers to obtain reliable approximate model outputs without running expensive and time-consuming computer simulations. Therefore, the process of model optimization can take less computation time and cost.
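As a rough illustration, the sketch below fits a quadratic polynomial metamodel to a handful of noisy simulation outputs and then optimizes the fitted curve instead of the simulation. The function `run_simulation`, its assumed minimum at x = 2, and the design points are hypothetical; a real study would choose the metamodel form and the experimental design with more care.

```python
import random


def run_simulation(x, rng):
    """Hypothetical expensive simulation: noisy cost with true minimum at x = 2."""
    return (x - 2.0) ** 2 + 1.0 + rng.gauss(0.0, 0.1)


def solve_linear_system(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_quadratic(xs, ys):
    """Least-squares fit of y ~ c0 + c1*x + c2*x^2 via the normal equations."""
    powers = [sum(x ** k for x in xs) for k in range(5)]
    a = [[powers[i + j] for j in range(3)] for i in range(3)]
    b = [sum(y * x ** i for x, y in zip(xs, ys)) for i in range(3)]
    return solve_linear_system(a, b)


rng = random.Random(0)
design_points = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]
responses = [run_simulation(x, rng) for x in design_points]  # nine simulation runs
c0, c1, c2 = fit_quadratic(design_points, responses)
x_star = -c1 / (2.0 * c2)  # stationary point of the fitted quadratic
```

Once the coefficients are known, evaluating or optimizing the metamodel requires no further simulation runs. The stationary point `x_star` is a minimum only if the fitted `c2` is positive, which is worth checking before trusting it.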

Stochastic approximation

Stochastic approximation is used when the function cannot be computed directly, only estimated via noisy observations. In these scenarios, this method (or family of methods) looks for the extrema of these functions. The objective function would be: